80% of Enterprises Will 'Go AI' Within 3 Years – How Far Are We from Large-Scale AI Adoption?


Within three years, 80% of enterprises will adopt AI!

Recently, analytics firm Gartner released a report predicting that by 2026, over 80% of enterprises will use generative AI application programming interfaces (APIs) or deploy applications powered by generative AI.

Currently, fewer than 5% of enterprises utilize generative AI in production environments. However, in just three years, the number of enterprises adopting or creating generative AI models is expected to grow 16-fold.
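The 16-fold figure is simply the ratio of the two adoption shares Gartner cites, taking "fewer than 5%" and "over 80%" at their boundary values. Because the starting share is below 5% and the target is above 80%, the actual multiple would be at least 16:

```latex
\frac{80\%}{5\%} = 16
```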

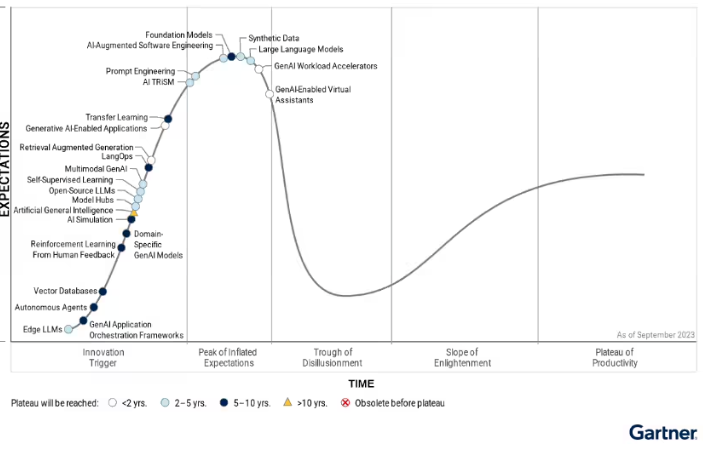

Foundation models will enter the 'peak stage' on the technology hype cycle. Source: Gartner

AI has become an unavoidable buzzword. At the recently held 2023 Shanghai International Consumer Electronics Technology Exhibition (Tech G), multiple experts and scholars discussed how enterprises can leverage AI to achieve intelligent digital transformation.

Will enterprises that fail to integrate 'intelligence' inevitably be left behind? In the era of large models, what opportunities exist for small and medium-sized enterprises to enter the field? How far are we from large-scale adoption? 'Top AI Player' has compiled key insights from several speakers and scholars at the conference for reference.

'In AI deployment, not every enterprise needs to follow the lead of Baidu or iFlytek'

@Professor Lu Xianghua, Department of Information Management and Business Intelligence, Fudan University School of Management

Over the past five to six years, enterprises have been vigorously pursuing digital transformation. Now, they are leveraging the data accumulated from digitization to achieve intelligent processes. Intelligence here refers to better proactive prediction, prevention, action, agile response, and automated handling through models—key concepts that align perfectly with AIGC (AI-Generated Content).

According to iResearch data, only 4.3% of respondents were willing to adopt enterprise mobile informatization a decade ago. This year, by contrast, the proportion of enterprises planning to deploy AIGC applications within a year has surged to 90.8%.

This indicates that AIGC industry development will increasingly focus on commercialization and on the sustainable development of the technology itself.

Earlier, Sequoia Capital's article "Generative AI's Act Two" pointed out that early signs of success do not change the fact that neither OpenAI nor LLaMA has yet produced products with strong product-market fit.

The focus now is on how to deploy industry-specific, product-specific, and scenario-specific large models within enterprise functions so that they deliver real commercial value in practical scenarios.

Whether in marketing, office automation (OA), finance, HR, R&D, or supply chain management, many vendors are exploring various applications of AI. For example, AIGC can assist enterprises in training new employees and daily operations through internal knowledge bases, information retrieval functions, and direct calls to other applications.

Application maturity varies from scenario to scenario, and AIGC still faces many constraints.

The much-discussed 'wave of unemployment' brought by artificial intelligence is, in my opinion, more accurately described as 'AIGC replaces tasks, not jobs.' Humans are irreplaceable, but tasks can be taken over with the help of technology. Businesses should focus on which tasks can be replaced by AIGC, not which positions.

For example, articles written by AI are often unreadable to users, posters designed by AI are frequently rejected by clients, and event plans generated by AI are overly formulaic. It often takes dozens of rounds of interaction with AI to get the desired output, which shows that realizing AIGC's current value requires patient iteration.

This is precisely why it demands employee effort. Companies need to foster an environment where employees actively engage with AIGC technology. The value of AIGC doesn’t emerge automatically.

Moreover, not every company needs to emulate Baidu or iFlytek, nor does every business need to develop its own large-scale model. For most small and micro-enterprises, leveraging open-source APIs to embrace AIGC scenarios is likely the most practical approach.

The costs of running AIGC are high, and its current value may seem limited, but I believe the dawn is near. The AIGC industry has entered a phase of scenario exploration and is working to cross the chasm. Once it passes this inflection point, perceptions of AIGC will become much clearer.

'One of AI's shortcomings is its extremely high computational cost'

@Yan Mingfeng, President of Shanghai Technology Exchange

AI is still an emerging technology, and like all new technologies, it follows its own developmental trajectory. From a productivity perspective, achieving high-quality technological innovation requires optimizing the allocation of production factors. This is precisely what modern technology markets, represented by the Shanghai Technology Exchange, aim to accomplish—enhancing the role of technological elements in more suitable scenarios.

For instance, determining which fields offer the highest application value for AI technology is closely tied to identifying the right buyers and application scenarios.

How should we understand AI? In my view, 'intelligence' refers to cognition, while 'ability' refers to action.

Currently, AI companies are transitioning from the cognitive domain to the action domain. One of AI's existing shortcomings is its extremely high computational cost. The government's vigorous development of computing platforms aims to address this cost issue. Additionally, increasing societal supply—such as enriching datasets—requires comprehensive optimization and planning.

As part of the technology market, we continuously explore AI applications in technology transactions, identifying opportunities to empower innovation systems and better connect scenarios, technology, and capital.

'MaaS is a crucial outlet for large models in the future'

@Zhou Ming, Founder and CEO of LanZhou Technology

Embracing AI large models has become a major trend in technological innovation this year. How can large models be applied in enterprises? In the past, AI models, such as traditional machine learning models, were each built to solve a single task. In the era of large models, one model can solve N tasks, and one can even imagine a future model that handles an unlimited range of tasks. You tell the model what to do, and it completes the task; if the result is unsatisfactory, you can give it examples or guide it step by step until it reaches an acceptable level of proficiency. In the shift from AI 1.0 to AI 2.0, having one model handle many tasks has resolved the problem of fragmentation.
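Zhou's description of steering a general model by giving it examples corresponds to what is usually called few-shot prompting: the examples are placed in the prompt itself and the model's weights are not changed. Below is a minimal sketch of assembling such a prompt, assuming a simple "Input:/Output:" text format. The helper name, the format, and the sample task are hypothetical and not tied to any particular model or API.

```python
def build_few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Assemble an instruction, worked examples, and a new query into one prompt string.

    Hypothetical helper: the "Input:/Output:" layout is an illustrative convention only.
    With an empty examples list this degrades to a plain (zero-shot) instruction.
    """
    parts = [instruction.strip(), ""]
    for example_input, example_output in examples:
        # Each worked example shows the model the expected input/output pattern.
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    # The new query ends with an open "Output:" for the model to complete.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Classify the sentiment of each customer comment as positive or negative.",
        [
            ("The delivery was fast and the staff were friendly.", "positive"),
            ("The app keeps crashing when I log in.", "negative"),
        ],
        "Support solved my issue in five minutes.",
    )
    print(prompt)
```

If the model still answers poorly, the same pattern extends naturally: add more or better-chosen examples, or spell out intermediate steps in the instruction.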

An ecosystem has been built around large models. At the bottom layer of this ecosystem are chips, followed by the cloud computing platform, then the so-called model factories, and finally the application layer.

To move from general-purpose large models to industry-specific ones, industry data and knowledge must be integrated into the general model through mixed training. Currently, some large-model teams perform only SFT (Supervised Fine-Tuning), which leaves their models relatively fragile. Every industry contains numerous scenarios, and it is unrealistic to expect one industry model to excel at every product. Taking finance as an example, a financial large model requires continued training on financial data (further training of an already pre-trained model) to reach higher accuracy. This includes data from across the financial field, such as news, company announcements, research reports, market information, and financial domain expertise.
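One common way to realize "mixed training" is to interleave domain data with general data when building training batches, so the model gains industry knowledge without losing its general ability. The sketch below shows a weighted two-corpus sampler under that assumption. The speaker gives no mixing ratio, so the 30% financial share, the function name, and the toy corpora are all hypothetical.

```python
import random
from typing import Iterator, Sequence


def mixed_batches(
    general: Sequence[str],
    domain: Sequence[str],
    domain_ratio: float,
    batch_size: int,
    num_batches: int,
    seed: int = 0,
) -> Iterator[list[str]]:
    """Yield batches drawn from two corpora (hypothetical helper).

    Each example is taken from the domain corpus with probability domain_ratio,
    otherwise from the general corpus.
    """
    rng = random.Random(seed)  # seeded so the mix is reproducible
    for _ in range(num_batches):
        batch = []
        for _ in range(batch_size):
            # Independently choose the source corpus for every example.
            source = domain if rng.random() < domain_ratio else general
            batch.append(rng.choice(source))
        yield batch


if __name__ == "__main__":
    # Hypothetical toy corpora; real continued training would stream tokenized documents.
    general_corpus = ["encyclopedia paragraph", "web forum post", "novel excerpt"]
    finance_corpus = ["earnings announcement", "research report", "financial news item"]
    for batch in mixed_batches(general_corpus, finance_corpus, domain_ratio=0.3,
                               batch_size=4, num_batches=2):
        print(batch)
```

Keeping some general data in every batch is the design choice that distinguishes this from training on financial data alone; the right ratio would have to be tuned empirically.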

SFT requires a deep understanding of financial task scenarios. We have therefore catalogued 105 financial task scenarios, covering comprehension tasks, generation tasks, and other tasks, and we apply our underlying optimization algorithms throughout the training process. After training, the model must be integrated with plugin libraries, external knowledge bases, and vector databases to handle key business scenarios.
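Connecting a trained model to an external knowledge base usually means retrieving the stored passages whose embeddings are most similar to the query and passing them to the model as context. Below is a minimal sketch of that retrieval step in pure Python. It assumes embeddings have already been computed; the three-dimensional vectors and document names are invented for illustration. A real vector database would use a learned embedding model and an approximate nearest-neighbor index rather than this brute-force scan.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: list[float], store: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k stored vectors most similar to the query (brute-force scan)."""
    ranked = sorted(store, key=lambda doc_id: cosine(query, store[doc_id]), reverse=True)
    return ranked[:k]


# Hypothetical knowledge-base entries with made-up 3-dimensional embeddings.
STORE = {
    "annual_report_2022": [0.9, 0.1, 0.0],
    "loan_policy": [0.1, 0.8, 0.2],
    "hr_handbook": [0.0, 0.1, 0.9],
}

if __name__ == "__main__":
    hits = top_k([0.85, 0.2, 0.05], STORE, k=2)
    print(hits)
```

The retrieved passages would then be inserted into the model's prompt, which lets the model answer from enterprise-specific documents it was never trained on.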

Once such industry-specific large models are deployed in the cloud, users can select models for their field and use them either through on-premises (localized) deployment or through a shared public service, accessing the corresponding large-model services on a pay-as-you-go basis. I believe MaaS (Model as a Service) will be a crucial outlet for large models. Only by building layers that run from general-purpose to industry-specific to scenario-specific models can we serve enterprises effectively.
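Under pay-as-you-go MaaS, a user's bill scales with metered usage instead of upfront hardware spending. Many hosted model services meter by the number of tokens processed. The sketch below assumes that scheme and uses hypothetical per-1,000-token prices, so it illustrates only the arithmetic, not any vendor's actual pricing.

```python
from decimal import Decimal


def usage_cost(input_tokens: int, output_tokens: int,
               price_in_per_1k: Decimal, price_out_per_1k: Decimal) -> Decimal:
    """Pay-as-you-go charge: tokens used (in thousands) times the per-1,000-token price."""
    return (Decimal(input_tokens) / 1000 * price_in_per_1k
            + Decimal(output_tokens) / 1000 * price_out_per_1k)


# Hypothetical prices in an unspecified currency per 1,000 tokens; not any provider's real rates.
PRICE_IN = Decimal("0.010")
PRICE_OUT = Decimal("0.030")

if __name__ == "__main__":
    # Example month: 2 million input tokens and 0.5 million output tokens.
    print(usage_cost(2_000_000, 500_000, PRICE_IN, PRICE_OUT))
```

`Decimal` is used instead of `float` so that currency amounts add up exactly.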

'If AIGC cannot understand the human world well, it does not possess human-like intelligence'

@Chen Gen, Member of the Central Science and Technology Committee of the Jiusan Society, and Science Writer

This year, attention to ChatGPT and AIGC has reached unprecedented heights both in China and abroad. However one draws the boundary between them, ChatGPT and AIGC belong to the same category of technology. Future competition in this field will depend on the speed of technological iteration: whoever gains an advantage in computing power and data faster will take the lead.

A very real challenge for AIGC now is whether the computing-power bottleneck can be overcome. Without a breakthrough in computing power, AIGC's further development will fall far short of the capabilities we truly envision. Safety is another crucial bottom line. For example, if digital avatars act on our behalf and interact across the real and virtual worlds, both their real-time behavior and the content they generate must be safe. This includes the compliance of digital content and the accuracy of data.

If AIGC cannot understand the human world well, it is not yet artificial intelligence with human-like intelligence; we can only describe it as an AI statistician with advanced statistical capabilities. Understanding the human world means AIGC must say the right things and do the right things, which is a significant challenge. Still, AIGC presents a great opportunity, because it will bring revolutionary changes to industries. For instance, one of the key technologies behind the metaverse is generative AI: it digitizes everything in the physical world, generates digital twins, and then overlays the digital and real worlds to create an interactive, blended reality. This is the technical core of the metaverse. Without AIGC, it would be difficult to generate representations of the physical world in real time and to mirror and drive them synchronously.

The future vision of AIGC is the datafication of everything, making everything wearable and interconnected. At its core lies a revolution in production models driven by the reinvention of productivity. In healthcare, AI-trained digital twins can monitor critical health indicators in real time and provide disease warnings, so doctors only need to intervene when abnormalities arise. In the future, combining this with genetic testing could enable personalized disease prevention, laying the technological foundation for realizing Traditional Chinese Medicine's concept of "preventive treatment."

In education, AI can transform traditional knowledge-based teaching methods. Immersive digital learning environments generated by AI can enhance learning efficiency. Similarly, enterprises and government agencies are actively deploying AI in education. Sectors like tourism and marketing also face opportunities for AI-driven disruption. For instance, AI-powered digital avatars can conduct 24/7 live broadcasts, travel commentary, and interactive engagements, significantly improving efficiency and heralding a major transformation in traditional service industries. This is the societal change AIGC will bring—an era we eagerly anticipate.

How far are we from large-scale applications?

Since the end of 2022, the rapid rise of AIGC, particularly the explosive growth of large AI models in China, has prompted questions about whether we have reached a so-called "singularity moment." Data shows that at least 130 companies in China are researching large-model products. For businesses and users, the focus is on when these models can be deployed at scale and how they can be used.

Zhang Tian, Chief Analyst at Hua'an Securities, notes that only about a year has passed since ChatGPT's emergence and that many products and models are still being refined. In his view, large models will go through three phases:

- Advice: Providing informational and advisory input for humans.

- Agent: Assisting in executing tasks once safety, regulatory, and reliability issues are resolved.

- AGI (Artificial General Intelligence): Achieving true general-purpose AI capable of serving as a productive force.

Currently, large models are in their "infancy," and Elon Musk has predicted that they will reach "AGI adulthood" by 2029, by which time society will undergo profound changes. For these models to empower industries, the costs of computing and communication infrastructure must fall, and both usage barriers and risks must be lowered.

Zhong Junhao, Secretary-General of the Shanghai Artificial Intelligence Industry Association, states that AGI is not a singularity unless it develops autonomous consciousness, the point at which a robot truly becomes human-like. Until then, it remains a technology, a productivity tool, or a means of production.

Gai Lishan, Chairman of Elitesland and Vice President of the China Association of Small and Medium Enterprises, believes that AIGC is a system rather than a single technology. AIGC technology is still in its growth phase, and broader application requires improvements in data accuracy and model reliability. In addition, the decision-making process of large models is opaque and unexplainable, so if a model makes an error, the losses for an enterprise could be significant. While the prospects of AI are widely viewed with optimism, making its implementation mature and reliable remains a real challenge and a practical difficulty.