Video Synthesis Tool MAGVIT-v2 Transforms Visual Content into Tokens for Large Models

-

Recently, Carnegie Mellon University, Google Research, and Georgia Institute of Technology jointly introduced MAGVIT-v2, a video tokenization tool that successfully converts image and video inputs into tokens recognizable by large language models (LLMs).

Project address: https://magvit.cs.cmu.edu/

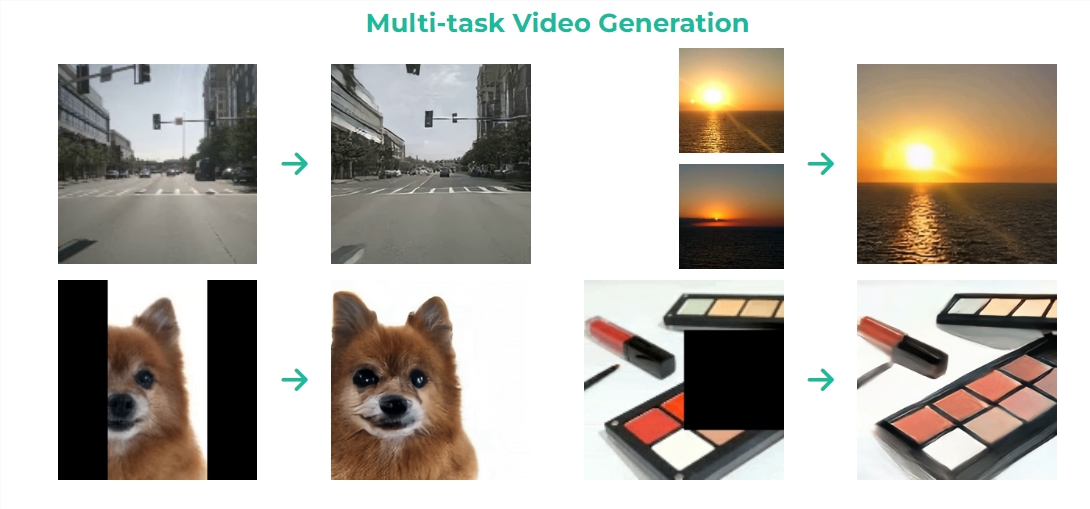

MAGVIT-v2's unique algorithm enables developers to achieve astonishing applications, ranging from panoramic videos to intelligent removal, image-to-animation conversion, and automatic flipping. MAGVIT not only provides creators with unlimited inspiration but also brings unprecedented convenience to video editing.

Through the application of MAGVIT-v2, LLMs have significantly outperformed traditional diffusion models in visual generation tasks. Video tokenization is the process of converting visual content (such as images or videos) into tokens that large language models can understand and process. The advent of MAGVIT-v2 undoubtedly provides new opportunities for large language models in visual tasks.

In visual generation tasks, this new tokenization tool has demonstrated great potential, significantly improving model performance. Overall, the release of MAGVIT-v2 heralds a major breakthrough in the field of visual generation.