AI-Generated Music Now Suitable for Short Video BGM

Undoubtedly, the emergence of AI has brought technological innovation to many industries, and the music scene is no exception.

AI is making significant strides not only in vocal simulation but also in music composition, with text-to-music models emerging one after another:

Examples include OpenAI's MuseNet, Google's MusicLM, Meta's MusicGen, and the recently released Stable Audio by Stability AI, among many others.

These are just some of the more well-known AI music models; there are countless others that are less prominent.

The main selling point of all these music-generation models is that they let people without musical expertise compose. All it takes is some typing and a knack for description.

Hearing this, even someone like me with little music theory knowledge is intrigued—composition may not be my forte, but textual description certainly is.

Therefore, we decided to personally test several popular AI music composition models currently on the market to see if they can truly compose from scratch and whether the resulting music is pleasant to listen to and meets expectations.

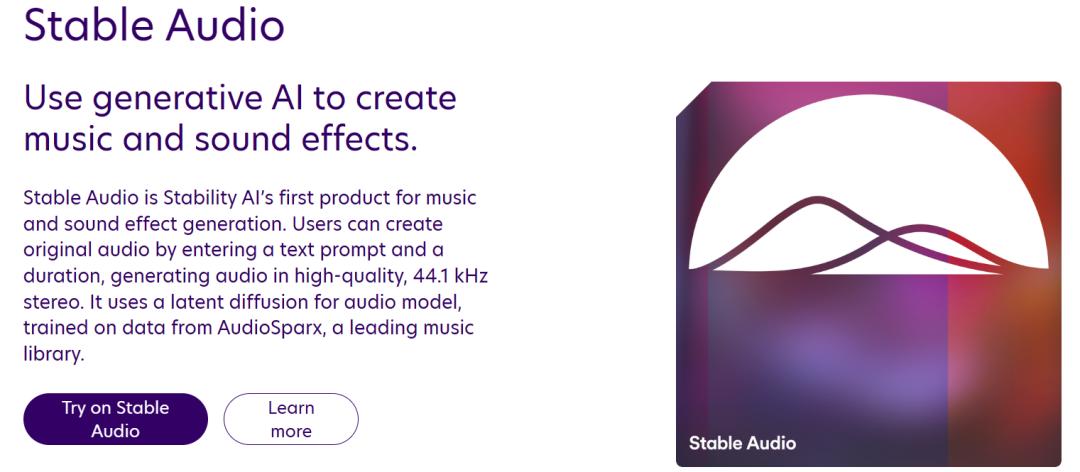

First up is Stability AI's new music composition AI: Stable Audio.

According to the official statement, the model was trained on over 800,000 audio files, including music, sound effects, and solo instrument performances, with the entire dataset totaling more than 19,500 hours.

Moreover, the AI can generate music up to 90 seconds long using just text descriptions.
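As a rough sanity check on those figures, the average clip length implied by the dataset can be worked out directly. The sketch below treats the two reported numbers as exact, which is an assumption, since both are stated only as lower bounds ("over" and "more than"). The constant and function names are illustrative.

```python
# Figures quoted from the official statement. Both are lower bounds,
# so the result is only a ballpark estimate.
NUM_FILES = 800_000
TOTAL_HOURS = 19_500


def average_clip_seconds(total_hours: float, num_files: int) -> float:
    """Mean duration per audio file, in seconds."""
    return total_hours * 3600 / num_files


avg = average_clip_seconds(TOTAL_HOURS, NUM_FILES)
print(f"Average clip length: about {avg:.1f} seconds")
```

Under these assumptions the average file is a little under a minute and a half long, which is in the same range as the 90-second generation limit.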

The range of styles is also remarkably diverse. The examples on the official website run from purely instrumental pieces, such as piano and drum solos, to genres such as ethnic percussion, hip-hop, and heavy metal.

It can even generate ambient background noise, like the bustle of a restaurant, and honestly it sounds quite realistic.

Of course, the official demos likely showcase the best examples. To truly gauge its performance, you’ll need to try it out yourself.

So, we signed up to see what kind of music a complete novice like me could create using this model.

Since it was just released, it took some time to access the Stable Audio webpage.

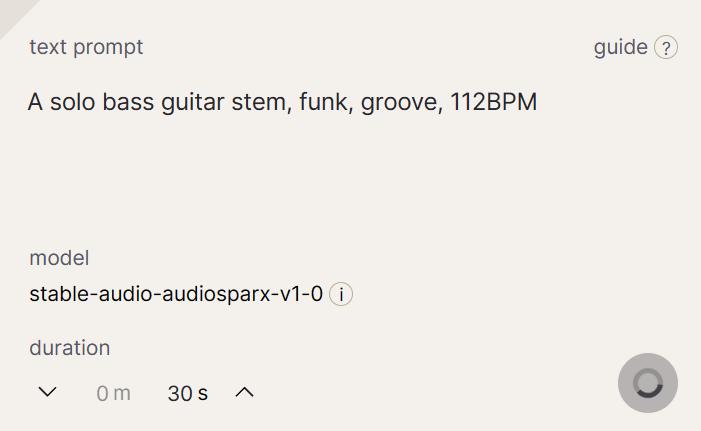

Once inside, we asked it to generate a 30-second bass solo at 112 beats per minute, funky and rhythmic.

The generation process took about one to two minutes. After listening to the result, Shichao was somewhat surprised: it did produce bass guitar music in the right style. The only flaw was that the bass tone wasn't very clear, sounding like something halfway between fingerstyle and slap bass.

Next, we increased the difficulty by requesting a more complex instrumental arrangement: a catchy pop dance track with tropical percussion elements, featuring a cheerful rhythm suitable for beach listening.

This time Stable Audio made a small mistake. While the rhythm was indeed cheerful and suitable for dancing on the beach, the tropical percussion mentioned in the prompt couldn't be clearly heard in these 30 seconds.

Then we asked it to generate a rock-style music piece, which was completed within minutes. Although the sound still wasn't very clear, the rock style along with the sounds of electric guitar and drums were recognizable.

Overall, after this testing experience, Stable Audio's performance in music generation indeed shows no major flaws, with occasional surprisingly good performances.

At least for creators looking to add background music to short videos, this is completely sufficient.

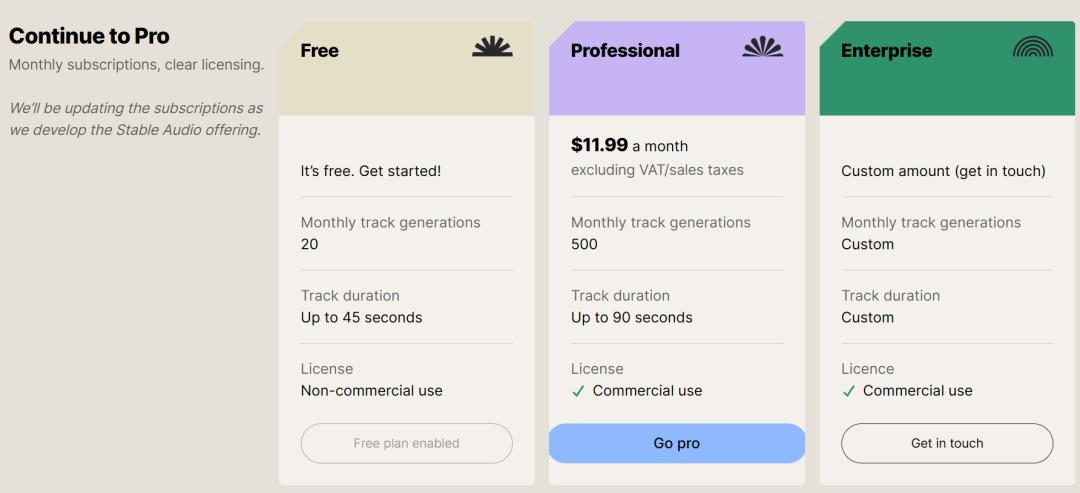

Stability AI has also paid particular attention to duration: the standard version can generate audio up to 45 seconds long, and the PRO version supports continuous generation of up to 90 seconds.

Next up is our second contender: Meta AI's MusicGen. Built on the Transformer architecture, it generates audio autoregressively, predicting each subsequent segment from the audio that came before it.

Currently, MusicGen has only released a demo version, available for limited testing on Hugging Face.

For example, when generating hip-hop style music, the results are quite catchy with clean, crisp rhythms.

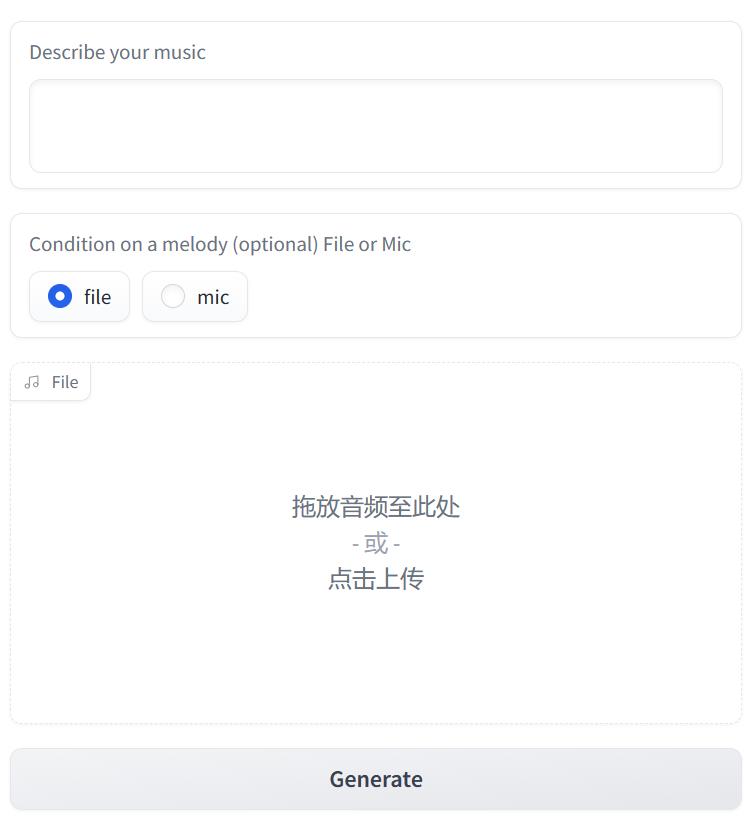

Unlike Stable Audio, MusicGen provides more freedom in generating music. It not only accepts text prompts but also allows users to supplement them with audio files.

The process is straightforward: input your prompt, drag and drop a reference music clip into the file box, or record live audio. The audio prompt is optional.

Although MusicGen can only generate up to 30 seconds of audio at a time, audio prompts make it possible to create a longer piece, though the process is cumbersome. By generating 30-second segments and using a 10-second clip from each as the prompt for the next, users can eventually stitch together a longer track.
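The stitching workflow can be sketched in a few lines. This is a minimal illustration under stated assumptions, not MusicGen's actual API: `generate` is a hypothetical callable that takes a text prompt plus an optional audio prompt and returns a segment that begins with that audio prompt, so each call after the first contributes 20 seconds of new audio. `fake_generate` is only a stand-in so the sketch runs without a model.

```python
import math
from typing import Callable, List, Optional

Samples = List[float]
# Hypothetical generator signature: (text prompt, optional audio prompt) -> samples.
Generator = Callable[[str, Optional[Samples]], Samples]


def segments_needed(target_s: float, segment_s: float = 30.0,
                    overlap_s: float = 10.0) -> int:
    """Number of generation calls needed to reach target_s seconds."""
    if target_s <= segment_s:
        return 1
    new_per_call = segment_s - overlap_s  # new audio added by each later call
    return 1 + math.ceil((target_s - segment_s) / new_per_call)


def stitch_long_track(generate: Generator, text: str, target_s: float,
                      sample_rate: int, overlap_s: float = 10.0) -> Samples:
    """Chain segments, feeding the tail of the track back in as the audio prompt."""
    overlap = int(overlap_s * sample_rate)
    target = int(target_s * sample_rate)
    track = list(generate(text, None))
    while len(track) < target:
        tail = track[max(0, len(track) - overlap):]
        segment = generate(text, tail)
        # Assumption: the segment starts with the prompt, so drop that part.
        new_audio = segment[len(tail):]
        if not new_audio:
            raise RuntimeError("generator returned no new audio")
        track.extend(new_audio)
    return track[:target]


def fake_generate(text: str, audio_prompt: Optional[Samples] = None) -> Samples:
    """Stand-in for a real model: 30 'seconds' at one sample per second."""
    prompt = list(audio_prompt or [])
    return prompt + [0.0] * (30 - len(prompt))


if __name__ == "__main__":
    track = stitch_long_track(fake_generate, "funky bass solo",
                              target_s=90, sample_rate=1)
    print(len(track), segments_needed(90))
```

With these assumptions, a 90-second track takes four generation calls, which helps explain why the process feels cumbersome when each call takes several minutes.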

However, one major drawback is the generation speed, which can be frustratingly slow. Waiting three to four minutes is common, and sometimes, after several minutes, the system may crash unexpectedly.

Earlier this year, Google launched its music generation model MusicLM, which currently offers the most comprehensive features among existing AI composition tools.

Beyond basic text-to-music generation, MusicLM incorporates several innovative features.

For example, its Story Mode can generate a 1-minute musical piece with distinct sections: 0-15s for meditation, 16-30s for waking up, 31-45s for running, and 46-60s for conclusion.

While the generated audio generally meets the requirements, it still suffers from the usual problems of AI music: unclear instrument sounds and somewhat abrupt transitions between sections.
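One way to picture the Story Mode input is as a schedule of timed text prompts. The sketch below is purely illustrative and does not reflect MusicLM's real input format: the `Section` type, `validate`, and `prompt_at` are hypothetical names, and the second-by-second ranges from the example (0-15, 16-30, and so on) are rewritten as half-open intervals so that adjacent sections share a boundary.

```python
from typing import List, NamedTuple, Optional


class Section(NamedTuple):
    start_s: int  # inclusive
    end_s: int    # exclusive
    prompt: str


# The one-minute example from the text, as half-open intervals.
STORY: List[Section] = [
    Section(0, 15, "meditation"),
    Section(15, 30, "waking up"),
    Section(30, 45, "running"),
    Section(45, 60, "conclusion"),
]


def validate(sections: List[Section]) -> int:
    """Check that sections are contiguous from 0 and return the total length."""
    expected_start = 0
    for s in sections:
        if s.start_s != expected_start or s.end_s <= s.start_s:
            raise ValueError(f"gap, overlap, or empty section at {s}")
        expected_start = s.end_s
    return expected_start


def prompt_at(sections: List[Section], t: float) -> Optional[str]:
    """Return the prompt active at time t, or None if t is outside the story."""
    for s in sections:
        if s.start_s <= t < s.end_s:
            return s.prompt
    return None


print(validate(STORY), prompt_at(STORY, 20))
```

Seen this way, the abrupt transitions are easy to locate: they fall at the 15, 30, and 45-second boundaries, where the active prompt changes.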

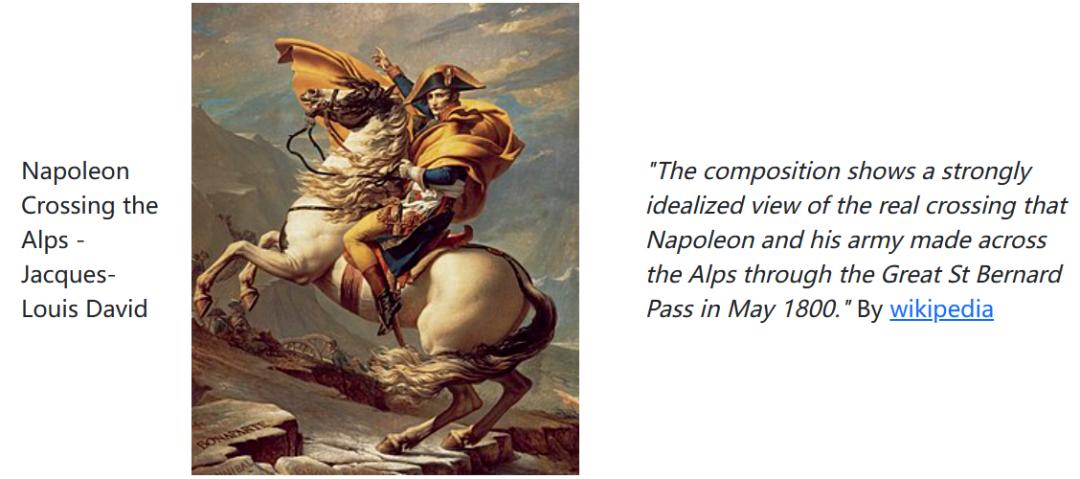

Another notable feature is image-to-music generation. Given an image (such as the classic painting Napoleon Crossing the Alps) and a description of it, MusicLM can create a 30-second accompanying soundtrack.

This time it really sounds quite dramatic.

MusicLM is also not publicly released yet. To experience it, you need to join the waitlist for beta access on AI Test Kitchen.

OpenAI announced MuseNet on its official website back in 2019.

There haven't been many updates since, and it still relies on the same technology as GPT-2. Moreover, years later, this AI has still not been made available for public use.

But judging from the introduction and examples provided on its official website, MuseNet seems poised to outperform the aforementioned models.

Without even considering the quality of the generated music, the duration alone is impressive—it can produce music up to 4 minutes long.

Compared to the other models mentioned earlier, the quality of the music it generates is far superior. I downloaded an example from the official website so you can hear it for yourself.

If not for knowing it was AI-generated, I would have thought it was a new composition by a master musician. It has an introduction, a climax, and clear instrument sounds. With minor adjustments, it could easily be a complete musical piece.

Of course, such results are due not only to the neural network; the training dataset also plays a critical role.

OpenAI trained MuseNet using hundreds of thousands of MIDI files. The image below shows part of the dataset, which includes everything from Chopin, Bach, and Mozart to Michael Jackson, The Beatles, and Madonna—spanning classical, rock, pop, and almost every musical style imaginable.

AI music has been thriving in China in recent years as well. At last year's Huawei Developer Conference, a music AI called the Singer model was unveiled. NetEase Cloud Music introduced NetEase Tianyin for musicians, enabling AI to handle lyric writing, composition, and arrangement directly.

At the recent 2023 World Artificial Intelligence Conference, Tencent's Multimedia Lab also showcased its self-developed AI universal composition framework, XMusic.

Overall, these AI music composition models each have their strengths and can generate music in almost any desired style. Sometimes, the music they produce is so convincing that, without careful scrutiny, it's hard to tell it was AI-generated. Such music can easily "pass" in short videos.

However, from a professional perspective, these AI models all have their shortcomings. The most obvious issue is that the music they generate often lacks clarity in instrument performance, as mentioned earlier.

Moreover, like AI-generated art, AI music is a major hotspot for copyright issues. With laws struggling to keep pace with AI advancements, copyright infringement lawsuits are becoming increasingly common.

For example, in January of this year, the Recording Industry Association of America (RIAA) submitted an infringement report to the government, urging it to address the problem of AI music copyright violations.

Even the researchers behind MusicLM have openly acknowledged the copyright issue, stating in their paper that there is a potential risk of misappropriating creative content.

During the testing of this model, it was found that approximately 1% of the generated music was directly copied from the training dataset.

It's no wonder that most music AI models either don't offer public trials or only provide demos or closed beta tests. Even publicly available models like Stable Audio repeatedly emphasize that their datasets are authorized by AudioSparx.

Setting aside copyright issues, the current development of AI in music is astonishing, and embracing AI music has become an industry trend.

For example, Endel, a company specializing in AI-generated background music, has received investments from major music labels like Warner and Sony. Similarly, the AI music creation platform Soundful has secured funding from Universal Music, Disney, and Microsoft.

Of course, the move into AI music is driven by commercial and technological considerations. In terms of musicality and artistry, current AI still falls far short of human creators, and closing that gap should be the top priority for future development.

Image and Data Sources:

MusicLM, MuseNet, Stable Audio, Internet

36Kr, Will 2023 Be the Year AI Music Goes Head-to-Head with Humans?

Machine Heart, MusicLM is Here! Google Tackles Text-to-Music Generation but Hesitates to Release Due to Copyright Risks