Microsoft Enhances Training and Inference Capabilities for ChatGPT-Like and Other Open-Source Models

Hugging Face, the well-known open-source platform for AI models, currently hosts more than 320,000 models, and the number grows daily. Only about 6,000 of them are listed as supporting the ONNX format, but Microsoft says that more than 130,000 are in fact compatible with it.

Models in ONNX format can use Microsoft's ONNX Runtime to optimize performance, save compute resources, and improve training and inference. For example, running the speech recognition model Whisper through ONNX Runtime is reported to deliver a 74.30% improvement in inference over PyTorch, which markedly shortens the model's response time.

Microsoft has established a deep technical collaboration with Hugging Face to broaden the range of models ONNX Runtime supports. It now supports more than 90 model architectures, including Llama (a ChatGPT-like large language model), Stable Diffusion (a diffusion model), BERT, T5, and RoBERTa, and it covers the 11 most popular large models today.

ONNX format open-source models: https://huggingface.co/models?library=onnx&sort=trending

Learn more about and use ONNX Runtime: https://onnxruntime.ai/

ONNX Runtime open-source repository: https://github.com/microsoft/onnxruntime

What Is the ONNX Format?

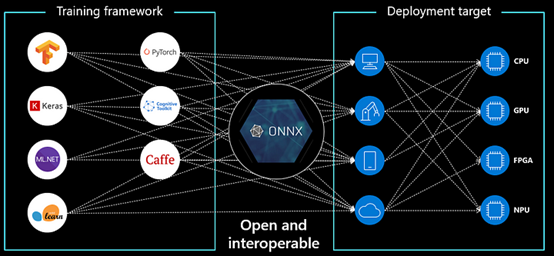

ONNX, short for Open Neural Network Exchange, is an open standard file format for representing machine learning models. It facilitates interoperability between different deep learning frameworks.

The format was jointly developed by technology companies including Microsoft and Facebook (now Meta) to simplify the development and deployment of AI models. ONNX works with multiple deep learning frameworks, including PyTorch, TensorFlow, Caffe2, and MXNet.

This allows developers to train models in one framework, convert them to the ONNX format, and deploy or perform inference in another framework.

Additionally, ONNX models are highly portable and can run on various hardware platforms (such as CPUs, GPUs, FPGAs, and edge devices), offering extensive deployment flexibility.

This means developers are no longer constrained by specific hardware or software environments during deployment.
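The train-convert-deploy workflow described above can be sketched roughly as follows. This is an illustration only: the tiny model, the `export_and_run` helper, and the tensor names `features` and `scores` are made up for the example, and no training actually takes place. It assumes `torch`, `numpy`, and `onnxruntime` are installed; recent PyTorch releases may also need `onnx` and `onnxscript` for the export step.

```python
def export_and_run(onnx_path):
    """Export a tiny PyTorch model to ONNX, then run it with ONNX Runtime.

    Returns (pytorch_output, onnxruntime_output) as NumPy arrays.
    """
    # Heavy dependencies are imported inside the function so that this
    # snippet can be loaded even where they are not installed.
    import torch
    import onnxruntime as ort

    torch.manual_seed(0)

    # Hypothetical stand-in for a model trained in PyTorch.
    model = torch.nn.Sequential(
        torch.nn.Linear(4, 8),
        torch.nn.ReLU(),
        torch.nn.Linear(8, 2),
    ).eval()
    example = torch.randn(1, 4)

    # Step 1: convert the model to the ONNX format.
    torch.onnx.export(
        model,
        (example,),
        onnx_path,
        input_names=["features"],
        output_names=["scores"],
    )

    # Step 2: run the exported file in a different runtime (ONNX Runtime, CPU).
    session = ort.InferenceSession(onnx_path, providers=["CPUExecutionProvider"])
    (onnx_out,) = session.run(None, {"features": example.numpy()})

    # Reference result from the original framework, for comparison.
    with torch.no_grad():
        torch_out = model(example).numpy()

    return torch_out, onnx_out
```

Calling `export_and_run("tiny.onnx")` returns both outputs, which should agree to within floating-point tolerance if the export succeeded.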

Introduction to the ONNX Runtime Inference Platform

ONNX Runtime is an open-source, high-performance cross-platform library developed by Microsoft, primarily designed for running and accelerating machine learning models in the ONNX format. Currently, leading global companies such as Adobe, AMD, Intel, NVIDIA, and Oracle utilize this platform to enhance the efficiency of AI model development.

Key Features of ONNX Runtime:

- Support for Multiple Model Architectures: It accommodates many types of models, including those for computer vision, natural language processing, speech recognition, and traditional machine learning, offering high flexibility for diverse tasks.

- Framework Compatibility: ONNX Runtime supports ONNX models exported from multiple frameworks, such as PyTorch, TensorFlow, scikit-learn, and Apache MXNet, simplifying model deployment and improving portability.

- Cross-Platform Support: It runs on multiple operating systems, including Windows, macOS, and Linux, as well as on cloud, mobile, and IoT devices, ensuring broad deployment flexibility.

- Performance Optimization: ONNX Runtime is specifically optimized for different hardware platforms, such as CPUs, GPUs, and edge devices, to achieve efficient model inference. It supports various hardware accelerators, including NVIDIA TensorRT, Intel's OpenVINO, and DirectML.
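In ONNX Runtime's Python API, hardware accelerators are selected through execution providers that are passed to `InferenceSession`. The sketch below shows one possible way to pick among the accelerators named above. The preference order, the inclusion of the CUDA provider, and the helpers `pick_providers` and `create_session` are assumptions made for illustration, not a recommended configuration.

```python
# Execution provider names as used by ONNX Runtime.
# The ordering here is an assumed preference, not an official one.
PREFERRED_PROVIDERS = [
    "TensorrtExecutionProvider",  # NVIDIA TensorRT
    "CUDAExecutionProvider",      # NVIDIA CUDA (not named in the article)
    "OpenVINOExecutionProvider",  # Intel OpenVINO
    "DmlExecutionProvider",       # DirectML on Windows
    "CPUExecutionProvider",       # default CPU fallback
]


def pick_providers(available, preferred=PREFERRED_PROVIDERS):
    """Return the preferred providers that are available, in preference order.

    The CPU provider is always appended as a last resort.
    """
    chosen = [name for name in preferred if name in available]
    if "CPUExecutionProvider" not in chosen:
        chosen.append("CPUExecutionProvider")
    return chosen


def create_session(model_path):
    """Open an ONNX model with the best providers found in this install."""
    # Imported lazily so pick_providers can be used without onnxruntime.
    import onnxruntime as ort

    providers = pick_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, providers=providers)
```

Which providers are actually available depends on the ONNX Runtime package that is installed (for example, a GPU or DirectML build), so the same script can fall back to the CPU on machines without an accelerator.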

Microsoft says that 100,000 models on the Hugging Face platform still do not support the ONNX format. It encourages more research institutions and open-source projects to join the ONNX community and improve their development efficiency through ONNX Runtime.
