Baichuan Intelligence Launches Baichuan 2, Outperforming LLaMA 2 in Both Liberal Arts and Sciences


On September 6, under the guidance of the Beijing Municipal Science and Technology Commission, Zhongguancun Science Park Management Committee, and Haidian District Government, Baichuan Intelligence held a large model launch event themed 'Converging Rivers into the Sea, Open Source for Mutual Benefit.' Academician Zhang Bo of the Chinese Academy of Sciences attended and delivered a speech. The event announced the open-source release of the fine-tuned Baichuan 2-7B, Baichuan 2-13B, Baichuan 2-13B-Chat, and their 4-bit quantized versions, all free for commercial use.

Baichuan Intelligence also open-sourced the checkpoints saved during model training and announced the upcoming release of the Baichuan 2 technical report. The report details the training process, helping academic institutions, developers, and enterprise users better understand how the model was built and advancing large model research and the community's technical progress.

Baichuan 2 download address: https://github.com/baichuan-inc/Baichuan2

Outstanding Performance in Both Liberal Arts and Sciences, Surpassing LLaMA 2

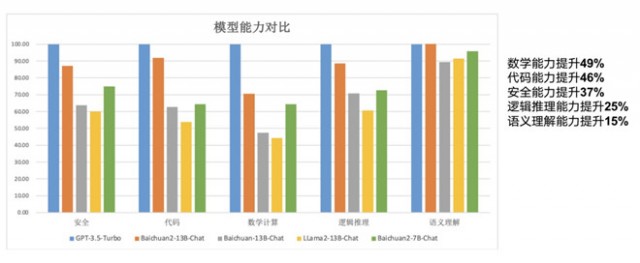

Baichuan 2-7B-Base and Baichuan 2-13B-Base were trained on 2.6 trillion tokens of high-quality multilingual data. The new models retain the strengths of the previous generation, including strong generation and creative writing, smooth multi-turn dialogue, and low deployment barriers, while showing significant gains in mathematics, coding, safety, logical reasoning, and semantic understanding. Compared with the previous 13B model, Baichuan 2-13B-Base improved by 49% in math, 46% in coding, 37% in safety, 25% in logical reasoning, and 15% in semantic understanding.

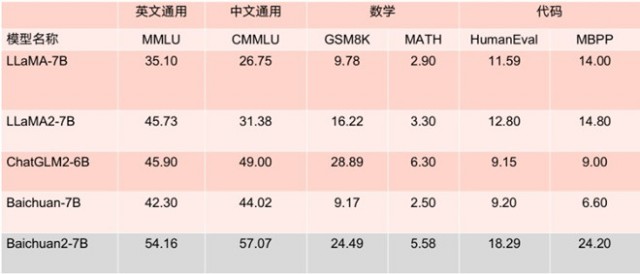

The two open-sourced models performed exceptionally well on major evaluation benchmarks, such as MMLU, CMMLU, and GSM8K, significantly outperforming LLaMA 2 and other models of similar scale.

Notably, Baichuan2-7B, with 7 billion parameters, matched the performance of LLaMA 2 (13 billion parameters) on mainstream English tasks according to authoritative benchmarks like MMLU.

Benchmark results for the 7B parameter model

Benchmark results for the 13B parameter model

Baichuan2-7B and Baichuan2-13B are fully open for academic research. For commercial use, developers only need to apply by email for an official commercial license, after which they can use the models free of charge.

First in China to Open-Source Model Training Checkpoints, Boosting Academic Research

Training large models involves multiple complex stages including acquiring massive high-quality data, maintaining stable large-scale training clusters, and optimizing model algorithms. Each phase requires substantial investments in talent and computing resources. The prohibitive cost of training models from scratch has hindered in-depth academic research on large-scale models.

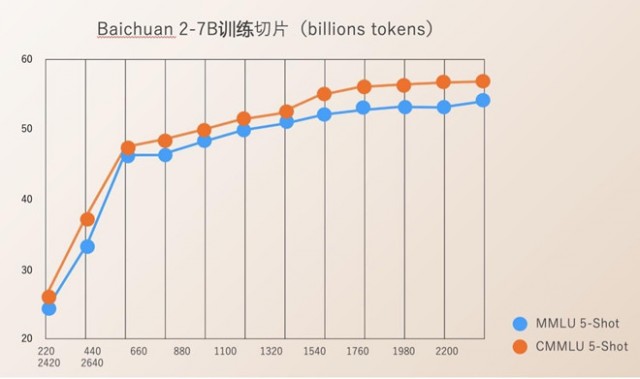

In the spirit of collaboration and continuous improvement, Baichuan Intelligence has open-sourced the checkpoints from the entire training run, from 220B to 2,640B training tokens. These checkpoints are highly valuable for research institutions studying the training process of large models, continued training, and value alignment. This unprecedented move is expected to significantly advance large model research in China.

Technical Report Reveals Training Details to Enrich Open-Source Ecosystem

Currently, most open-source models release only their weights without disclosing training details. This limits enterprises, research institutions, and developers to superficial fine-tuning rather than in-depth research.

Embracing transparency, Baichuan has published a comprehensive technical report on Baichuan 2's training process, covering data processing, model architecture optimization, scaling laws, and performance metrics. The report is available at:

https://baichuan-paper.oss-cn-beijing.aliyuncs.com/Baichuan2-technical-report.pdf

Since its founding, Baichuan has prioritized fostering China's large model ecosystem through open-source initiatives. Within four months, it released Baichuan-7B and Baichuan-13B (top-ranked Chinese LLMs with more than 5 million downloads), along with the search-enhanced Baichuan-53B.

As the only newly founded AI company approved under China's generative AI regulations, Baichuan has partnered with industry leaders including Tencent Cloud, Alibaba Cloud, Huawei, and MediaTek.

Moving forward, Baichuan will continue open-sourcing advanced capabilities to accelerate China's large model ecosystem development through collaborative innovation.