Custom ChatGPT Store Launching Next Week! Users Can Create Custom ChatGPT Assistants


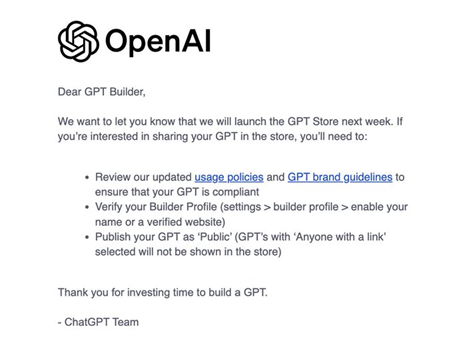

OpenAI has announced the upcoming launch of a custom GPT store, a platform where users can share the custom ChatGPT assistants they have built. The store works much like Apple's App Store and opens new business opportunities around large language models. Using the point-and-click builder for custom GPTs, users can quickly develop assistants for specific domains such as law, finance, and healthcare, and choose whether to make them publicly available to earn revenue.

Steps to Develop Custom ChatGPT Assistants

Users can build a custom ChatGPT assistant in a few simple steps. First, they sign up for a Plus account and enter the custom build mode. Next, they type instructions into the dialog box to describe what they need, upload any additional data, name the assistant, and configure its capabilities. Optional capabilities include web browsing, image generation, and code interpretation, which together allow multimodal output. Finally, they choose whether to keep the assistant private or share it with users worldwide.
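The choices made during these steps can be pictured as a small configuration record. The sketch below is only an illustration of that idea: the class `CustomAssistantConfig`, its field names, and the sample values are all hypothetical and do not correspond to any OpenAI API, since the builder described here is operated through the ChatGPT interface rather than through code.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Visibility(Enum):
    """Whether the assistant stays private or is shared (hypothetical)."""
    PRIVATE = "private"
    PUBLIC = "public"


@dataclass
class CustomAssistantConfig:
    """Hypothetical record of the choices a user makes in the builder."""
    name: str
    instructions: str
    knowledge_files: list[str] = field(default_factory=list)  # uploaded data
    web_browsing: bool = False        # internet connectivity
    image_generation: bool = False    # image output
    code_interpreter: bool = False    # code interpretation
    visibility: Visibility = Visibility.PRIVATE

    def enabled_capabilities(self) -> list[str]:
        """Return the names of the optional capabilities switched on."""
        flags = {
            "web_browsing": self.web_browsing,
            "image_generation": self.image_generation,
            "code_interpreter": self.code_interpreter,
        }
        return [name for name, enabled in flags.items() if enabled]


# Example: a legal-domain assistant kept private by default.
config = CustomAssistantConfig(
    name="Contract Helper",
    instructions="Answer questions about contract clauses in plain language.",
    knowledge_files=["sample_contracts.pdf"],
    web_browsing=True,
    code_interpreter=True,
)
print(config.enabled_capabilities())
```

Modeling visibility as a separate field mirrors the final step above, where sharing is decided only after the assistant itself has been configured.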

Custom GPT Application Scenarios Across Different Fields

Custom GPT assistants can be useful in many fields. Scientists can build an assistant that understands and answers highly specialized questions by drawing on professional literature and research notes, then share it with other researchers in the same field. Teachers can build an assistant from student work and teaching resources that helps with conversation practice, pronunciation correction, and assignment grading, then share it with their students. Product managers can build an assistant that identifies user needs and guides product iteration by recognizing common issues in user-generated content.

Safety and Collaboration of AI Chatbots

OpenAI has drawn up plans to address the potential dangers of artificial intelligence as it develops ChatGPT. It uses AI model risk 'scorecards' to measure and track potential harms, and it coordinates with other laboratories and stakeholders to ensure that AI is used beneficially and ethically for humanity.