Microsoft Researchers Introduce New AI Method to Improve High-Quality Text Embeddings Using Synthetic Data

-

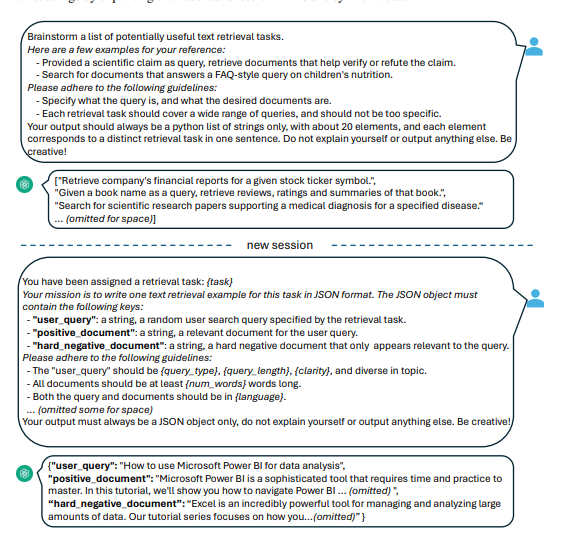

January 4th News: Microsoft's research team recently proposed a unique and simple method for generating high-quality text embeddings. This new approach achieves remarkable results using only synthetic data and minimal training steps (fewer than 1,000). Compared to existing methods, this technique does not rely on multi-stage pre-training or limited labeled data fine-tuning, avoiding cumbersome training processes and the issues of manually collecting datasets, which often suffer from limited task diversity and language coverage.

This method leverages proprietary large language models to generate various synthetic data for text embedding tasks in approximately 100 languages. Unlike complex pre-training phases, the approach uses a basic contrastive loss function to fine-tune open-source decoder-only large language models on the generated synthetic data.

The research team conducted several tests to validate the method's effectiveness. The model demonstrated outstanding results in highly competitive text embedding benchmarks without using any labeled data. When improved with a combination of synthetic and labeled data, the model set new records on the BEIR and MTEB benchmarks, becoming the state-of-the-art method in the field of text embeddings.

Proprietary large language models such as GPT-4 are used to generate various synthetic data, including multilingual instructions. By leveraging the powerful language understanding capabilities of the Mistral model, this method achieves outstanding performance across nearly all job categories in the highly competitive MTEB benchmark.

The study demonstrates that using large language models can significantly improve the quality of text embeddings. The training process of this research greatly reduces the need for intermediate pre-training, making it more concise and efficient compared to current multi-stage systems.

Paper URL: https://arxiv.org/abs/2401.00368