From 2023 to 2024: The Advancements, Challenges, and New Entrepreneurial Opportunities in AI


In December 2023, Nature magazine released its annual 'Ten People Who Mattered in Science' list, and for the first time in history, a 'non-human' was included—ChatGPT made the list.

Nature noted: 'Although ChatGPT is not an individual and does not fully meet the selection criteria, we decided to make an exception to acknowledge that generative AI is fundamentally changing the trajectory of scientific development.'

▲ Image source: Nature

In the 2023 technology landscape, generative AI has undoubtedly marked a significant turning point. Its development has not only attracted widespread attention from the industry but has also had a profound impact on the global economy, social structures, and even our expectations for the future.

This is an AI revolution in which every ordinary person can participate. From the continuous development of large language models to the widespread application of AI technologies across various industries, and the ongoing competition between open-source and closed-source strategies, every step of AI's progress is outlining the contours of future trends.

Facing this surging wave, the Chinese government has introduced a series of policy measures to support AI development in documents such as the 14th Five-Year Plan for National Informatization and the Guidelines on Accelerating Scenario Innovation to Promote High-Quality Economic Development through High-Level AI Applications. China's AI industry has also rapidly expanded, giving rise to a group of internationally competitive AI enterprises.

As the year comes to a close, we reflect on the advancements in generative AI in 2023, discussing its impact on humanity, industry trends, and future opportunities in entrepreneurship and investment. This is not only a review of the past year's developments in the AI field but also a contemplation on the direction of AI's future.

Key takeaways to share first:

Before the ecosystem of truly valuable AI applications flourishes, betting on core technologies like large models and 'shovel-selling' companies makes sense. However, the currently booming AI applications are equally vital as sources of value creation and represent the vast frontier we aspire to explore.

Closed-source large language model providers such as OpenAI charge traffic fees to the applications that connect to their APIs. To reduce the burden of these traffic costs, application companies have two main approaches: one is to build on open-source models and train their own small-to-medium-sized models, and the other is to optimize their business models to offset the traffic expenses.

With the advancement of AI technology, work methodologies will undergo transformation. AI technology has the potential to restructure both human workflows and the workflows of language models themselves.

Effectively utilizing such highly intelligent tools as AI presents a significant challenge for humanity. However, we need not be overly pessimistic, as the capabilities of AI have their limits.

In the field of AI technology, the development paths of the United States and China each have their own characteristics. The US has largely established its leading large language model camp, while China's large language models present a flourishing landscape. For China, the more important focus is vigorously developing the AI application ecosystem.

AI Agents are a noteworthy entrepreneurial direction. An AI Agent is a piece of intelligent software capable of autonomously executing tasks, making independent decisions, actively exploring, self-iterating, and collaborating with others.

Although the large language model field has achieved numerous technological breakthroughs, there are still many areas that can be iterated and improved, such as reducing 'hallucinations,' increasing context length, achieving multimodal capabilities, embodied intelligence, complex reasoning, and self-iteration.

Key points for entrepreneurship in the AI application field: create high-quality native new application experiences; be more forward-looking, find non-consensus areas, and aim for disruptive potential; focus on user growth and commercialization potential; seize the dividends of macro trends; keep a safe distance from large models while building your own business depth; and, most importantly, have the right team.

Startups should dare to do what is right rather than easy in non-consensus areas.

From an industry perspective, the development of AI can be divided into two stages so far:

The 1.0 phase focused primarily on analysis and judgment, while the 2.0 phase places more emphasis on generation. The representative models of the 2.0 phase are large language models and image generation models, and the Transformer and the diffusion model are the two architectures driving the development of generative AI.

For most of 2023, OpenAI's products dominated the performance rankings of large language models, especially after the company released its GPT-4 language model in March, leaving competitors far behind. However, Google successfully launched its latest large language model Gemini in December, creating a duopoly with GPT-4 in the field.

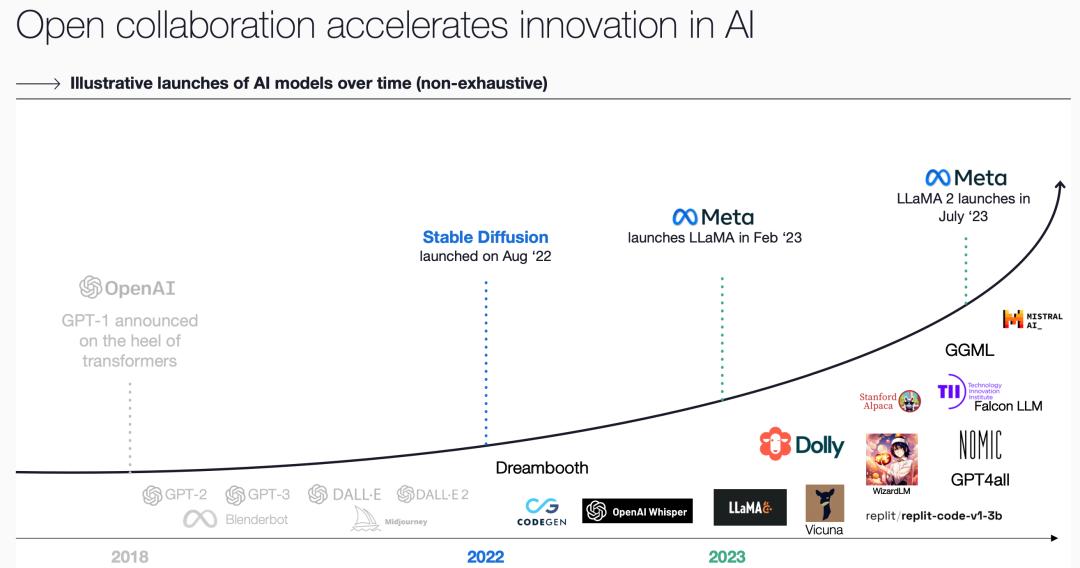

In the field of AI, the open-source model community has always been present. Supported by the open-source large language models LLaMA and Llama2 from Meta (formerly Facebook), the community is intensively engaged in scientific research and engineering iterations. Efforts include matching the capabilities of large models with smaller ones, supporting longer contexts, and adopting more efficient algorithms and frameworks for model training.

Multimodal AI (images, videos, and other multimedia forms) has become a hot topic in AI research. Multimodality involves both input and output aspects. Input refers to enabling language models to understand information embedded in images and videos, while output involves generating media forms beyond text, such as text-to-image. Considering that human capacity to generate and acquire data is limited and may not sustain AI training in the long term, future training of language models may rely on data synthesized by AI itself.

In the field of AI infrastructure, NVIDIA has become the industry leader on the strength of massive market demand for its GPUs, joining the $1 trillion market capitalization club. However, it also faces fierce competition from traditional rivals like AMD and Intel, as well as from major tech companies such as Google and Microsoft, from OpenAI, and from emerging language model startups.

Beyond large models, the industry has strong demand for various types of AI applications. Generative AI has made significant progress in multiple areas, including images, videos, programming, voice, and intelligent collaboration applications.

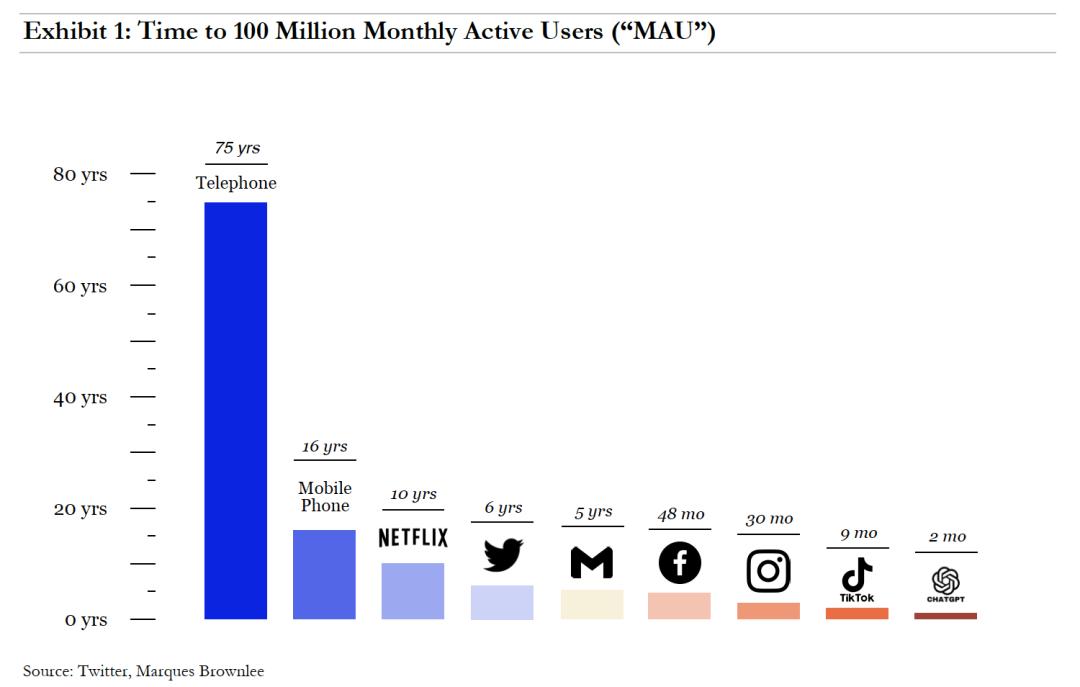

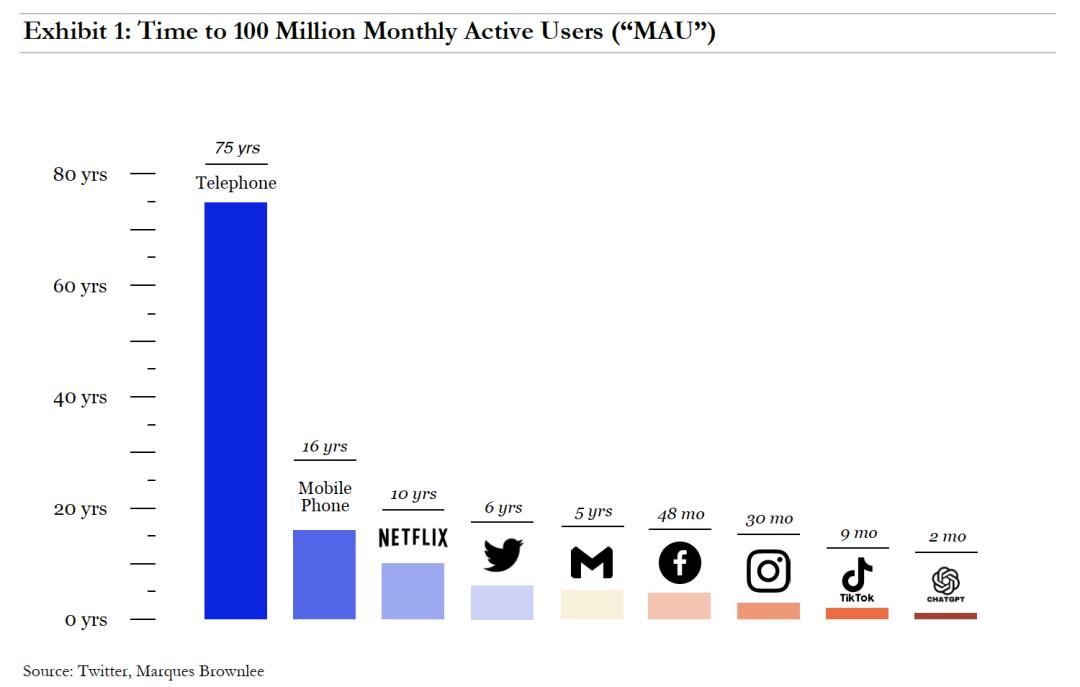

Global users have shown tremendous enthusiasm for generative AI. ChatGPT reached 100 million monthly active users in just 2 months. By comparison, the super apps of the smartphone era needed significant promotional budgets and far more time to reach the same milestone: TikTok took 9 months, Instagram 2.5 years, WhatsApp 3.5 years, and YouTube and Facebook 4 years each.

▲ Time taken for different types of tech applications to reach 100 million monthly active users. Image source: 7 Global Capital

Venture capital firms are also investing heavily to support advancements in the AI field.

According to statistics from the U.S. investment firm COATUE, as of November 2023, venture capital firms have invested nearly $30 billion in the AI sector. Approximately 60% of this investment has gone to emerging large language model companies like OpenAI, about 20% to the infrastructure supporting and delivering these models (AI cloud services, semiconductors, model operation tools, etc.), and around 17% to AI application companies.

▲ Image source: COATUE

Before the flourishing of truly valuable AI application ecosystems, the investment logic focusing on core technologies and 'shovel-selling' companies makes sense. However, the currently booming AI applications are also a source of value creation and the vast frontier we aspire to explore.

In 2022, following the open-sourcing of Stable Diffusion, we witnessed a surge in "text-to-image" products (generating images from text). 2022 can be regarded as the year when the problem of image generation was solved.

Then in 2023, technologies using AI to recognize voices and produce audio also made significant progress. Today, AI's speech recognition and synthesis technologies have become highly mature, making synthesized voices nearly indistinguishable from human voices.

With the continuous advancement of technology, video generation and processing will be the next major focus in AI development. Currently, there have been several technological breakthroughs in the field of "text-to-video" (generating videos from text), showcasing AI's potential and possibilities in video content generation. With the help of emerging AI video models and applications like Runway Gen-2, Pika, and Stanford University's W.A.L.T, users can simply input a description of an image to obtain a video clip.

NVIDIA's renowned engineer Jim Fan believes that in 2024, AI is highly likely to make significant progress in the field of video.

If we consider media formats from a different dimension, a two-dimensional image becomes a video when a time dimension is added. Adding a spatial dimension transforms it into 3D. By rendering 3D models, we can achieve more precisely controllable videos. In the future, AI may gradually master 3D models, but this will take more time.

In 2023, OpenAI's Chief Scientist Ilya Sutskever proposed the idea that "compression is intelligence" during an external sharing session. This suggests that the higher the compression ratio of a language model on text, the higher its level of intelligence.

Compression equals intelligence—this may not be strictly rigorous, but it provides an explanation that aligns with human intuition: the most extreme compression algorithms, in their quest to compress data to the utmost, must abstract higher-level meanings based on a thorough understanding.

Take Llama2-70B, a language model developed by Meta, as an example. It is the 70-billion-parameter version of the Llama2 model and is currently one of the largest open-source language models available.

Llama2-70B was trained on approximately 10 trillion bytes of text data, resulting in a 140GB model file with a compression ratio of about 70 times (10T/140G).

In daily work, we usually compress large text files into Zip files, which typically have a compression ratio of around 2 times. By comparison, you can imagine the compression strength of Llama2. Of course, Zip files use lossless compression, while language models use lossy compression, so they are not directly comparable standards.
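The arithmetic behind these figures can be checked in a few lines. The sketch below recomputes the roughly 70x ratio from the numbers quoted above and, for contrast, measures a lossless ratio with Python's standard `zlib` module. The figure of 2 bytes per parameter (16-bit weights) is an assumption that the text does not state, though it is consistent with a 140GB file for 70 billion parameters. The sample text is made up and deliberately repetitive, so its ratio will be much higher than what ordinary prose achieves.

```python
import zlib

TRAINING_TEXT_BYTES = 10e12   # about 10 trillion bytes of text, as quoted above
PARAMETERS = 70e9             # Llama2-70B
BYTES_PER_PARAMETER = 2       # assumption: weights stored as 16-bit numbers

model_file_bytes = PARAMETERS * BYTES_PER_PARAMETER    # 140e9 bytes, i.e. 140GB
model_ratio = TRAINING_TEXT_BYTES / model_file_bytes   # roughly 71


def lossless_ratio(data: bytes) -> float:
    """Original size divided by zlib-compressed size (lossless)."""
    return len(data) / len(zlib.compress(data, level=9))


# Made-up, highly repetitive sample; real documents compress far less.
sample = ("Generative AI is changing how software is built. " * 50).encode("utf-8")

print(f"model file size: {model_file_bytes / 1e9:.0f} GB")
print(f"lossy 'compression' ratio of the model: {model_ratio:.1f}x")
print(f"zlib ratio on the repetitive sample: {lossless_ratio(sample):.1f}x")
```

The two ratios measure different things: `zlib` can restore every byte, while a language model cannot reproduce its training text exactly, which is the lossy versus lossless caveat noted above.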

▲ Screenshot shared by Andrej Karpathy of OpenAI. Image source: Web3 Sky City

The marvel lies in the fact that a 140GB file can encapsulate so much human knowledge and intelligence. Most laptops can store a 140GB file. Given sufficient computing power and VRAM, a C program of roughly 500 lines is enough to run the large language model from that file.

Open research forms the foundation of AI technology development. The world's top scientists and engineers publish numerous papers on platforms like arXiv, sharing their technical practices. From early breakthroughs like the AlexNet convolutional neural network to Google's foundational Transformer architecture and the model papers published by companies like OpenAI and Meta, these are all significant scientific and technological breakthroughs that guide the advancement of AI technology.

The development and iteration of open source communities are especially worth noting. With the support of open-source large language models, researchers and engineers can freely explore various new algorithms and training methods. Even closed-source large language models can learn from and draw inspiration from the open-source community.

It can be said that open source communities have achieved a certain degree of technological democratization, allowing people worldwide to share the latest advancements in the field of AI.

Returning to the essence of business, the training costs of large language models are extremely expensive. Taking GPT as an example, according to statistics from Yuanchuan Research Institute, training GPT-3 cost over $10 million, training GPT-4 cost more than $100 million, and the training cost for the next-generation model may reach $1 billion. Additionally, when running these models and providing external services, the consumption of computing power and energy is also very costly.

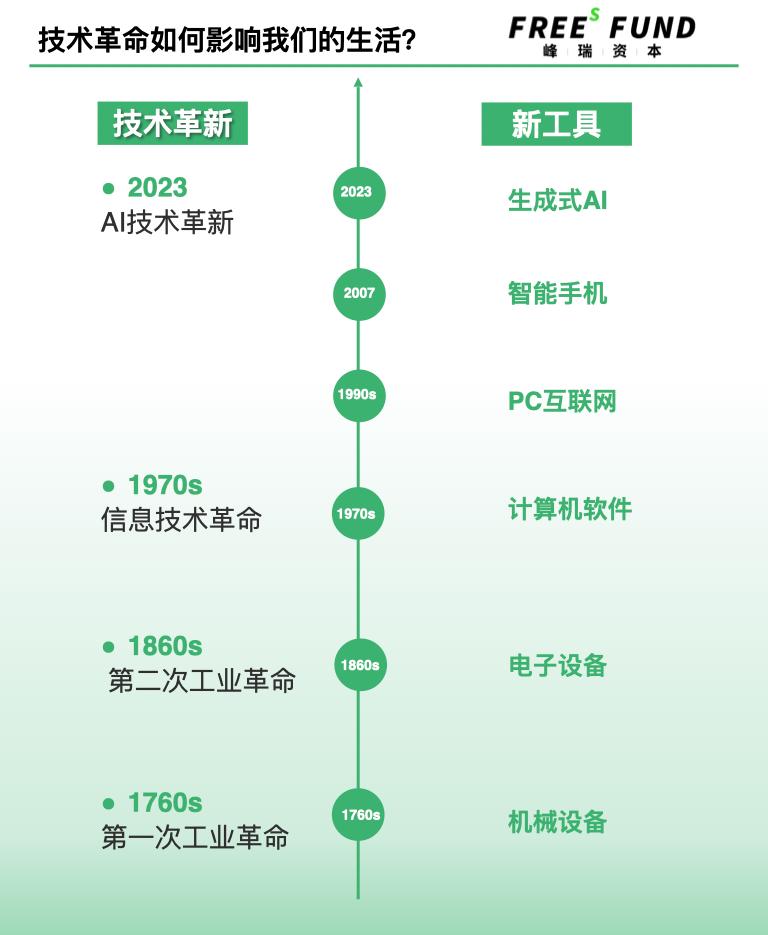

The business model for large language models is MaaS (Model as a Service), where the billing method for intelligence output is based on input and output traffic (or tokens). Due to the high training and operational costs of large language models, the traffic fees charged are highly likely to 'rise with the tide.'

▲ Image source: openai.com

Taking OpenAI as an example, the image above shows part of the traffic billing plan for some models displayed on its official website. By one rough estimate, based on the median traffic usage of AI applications calling GPT-3.5 Turbo, each daily active user (DAU) costs the app company approximately 0.2 RMB per day in traffic fees paid to OpenAI. Extrapolating from this, an app with 10 million daily active users that integrates GPT's API would need to pay OpenAI about 2 million RMB in traffic fees every day.
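The token-based billing and the per-user extrapolation can be written out as a small calculation. This is a rough sketch: the function names and the per-1K-token prices in the example call are placeholders chosen for illustration, not OpenAI's actual rates. Only the figure of 0.2 RMB per daily active user comes from the estimate quoted above.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Cost of one API call when input and output tokens are billed separately."""
    return ((input_tokens / 1000) * price_in_per_1k
            + (output_tokens / 1000) * price_out_per_1k)


def daily_traffic_fee(daily_active_users: int, fee_per_user: float) -> float:
    """Total daily fee if each active user costs a fixed amount per day."""
    return daily_active_users * fee_per_user


# Hypothetical prices, for illustration only.
example_call = request_cost(1500, 500, price_in_per_1k=0.01, price_out_per_1k=0.02)

# The estimate quoted in the text: 0.2 RMB per DAU, applied to 10 million DAU.
fee = daily_traffic_fee(10_000_000, 0.2)
print(f"{fee:,.0f} RMB per day")
```

Because the fee scales linearly with active users, it grows from the first day an application has traffic, which is why the cost weighs so heavily on business model design.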

▲ Image source: WeChat Official Account @AI Empowerment Lab

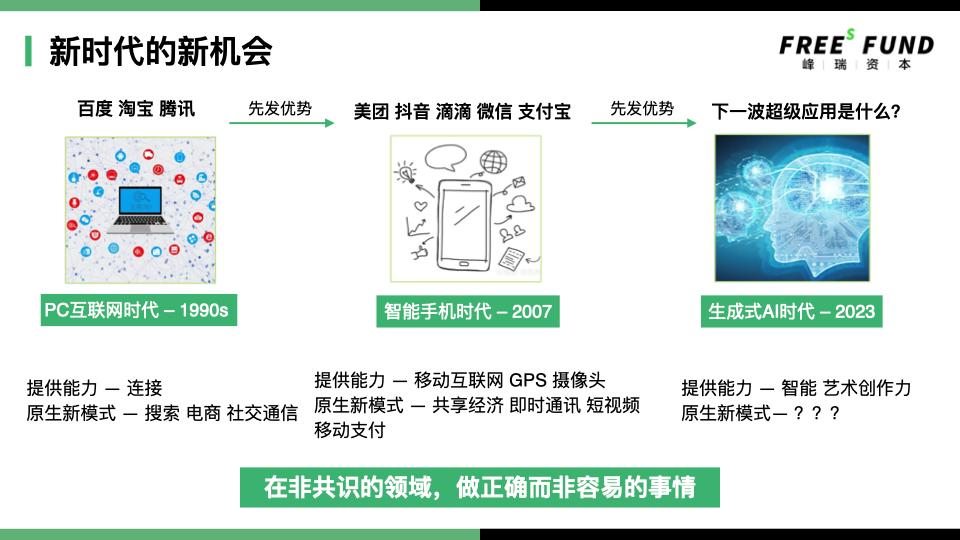

The traffic fee pricing for domestic large models is as shown in the image above, which is basically on par with OpenAI's prices. Some smaller models are cheaper, but there is a performance gap.

Traffic costs significantly impact how AI applications design their business models. To alleviate the burden of traffic expenses, some startups consider leveraging the capabilities of open-source ecosystems by developing medium-small models to handle the majority of user demands. Only when user requests exceed the capabilities of these medium-small models do they resort to calling upon large language models.
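One way to picture this "small model first, large model as fallback" arrangement is a simple router. The sketch below is illustrative only: `small_model` and `large_model` are hypothetical stand-ins rather than real APIs, and using a confidence score with a threshold to decide when a request exceeds the small model's capabilities is an assumption, since the text does not say how that decision is made.

```python
from typing import Callable, Tuple

# A model takes a prompt and returns (answer, confidence between 0 and 1).
Model = Callable[[str], Tuple[str, float]]


def route(prompt: str, small: Model, large: Model,
          threshold: float = 0.7) -> Tuple[str, str]:
    """Try the cheap model first; escalate only when it is not confident."""
    answer, confidence = small(prompt)
    if confidence >= threshold:
        return answer, "small"
    answer, _ = large(prompt)
    return answer, "large"


# Hypothetical stand-ins for a self-hosted small model and a paid large model.
def small_model(prompt: str) -> Tuple[str, float]:
    # Pretend the small model is only confident on short prompts.
    confidence = 0.9 if len(prompt.split()) <= 8 else 0.3
    return f"[small] {prompt}", confidence


def large_model(prompt: str) -> Tuple[str, float]:
    return f"[large] {prompt}", 0.95


print(route("What time do you open?", small_model, large_model))
print(route("Compare these three contracts clause by clause and list every "
            "conflicting obligation", small_model, large_model))
```

Under this arrangement the traffic fee is paid only for the fraction of requests that are escalated to the large model.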

These medium-small models may be deployed directly on the user's own device, becoming "edge-side models." Edge-side models place heavy demands on hardware integration. In the future, our computers and mobile devices might more widely integrate chips such as GPUs, making it possible to run small models on the device. Google and Microsoft have already introduced small models capable of running on-device. Nano, the smallest variant of Google's Gemini large model, is specifically designed to run locally and offline on mobile devices, without requiring an internet connection.

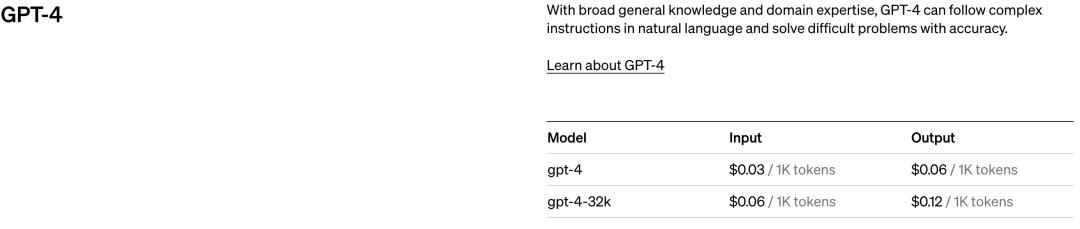

There have been several major technological revolutions in human history. The First Industrial Revolution, which emerged around 1760, introduced mechanical equipment. The Second Industrial Revolution, after 1860, brought about electrical equipment. From the 1970s onward, we experienced three more technological advancements: computer software, PC internet, and smartphones. Some collectively refer to these as the Third Industrial Revolution, or the Information Revolution.

The generative AI revolution starting in 2023 may be called the Fourth Industrial Revolution, as we have created a new kind of intelligence. Generative AI serves as humanity's new tool for understanding and transforming the world, and has become a new abstract tool layer.

Historical experience shows that each technological revolution significantly enhances human productivity. After the First and Second Industrial Revolutions, two abstract tool layers emerged in the natural world: the mechanical and electrical equipment layers. In the 1970s, the information technology revolution represented by computers introduced a new abstract layer: software. Through software, people began to understand, transform, and interact with the world more efficiently. Subsequently, the rise of PC internet and smartphones further propelled software technology development.

Beyond focusing on the efficiency improvements brought by AI, we must also pay attention to how machines are replacing human jobs.

According to statistics, before the first Industrial Revolution in Britain, the agricultural population accounted for about 75%, which dropped to 16% after the Industrial Revolution. After the Information Revolution in the United States, the industrial population decreased from 38% to 8.5%, with most of those industrial workers transitioning to white-collar jobs. Now, with the AI-driven intelligence revolution, white-collar workers are the first to be affected.

As AI technology advances, the organizational forms and collaboration methods in the business society may undergo a series of changes.

First, companies may trend towards becoming smaller. Business outsourcing could become very common. For example, companies might outsource sectors like research and development or marketing.

Second is the restructuring of workflows, meaning standard operating procedures (SOPs) might change. Everyone has different capabilities and energy levels, so workflows should enable people to improve efficiency and focus on their respective roles. Researchers are exploring how workflows should adjust in scenarios where AI might replace certain functions. Current language models also have areas where efficiency and capabilities can be enhanced, and these models might need to collaborate through workflow orchestration.

Beyond technical skills, improving other abilities has become crucial. For example, enhancing aesthetic appreciation and taste allows AI to assist you in generating better solutions or works. Similarly, strengthening critical thinking helps you better judge and evaluate AI-generated content.

We should more actively utilize AI, treating it as an auxiliary tool in work and life—or a co-pilot—to fully leverage its potential and advantages. (For more thoughts on the future of work, feel free to read "How Will Humans Work in the Future? | Fengrui Report 26").

In the current era of rapid AI development, many have raised concerns about the potential threats posed by AI, fearing its negative impacts on humanity. Indeed, humans have now created tools that appear more intelligent than themselves. Controlling such 'silicon-based lifeforms' as AI undoubtedly presents a tremendous challenge for mankind. Scientists are actively working to address this issue, and OpenAI has previously published papers discussing similar concerns.

However, we shouldn't be overly pessimistic. At least for now, the current level of digitalization in human society can serve to limit the boundaries of AI's capabilities.

Today's large language models are primarily trained on vast amounts of text data. Text is highly digitized and has been abstracted by humans, resulting in high information density, which makes AI training very effective.

However, outside the realm of text, AI's intelligence faces numerous limitations because it hasn't been trained on corresponding data. So for now, we don't need to worry too much—AI isn't as powerful or comprehensive as it might seem. We have ample time to familiarize ourselves with and adapt to it, finding ways to coexist harmoniously with these silicon-based entities.

Globally, large language models demonstrate notable regional development characteristics. For instance, the development paths in the United States and China each have their unique features. In the US, the leading camp of large language models has largely been established, primarily concentrated among a few major tech companies or their collaborations with top model startups. It can be said that the AI field in the US has entered a high-cost arms race phase, making it difficult for new entrants to break in.

In contrast, China's large language models present a flourishing landscape, with over a hundred projects currently claiming to be developing large models. China may rely more on the open-source ecosystem to create new language models through secondary development.

Currently, no country outside the US has developed a large language model comparable to GPT-4. In the field of large model technology, there remains a gap between China and the US.

However, the global competition in AI is far from over. For China, the most important task is to vigorously develop the AI application ecosystem. During the internet and digital economy era, China excelled in application development and exported related practical experiences overseas. By keeping up with the latest advancements in large model technology and fostering a thriving application ecosystem, China may then be able to work backward from applications to drive breakthroughs in the underlying technology.

Although the field of large language models has achieved numerous technological breakthroughs, there are still many areas that can be iterated and improved upon, such as reducing "hallucinations," increasing context length, achieving multimodality, embodied intelligence, performing complex reasoning, and enabling self-iteration.

Hallucinations can be understood as incorrect outputs, which Meta defines as "confident falsehoods." The most common cause of hallucinations is insufficient density of knowledge or data collected by the language model. However, hallucinations can also be seen as a manifestation of creativity, much like how poets can write beautiful verses after drinking—AI hallucinations might also bring us fascinating content.

There are many methods to reduce hallucinations, such as training with higher-quality corpora; improving model accuracy and adaptability through fine-tuning and reinforcement learning; and incorporating more contextual information into the model's prompts to enable more precise understanding and responses.
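The last of these methods, putting more contextual information into the prompt, can be sketched in a few lines. This is a toy illustration under stated assumptions: the knowledge-base sentences are made up, the function names are hypothetical, and ranking documents by shared words is a deliberately crude stand-in for whatever retrieval method a real system would use.

```python
import re


def tokenize(text: str) -> set:
    """Lowercase word set, used here as a crude relevance signal."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list, top_k: int = 2) -> list:
    """Return the documents sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, documents: list, top_k: int = 2) -> str:
    """Prepend retrieved context so the model can ground its answer."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, top_k))
    return ("Answer using only the context below. "
            "If the context is insufficient, say you do not know.\n"
            f"Context:\n{context}\n"
            f"Question: {question}")


# Made-up knowledge base for illustration.
docs = [
    "The store opens at 9 am on weekdays.",
    "Returns are accepted within 30 days of purchase.",
    "Shipping to Europe takes five to seven business days.",
]
print(build_prompt("When does the store open on weekdays?", docs, top_k=1))
```

The instruction to admit ignorance when the context is insufficient targets the "confident falsehood" failure mode directly, though it does not guarantee the model will comply.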

Context length is akin to the brain capacity of a language model, currently typically 32K, with a maximum of 128K—equivalent to less than 100,000 Chinese characters or English words. For language models to comprehend complex texts and handle intricate tasks, this length is still far from sufficient. The next generation of models will likely focus on expanding context length to enhance their ability to process complex tasks.
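In practice, a fixed context length means an application has to decide what to leave out. A minimal sketch of one common approach, keeping only the most recent messages that fit the budget, is shown below. Counting one token per whitespace-separated word is an assumption made for simplicity; real tokenizers count differently, especially for Chinese text, and the function names are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    # Crude proxy: one token per whitespace-separated word.
    # Real tokenizers produce different counts.
    return len(text.split())


def fit_to_window(messages: list, max_tokens: int) -> list:
    """Keep the most recent messages whose estimated total fits the window."""
    kept = []
    used = 0
    for message in reversed(messages):      # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > max_tokens:
            break                           # older messages are dropped
        kept.append(message)
        used += cost
    return list(reversed(kept))             # restore chronological order


history = ["one two three", "four five", "six seven eight nine"]
print(fit_to_window(history, max_tokens=6))
```

With a larger window, fewer messages are dropped, which is one reason longer context lengths help with complex, multi-step tasks.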

Humans primarily rely on vision to acquire information, while current language models mainly depend on text data for training. Visual data can help language models better understand the physical world. In 2023, visual data was incorporated into model training on a large scale. For example, GPT-4 introduced multimodal data, and Google's Gemini model is said to have used vast amounts of image and video data. From the performance shown in Gemini's demo videos, its multimodal interaction appears to have significantly improved, though enhancements in complex reasoning and other intellectual capabilities are not yet evident.

Embodied intelligence refers to an intelligent system based on a physical body that can perceive and act, capable of acquiring information from the environment, understanding problems, making decisions, and taking action. This concept is not overly complex; all living organisms on Earth can be considered forms of embodied intelligence. For instance, humanoid robots are also regarded as a form of embodied intelligence. Essentially, embodied intelligence extends AI with functional 'limbs' that can move and interact.

Typically, GPT provides answers in one go, without obvious multi-step reasoning or iterative backtracking. When humans think about complex problems, they often list steps on paper, repeatedly deducing and calculating. Researchers have devised some methods, such as utilizing thinking models like the Tree of Thoughts, attempting to teach GPT complex multi-step reasoning.
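The idea behind tree-style reasoning can be illustrated with a small search procedure: propose several next steps, score the partial solutions, keep the most promising few, and continue from there. The sketch below follows that breadth-first pattern on a toy arithmetic puzzle. It is not the published method itself: in Tree of Thoughts the proposing and scoring are done by prompting a language model, whereas here `propose`, `score`, and `is_goal` are hypothetical deterministic stand-ins.

```python
from typing import Callable, List, Optional


def tree_search(start: int,
                propose: Callable[[int], List[int]],
                score: Callable[[int], float],
                is_goal: Callable[[int], bool],
                beam_width: int = 2,
                max_depth: int = 4) -> Optional[List[int]]:
    """Breadth-first search over partial solutions, keeping the best few per level."""
    frontier = [[start]]
    for _ in range(max_depth):
        candidates = []
        for path in frontier:
            for nxt in propose(path[-1]):       # expand each partial solution
                new_path = path + [nxt]
                if is_goal(nxt):
                    return new_path
                candidates.append(new_path)
        # Keep only the most promising partial solutions.
        candidates.sort(key=lambda p: score(p[-1]), reverse=True)
        frontier = candidates[:beam_width]
    return None


# Toy puzzle: starting from 1, reach 14 using "add 3" or "double".
TARGET = 14


def propose(n: int) -> List[int]:
    return [n + 3, n * 2]


def score(n: int) -> float:
    return -abs(TARGET - n)     # closer to the target is better


def is_goal(n: int) -> bool:
    return n == TARGET


path = tree_search(1, propose, score, is_goal)
print(path)  # [1, 4, 7, 14]
```

Unlike a single one-shot answer, the search keeps alternatives alive at each level, so a weak early step does not doom the whole attempt.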

Current language models primarily rely on humans to design algorithms, provide computing power, and feed them data. Looking ahead, can language models achieve self-iteration? This may depend on new model training and fine-tuning methods, such as reinforcement learning. It is said that OpenAI is experimenting with a training method codenamed "Q*" to study how AI can self-iterate, but the specific progress remains unknown.

Large models are still in a period of rapid development, with significant room for improvement. Beyond the points mentioned above, there are many areas that still need to be addressed and enhanced, such as explainability, improving safety, and ensuring outputs align more closely with human values.

In September 2023, Sequoia Capital published an article titled Generative AI’s Act Two on its official website, highlighting that generative AI has entered its second phase. The first phase primarily focused on the development of language models and simple peripheral applications, while the second phase shifts focus toward creating intelligent new applications that truly address customer needs.

Future application software may gradually shift to AI Agents—a type of intelligent software capable of autonomously executing tasks, making independent decisions, proactively exploring, self-iterating, and collaborating with others. Existing traditional software may need corresponding adjustments and enhancements. Compared to traditional Version 1.0 software, AI Agents can deliver a more realistic, high-quality one-on-one service experience.

However, the challenge in developing AI Agents lies in the current immaturity and instability of language models. To achieve a good application experience, it is necessary to supplement language models with smaller models, rule-based algorithms, and even manual services in certain critical steps, thereby delivering stable performance in vertical scenarios or specific industries.

Multi-Agent collaboration has become a hot research direction. Based on standard operating procedures, multiple AI Agents working together can achieve better results than individually calling language models. Here's an intuitive explanation: each Agent may have its own strengths, weaknesses, and specialized areas, similar to human division of labor. When combined, they follow a new standard operating procedure (SOP) to perform their respective roles, inspire each other, and collaborate under mutual supervision.
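The SOP-driven collaboration described above can be pictured as a pipeline of roles with a reviewer at the end. The sketch below is a toy illustration: each "agent" is a plain function standing in for a language model call with a role-specific prompt, and the role names, the `run_sop` helper, and the review rule are all hypothetical.

```python
from typing import Callable, List, Tuple

Agent = Callable[[str], str]


def run_sop(task: str,
            steps: List[Tuple[str, Agent]],
            reviewer: Callable[[str], bool],
            max_rounds: int = 3) -> Tuple[str, List[str]]:
    """Pass the work through each role in order; repeat until the reviewer approves."""
    log = []
    work = task
    for _ in range(max_rounds):
        for role, agent in steps:
            work = agent(work)
            log.append(role)
        if reviewer(work):          # supervision step: accept or send back
            break
    return work, log


# Hypothetical agents; real ones would each call a language model.
def researcher(work: str) -> str:
    return work + " | facts gathered"


def writer(work: str) -> str:
    return work + " | draft written"


def reviewer(work: str) -> bool:
    return "draft written" in work


result, log = run_sop("launch post", [("researcher", researcher), ("writer", writer)], reviewer)
print(result)
print(log)
```

The fixed order of roles plays the part of the SOP, and the reviewer provides the mutual supervision that a single model call lacks.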

In a new era, startups need to think carefully about which native new business models this technological innovation makes possible. They also need to consider which opportunities belong to new entrants and which belong to existing industry leaders.

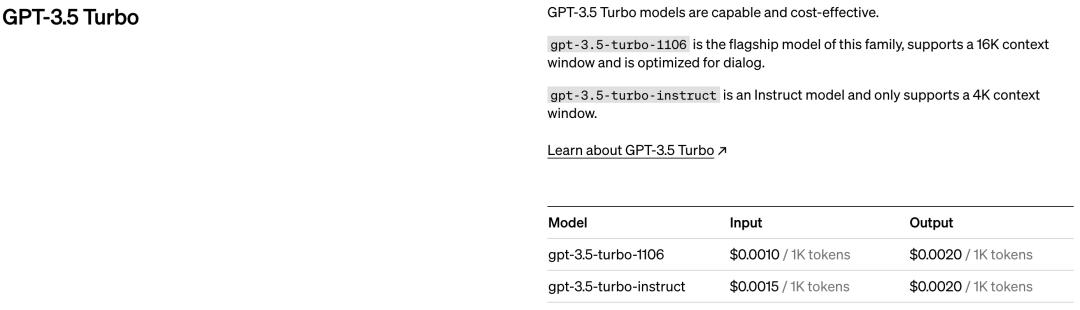

We can look back at the two technological transformations of PC internet and smartphones to see how new opportunities emerged.

In the PC internet era, the primary capability provided was connectivity, linking PCs, servers, and other devices globally. This era gave rise to native new models such as search engines, e-commerce, and social communication, leading to the emergence of industry leaders like BAT (Baidu, Alibaba, Tencent) across various sectors.

In the smartphone era, the main capability is that most people own a mobile phone equipped with mobile internet, GPS, cameras, and other functions. This foundational condition enabled new models such as the sharing economy, instant messaging, short video sharing, and mobile financial payments. Leading companies from the previous era had strong first-mover advantages and seized many opportunities in these new models—for example, Tencent and Alibaba created WeChat and Alipay, respectively. However, we also see new players like Meituan, Douyin (TikTok), and Didi achieving tremendous success. How did they manage to do so?

I believe the key to their success lies in doing what is right rather than easy in non-consensus areas.

Take Meituan and Douyin as examples. Meituan's native new model is "food delivery," which belongs to the "O2O (online to offline)" part of the "sharing economy." On one side are numerous restaurants, on the other are various consumers, and in the middle are thousands of delivery riders. It is a "heavy model," but early internet giants preferred and were more adept at "light models." Entering the catering industry was a "non-consensus" choice. The fulfillment service chain for food delivery is long and difficult to digitize, making it hard to operate with precision. However, Meituan eventually succeeded, and these difficult tasks became its greatest core advantage and competitive barrier.

Looking at TikTok, its chosen native new model is "short video sharing," which was part of the then-popular "creator economy." TikTok's biggest non-consensus move was to build a bridge between the video creator economy and trillion-scale e-commerce GMV, converting content into sales efficiently and at scale.

Before the rise of e-commerce live streaming, there were two types of live streaming: one called game live streaming, and the other called influencer live streaming, with monetization mainly relying on audience tipping. This monetization model had a very small economic scale and couldn't accommodate so many excellent creators. However, through various efforts such as recommendation algorithms, developing creator and merchant ecosystems, establishing the TikTok Shop closed loop, and optimizing content-to-e-commerce conversion, TikTok successfully created this huge commercial closed loop of converting content into e-commerce. After achieving this, TikTok could invite the largest number of excellent creators nationwide to create content on its platform, rewarding them with massive e-commerce sales revenue.

After TikTok, the international version of Douyin, went global, many local short video and live streaming platforms couldn't compete with it. This is because TikTok isn't just a video content platform connecting creators and consumers—it represents a new hybrid model that combines the creator economy with massive e-commerce GMV conversion. It's an entirely new species with compound competitive advantages.

In summary, startups should have the courage to choose and enter non-consensus fields, striving to succeed even in challenging environments.

From the perspective of entrepreneurial direction, the large model field is dominated by giants, making it unlikely to be the first choice for entrepreneurs. Between large models and applications lies a 'middle layer,' which mostly consists of infrastructure, application frameworks, model services, etc. This segment is susceptible to pressure from both models and applications, with some areas heavily dominated by giants, leaving little room for startups.

In summary, we tend to believe that, considering the current technological and commercial environment, we should vigorously develop the AI application ecosystem.

The above shows the generative AI-related startups we have invested in, including: a new DevOps platform designed for language models, a social gaming platform, intelligent companionship services, AI-assisted RNA drug development, automated store marketing, a global intelligent commercial video SaaS, a new online psychological counseling platform, and a remote hiring platform for engineers between China and the US, among others.

We have summarized several key points for entrepreneurship in the AI application field:

First, it is essential to create high-quality native new applications. Leverage the new capabilities provided by the AI era, such as intelligent supply and artistic creativity, to deliver unique and superior native application experiences. This is actually quite challenging. As mentioned earlier, the intelligence of language models is not yet mature or stable, with clear limitations. Startups may need to focus on relatively niche scenarios, employing various technical and operational strategies to achieve a good user experience.

Second, be non-consensus, more forward-looking, and disruptive. Non-consensus means not following the crowd in choosing a path, daring to enter difficult fields, and "doing what is right rather than what is easy." More forward-looking means selecting challenging business and technological routes.

For example, entrepreneurs can adopt more advanced technological architectures that are still in development, such as prioritizing Agents over Copilots, since Copilots seem more like opportunities for industry leaders (think Microsoft and GitHub). Additionally, startup teams can consider designing applications around the expected capabilities of next-generation language models (e.g., GPT-5).

Disruptiveness refers to the ability to create a transformative impact on the targeted industry, such as through disruptive product experiences or overturning existing business models. The advantage of such disruption is the potential to outpace industry leaders. For example, Fengrui Capital's investment in Babel (Babel Technology) capitalizes on emerging technological trends like "Serverless" and large language models, aiming to revolutionize software development tools and production factors by leveraging AI for programming, debugging, deployment, and operations.

Third, focus on user growth and commercialization potential. The importance of user growth potential is easily understood—even if you start in a niche market, it can eventually scale into something much larger.

Why should we focus on commercialization early?

This brings us back to the 'traffic tax' of large models mentioned earlier. If you choose to integrate with a large model, from the very first day of your startup, you will have to pay this traffic tax to the large model.

For consumer-facing applications, there are typically three main pathways to commercialization: direct user payments (such as in games or premium services), advertising, and e-commerce. Only a very few applications can successfully build an e-commerce business (examples include Taobao and Douyin). It's challenging for new applications to charge users directly, and most entrepreneurs are hesitant to do so, often opting for more indirect methods—hoping to commercialize by scaling up their user base and then monetizing through in-app advertising.

Looking at the smartphone era, apart from e-commerce applications, China's top general information apps are estimated to earn between 0.1 and 0.3 yuan per daily active user per day from advertising, which already represents the peak level of advertising monetization. For average-sized apps, the revenue per user likely falls far short of even 0.1 yuan.

We previously mentioned the 'traffic tax' of language models, where the daily cost per user is approximately 0.2 yuan. Advertising revenue often struggles to cover such costs. The larger the user base, the more severe the losses become, unless measures like on-device models are adopted to reduce this 'traffic tax'.
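The arithmetic behind this argument can be sketched in a few lines of Python. This is only a rough illustration: it uses the figures quoted above (0.1 to 0.3 yuan of advertising revenue and roughly 0.2 yuan of model cost per daily active user), assumes both scale linearly with users, and introduces the hypothetical names `daily_margin`, `break_even_on_device_share`, and `on_device_share` (the fraction of model calls moved to an on-device model at no marginal cost), none of which come from the original text.

```python
def daily_margin(dau, ad_revenue_per_dau, model_cost_per_dau=0.2, on_device_share=0.0):
    """Daily profit in yuan for an ad-funded app (negative means a loss).

    Assumptions (illustrative only): revenue and cost scale linearly with
    daily active users, and on-device inference has zero marginal cost.
    """
    if not 0.0 <= on_device_share <= 1.0:
        raise ValueError("on_device_share must be between 0 and 1")
    # Only the calls still served by the hosted large model pay the "traffic tax".
    effective_cost = model_cost_per_dau * (1.0 - on_device_share)
    return dau * (ad_revenue_per_dau - effective_cost)


def break_even_on_device_share(ad_revenue_per_dau, model_cost_per_dau=0.2):
    """Smallest share of calls to offload so that ad revenue covers model cost."""
    if ad_revenue_per_dau >= model_cost_per_dau:
        return 0.0
    return 1.0 - ad_revenue_per_dau / model_cost_per_dau


# Hypothetical user-base sizes, with ad revenue at the low end of the quoted range.
for dau in (100_000, 1_000_000, 10_000_000):
    margin = daily_margin(dau, ad_revenue_per_dau=0.1)
    print(f"DAU {dau:>12,}: daily margin {margin:>12,.0f} yuan")
```

Under these assumptions the loss grows in direct proportion to the user base, and an app earning 0.1 yuan per user would need to move about half of its model calls on-device just to break even on advertising alone.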

Therefore, AI applications may need to prioritize charging users directly in their business model design. Of course, in this new era of AI, entrepreneurs might discover commercialization paths beyond the three traditional ones. Let's wait and see.

Fourth, seize macro trend dividends. It's crucial to anticipate and capture the dividends of China's macroeconomic trends, such as cross-border e-commerce, video commerce, and the engineer dividend. We must strive to capture the β opportunities of the era.

Fengrui Capital's portfolio company Tecan Technology is also capitalizing on new trends like China's cross-border e-commerce and next-generation video commerce. The company aims to build a world-class commercial video SaaS platform through product and technology innovation, empowering overseas video entrepreneurs and merchants.

Fifth, maintain a safe distance from large models and establish your own business depth. The concept of a safe distance should be familiar to many, with several well-known negative examples overseas. For instance, some commercial applications for generating copywriting experienced "flash-in-the-pan" rapid growth but ultimately couldn't withstand the dual impact of large models and other startups. Additionally, the business depth of a startup project is crucial. This business depth refers to areas beyond the reach of large models, particularly scenarios that are difficult to digitalize or insufficiently digitalized.

Of course, the most important factor is the team. Strong technical skills are essential, and team members must also understand the industry and specific scenarios—what we call "technology first, scenarios foremost." We look forward to collaborating with innovators in the AI field and welcome you to reach out to us.