Mistral's Valuation Soars Sevenfold in Just Six Months, Open Source Reshapes the AI Landscape


When LLaMA was leaked and made available for anyone to download, the gears of open-source fate began to turn, culminating in Mistral AI's latest funding round. Seven months ago, researchers from Meta and Google founded Mistral AI in Paris. In just six months, this 22-employee startup raised $415 million in its recent Series A funding, skyrocketing its valuation from $260 million to $2 billion—a sevenfold increase.

At the same time, the company quietly released its large language model, Mixtral 8x7B.

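Mixtral 8x7B uses a sparse Mixture of Experts (MoE) architecture. Instead of passing every token through one large feed-forward block, each layer routes the token to a small subset of "expert" sub-networks (two of eight, in Mixtral's case), so only a fraction of the model's parameters is active for any given token. This sets it apart from conventional dense LLMs while still producing fluent, human-like responses.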
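To make the routing idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It is an illustration under stated assumptions, not Mistral's implementation: the function names (`top_k_route`, `moe_layer`), the toy experts, and the toy router are all hypothetical, and a real model would use learned neural networks and tensor operations instead of lists.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are a softmax over only the selected logits, so they sum to 1.
    """
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_layer(token, experts, router, k=2):
    """Run only the k routed experts and mix their outputs by router weight."""
    output = [0.0] * len(token)
    for index, weight in top_k_route(router(token), k):
        expert_output = experts[index](token)
        output = [o + weight * e for o, e in zip(output, expert_output)]
    return output


# Hypothetical toy setup: 8 "experts" that just scale the input differently,
# and a fixed router that scores expert i as i * sum(token).
toy_experts = [lambda t, s=s: [s * x for x in t] for s in range(1, 9)]
toy_router = lambda t: [i * sum(t) for i in range(len(toy_experts))]

print(top_k_route([0.1, 2.0, -1.0, 1.5]))  # two (index, weight) pairs
print(moe_layer([1.0, 2.0], toy_experts, toy_router))
```

The point of the design is that the six unselected experts are never evaluated, which is why an MoE model can hold many parameters while spending compute on only a few of them per token.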

According to company data, Mixtral 8x7B outperforms several competitors, including Meta's Llama 2 series and OpenAI's GPT-3.5.

Just four weeks after its founding, Mistral AI secured $113 million in seed funding at a valuation of approximately $260 million. Six months later, a Series A round lifted its valuation to $2 billion.

Unlike the models from the ironically named OpenAI, Mixtral 8x7B is open-source, meaning it can be used commercially for free. Developers can also modify, copy, or update the source code and distribute it, provided they include a copy of the license.

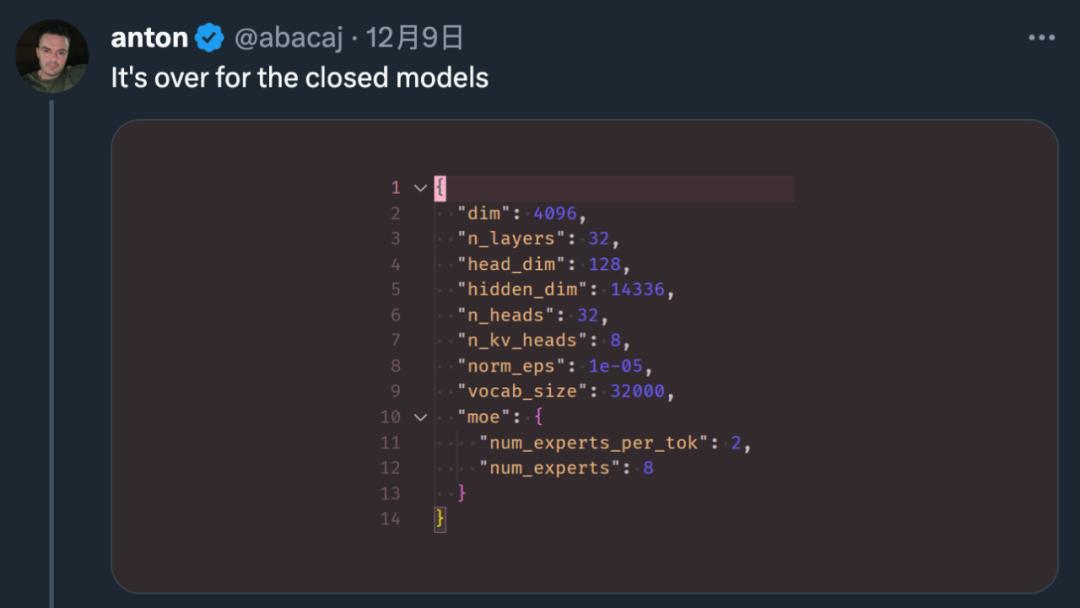

Many netizens are cheering for Mixtral 8x7B, praising how fast and fun it is. Some researchers have even claimed: "Closed-source large models have reached their end."

Before this, the company released Mistral 7B at the end of September. It is still hailed as the "best 7B model," outperforming Llama 2 13B on every benchmark and surpassing LLaMA 1 34B in code, math, and reasoning.


OpenAI has consistently kept its latest LLMs closed-source, which has provoked a degree of public backlash.

OpenAI and Google have warned that releasing such powerful models in the open-source domain is highly dangerous, as the technology could be used to spread misinformation.

They also tend to adopt a defensive release model to strictly control how the models are used. They spent months developing safety guardrails for their LLMs, ensuring they wouldn't be used to spread misinformation and hate speech or generate biased answers to questions.

Mistral AI focuses on open-sourcing all its AI software, firmly positioning itself on the opposite side of an increasingly intense culture war.

The company firmly believes that generative AI technology should be open-source, so that LLM code can be freely replicated and modified, helping other users quickly build their own chatbots.

Their pursuit is also clear: "An open, responsible, and decentralized technological approach."

Sharing the underlying code of AI widely is the safest approach, because more people can review the technology, identify its flaws, and work to eliminate or mitigate them, Anjney Midha told The New York Times. Midha, a general partner at the venture capital firm Andreessen Horowitz, led the Series A investment in Mistral AI.

"No engineering team can find every bug," he said. "Large communities are better at building cheaper, faster, better, and safer software."

The Chinese AI startup Mianbi Intelligence (面壁智能) is likewise committed to the commercial implementation of open-source LLMs.

Facing market competition, the free use of open-source software serves as a highly attractive customer acquisition strategy, while low-cost trial-and-error also helps accelerate innovation. "By adopting the open-source approach, we can more quickly reach potential user groups and reduce enterprises' cognitive and decision-making barriers," co-founder Zeng Guoyang once told Machine Heart.

Additionally, LLMs involve many technical problems that are difficult for a single enterprise to solve alone. By opening up technologies and relying on community efforts, these issues can be collectively addressed while sharing intellectual property for mutual benefit.

This tension between centralization and decentralization has consistently run through the history of modern computing technology development.

As netizens have pointed out, "Open source is not just the future, but also the past."

On Reddit, users have sparked a discussion about open source versus proprietary models following Mistral's latest funding round. Will open source be the future of LLMs?

The open-source community's ideology gained mainstream acceptance against the backdrop of large corporations' monopoly over the software industry. Today, most of the major technologies driving modern computing are open source, including operating systems, programming languages, and databases.

Meta has also been on the side of open source large models from the beginning and is seen as one of the biggest beneficiaries of open source.

However, opinions still differ on who will ultimately win this game.

Many AI researchers, tech executives, and venture capitalists believe this race will be won by companies that build the same technology and then provide it for free—even if that means having no guardrails in place.

A much-talked-about internal memo leaked from Google (titled "We Have No Moat") questions the company's steadfast commitment to proprietary models.

"We cannot win this arms race, and neither can OpenAI. While we're busy squabbling, a third faction (open source) is quietly eating our lunch," the memo states.

According to the memo, open source possesses significant advantages that Google cannot replicate. Although Google's models still hold a slight edge in quality, the gap is closing at an astonishing rate.

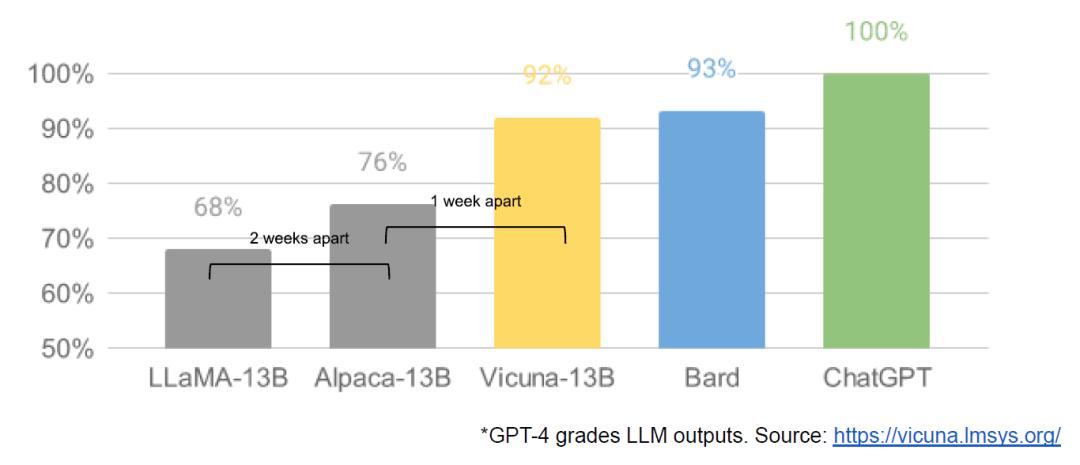

The memo's author found that the LLaMA leak was followed by an immediate surge of innovation, including models such as Alpaca and Vicuna that can run on consumer devices, and that the intervals between major advances have been shrinking. In the memo's view, Meta is an obvious winner: it has effectively harnessed the free labor of the entire planet, most open-source innovation is happening on top of its architecture, and nothing prevents it from integrating those improvements directly into its products.

Open source alternatives can and will eventually eclipse proprietary models. "When free, unrestricted alternatives are comparable in quality, people won't pay for restricted models."

This concern has been partially validated.

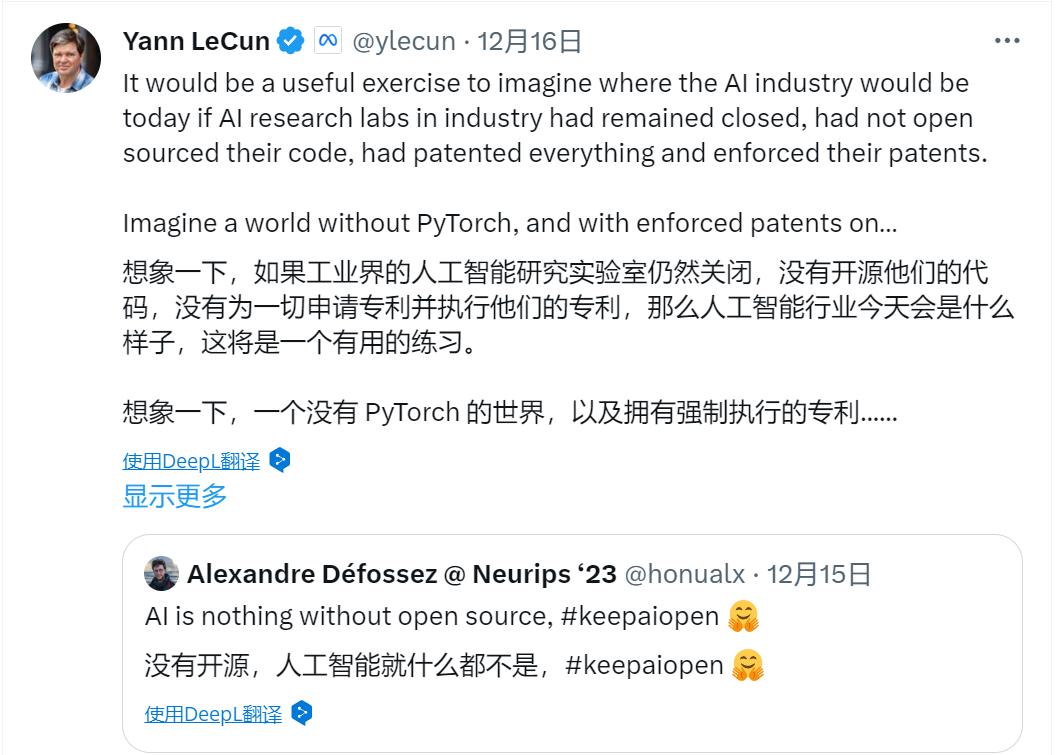

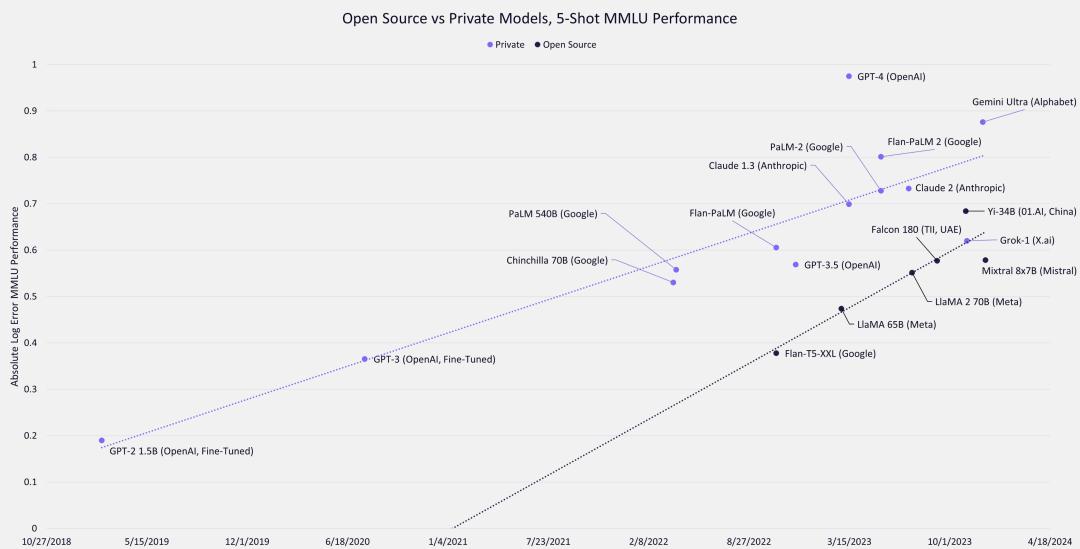

Recently, Yann LeCun, Meta's Chief AI Scientist, shared a trend chart created by ARK Invest, illustrating the development of generative AI in the open-source community versus proprietary models.

"Open source artificial intelligence models are on the path to surpassing proprietary models," he remarked.

Shortly thereafter, ARK Invest updated this widely circulated chart, adding several models including Gemini and Mixtral 8x7B.

The updated scatter plot shows the performance of open-source models steadily catching up with that of proprietary models. Some netizens even commented that we are approaching a critical point: given the current pace of open-source community projects, open-source models may reach GPT-4-level performance within the next 12 months.

We can see from the chart that leading frontier models still maintain advantages in absolute capabilities, but open-source community researchers are leveraging free online resources to achieve results comparable to the largest proprietary models.

When Meta initially released LLaMA, the parameter counts ranged from 7 billion to 65 billion. These models demonstrated outstanding performance:

The 13-billion-parameter Llama model "outperforms GPT-3 (with 175 billion parameters) on most benchmarks" and can run on a single V100 GPU.

The largest 65-billion-parameter Llama model can rival DeepMind's Chinchilla-70B and Google's PaLM-540B.

Llama 2's open-source release has once again dramatically transformed the landscape of large models.

Compared to Llama 1, Llama 2 was trained on 40% more data, has double the context length, and employs grouped-query attention, in which several query heads share the same key and value heads.
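As a rough illustration of grouped-query attention, the sketch below shows how query heads can be mapped onto a smaller set of shared key/value heads, and how that shrinks the key/value cache. The helper names and the head counts in the example are hypothetical and chosen only for readability; they are not Llama 2's actual configuration.

```python
def kv_head_for_query_head(q_head, n_q_heads, n_kv_heads):
    """Return the index of the shared key/value head used by a query head."""
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_q_heads must be a multiple of n_kv_heads")
    group_size = n_q_heads // n_kv_heads  # query heads per shared KV head
    return q_head // group_size


def kv_cache_entries(n_layers, n_kv_heads, head_dim, seq_len):
    """Count the numbers stored in the key/value cache for one sequence."""
    # Factor of 2: one tensor for keys and one for values.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len


# Illustrative configuration (assumed): 8 query heads sharing 2 KV heads.
mapping = [kv_head_for_query_head(h, n_q_heads=8, n_kv_heads=2) for h in range(8)]
print(mapping)

# Standard multi-head attention keeps one KV head per query head.
full = kv_cache_entries(n_layers=4, n_kv_heads=8, head_dim=16, seq_len=100)
grouped = kv_cache_entries(n_layers=4, n_kv_heads=2, head_dim=16, seq_len=100)
print(full // grouped)
```

Because the cache size scales with the number of key/value heads rather than query heads, sharing them reduces memory use during generation, which matters most at long context lengths.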

Falcon-40B claimed the top spot on Hugging Face's Open LLM Leaderboard immediately upon release, breaking Llama's monopoly. Currently, the largest publicly available model is Falcon 180B.

There is also the Yi model, which can process 400,000 Chinese characters at once and leads both Chinese and English benchmarks. Yi-34B is the first and so far only Chinese model to top Hugging Face's open-source model leaderboard.

Mixtral 8x7B's highlight is its cost-effectiveness: strong performance (beating GPT-3.5) at a modest model size. Going forward, more open-source MoE models of this kind would be more welcome than ever-larger ones.

The scatter plot outlines a wave of innovation that is reshaping the landscape of large models. Open-source models may compete head-to-head with proprietary ones within the next one to two years.

But not everyone agrees with this perspective.

Analysts point out that while both open source and proprietary models have their pros and cons, open source has become the clear winner in most other areas of the technology industry, such as Infrastructure as a Service (IaaS) and Platform as a Service (PaaS).

However, this is not always the case, as most leading platforms in the Software as a Service (SaaS) sector remain proprietary (closed-source) software. Therefore, it remains uncertain which approach will win the race.

Some netizens also believe that open source versus proprietary is not a zero-sum game where one must choose between the two.

LLMs might find a similar balance: the internet runs on open-source software, yet we still cannot do without proprietary paid software such as Adobe's products and Windows.

While open source is undoubtedly appealing, some netizens have questioned how companies like Mistral AI generate revenue. From an investment return perspective, why are investors placing such significant emphasis on companies like Mistral?

Open source is often associated with the spirit of free sharing and the free internet, seemingly at odds with profitability. However, in reality, open source does not mean companies cannot make money, with Red Hat being a prime example.

Before being acquired by IBM, Red Hat's last reported revenue was $3.4 billion in 2018. Its software was essentially free, with revenue mainly coming from providing support services to enterprises.

For example, the New York Stock Exchange uses the free Linux system for stock trading. Chip hardware is upgraded every few years, and the NYSE wants to adopt the new processors to improve efficiency, which requires deploying new systems and applications.

The NYSE could maintain an in-house team to handle all system maintenance and development tasks, or it could pay Red Hat to do the job. A significant number of corporate users have chosen the latter option.

Mistral AI also provides pay-as-you-go API access, catering to users who want quick and easy access to its models without managing the supporting infrastructure, similar to what OpenAI offers for its GPT models and Anthropic for its Claude models.

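Reddit users have also speculated about business models for open source. One comparison is the Netscape browser, which became a gateway for traffic and spurred the rise of other businesses such as advertising and gaming.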

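Mianbi Intelligence also discussed its business model in an interview with Machine Heart, describing it as a business similar to databases: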

The company is responsible for providing model libraries, much like today's database companies. While database companies focus on refining database performance, Mianbi Intelligence's task is to enhance the performance of large models. Governments, businesses, small developers, and even students can access this infrastructure through standard interfaces and build the capabilities their own operations require.

However, The Economist has warned that while some open-source companies may be good businesses, investors must accept that they will not generate the operating profits of traditional software companies, let alone profits comparable to Microsoft's. Being 'open-source' means they are a form of public property and cannot leverage patents to establish monopolies and reap substantial returns, as Microsoft does with Windows.

On the other hand, as The Economist once pointed out, while owning such a platform is extremely beneficial for its owner in the short term, it goes against the interests of all other companies in the industry and slows down the pace of overall technological innovation and development.

In any case, one thing is now clear—compared to a year ago, the possibility of democratizing the use of LLMs has significantly increased, and the likelihood of technology being monopolized by a few companies has decreased.

This marks another turning point in the field of computing.