Zhipu AI Open-Sources Visual Language Model CogAgent with GUI Q&A Support


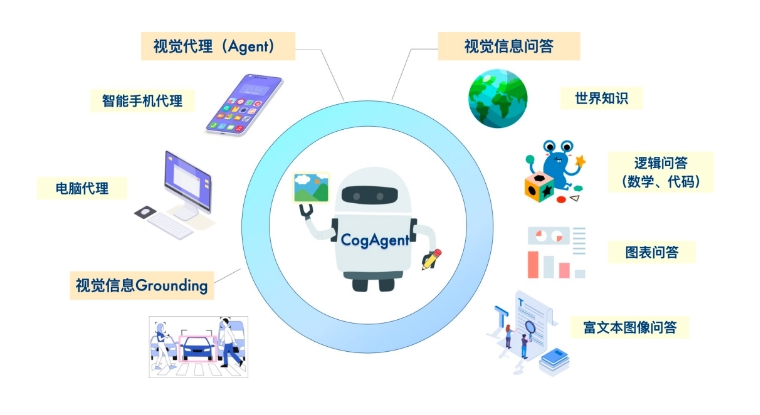

Zhipu AI has open-sourced CogAgent, a visual language model with 18 billion parameters. The model excels at GUI understanding and navigation and achieves state-of-the-art (SOTA) generalist performance on multiple benchmarks.

It also supports high-resolution visual input and conversational Q&A, and can perform question-answering on arbitrary GUI screenshots.

Given an uploaded screenshot and a task description, the model can reason about the task and return a plan, the next action to take, and the specific coordinates of the operation.
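As an illustration of how a client might use the returned operation coordinates, the sketch below pulls bounding boxes out of a response string and scales them to pixel positions on the screenshot. It assumes, purely for illustration, that boxes appear in the text as `[[x0,y0,x1,y1]]` with values on a normalized 0-999 grid; the actual response format should be checked against the model's own documentation. The helper names `extract_boxes` and `box_center` and the sample response are hypothetical.

```python
import re
from typing import List, Tuple

Box = Tuple[int, int, int, int]

# Assumption: coordinates are embedded as [[x0,y0,x1,y1]] in the response text.
BOX_PATTERN = re.compile(r"\[\[(\d+),\s*(\d+),\s*(\d+),\s*(\d+)\]\]")


def extract_boxes(response: str, width: int, height: int, grid: int = 1000) -> List[Box]:
    """Find every box in the response and scale it from the assumed
    normalized grid to pixel coordinates of a width x height screenshot."""
    boxes = []
    for match in BOX_PATTERN.finditer(response):
        x0, y0, x1, y1 = (int(v) for v in match.groups())
        boxes.append((
            x0 * width // grid,
            y0 * height // grid,
            x1 * width // grid,
            y1 * height // grid,
        ))
    return boxes


def box_center(box: Box) -> Tuple[int, int]:
    """Return the pixel at the center of a box, e.g. as a click target."""
    x0, y0, x1, y1 = box
    return ((x0 + x1) // 2, (y0 + y1) // 2)


# Hypothetical response text, not actual model output.
sample = "Plan: open the search page. Next Action: tap the search bar at [[100,200,300,400]]"
boxes = extract_boxes(sample, width=2000, height=1000)
click_target = box_center(boxes[0])
```

The center of the first box is one plausible click target; a real automation layer would also need to interpret the action type (tap, type, scroll) described in the response.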

CogAgent also supports OCR-related tasks, a capability that was significantly improved through pre-training and fine-tuning.

GitHub:

https://github.com/CogNLP/CogAGENT

cogagent-chat:

https://modelscope.cn/models/ZhipuAI/cogagent-chat/summary

cogagent-vqa:

https://www.modelscope.cn/models/ZhipuAI/cogagent-vqa/summary