UIUC and Tsinghua Jointly Release Magicoder, a New Code Model with No More Than 7B Parameters


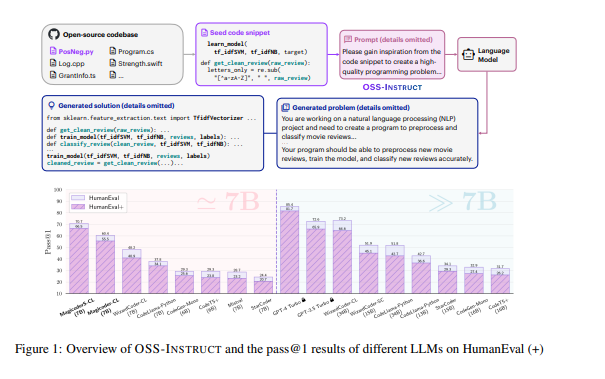

UIUC and Tsinghua University have jointly released Magicoder, a series of large language models for code generation that have no more than 7B parameters yet close much of the gap with top-tier code models. The project is fully open source: its code, model weights, and training data are all public. The key to Magicoder is its OSS-INSTRUCT method, which draws inspiration from real open-source code to generate diverse, realistic, and controllable coding instruction data. This reflects the authors' view that authenticity matters for instruction tuning.

Paper: https://arxiv.org/pdf/2312.02120.pdf

Code generation has long been a challenging research problem, but training large language models on code has recently led to major breakthroughs. Against this backdrop, Magicoder shows that a comparatively small model can be both efficient and capable. In the authors' evaluations, Magicoder performs strongly on Python code generation, on other programming languages, and on tasks that use data science libraries. Most notably, it improves by 8.3 percentage points on the DS-1000 data science benchmark, which points to its potential in practical applications.

OSS-INSTRUCT seeds the generation of instruction data with real open-source code, which makes that data more diverse and realistic than data an LLM would produce on its own. In the reported evaluations, Magicoder improves substantially across programming languages and real-world application scenarios and outperforms other open-source code models. These results support the effectiveness of OSS-INSTRUCT and show Magicoder's potential for strengthening code generation models.
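The OSS-INSTRUCT idea described above can be made concrete with a small sketch. The following Python code is a hypothetical illustration, not the authors' implementation: it samples a short run of consecutive lines from an open-source file as a seed, embeds that seed in a prompt asking an LLM to write a programming problem and solution inspired by it, and parses the reply into an instruction pair. The prompt wording, the 1 to 15 line window, the `[Problem Description]`/`[Solution]` section markers, and all function names are assumptions for illustration; the actual LLM call is omitted.

```python
import random
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical prompt template paraphrasing the OSS-INSTRUCT idea: the LLM is
# asked to invent a coding problem inspired by a real open-source snippet.
PROMPT_TEMPLATE = (
    "Gain inspiration from the following random code snippet to create a "
    "high-quality programming problem. Present your output in two sections: "
    "[Problem Description] and [Solution].\n\n"
    "Code snippet for inspiration:\n{snippet}\n"
)

# Splits a model reply into the two sections requested by the template.
SECTION_RE = re.compile(r"\[Problem Description\](.*?)\[Solution\](.*)", re.DOTALL)


@dataclass
class InstructionPair:
    problem: str
    solution: str


def sample_seed_snippet(code: str, rng: random.Random,
                        min_lines: int = 1, max_lines: int = 15) -> str:
    """Return a random run of consecutive lines (window size is an assumption)."""
    lines = code.splitlines()
    if not lines:
        raise ValueError("source file is empty")
    length = rng.randint(min_lines, min(max_lines, len(lines)))
    start = rng.randint(0, len(lines) - length)
    return "\n".join(lines[start:start + length])


def build_prompt(snippet: str) -> str:
    """Embed the seed snippet in the hypothetical generation prompt."""
    return PROMPT_TEMPLATE.format(snippet=snippet)


def parse_response(response: str) -> Optional[InstructionPair]:
    """Extract a problem/solution pair, or None if the reply is malformed."""
    match = SECTION_RE.search(response)
    if match is None:
        return None
    problem, solution = (part.strip() for part in match.groups())
    if not problem or not solution:
        return None
    return InstructionPair(problem, solution)


if __name__ == "__main__":
    seed_file = "def add(a, b):\n    return a + b\n\nprint(add(2, 3))\n"
    snippet = sample_seed_snippet(seed_file, random.Random(0))
    print(build_prompt(snippet))
    # A made-up reply standing in for a real LLM call.
    reply = ("[Problem Description]\nWrite a function add(a, b).\n"
             "[Solution]\ndef add(a, b):\n    return a + b")
    print(parse_response(reply))
```

In this sketch, seeding each prompt with a different real snippet is what pushes the generated problems toward the variety found in open-source code rather than toward the model's own habits; malformed replies are simply discarded.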

Magicoder still has room for improvement, but its release is an important step forward for code generation. Because all of its data and code are open source, Magicoder may turn out to be only the first of many advanced code models built on this work, and further innovation and progress are likely to follow.