Zhipu AI Releases AlignBench: The First Chinese LLM Alignment Evaluation Benchmark

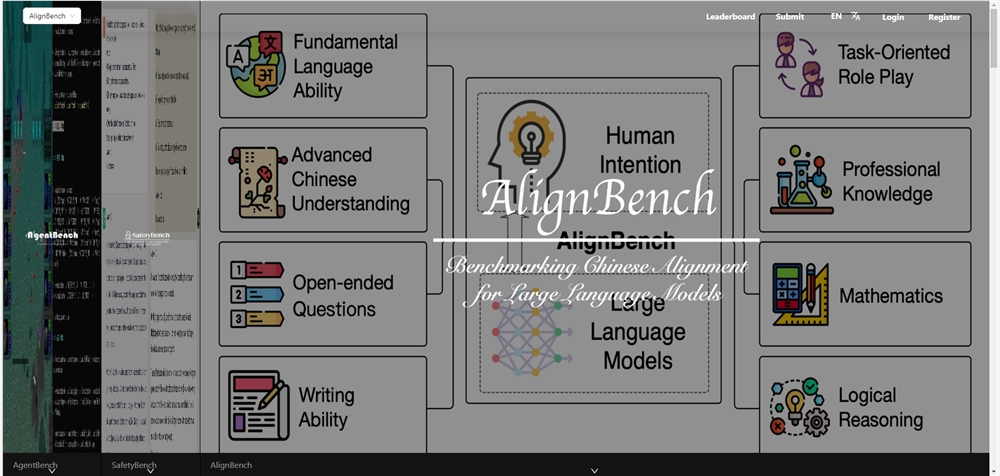

Zhipu AI has released AlignBench, an alignment evaluation benchmark designed specifically for Chinese large language models (LLMs). It is billed as the first alignment evaluation benchmark targeting Chinese LLMs, and it assesses how well a model's responses align with human intent across multiple dimensions.

AlignBench's dataset is drawn from real-world usage scenarios and passes through several steps (initial construction, sensitivity screening, reference answer generation, and difficulty filtering) so that the questions are both authentic and challenging. The dataset is divided into eight major categories, covering question types such as knowledge Q&A, writing generation, and role-playing.

To make evaluation automated and reproducible, AlignBench uses a scoring model (such as GPT-4 or CritiqueLLM) to rate each model's responses as a measure of answer quality. The scoring models apply multi-dimensional, rule-calibrated evaluation, which improves consistency between model scores and human ratings and produces a detailed analysis alongside each score.
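As a rough illustration of how scores from such a judge might be summarized, here is a minimal sketch that averages an overall score per question category. The record layout, the category and dimension names, and the function `aggregate_by_category` are all hypothetical and chosen for this example; they are not AlignBench's actual output format or aggregation method.

```python
from collections import defaultdict
from statistics import mean


def aggregate_by_category(judgments):
    """Average the judge's overall score for each question category.

    `judgments` is a list of dicts in a hypothetical layout:
    {"category": str, "dimensions": {name: score}, "score": number}
    """
    by_category = defaultdict(list)
    for judgment in judgments:
        by_category[judgment["category"]].append(judgment["score"])
    return {category: mean(scores) for category, scores in by_category.items()}


# Made-up judge outputs, for illustration only (not real benchmark results).
judgments = [
    {"category": "knowledge_qa", "dimensions": {"factuality": 8, "clarity": 7}, "score": 8},
    {"category": "knowledge_qa", "dimensions": {"factuality": 5, "clarity": 7}, "score": 6},
    {"category": "role_play", "dimensions": {"consistency": 9, "creativity": 9}, "score": 9},
]

category_scores = aggregate_by_category(judgments)
print(category_scores)
```

Keeping the per-dimension scores next to the overall score is what would let a reader trace a low category average back to the specific dimension that caused it.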

Developers can run AlignBench evaluations themselves using a high-performance scoring model such as GPT-4 or CritiqueLLM. To use CritiqueLLM as the scoring model, log in to the AlignBench website and submit results; evaluation results are typically available in about 5 minutes.

Try it: https://llmbench.ai/align