The Unstoppable Trend of AI Development: Global AI Status Report

For our increasingly digital and data-driven world, artificial intelligence serves as a force multiplier for technological advancement. Therefore, understanding the current state of AI development is crucial for our work. This "2023 AI Status Report" summarizes the current state of AI from various perspectives including research, industry, politics, and security, while also making predictions about AI developments over the next 12 months. We hope this report helps you stay informed about AI trends.

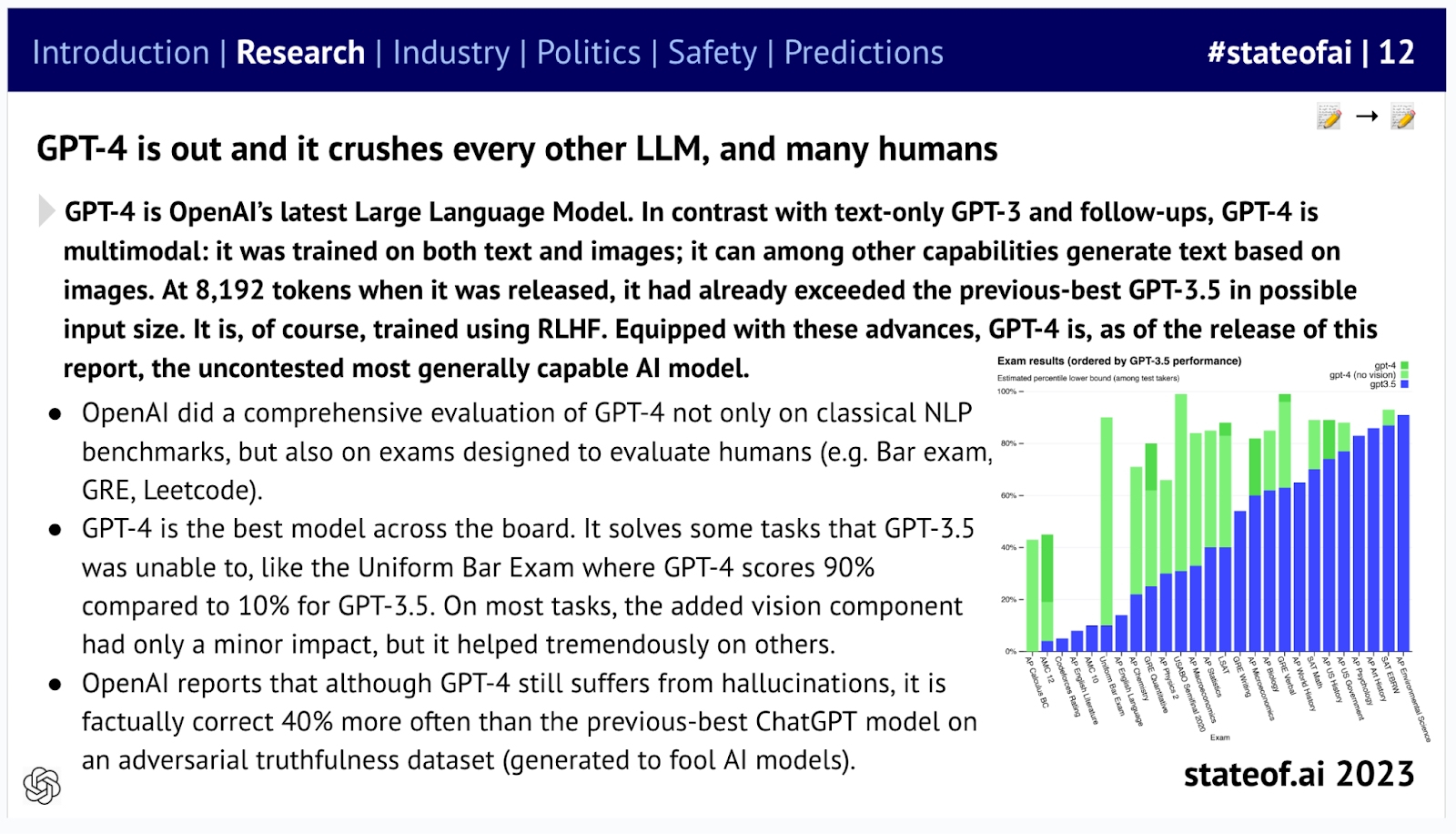

2023 has undoubtedly been the year of large language models (LLMs). OpenAI's GPT-4 astonished the world by outperforming all other LLMs—whether in classic AI benchmark tests or in exams designed for humans.

GPT-4's capabilities surpass other large models: OpenAI has tested it not only on classic natural language processing benchmarks but also on human assessment tests (such as the bar exam, GRE, LeetCode, etc.). GPT-4 also performs better than previous models in addressing hallucination issues.

Due to concerns about safety and competition, we have observed a decline in the openness of artificial intelligence. OpenAI has released only a very limited technical report on GPT-4, Google has disclosed little about PaLM 2, and Anthropic has not shared any technical details about either Claude or Claude 2.

Whether it's tech giants or startups, leading companies are beginning to obscure the details of their artificial intelligence technologies.

However, Meta AI and other companies have stepped up to keep the open-source flame burning by developing and releasing open-source LLMs that can rival many of GPT-3.5's capabilities.

Meta open-sourced LLaMA, sparking a competition in open-source large models. Building on these open-source models, some teams have begun fine-tuning them to develop applications for vertical domains.

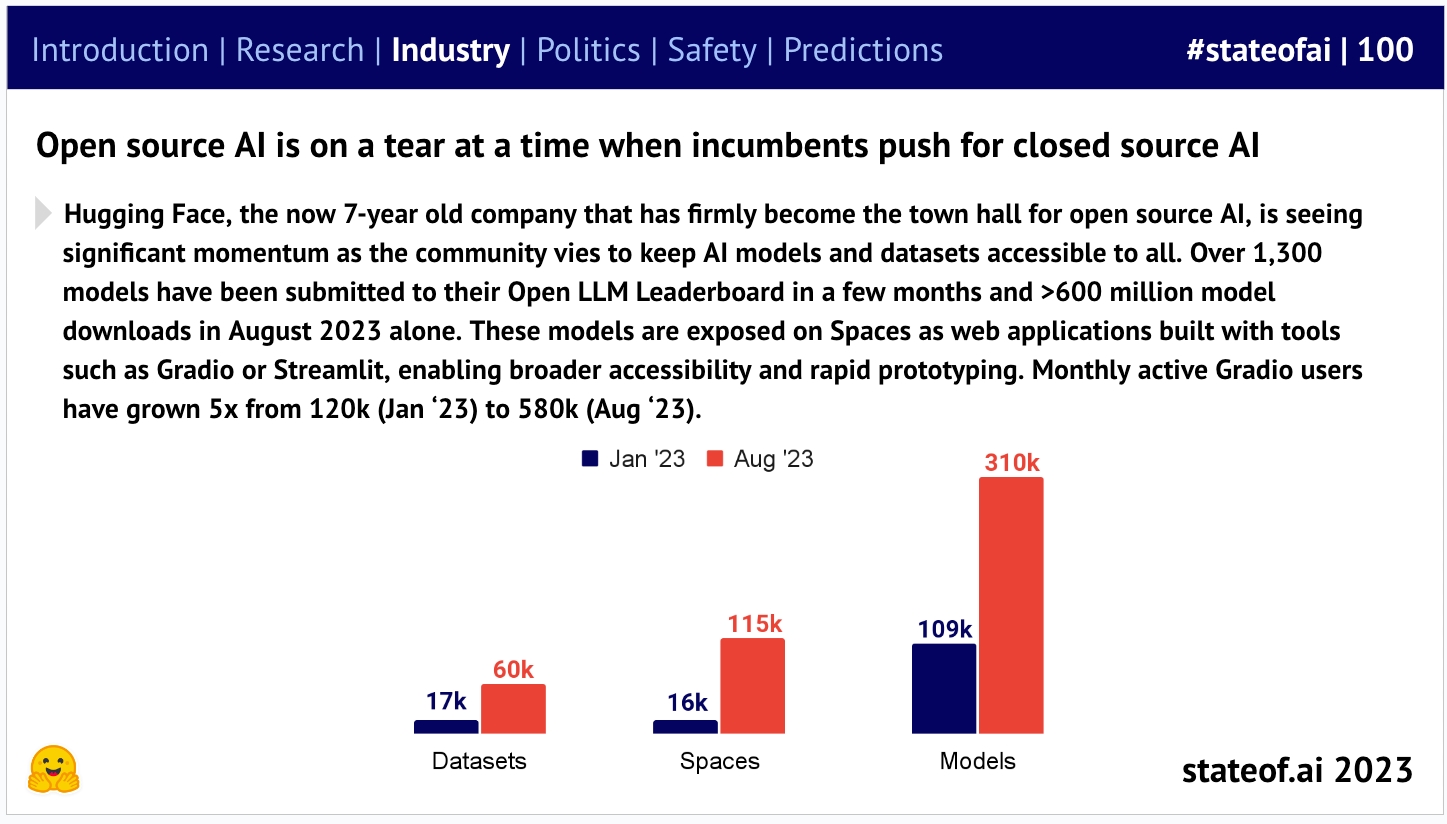

According to Hugging Face's leaderboard, open-source activity is more vibrant than ever, with downloads and model submissions soaring to historic highs. Notably, in the past 30 days, LLaMa models have been downloaded over 32 million times on Hugging Face.

Although there are many benchmarks (mostly academic) for evaluating large language models, the common denominator across these evaluation criteria, and perhaps the most significant scientific and engineering benchmark, is (user) 'resonance': the subjective impression a model leaves on the people who use it.

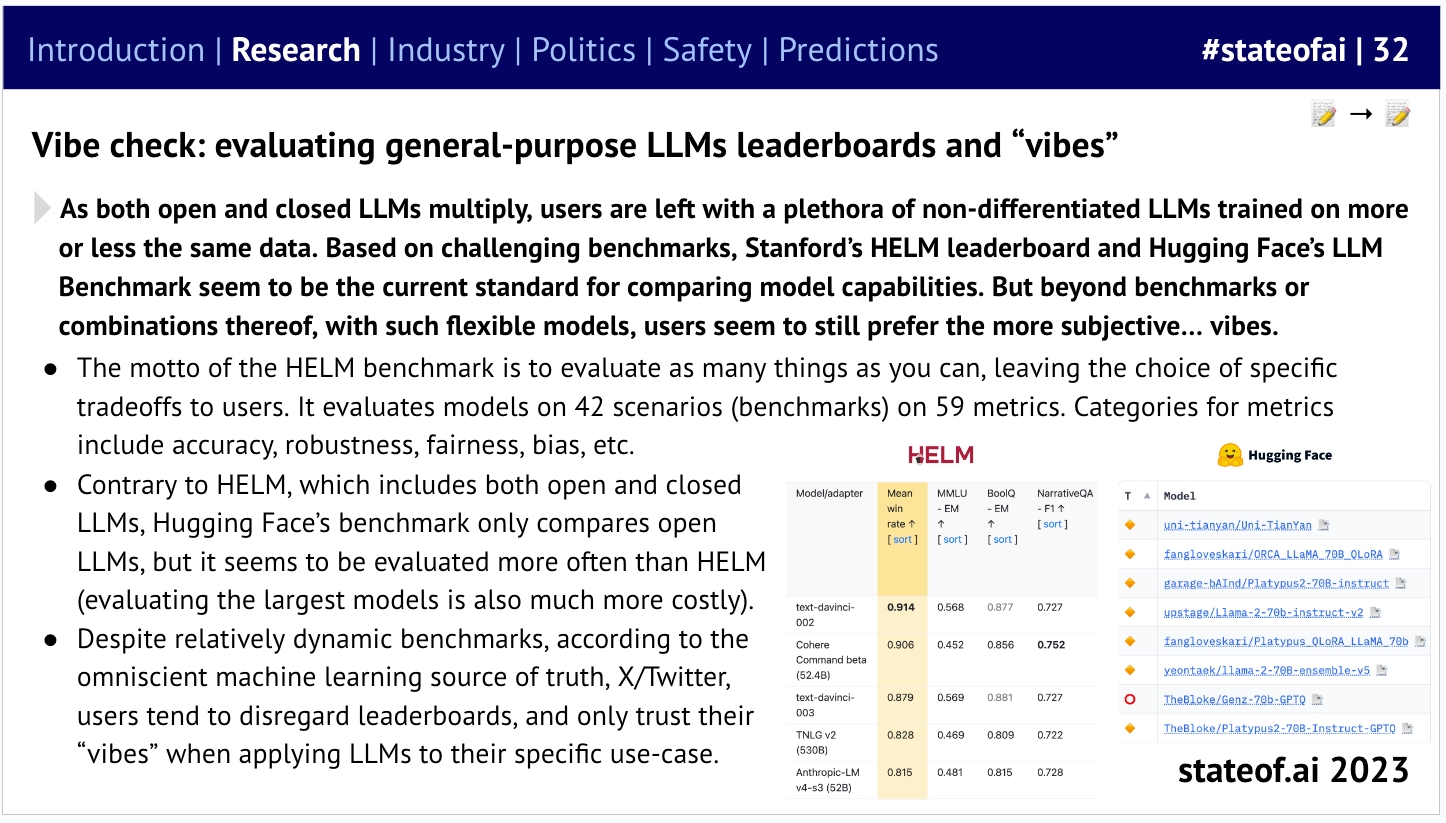

With the proliferation of open-source and proprietary large language models (LLMs) and the increasing similarity in their training data, differentiation between LLMs has become increasingly difficult, making model evaluation challenging. The current mainstream benchmarks for comparing model capabilities are Stanford's HELM leaderboard and Hugging Face's LLM Benchmark, but users seem to prefer more subjective evaluation methods: resonance.

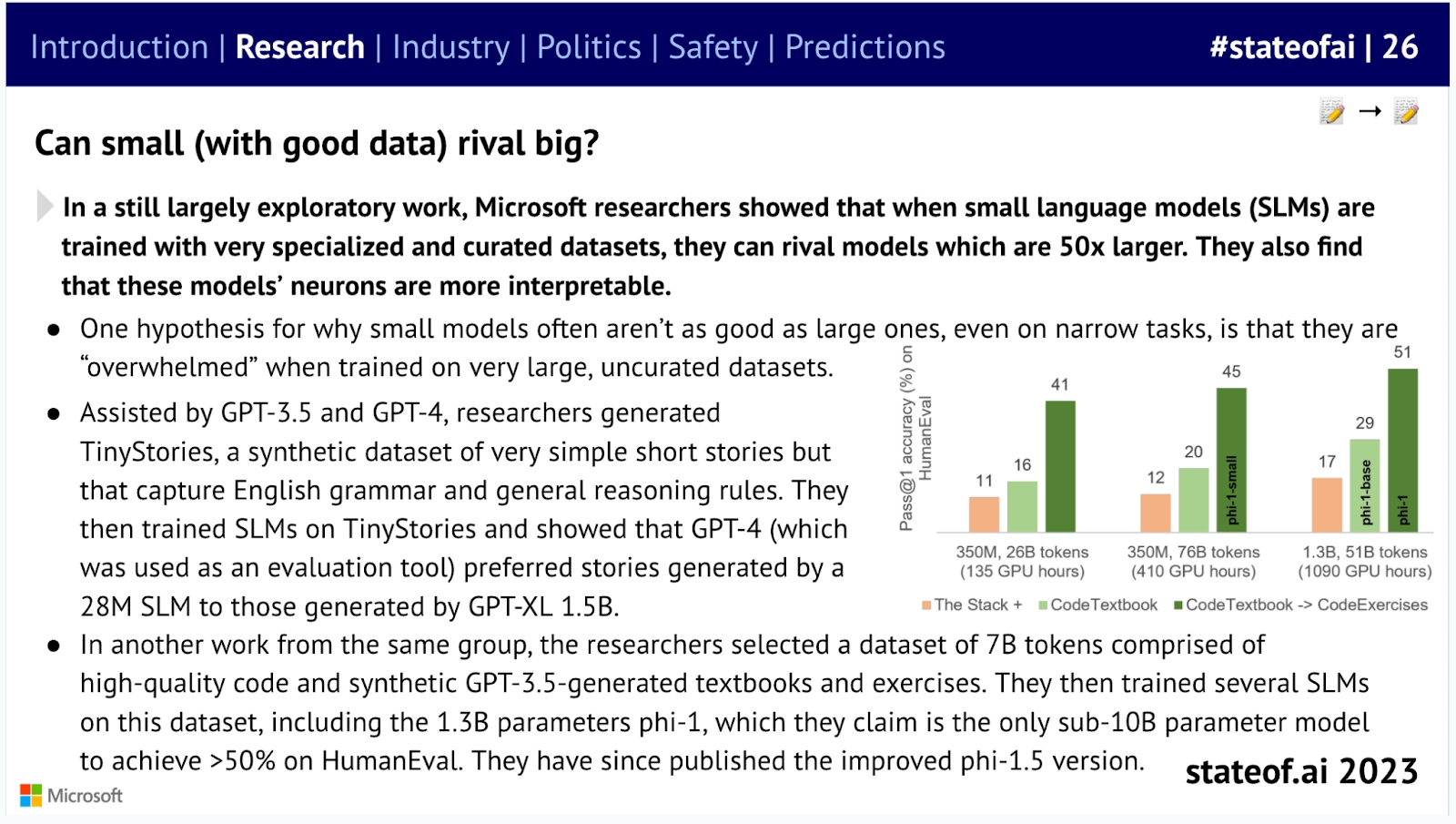

Beyond the excitement surrounding LLMs, researchers, including those at Microsoft, have been exploring the potential of small language models. They found that models trained on highly specialized datasets can rival models 50 times their size.

Microsoft discovered that small language models trained on very specialized and carefully curated datasets can compete with models 50 times their size.

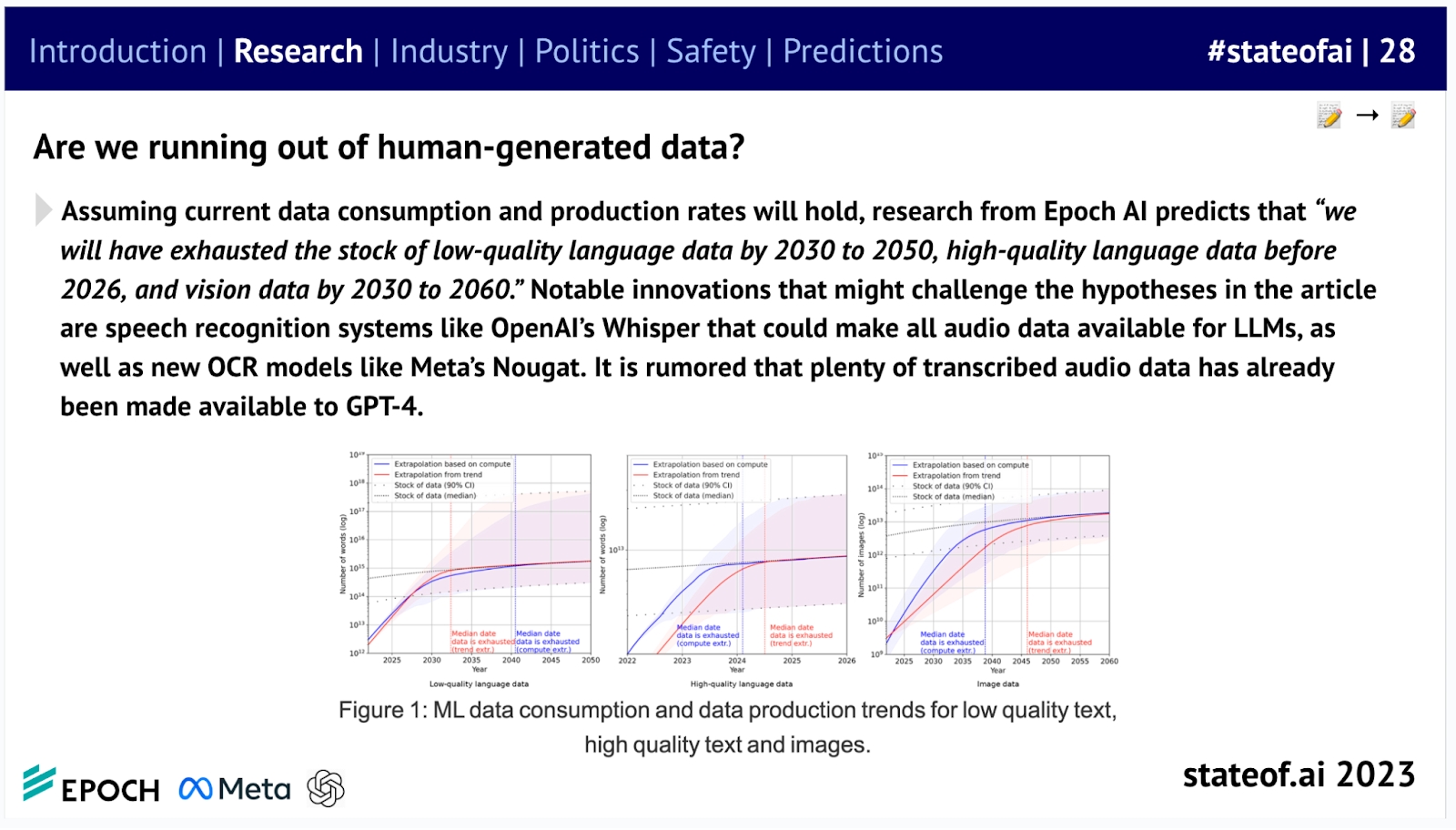

If Epoch AI's team is correct, this work may become more urgent. They predict that we risk exhausting high-quality language data within the next two years, which would force labs to explore alternative sources of training data.

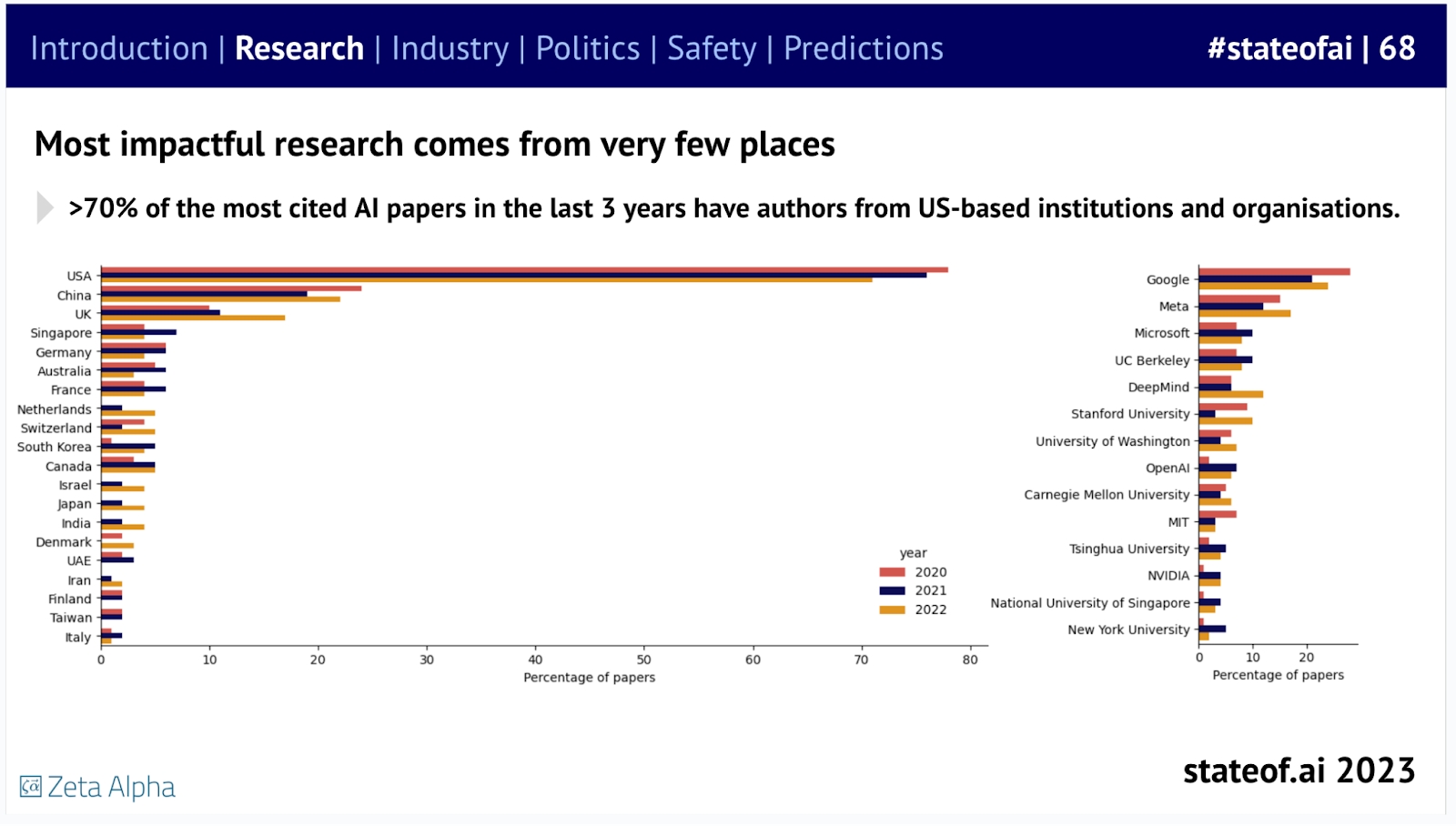

Zooming out, the United States still maintains its leading position, although that lead has gradually weakened in recent years. The vast majority of highly cited papers still come from a small number of American institutions.

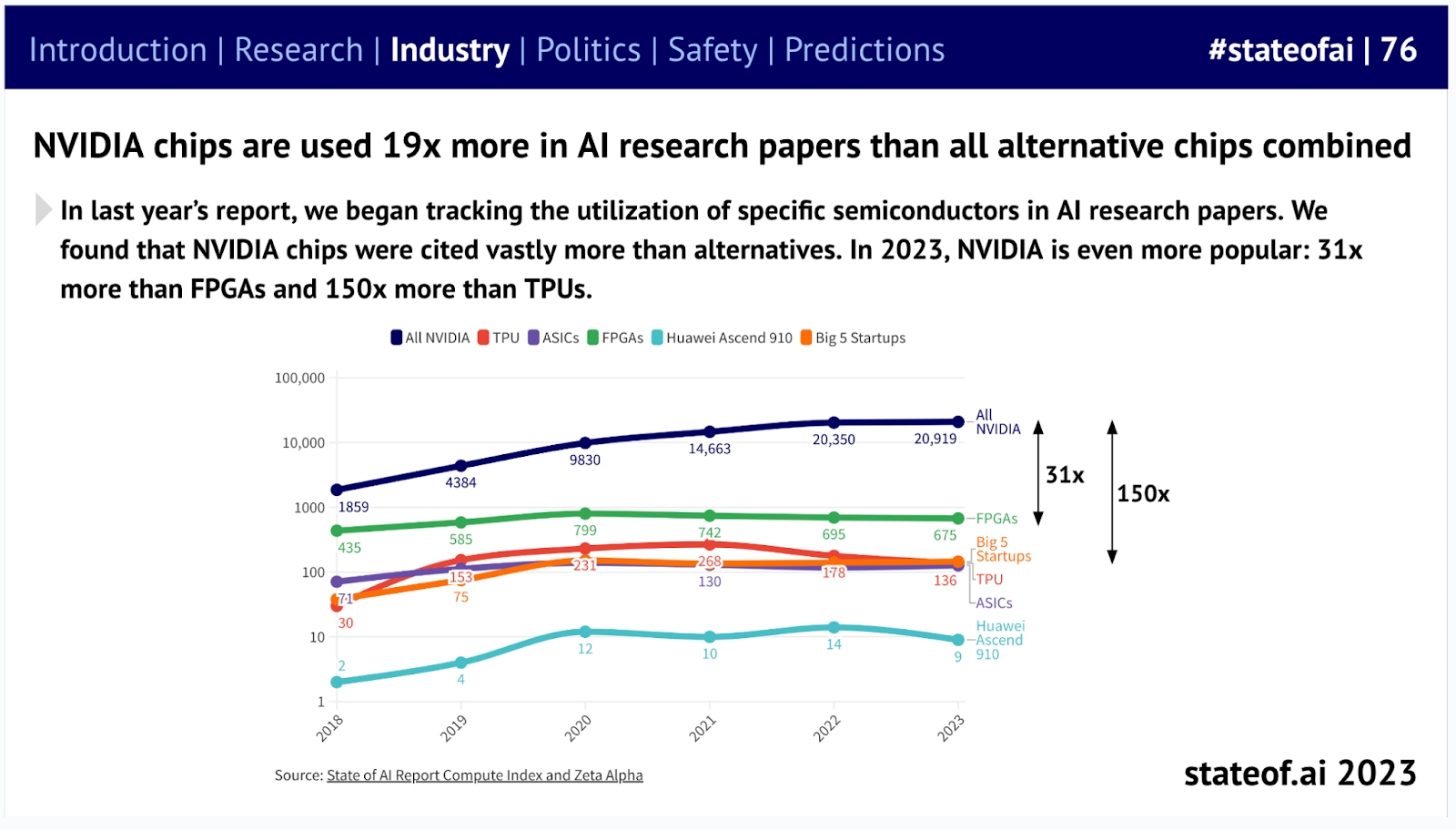

All these developments indicate that now is an excellent time to be in the hardware business, especially if you're NVIDIA. Demand for GPUs has propelled the company into the trillion-dollar market cap club, and its chips are used in AI research 19 times more often than all alternatives combined.

While NVIDIA continues to release new chips, their older GPUs demonstrate extraordinary lifecycle value. The V100, released in 2017, was the most popular GPU in AI research papers in 2022. This GPU might be phased out within 5 years, meaning it will have served for a decade.

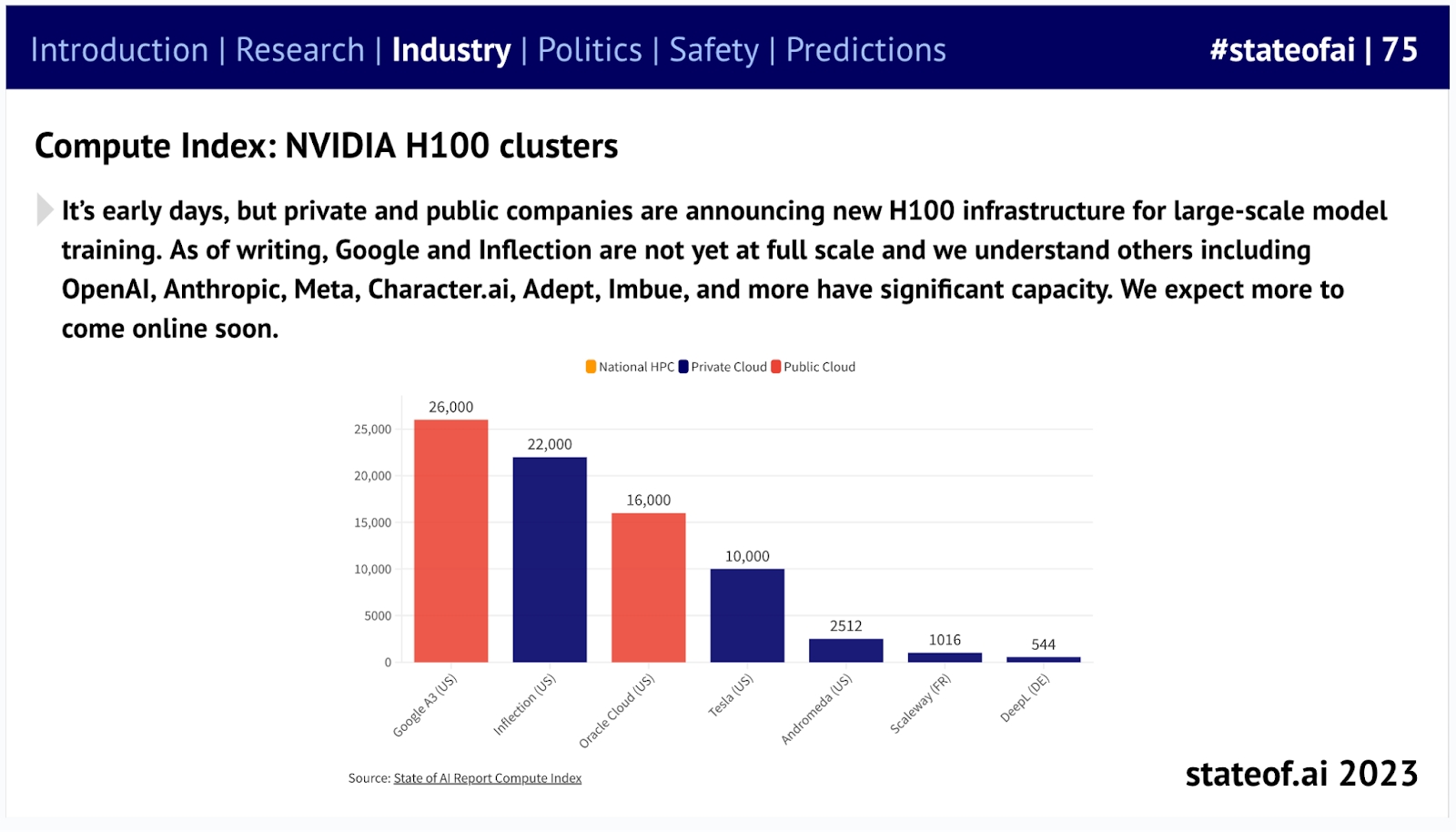

We've seen rapidly growing demand for NVIDIA's H100, with labs rushing to build large computing clusters, and more clusters are likely under construction. However, we've heard that these build-outs come with significant engineering challenges.

AI computing clusters built with NVIDIA's latest H100 GPUs; Google's largest A3 cluster uses 26,000 GPUs.

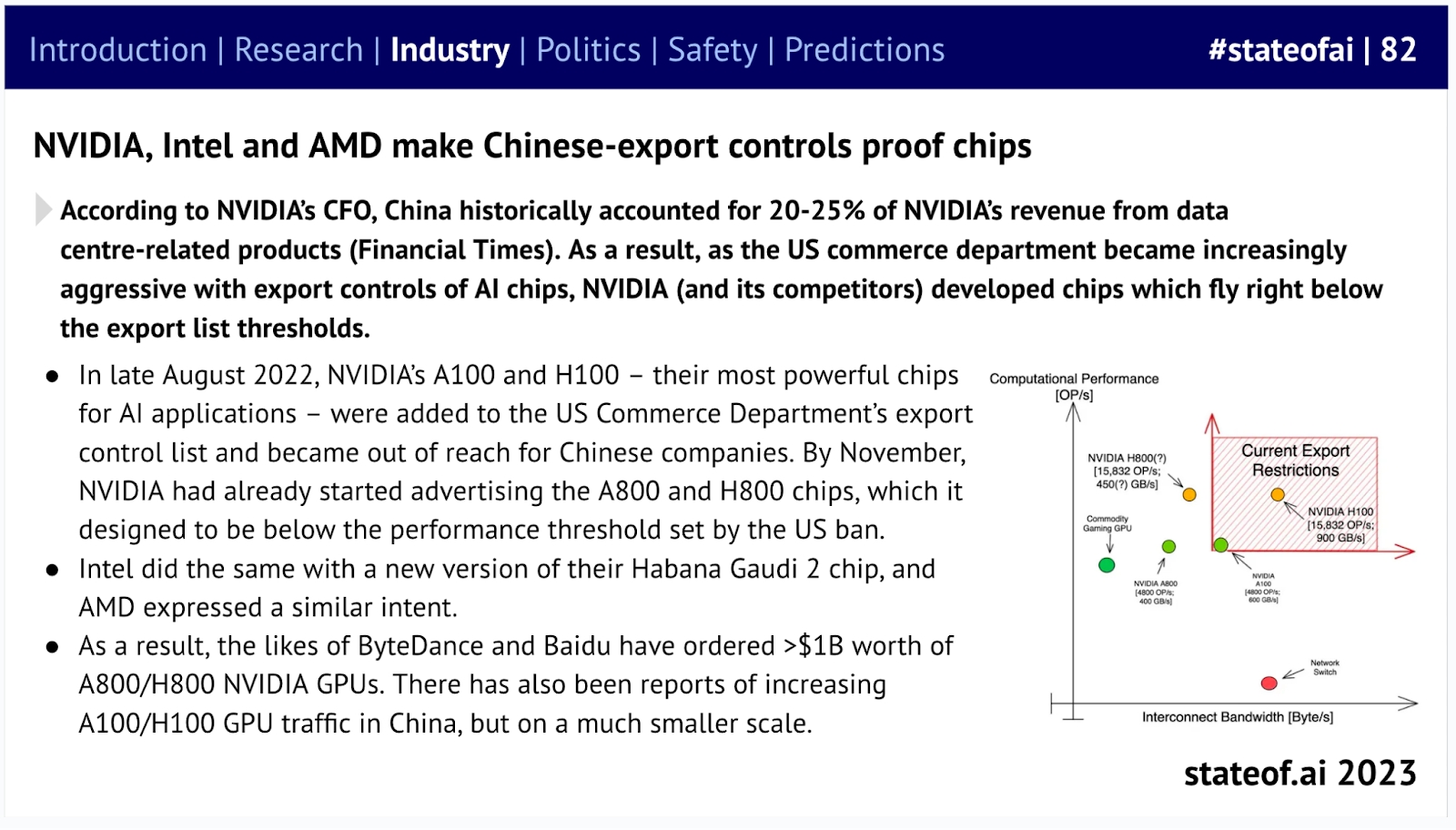

The "chip war" has forced industry adjustments, with NVIDIA, Intel, and AMD all developing special chips that comply with sanctions for their massive Chinese customer base.

Some of the chips in this image have since been added to the US control list.

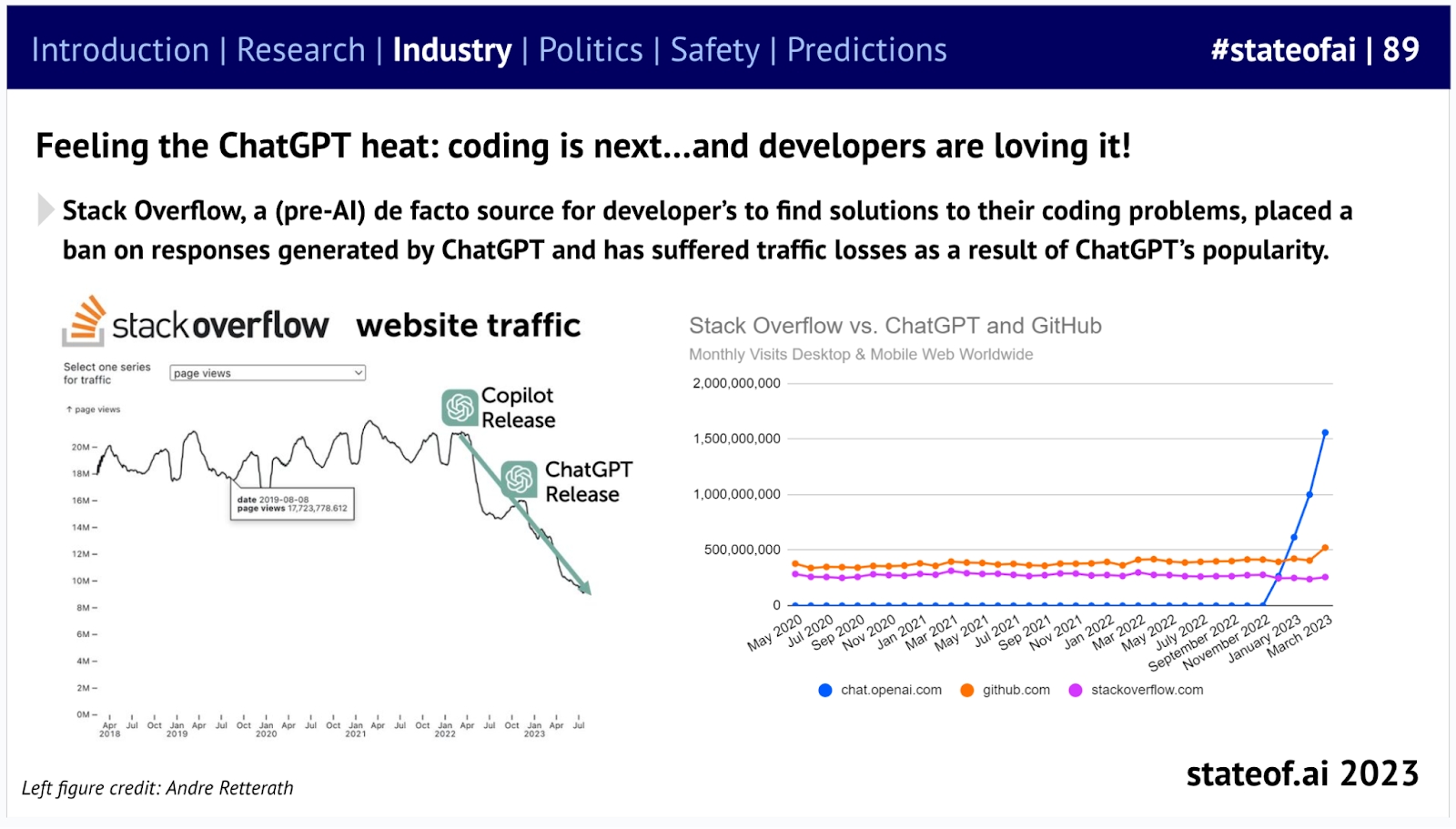

Perhaps the least surprising news is this: ChatGPT is one of the fastest-growing internet products in history. It has become particularly popular among developers, replacing Stack Overflow as the new go-to place for finding solutions when encountering coding problems.

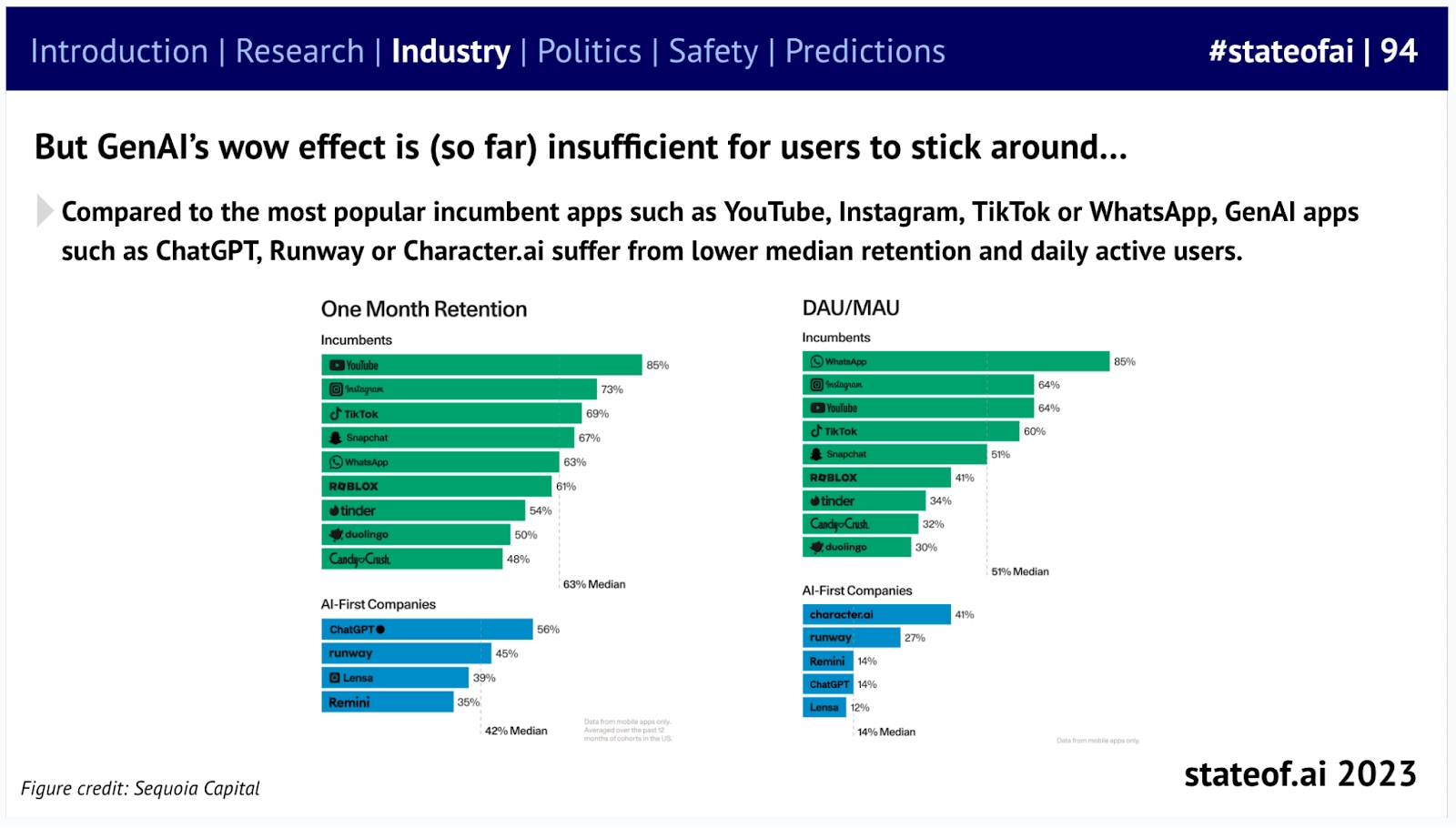

But according to data from Sequoia Capital, there are reasons to doubt the staying power of generative AI products—retention rates for various products, from image generation to AI companions, remain unstable.

The general public's interest in AI products seems to be a passing fad.

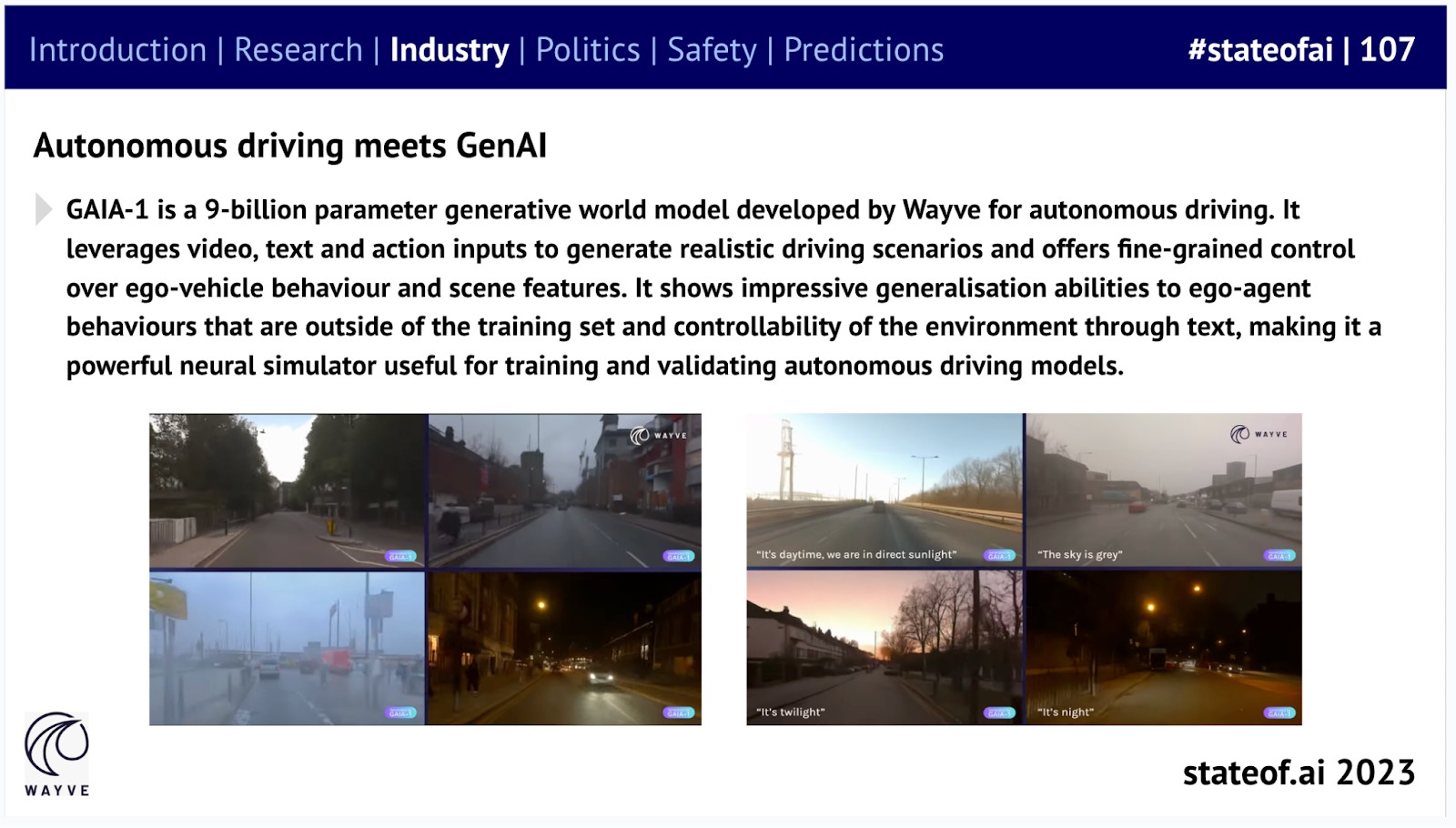

Beyond the consumer software sector, there are indications that generative AI can accelerate advancements in physical AI domains. Wayve's GAIA-1 demonstrates impressive versatility, serving as a powerful tool for training and validating autonomous driving models.

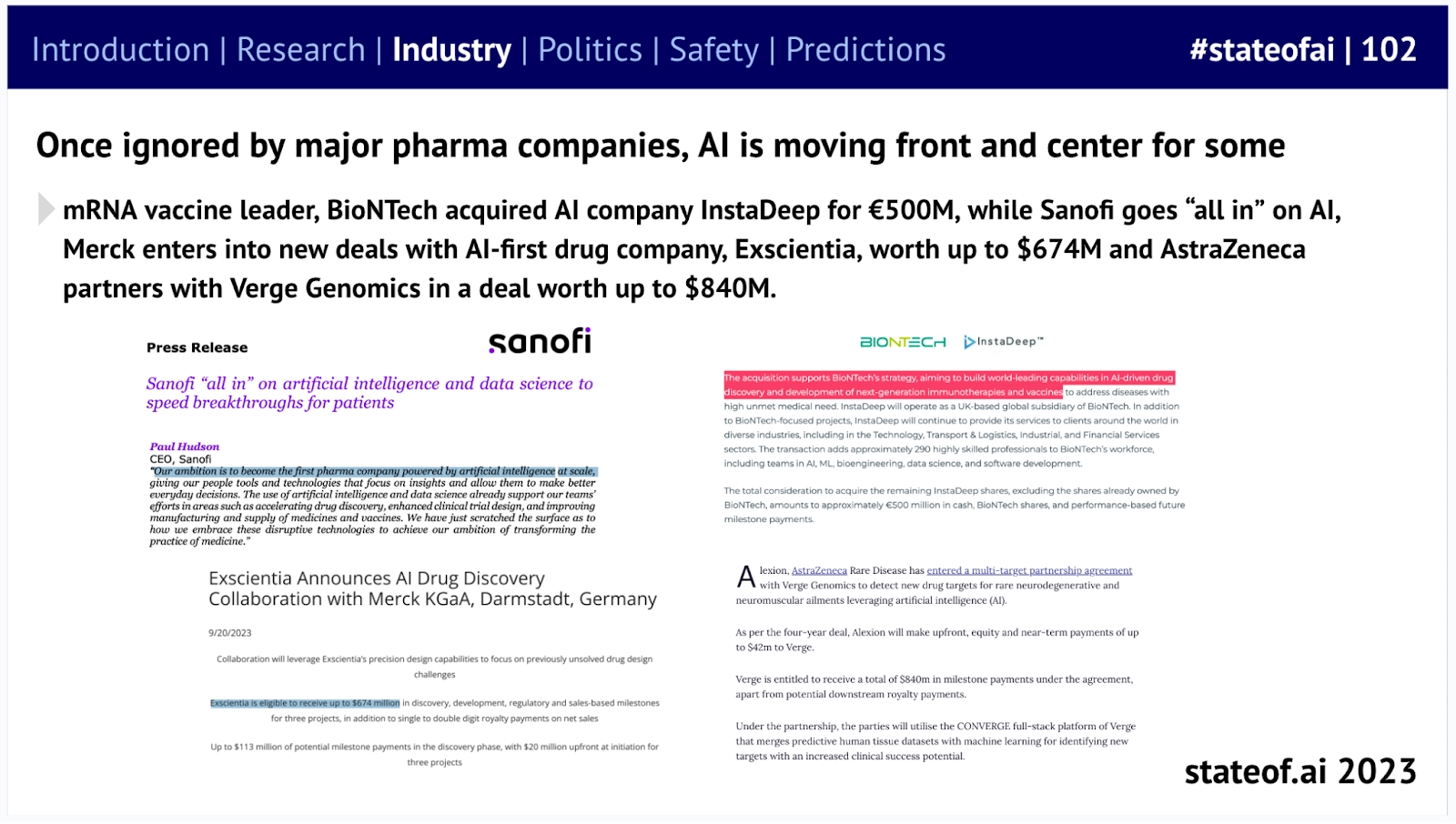

Beyond generative artificial intelligence, we are witnessing significant initiatives in industries that have long been striving to find suitable applications for AI. Many traditional pharmaceutical companies have gone all-in on artificial intelligence, striking multi-billion dollar deals with companies like Exscientia and InstaDeep.

As militaries rush to modernize their capabilities to address asymmetric warfare, the AI-first defense market is booming. However, conflicts between new technologies and established players are making it difficult for newcomers to gain a foothold.

Last year, US defense startups raised $2.4 billion in funding.

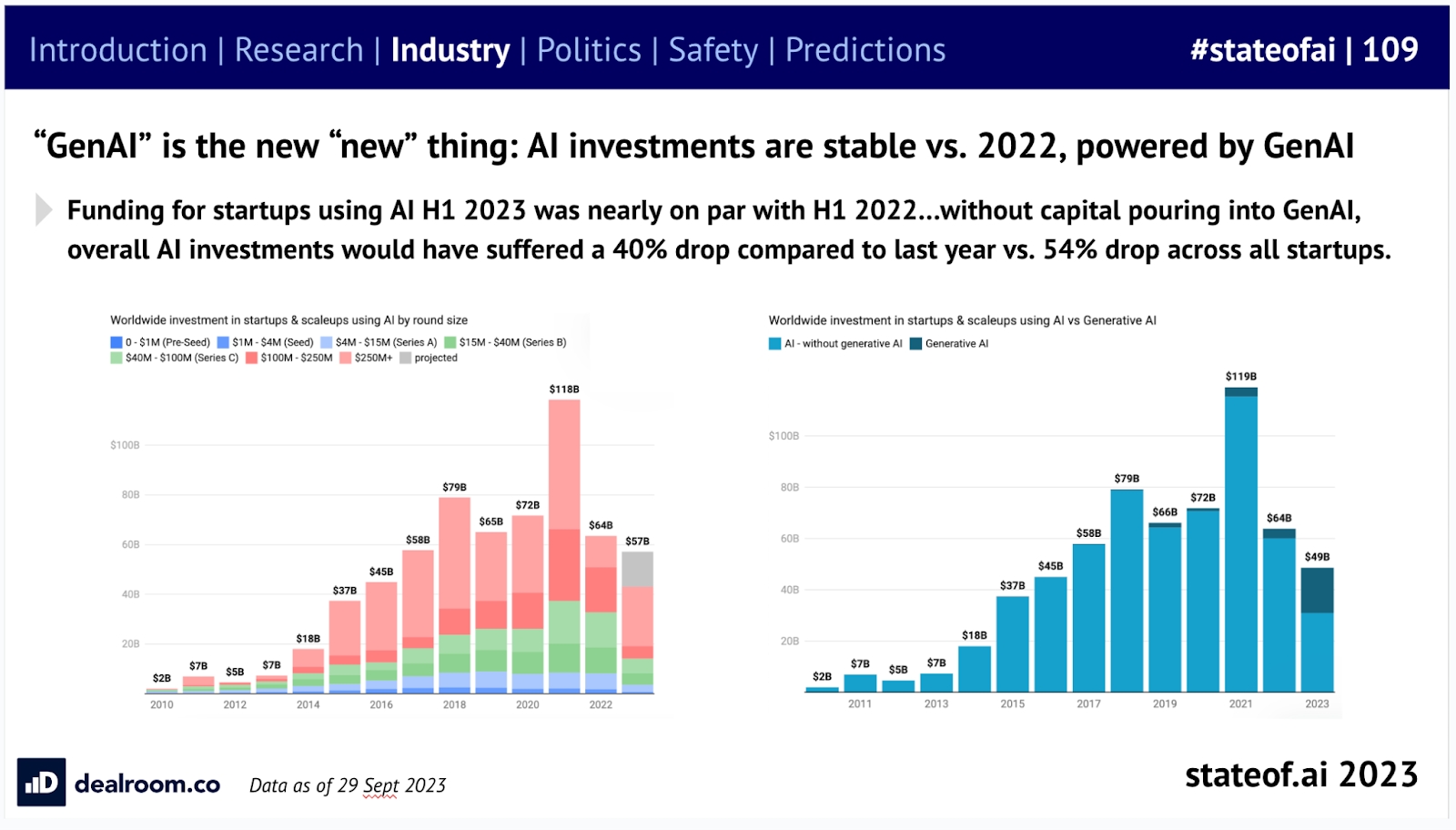

In addition to these successes, the venture capital industry has shifted its focus to generative AI, which has buoyed the tech private market including firms like Atlas. Without the market boom in generative AI, investments in AI would have declined by 40% compared to last year.

Global investments in AI have remained relatively stable, while generative AI has become the new darling of investors.

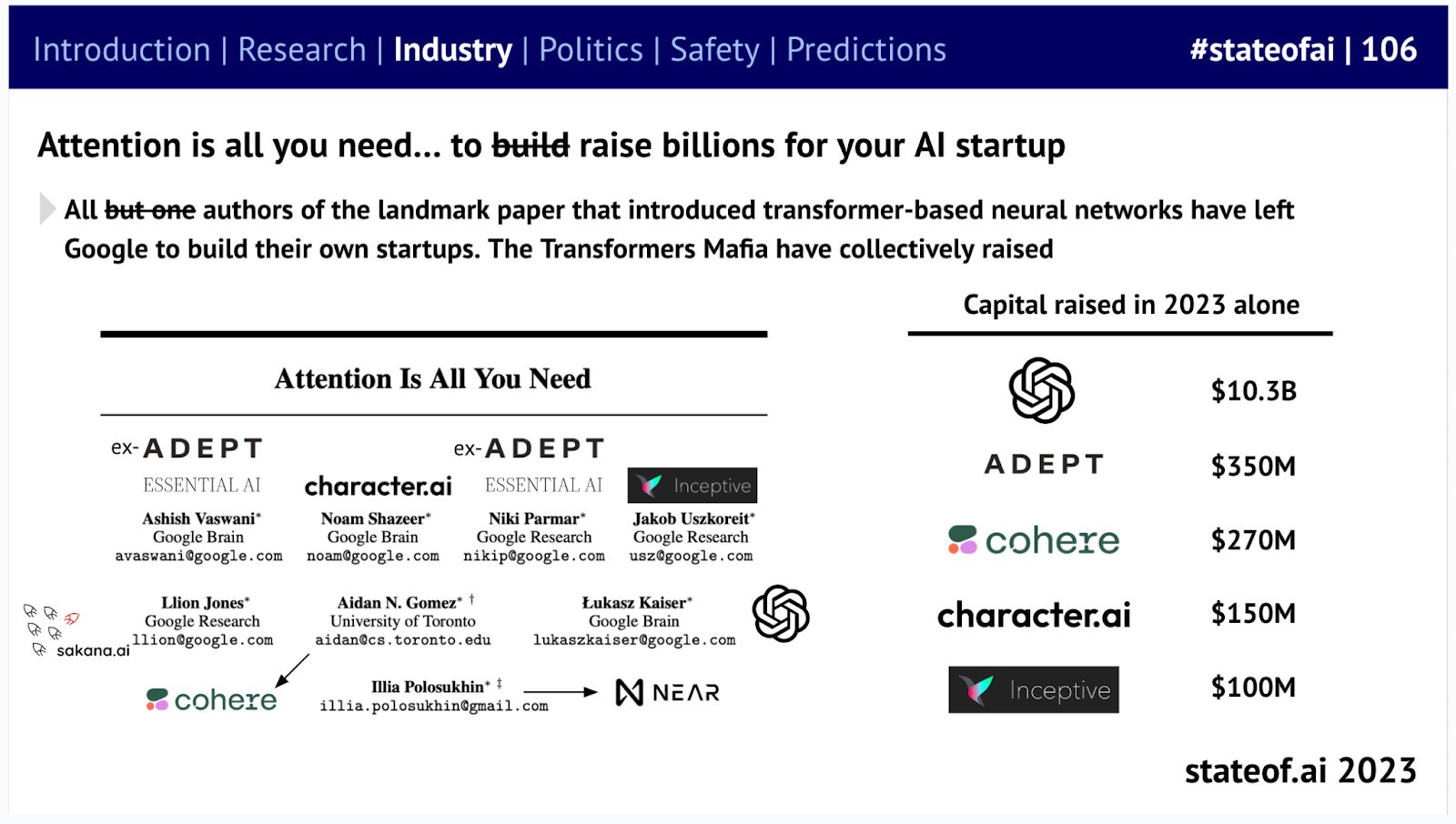

The authors of the seminal paper introducing the Transformer neural network are living proof—in 2023 alone, the "Transformer gang" secured billions of dollars in funding.

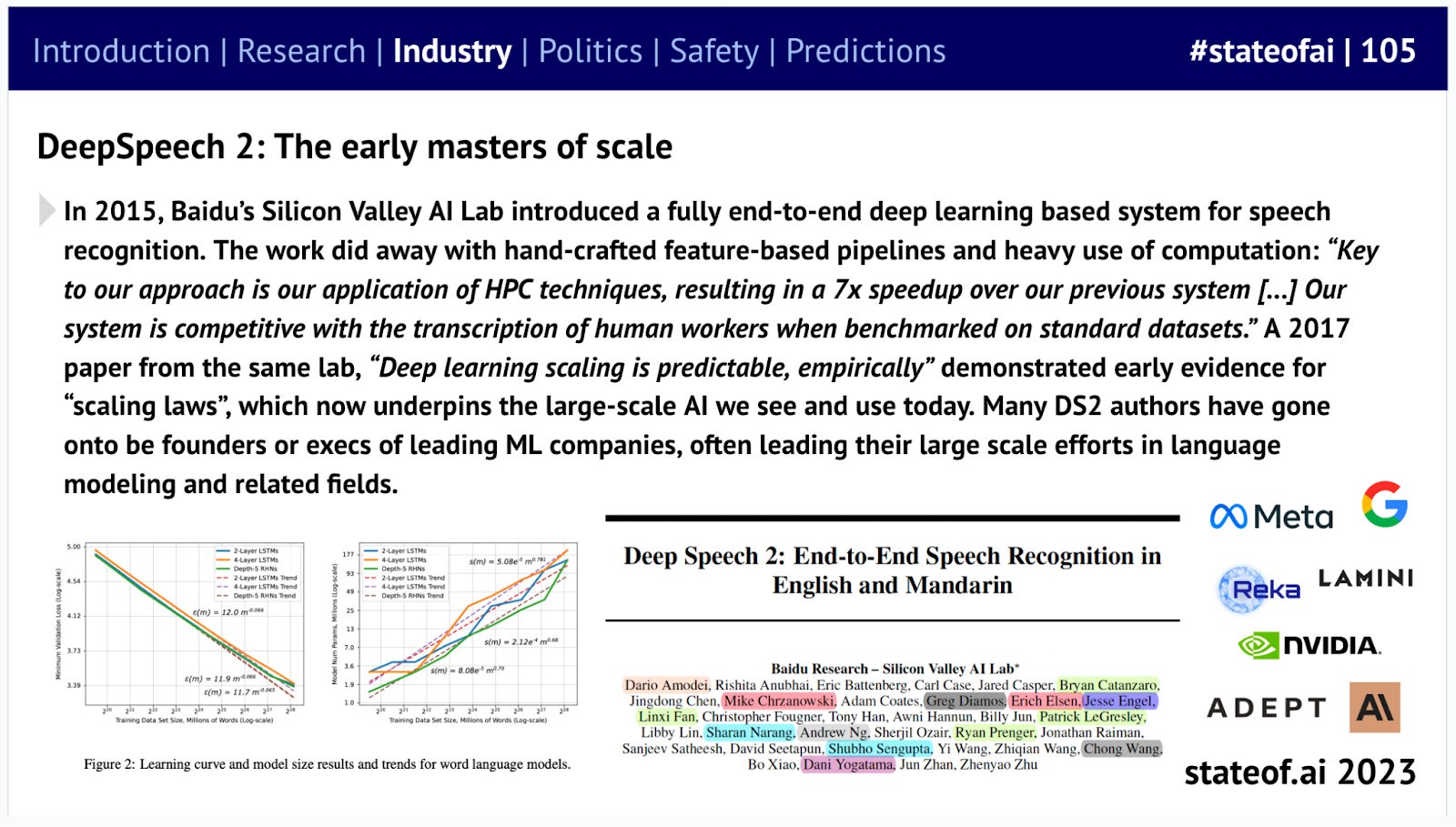

The same holds true for the DeepSpeech 2 team at Baidu's Silicon Valley AI Lab. Their work on deep learning for speech recognition demonstrated the scaling laws that now underpin large-scale AI. Most members of this team went on to become founders or senior executives at leading machine learning companies.

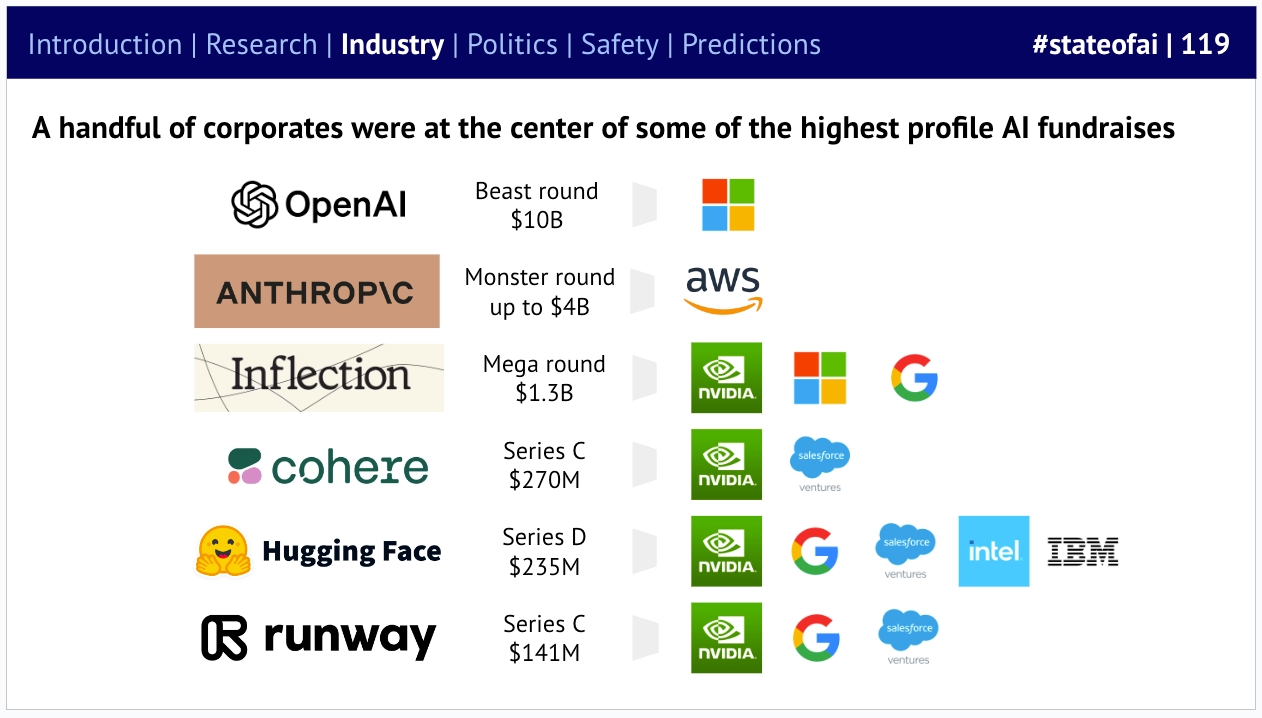

Many of the most eye-catching mega-financings were not led by traditional venture capital firms. 2023 was the year of corporate venture capital, with large tech companies effectively utilizing their war chests.

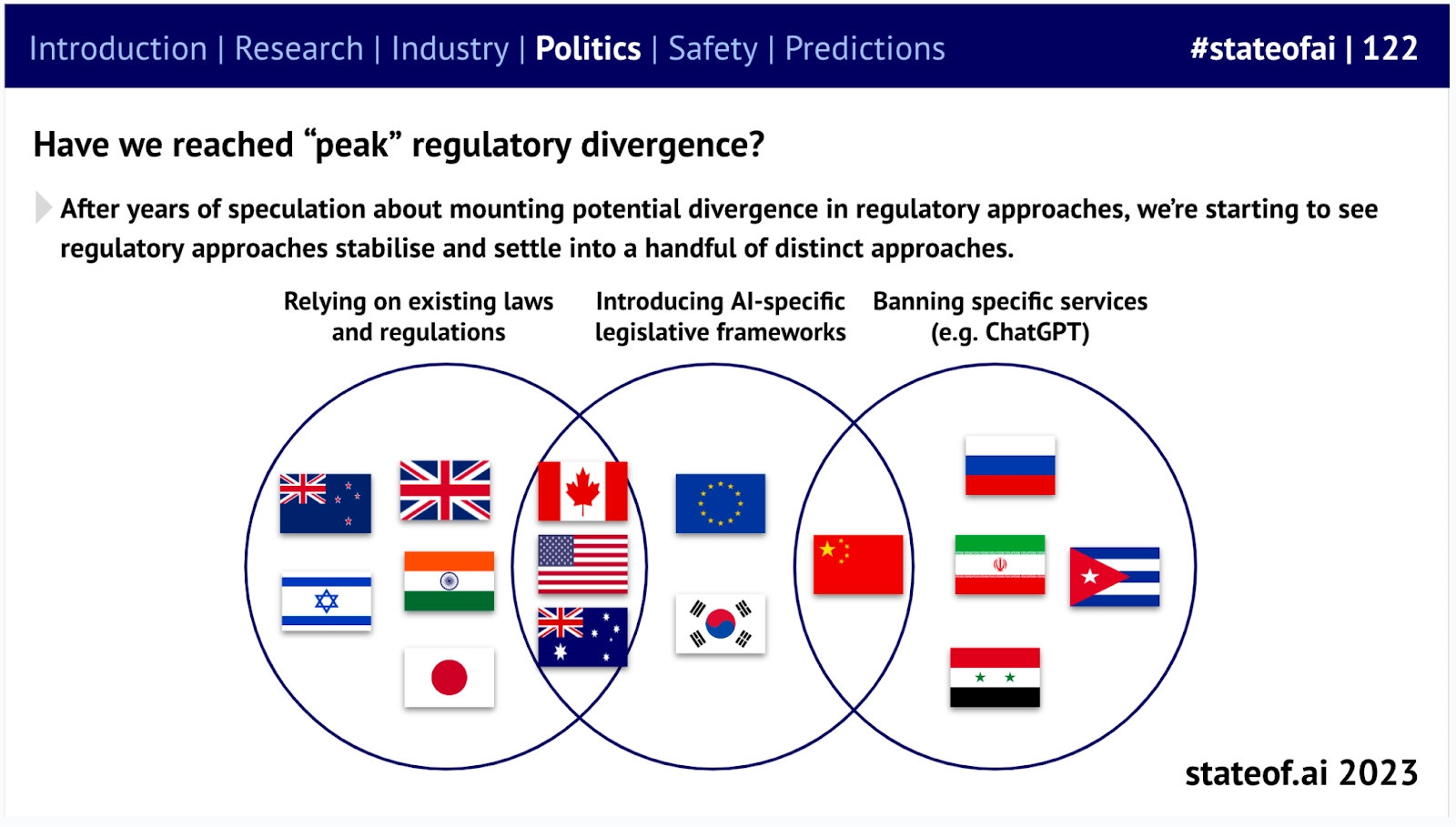

Not surprisingly, billions of dollars in investment, coupled with significant leaps in capabilities, have placed artificial intelligence at the top of policymakers' agendas. Several regulatory approaches are emerging around the world, ranging from lenient to strict.

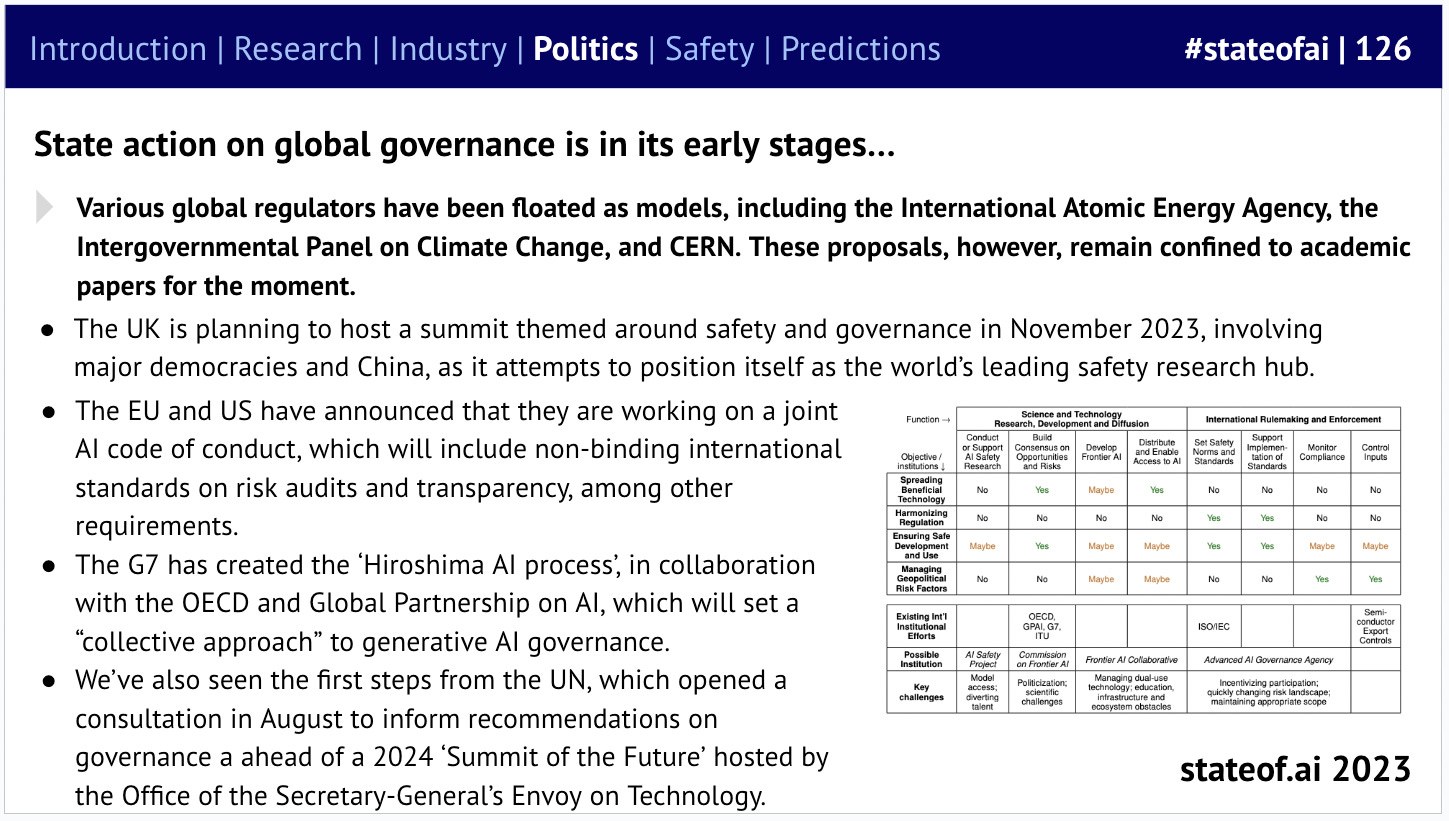

Numerous proposals for the global governance of artificial intelligence are already on the table, most of them put forward by global organizations. The UK AI Safety Summit, organized by Matt Clifford and others, may help turn some of these ideas into concrete plans.

As we continue to witness the power of artificial intelligence on the battlefield, these debate topics may become more urgent. The Ukraine conflict has become a laboratory for AI warfare, demonstrating that even relatively makeshift systems, when cleverly integrated, can produce devastating effects.

Another potential flashpoint is next year's U.S. presidential election. So far, the role of deepfakes and other AI-generated content has been relatively limited compared to traditional misinformation. However, low-cost, high-quality models could change this, prompting preemptive actions.

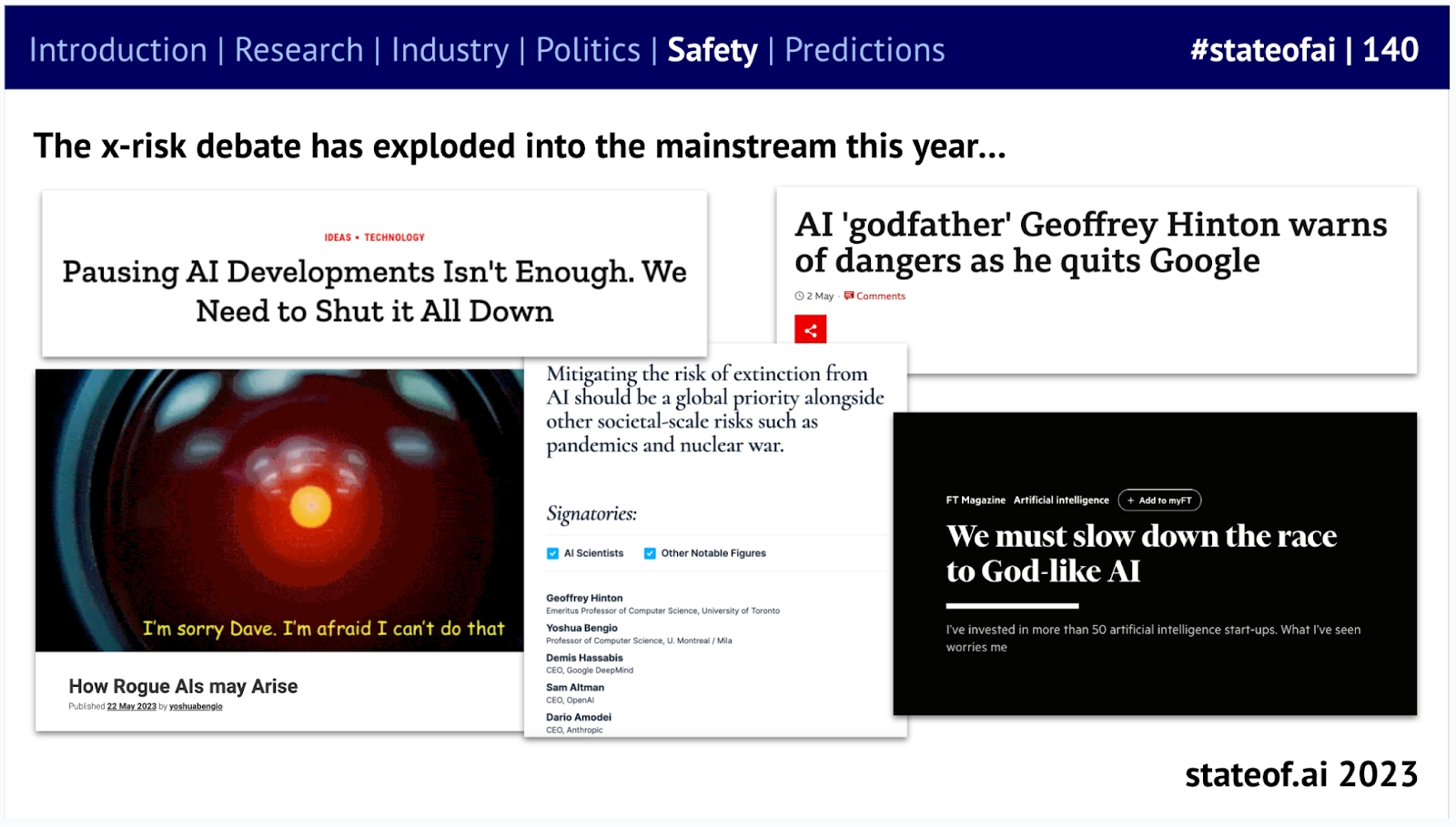

Previous AI status reports have warned that major labs may have overlooked AI safety. In 2023, debates raged about whether AI poses an existential risk to humanity, with researchers increasingly divided over open-source versus closed-source approaches, and extinction risks making headlines.

As artificial intelligence demonstrates astonishing capabilities, some experts have begun to voice concerns about existential risks to humanity.

Needless to say, not everyone agrees, with Yann LeCun and Marc Andreessen being prominent skeptics.

Experts are divided into two camps regarding extinction risks.

Unsurprisingly, policymakers are now becoming alarmed by the potential risks of artificial intelligence, even as they continue working to understand the field. The UK has taken the lead by establishing a dedicated Frontier AI Taskforce, led by Ian Hogarth, while the US has launched congressional investigations.

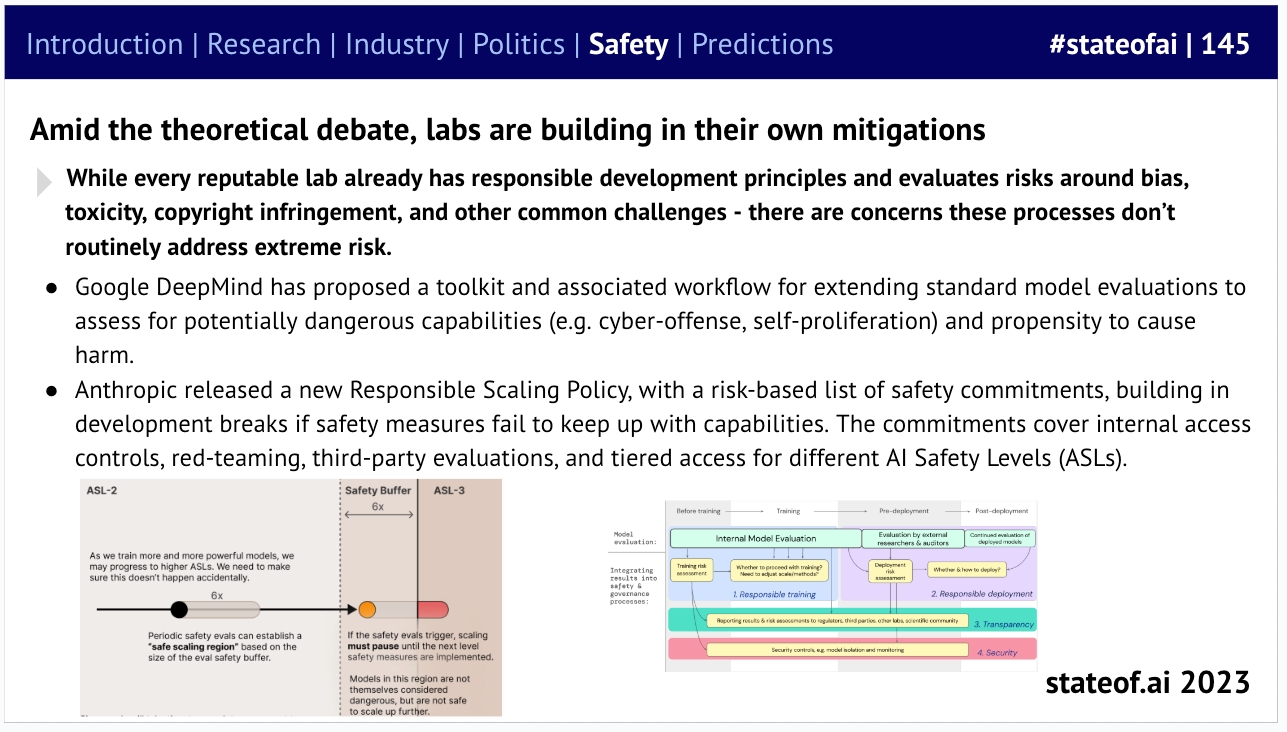

Despite ongoing theoretical debates, laboratories have already begun taking action. In terms of mitigating extreme risks from development and deployment, Google DeepMind and Anthropic are among the first companies to articulate detailed approaches.

Large-scale laboratories have already begun taking action to mitigate risks.

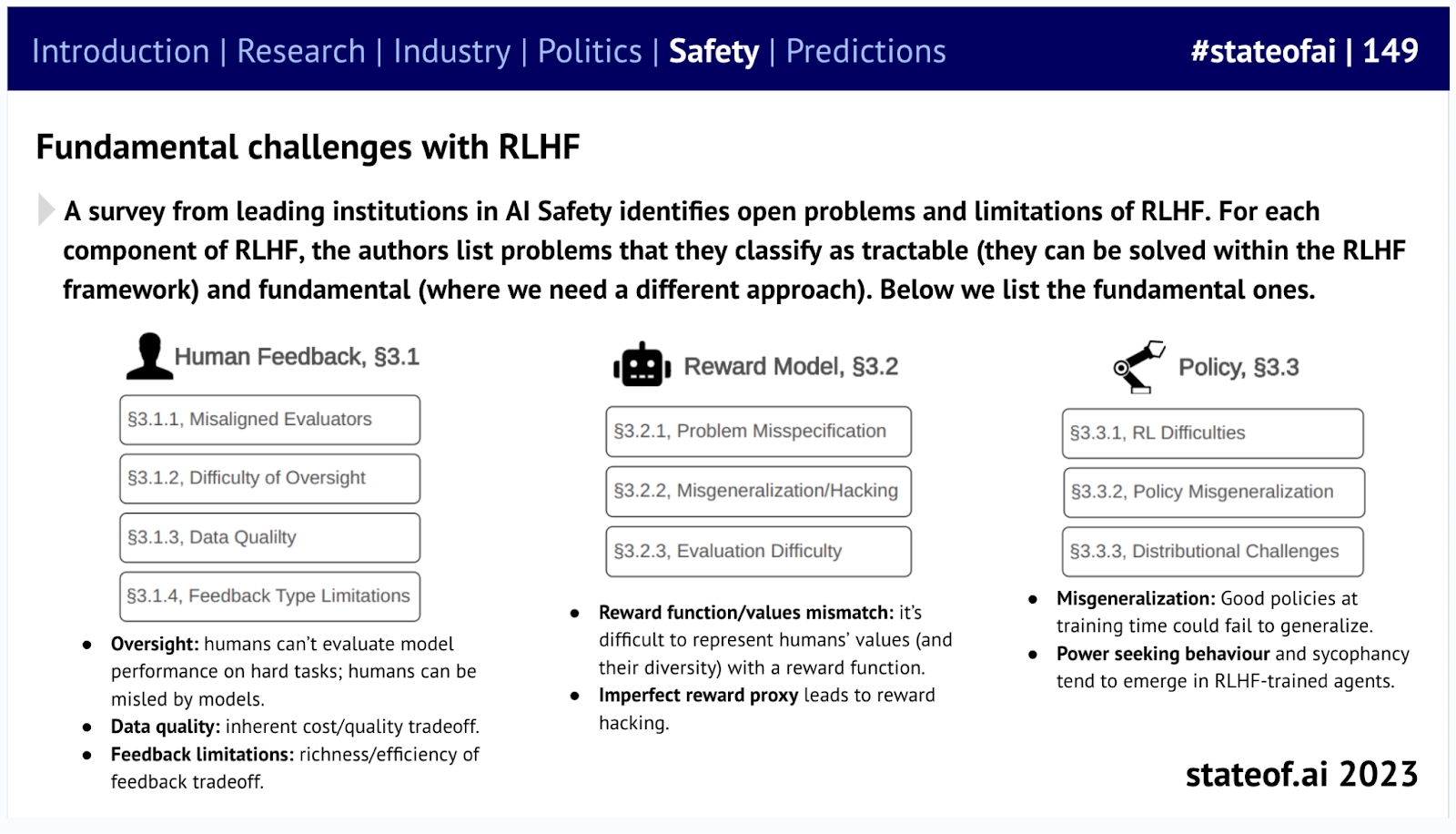

Even without involving the distant future, people have started raising challenging questions about technologies such as reinforcement learning from human feedback (which underpins technologies like ChatGPT).

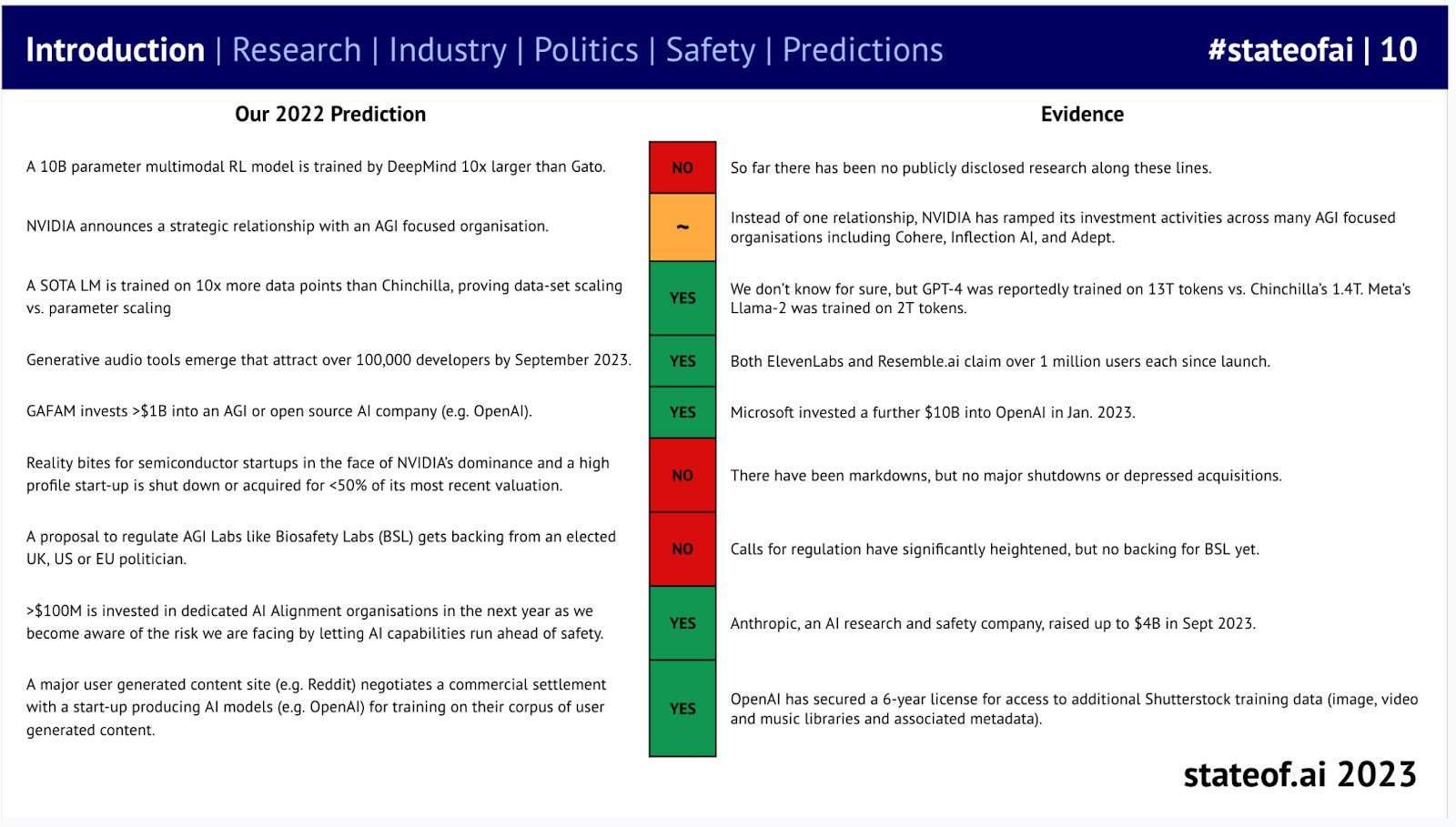

As always, in the spirit of transparency, we're keeping score on last year's predictions—our score is 5/9.

Predictions that held up: LLM training, generative AI/audio, major tech companies' full commitment to AGI R&D, alignment investments, and training data.

Predictions that missed: multimodal research, biosafety lab regulations, and the plight of half-baked startups.

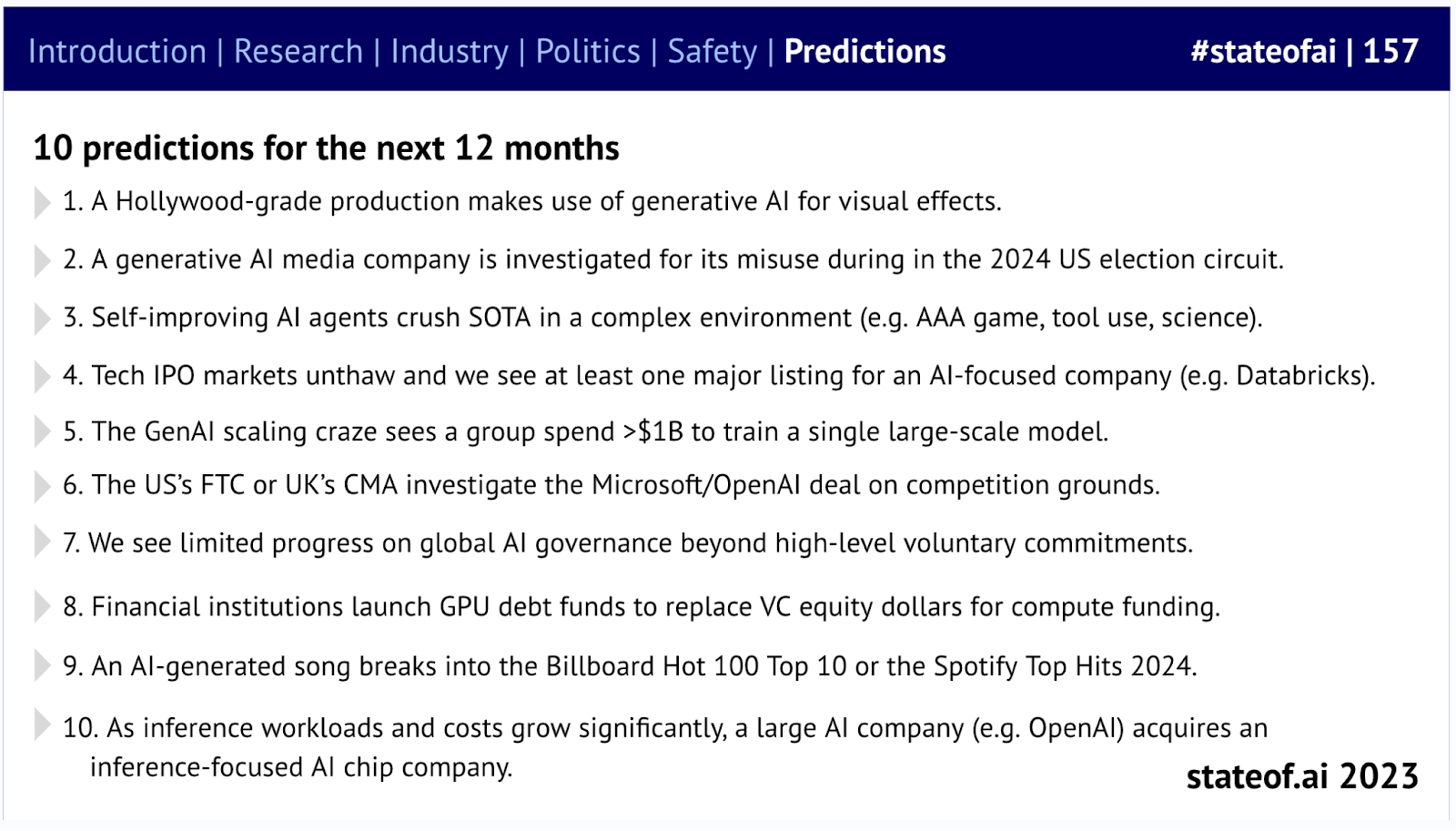

Here are our 10 predictions for the next 12 months! Including:

- Generative AI/film production
- Artificial Intelligence and Elections
- Self-Improving Agents
- The Return of IPOs
- Models Worth Over $1 Billion
- Competition Investigations
- Global Governance
- Banking + GPU
- Music
- Chip Acquisitions

Hollywood-level production will utilize generative artificial intelligence for visual effects.

A generative AI media company will be investigated for misuse of generation technology during the 2024 U.S. election

Self-improving AI agents will surpass the state of the art in complex environments (such as AAA games, tool usage, science, etc.)

The tech IPO market will thaw, with at least one AI-focused company going public (such as Databricks)

The training costs for generative AI large models may soar to over $1 billion

The U.S. FTC or UK's CMA will launch a competition investigation into the Microsoft/OpenAI deal

Global AI governance will make limited progress beyond voluntary actions

Financial institutions will launch GPU debt funds to finance computing power, replacing venture capital equity

AI-generated songs will make it into Billboard's Top 10 or Spotify Hits 2024

As inference workloads and costs surge, a large AI company (such as OpenAI) will acquire an inference-focused AI chip company