Alibaba's Tongyi Qianwen Goes Open Source: The Beginning of the 'Siphon Effect' in the Era of Large Models


On December 1, Alibaba Cloud held a press conference at which it officially released and open-sourced Qwen-72B, the 72-billion-parameter Tongyi Qianwen model it billed as the 'industry's strongest open-source large model.' At the same time, Tongyi Qianwen open-sourced the 1.8-billion-parameter Qwen-1.8B and the audio large model Qwen-Audio.

To date, Tongyi Qianwen has open-sourced four large language models with 1.8 billion, 7 billion, 14 billion, and 72 billion parameters, as well as two multimodal large models for visual and audio understanding, achieving 'full-size, all-modality' open source.

The press conference could fairly be called unusual, and not only because it drew wide attention. Judging from what was announced, this year's long-running 'hundred-model battle' and the debate over which path large models should take have found a preliminary answer. Driven by Alibaba Cloud's 'no openness, no ecosystem' strategy, Tongyi Qianwen, as the most widely adopted and applied large model in China, aims to carry forward the role Alibaba Cloud played as an innovation foundation in the 'pre-AI era,' opening up the AI foundation so that the ecosystem built on top of it can flourish.

1

Alibaba Cloud's Open Logic

This is not Alibaba Cloud's first move in open-sourcing large language models. As one of the first major Chinese tech companies to open-source its in-house large models, Alibaba Cloud hopes open source will let small and medium-sized enterprises and AI developers adopt Tongyi Qianwen sooner and faster.

In August this year, Alibaba Cloud open-sourced Qwen-7B, the 7-billion-parameter Tongyi Qianwen model. In September, the 14-billion-parameter Qwen-14B and its conversational variant Qwen-14B-Chat were also released, free for commercial use.

At this conference, Alibaba Cloud CTO Zhou Jingren said that the open-source ecosystem is crucial to advancing both the technology and the real-world application of large models in China. Tongyi Qianwen will continue to invest in open source, aiming to become "the most open large model in the AI era" and to work with partners to advance the large model ecosystem.

The potential of large models goes without saying. However, as in the history of foundational software, large models can follow two development paths, open source and closed source, much like the "iOS vs. Android" competition in smartphones.

Internationally, OpenAI has chosen a closed-source path. Beyond ChatGPT it offers few end-user products, instead building its ecosystem through API access and investments. Meta, by contrast, open-sourced its large model Llama2, relying on the open-source community to accelerate its iteration and upgrades. Domestically, among the BAT trio (Baidu, Alibaba, Tencent), Tencent Cloud and Baidu Cloud have adopted closed-source strategies for their large models, while Alibaba Cloud has chosen the open-source route.

The industry is thus divided into two camps. Proponents of closed-source models argue that this approach yields more mature and stable products that customers can use as soon as they pay, backed by more professional technical support and services.

Advocates for open-source large models believe that open source simplifies the model training and deployment process for users. Instead of training models from scratch, users can download pre-trained models, fine-tune them, and quickly develop high-quality models or applications.

“The debate over whether large models should be open-source or closed-source is essentially tied to the question of ‘ecosystem first, commercialization later’ versus ‘commercialization first, ecosystem later’,” an industry insider told Jiemian News·Bullet Finance. Historically, leading tech service providers have typically built ecosystems and applications first before focusing on business models, and this pattern continues with large models today.

As Zhou Jingren recently stated, “Large models should prioritize ecosystem development before commercialization, rather than overemphasizing commercialization from the outset.” The foundation for building an ecosystem is openness.

At this year’s Yunqi Conference, Joseph Tsai, Chairman of Alibaba Group, repeatedly emphasized the keyword “openness”: “We firmly believe that without openness, there is no ecosystem, and without an ecosystem, there is no future. At the same time, we must continue to push the boundaries of technology. Only by standing on more advanced and stable technological capabilities can we have the confidence to embrace greater openness.”

Unlike other leading companies, Alibaba has openness in its technological DNA: it has developed and open-sourced its own projects in operating systems, cloud-native technologies, databases, big data, and other fields.

Additionally, in November last year, Alibaba launched the AI open-source community 'ModelScope'. According to Alibaba, nearly all major large-model R&D institutions in China have chosen 'ModelScope' as their primary platform for open-sourcing models. After a year of development, 'ModelScope' now has 2.8 million developers and more than 2,300 high-quality models, and model downloads have exceeded 100 million.

Moreover, Alibaba Cloud's abundant computing resources are another key reason for its choice to open-source. Both cloud and AI depend heavily on computing power, and large models are especially demanding. Alibaba's strength lies in cloud computing, including data, computing power, and storage, which are the critical underlying resources.

Alibaba Cloud's releases and showcases this year make it evident that the company has full-stack AI capabilities, along with an increasingly comprehensive Tongyi large model series. Computing power remains indispensable to such a capability system. This is also why, in the era of large models, the MaaS (Model as a Service) layer has become the most critical business segment for leading cloud service providers.

Internationally, cloud giants such as Microsoft have also expanded their MaaS offerings for open-source models, linking the upstream, midstream, and downstream of the industry chain into a large-scale, platform-based ecosystem.

Alibaba Cloud's future role will be similar: building on its cloud platform, it will leave the building and application of large models to industry players, connecting numerous enterprises and individual developers into a new ecosystem.

Seen this way, the logic behind Alibaba Cloud's open-sourcing of large models becomes clearer: deliver technical products through open source, lower barriers to entry, make the technology broadly accessible, and offer diverse, comprehensive technical services to enterprise clients and individual developers. The more large and small models are built on the foundation of Tongyi Qianwen, the more prosperous the AI ecosystem will be, and the broader Alibaba Cloud's prospects will become.

2

"Standing on the Shoulders of Giants"

According to reports, the newly open-sourced Tongyi Qianwen Qwen-72B features high performance, high controllability, and cost-effectiveness, providing the industry with an option comparable to commercial closed-source large models.

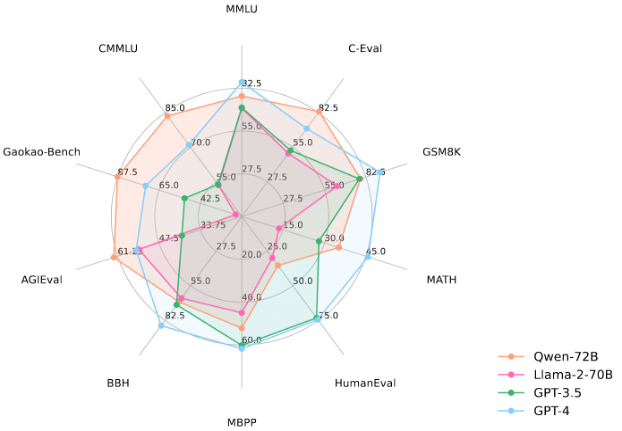

According to the performance data, Qwen-72B achieved the best scores among open-source models on 10 authoritative benchmarks, including MMLU and AGIEval, establishing itself as the most powerful open-source model. It even outperforms Llama2-70B, the open-source reference point, as well as most commercial closed-source models (with some scores surpassing GPT-3.5 and GPT-4).

Based on Qwen-72B, large and medium-sized enterprises can develop various commercial applications, while universities and research institutions can conduct scientific research such as AI for Science.

Spanning 1.8 billion, 7 billion, 14 billion, and 72 billion parameters, Tongyi Qianwen has not only become the industry's first "full-size open-source" large model but has also been widely welcomed by the public.

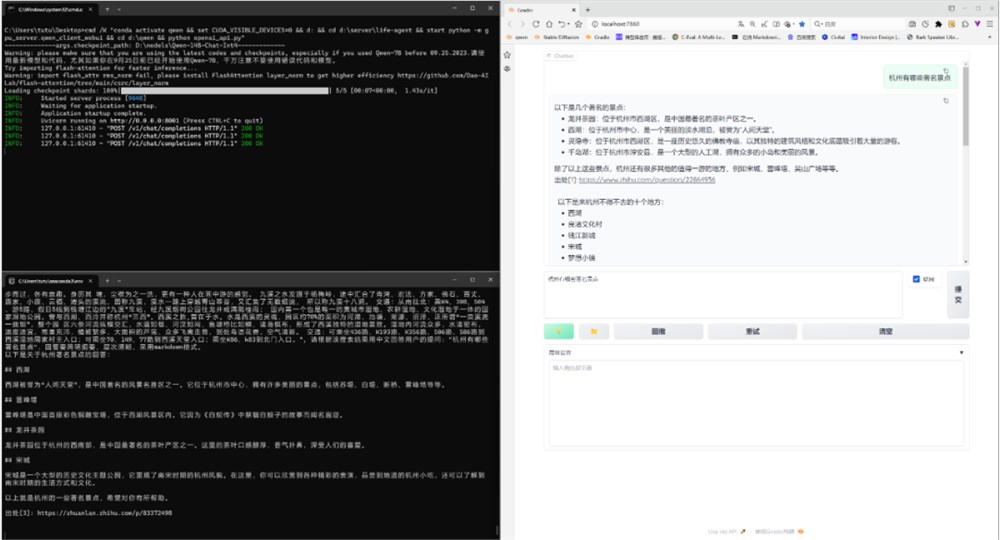

According to reports, the open-source Tongyi Qianwen model series has previously topped the Hugging Face and GitHub large model rankings, winning favor with many enterprise clients and individual developers. Cumulative downloads have exceeded 1.5 million, spawning more than 150 new models and applications. Users can try the Qwen series models directly in the "ModelScope" community, call the model APIs through Alibaba Cloud's Lingji Platform, or build customized large model applications on Alibaba Cloud's Bailian Platform.

Reportedly, there are already many examples, from enterprises and universities to startups and individual developers, of teams building powerful AI platforms and applications on Tongyi Qianwen and growing their businesses rapidly as a result.

East China University of Science and Technology's X-D Lab (Heartbeat Laboratory) focuses on AI application development in social computing and psychological-emotional fields. Based on the open-source Tongyi Qianwen, the team has developed several specialized models: the mental health large model MindChat (Casual Talk) providing psychological comfort and evaluation services; the medical health large model Sunsimiao (named after the famous ancient physician) offering medication and wellness advice; and the education/examination large model GradChat (Lucky Carp) providing guidance on employment, further education, and studying abroad for students.

X-D Lab team members stated: "From the three dimensions of sustainability, ecosystem, and scenario adaptation, Tongyi Qianwen is the most suitable choice." "Previously, a company approached us for collaboration. We only fine-tuned the Qwen base model with 200,000 tokens of data and achieved better results than another model fine-tuned with millions of data points. This demonstrates both the capability of the Tongyi Qianwen base model and our strong industry know-how."

"I have very high expectations for the 72B model and am curious about its performance limits in our field. Given the limited computing resources in academia, we may not use such a large-parameter model directly for inference services, but we might conduct academic explorations based on Qwen-72B, including using federated learning algorithms to process data. We also hope the inference costs of 72B can be well controlled," said a university researcher.

For the startup Youlu Robotics, the open-source nature of large models is crucial. The company combines large models with embodied intelligence, aiming to make every professional device intelligent. Youlu Robotics has already integrated Qwen-7B into its road-cleaning robots, enabling them to converse with users in natural language, understand their requests, and complete assigned tasks.

Chen Junbo, founder and CEO of Youlu Robotics, stated: "We've experimented with all available large models on the market and ultimately chose Tongyi Qianwen because: first, it's currently one of the best-performing open-source large models in Chinese; second, it provides a very convenient toolchain that allows us to quickly conduct fine-tuning and various experiments with our own data; third, it offers quantized models with minimal performance degradation before and after quantization, which is highly attractive as we need to deploy large models on embedded devices; finally, Tongyi Qianwen provides excellent service with quick responses to all our needs."

In the eyes of many individual developers, Tongyi Qianwen represents endless possibilities. Tutu, who works in the power industry, is primarily responsible for macro-analysis, planning research, and preliminary optimization of new power systems and integrated energy systems. He uses Tongyi Qianwen's open-source model to build document Q&A applications, aiming to explore the many ways large models could be applied in the power sector.

"I use Qwen to create retrieval-based Q&A applications for private knowledge bases. The scenarios are very specific, often requiring searching through documents with hundreds of thousands or even millions of words. Given an English document, I ask the large model to locate the content and provide answers based on the document's table of contents," Tutu explained.

Professional document retrieval and interpretation tasks demand high accuracy and logical rigor. Of the open-source models he has tested, Tongyi Qianwen performs best: its answers are accurate, and it is free of bizarre bugs. "The performance of Tongyi Qianwen's 14B open-source model is already excellent, and I'm even more excited about the 72B version. I hope the 72B model can take another step forward in logical reasoning. With some programming enhancements, it could handle document retrieval and interpretation tasks effectively. Once the basics are mastered, we can gradually raise the difficulty, for example by requiring the model to meet this industry's national standards."

Currently, Qwen-based industry models span various sectors, including healthcare, education, automation, and computing.

Developers have enthusiastically noted that, beyond the open-source large models themselves, the recently launched 'Tongyi Qianwen AI Challenge' holds significant appeal. It offers opportunities to experiment with fine-tuning the Tongyi Qianwen model, probe the limits of open-source models' coding abilities, and develop next-generation AI applications using the Tongyi Qianwen model and ModelScope's Agent-Builder framework. "It feels like standing on the shoulders of giants: taking on challenges and growing from them."

3

The Winds of Change for Large Models

At the World Internet Conference Wuzhen Summit held in November this year, the words of Alibaba Group CEO Wu Yongming left a deep impression on Jiemian News·Bullet Finance:

"AI technology will fundamentally change the way knowledge iterates and society collaborates, and the acceleration of development driven by this will far exceed our imagination."

"The deep integration of AI and cloud computing will become an important driving force for the iteration of cloud computing. The dual-wheel drive of 'AI + cloud computing' is Alibaba Cloud's underlying capability to face the future and support AI infrastructure."

"Alibaba will position itself as a 'technology platform enterprise,' build a more solid infrastructure foundation, continuously increase openness and open-source efforts, and work with developers to create a thriving AI ecosystem."

These words effectively explain Alibaba's past, present, and future to the outside world: Previously, Alibaba's business covered logistics, payments, transactions, production, and other segments, providing digital business services for these areas. Against the backdrop of AI becoming the most important driving force for China's digital economy and industrial innovation, Alibaba has transformed into a 'tech platform enterprise,' offering infrastructure services for various industries.

Alibaba's complete technology system and infrastructure-building capabilities are now being fully opened to the outside world through open source and platform services. Beyond providing stable and efficient foundational AI services, Alibaba aims to create an open and thriving AI ecosystem. The initiative seeks to build a solid AI foundation for society, upgrade Alibaba itself, and keep pace with the broader trends of the era.

Alibaba Cloud has already reaped substantial benefits from large models. Over 50% of China's leading large model companies operate on Alibaba Cloud. As the era of intelligence arrives, AI will become the new productivity. Alibaba's diverse businesses and scenarios are experimenting with large models to enhance product experience and operational efficiency, creating new growth engines.

Conversely, large models are also driving Alibaba Cloud. Zhou Jingren once said, 'Based on the Tongyi Qianwen large model, we have AI-enabled more than 30 cloud products, significantly improving development efficiency.' This goes a long way toward explaining why Alibaba Cloud aims to become the most open cloud in the AI era.

Today, from foundational computing power to AI platforms and model services, Alibaba Cloud is steadily increasing its R&D investment and shaping three new strategic pillars: infrastructure, open source, and open platforms. Combined with ongoing upgrades at the IaaS and PaaS layers, these efforts draw in customers, developers, and ISVs while also helping to produce showcase benchmark cases.

These advantages are building a new growth flywheel for Alibaba Cloud and even Alibaba Group as a whole. When the domestic open-source large model ecosystem "catches the wind," Alibaba Cloud stands as the "weather vane" guiding the trend.

With its AI computing foundation, diverse and open-source products, multifaceted application scenarios, an expanding developer community through open-source engagement, toolchains and intelligent platforms, and an open innovation ecosystem, Alibaba Cloud is forging a competitive commercialization path via large model open-source initiatives: "high-quality open-source foundational models → model optimization → AI application innovation." This development trajectory holds significant implications for the domestic large model industry's practical implementation and innovation, inevitably accelerating the emergence of mature, large-scale applications in China.

As Dr. Wang Jian, academician of the Chinese Academy of Engineering and founder of Alibaba Cloud, put it: the integration of AI and cloud computing will usher in the third wave of cloud computing. The open-sourcing and democratization of large models will also transform the technology, products, and service models of cloud computing, and the future roles and positioning of cloud service providers will evolve in response to large models.