Can Inspur, the 'Huawei' of the Server Industry, Reap the Benefits of AI?


Inspur Information (000977.SZ) is undergoing a transformation.

Recently, Inspur Information released 'Yuan 2.0', its open-source series of large models at the hundred-billion-parameter scale. The series is free for commercial use and comes in three versions with parameter counts of 102B, 51B, and 2B. In its research paper, Inspur Information claimed that 'Yuan 2.0' outperforms Meta's open-source large model Llama 2 in tests involving code, mathematics, and practical Q&A.

Inspur Information was one of the earliest companies in China to develop large models. Its 'Yuan 1.0', released in 2021, was at the time one of the three largest single (monolithic) models in the global NLP field. The other two were OpenAI's 'GPT-3' and 'Megatron-Turing-NLG', jointly developed by Microsoft and NVIDIA.

Today, OpenAI has become the rising star in the AI field with 'GPT-4', leading the world in technological capabilities. Microsoft and NVIDIA have each found their niche—Microsoft by integrating large models with its business operations to strike gold in 'AI + Office', and NVIDIA by becoming the 'shovel seller' of the AI era with its GPUs, securing a trillion-dollar market valuation.

However, what is perplexing is that Inspur Information seems to have failed to capitalize on the AI boom.

The financial reports for the first three quarters of this year show that Inspur Information's cumulative revenue through Q1, Q2, and Q3 was 9.4 billion, 24.8 billion, and 48.096 billion yuan, respectively, representing year-on-year declines of 46%, 29%, and 9%. Cumulative net profit attributable to shareholders was 210 million, 325 million, and 787 million yuan, down 37%, 66%, and 49% year-on-year, respectively.
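To put the declines in perspective, the year-earlier revenue implied by each figure can be backed out from the reported value and its percentage decline. The snippet below is only a rough sketch: the function name `implied_prior_year` is illustrative, and because the percentages quoted above are rounded, the results are approximations rather than figures from the company's reports.

```python
# Reported revenue figures (billion yuan) and rounded year-on-year declines,
# listed in the order they appear in the article.
reported = [
    (9.4, 0.46),
    (24.8, 0.29),
    (48.096, 0.09),
]


def implied_prior_year(current: float, decline: float) -> float:
    """Back out the year-earlier value from a current value and its YoY decline."""
    return current / (1.0 - decline)


# Approximate year-earlier revenue for each reported figure.
implied = [round(implied_prior_year(value, decline), 1) for value, decline in reported]

for (value, decline), prior in zip(reported, implied):
    print(f"{value} billion yuan, down {decline:.0%}: roughly {prior} billion a year earlier")
```

Read this way, the gap against the prior year narrows across the three reported figures, which matches the shrinking percentage declines.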

As a leading enterprise in the global server industry, why has Inspur Information not been able to seize the immense opportunities brought by AI? What kind of blueprint has it laid out for the AI era? Most importantly, how will Inspur Information and the entire server industry find their value anchor in the rapidly advancing AI era?

Inspur Information is often referred to as the 'Huawei in the server field.' Its founder, Sun Pishu, is widely recognized as the 'Father of Chinese Servers.' Under Sun's leadership, Inspur Information developed China's first critical application host, making China the third country after the U.S. and Japan to master high-end server technology.

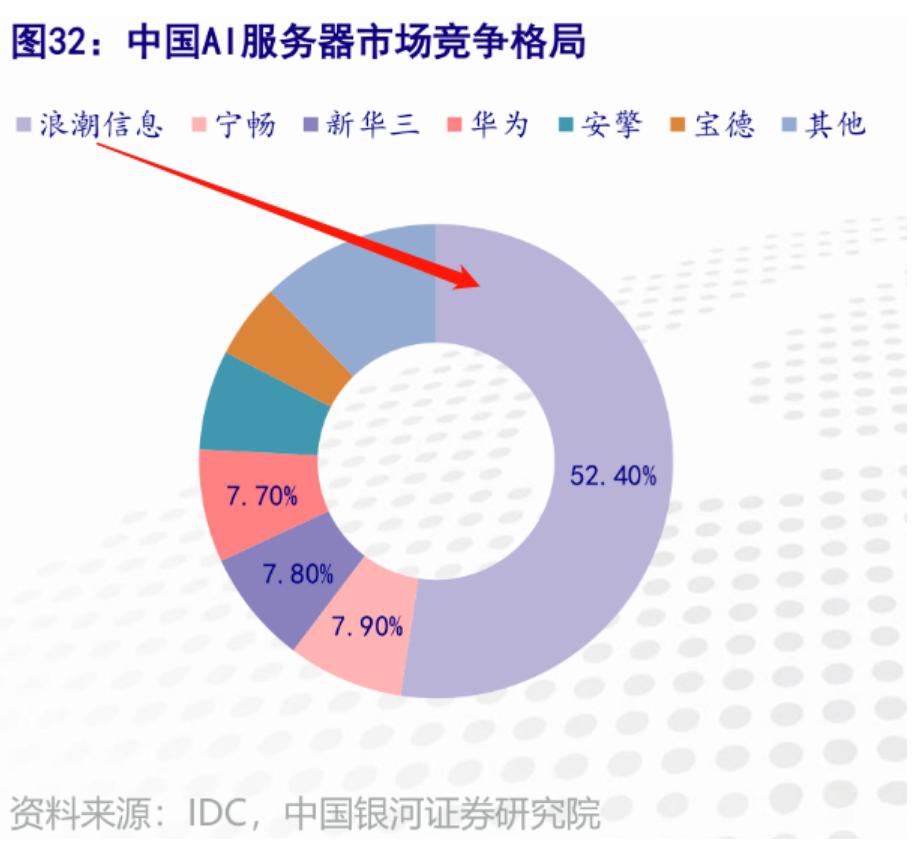

In terms of scale, Inspur Information is undoubtedly the top server manufacturer in China. According to data from Gartner and IDC, in 2021, Inspur Information ranked first in both the domestic x86 server market and the AI server market, with market shares of 30% and 52.4%, respectively. In 2022, it held the highest global market share in AI servers, and in 2023, it maintained its leading position in the Chinese server market.

Given its high market share and the current AI boom, why hasn't Inspur Information been able to capitalize on the AI wave?

The primary reason is the decline in traditional business.

Inspur's servers can generally be divided into three categories: traditional general-purpose servers commonly used across the industry, edge computing servers, and AI servers, which have been in especially high demand this year.

Before this year, the industry primarily purchased Inspur's general-purpose servers. However, with the development of large models driving the demand for high computing power, unless CPU technology undergoes another major upgrade, current general-purpose servers are unlikely to be suitable for the training and inference tasks of large models.

As a result, many internet companies have shifted their investment focus to AI servers this year, leading to a significant decline in demand for general-purpose servers. According to the latest report from TrendForce, internet companies such as Baidu (09888.HK), Alibaba (89988.HK), and Tencent (00700.HK) have halved their server procurement numbers compared to previous years.

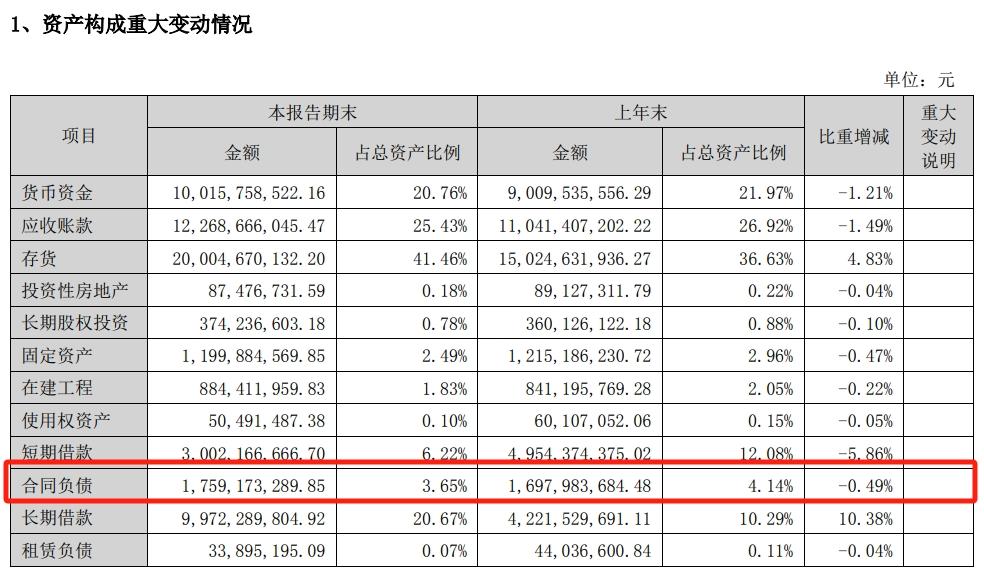

The impact of declining general-purpose server purchases is directly reflected in Inspur Information's contract liabilities, which represent the deposits customers pay in advance of procurement. The semi-annual report shows that from January 1 to June 30 this year, Inspur Information's contract liabilities rose only slightly, from 1.698 billion yuan to 1.759 billion yuan, an increase of just 61 million yuan over six months. By contrast, contract liabilities increased by 416 million yuan during the first half of 2022.
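The contract-liability comparison is simple arithmetic, and a short check makes the scale of the slowdown explicit. This is a minimal sketch using only the figures quoted above, expressed in million yuan; the variable names are illustrative.

```python
# Contract liabilities in million yuan, as quoted from the semi-annual report.
start_of_period = 1698   # January 1 this year
end_of_period = 1759     # June 30 this year
increase_h1_2022 = 416   # increase over the first half of 2022

# Increase over the first half of this year.
increase_this_year = end_of_period - start_of_period

# This year's increase as a share of last year's.
share_of_last_year = increase_this_year / increase_h1_2022

print(f"Increase this year: {increase_this_year} million yuan")
print(f"Share of the H1 2022 increase: {share_of_last_year:.1%}")
```

On these numbers, advance deposits grew by only about 15% of the amount added in the same period a year earlier.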

Logically, even with general-purpose servers underperforming, AI servers should have been able to make up the difference. Even if they could not drive growth for Inspur Information, they should at least have prevented consecutive quarterly declines in revenue and net profit.

This brings us to the second reason: the difficulty in scaling AI server production.

Regarding the substantial decline in the company's revenue in the first half of the year, Inspur Information stated frankly in its performance forecast released in July that its server product revenue was "affected by factors such as the global GPU and related specialized chip supply shortage."

Even the cleverest housewife cannot cook without rice. In the short term, the global "chip shortage" remains a shared anxiety. Without GPU supply, no matter how advanced Inspur Information's server technology is, it cannot produce AI servers out of thin air.

The shrinking demand for its core business and the constraints on emerging businesses due to supply chain limitations are the real challenges Inspur Information faces today. However, objectively speaking, if these were the only two factors, Inspur Information would only be experiencing temporary difficulties and could quickly recover once the production expansion cycle is over.

But what truly shakes its value foundation is that domestic cloud vendors have started bypassing server manufacturers to directly engage with upstream players like NVIDIA.

Servers are the weak link in the computing power industry chain. Upstream are chip manufacturers like Intel, NVIDIA, and AMD, which not only enjoy high technological barriers and extremely high market concentration but also benefit from mature software and hardware ecosystems that keep their dominant position secure.

Downstream are cloud vendors with substantial financial and technical resources, such as Baidu, Alibaba, and Tencent. They can choose to collaborate with server manufacturers like Inspur and H3C or skip them entirely to place orders directly with upstream suppliers. They can even develop servers in-house, which may help them establish closer relationships with chip manufacturers, gain early access to new technologies, and maintain a competitive edge.

Inspur is not alone in failing to benefit from the AI boom; the gloom hanging over it extends to the entire server industry. On November 23, H3C CEO Yu Yingtao also stated in a letter to all employees that middle and senior management would take voluntary pay cuts due to slowing revenue growth.

The importance of server manufacturers in the computing power industry chain is gradually declining. With the arrival of the AI era, the industry is undergoing a major test, and this test paper has only one question—what exactly is the value of servers in the computing power industry?

As the saying goes, blessings and misfortunes are intertwined. Although Inspur Information has not directly reaped the benefits of the AI era in the short term, the AI era has nonetheless brought the company many positive changes.

In the AI era, the definition of "large-scale computing power" has shifted. In the past, a few hundred GPU cards could be considered large-scale computing power, but now, computing clusters built with tens of thousands of GPU cards are no longer unimaginable. The change in the scale of computing power has elevated some previously marginal research fields to new heights.

At the 2021 Open Compute Project (OCP) Global Summit, Inspur Information shared research titled 'Distributed Inspur Information Hard Drive Sensitivity Expert Model.' This study uncovered the internal mechanism by which sound pressure affects hard drive read/write performance and proposed a mathematical model.

Why was this research conducted?

Around 2019, the industry encountered a widespread issue: when high-power, high-density computing clusters used fans for cooling, the performance of server hard drives could degrade or even fail, limiting storage density improvements. After a series of experiments, the root cause was identified: fan speeds had surpassed those of top-tier sports car engines, reaching 20,000 to 30,000 RPM, generating sound energy powerful enough to interfere with hard drives.

Inspur Information's research is the key to unlocking this systemic black box. Against the backdrop of increasingly widespread high-performance computing applications, similar issues will only multiply. Ensuring the effective utilization of each computing card's power has become a prominent discipline. These pressing challenges underscore the value of server manufacturers like Inspur Information.

Beyond ensuring the stable operation of computing centers, Inspur Information continuously iterates energy-saving products and technologies to help enterprises reduce costs and improve efficiency.

In 2022, aligned with the "Dual Carbon" strategy, Inspur Information incorporated "All in Liquid Cooling" into its corporate development strategy. The company has fully deployed liquid cooling solutions, supporting cold-plate liquid cooling across its entire product line, including general-purpose servers, high-density servers, rack-scale servers, and AI servers. It also provides comprehensive lifecycle solutions for liquid-cooled data centers.

According to a report by the Shanghai Securities News, the fully liquid-cooled ORS3000S server launched by Inspur Information on March 17 this year has been adopted by JD Cloud's data center. It provides computing power support for JD's 618 and Double 11 shopping festivals, delivering performance improvements of 34%-56%.

If focusing on computing power centers represents Inspur Information's fundamental duty as a server manufacturer, then its efforts to promote the computing power ecosystem demonstrate this "hardware veteran's" clear self-awareness and courage to break new ground.

Today, NVIDIA's dominance in the GPU field owes much to the software and hardware ecosystem it has built. However, for a long time, the value of ecosystems hasn't received sufficient attention domestically.

It's precisely in this aspect that Inspur Information may be ahead of most. From launching the YuanNao ecosystem in 2019, to introducing the AIStation platform in 2020, and then open-sourcing the "Yuan 2.0" series of foundational large models on November 27, Inspur Information has been consistently promoting the prosperity of the computing power ecosystem. On one hand, this aligns with its role as an "infrastructure provider" - the more prosperous the industry, the more potential customers emerge. On the other hand, these initiatives aim to strengthen its presence in the computing power industry chain.

In fact, Inspur Information is well aware of its limitations as a server provider and has been consistently seeking ways to engage more deeply in various industry segments. For instance, in 2015, the company introduced the JDM (Joint Design Manufacture) service model, which is based on integration with users' industrial chains. With the JDM model, Inspur Information has reduced the R&D cycle for new products from 1.5 years to 9 months, with the fastest turnaround being just 3 months from development to supply.

Of course, as the backdrop to these strategic moves, Inspur Information has been continuously iterating its server products to enhance computational efficiency.

The semi-annual report reveals that in 2023, Inspur Information made significant progress in converged architecture technology, successfully developing a 'Serial Memory Expansion Resource Pool System Based on Cache-Coherent Bus.' This innovation achieves large-scale memory expansion while effectively improving memory resource utilization.

In summary, Inspur's AI strategy revolves around four key directions: enhancing data center stability, reducing computing costs, improving server efficiency, and fostering a computing ecosystem with Inspur's distinctive imprint. These initiatives are not only responses to the era's transformation but also part of the company's long-term strategy.

Another question arises: despite extensive preparations for the AI era, has Inspur truly secured its anchor?

From the perspective of "Silicon Research Lab," whether Inspur can ultimately solidify its corporate value depends on how deeply its two anchors—"cost" and "ecosystem"—are embedded in customer needs.

First, let’s discuss cost.

Currently, the high cost of computing power remains one of the primary reasons many enterprises hesitate to adopt large models, and in the short term, these costs may continue to rise.

On November 24, Observer.com reported that among the three chips (L2, L20, H20) NVIDIA developed for the Chinese market, the H20 will be delayed until the first quarter of next year due to integration challenges faced by server manufacturers.

Industry insiders noted that the interconnect speed of these chips is similar to that of the H100, meaning their performance in large-scale cluster computing tasks is comparable. However, adopting them is still expected to significantly increase computing power costs for enterprises.

The rising computing power costs are driving demand for cost reduction, making Inspur Information's liquid cooling technology a focal point for market attention.

Objectively speaking, this path is not only difficult to navigate but also highly congested. For Inspur Information to truly anchor its value, it must withstand challenges from at least two groups.

Competition from similar server manufacturers like H3C and Superfusion is a given, while cloud providers are also committed to reducing energy consumption through methods such as full lifecycle carbon calculations.

Previously, Ant Group and the China Academy of Information and Communications Technology released the "Green Computing for Computing Applications White Paper," which introduced the concept of "end-to-end green computing." This approach considers the entire industrial chain—from power production and computing power production (including AI computing center builders, hardware manufacturers, and cloud providers) to computing power applications—incorporating operational energy costs into the initial construction phase.

In other words, whether Inspur Information can provide servers and maintain computing power centers more efficiently and at a lower overall cost than cloud providers doing it themselves remains a question that needs to be answered in the future.

Second, let's discuss the ecosystem.

As mentioned earlier, NVIDIA's chips dominate the market not only because of their outstanding performance but also due to their robust hardware and software ecosystem. Specifically, over the past nearly 20 years, any field requiring GPU research has relied on applications built on NVIDIA's underlying architecture. These applications and operational habits form NVIDIA's strongest moat.

As a server supplier, whether for the sake of supply chain stability or to ensure that domestic computing power ecosystems are not constrained by external factors, Inspur Information must deepen the establishment of its own ecosystem.

From the server perspective, the requirement is to achieve compatibility with more types of chips from more manufacturers. This is something Inspur Information has been consistently working on.

At the application layer, Inspur Information frequently engages with various enterprises requiring cloud computing solutions, making it well-suited to play the role of an ecosystem builder.

From a corporate strategy standpoint, this aligns with Inspur Information's development direction. Transitioning from a hardware vendor to a comprehensive service provider encompassing integrated hardware-software solutions, efficient network solutions, security services, and data analysis tools helps enhance corporate competitiveness.

Overall, in the short term, Inspur Information cannot yet reap the maximum benefits of AI. Against the backdrop of global GPU supply shortages, as a relatively weaker link in the industrial chain, it's understandable that the company may temporarily struggle to deliver outstanding performance results.

What truly deserves attention is that, based on past strategic layouts and R&D directions, Inspur Information has been stockpiling 'ammunition' for the AI era and is committed to maximizing the value of servers in the entire industry chain.

But will these preparations be enough to help it achieve its ambitions? Perhaps cooperation will be a more important keyword than competition.