Chinese Team Successfully Open-Sources High-Quality Image-Text Dataset ShareGPT4V, Boosting Large-Scale Application Development

-

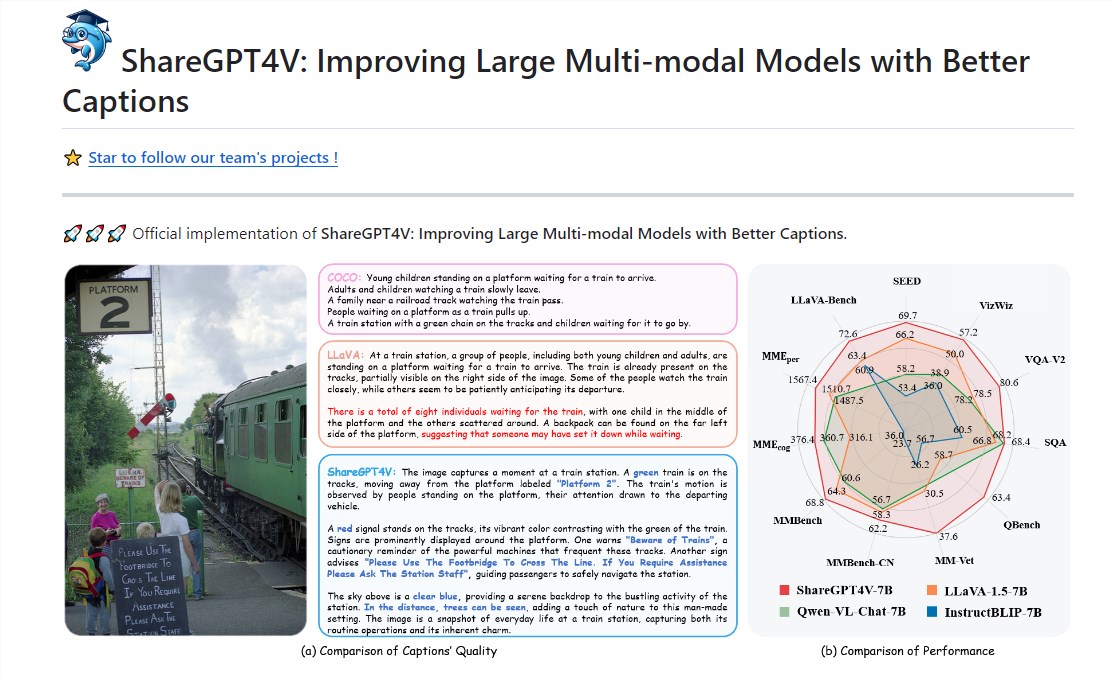

A Chinese team recently open-sourced a remarkable image-text dataset named ShareGPT4V, built upon GPT4-Vision, training a 7B model. This initiative has achieved significant progress in the multimodal field, surpassing models of the same level.

The dataset contains 1.2 million image-text description pairs, covering world knowledge, object attributes, spatial relationships, artistic evaluations, and more. It significantly outperforms existing datasets in terms of diversity and information coverage.

Paper address: https://arxiv.org/abs/2311.12793

Demo: https://huggingface.co/spaces/Lin-Chen/ShareGPT4V-7B

Project address: https://github.com/InternLM/InternLM-XComposer/tree/main/projects/ShareGPT4V

The performance of multimodal models is largely constrained by the effectiveness of modality alignment, yet existing works lack large-scale, high-quality image-text data. To address this issue, researchers from the University of Science and Technology of China (USTC) and Shanghai AI Lab have introduced ShareGPT4V, a pioneering large-scale image-text dataset.

Through in-depth research on 100,000 image-text description pairs generated by the GPT4-Vision model, they successfully constructed this high-quality dataset, which covers diverse content including world knowledge, art evaluation, and more.

The release of this dataset establishes a new foundation for multimodal research and applications. In experiments, the researchers demonstrated the effectiveness of the ShareGPT4V dataset across various architectures and parameter scales of multimodal models. Through equivalent substitution experiments, they successfully improved the performance of multiple models.

Ultimately, by utilizing the ShareGPT4V dataset in both pre-training and supervised fine-tuning phases, they developed the ShareGPT4V-7B model, which achieved outstanding results in multimodal benchmark tests.

This research provides robust support for future multimodal studies and applications, while also prompting the multimodal open-source community to focus on developing high-quality image descriptions. It foreshadows the emergence of more powerful and intelligent multimodal models. This achievement holds positive significance for advancing the field of artificial intelligence.