Five Major Players in Fierce Competition: Unveiling the Driving Forces Behind China's AI Large Models!


The battle for customers among domestic public cloud giants in the large model sector has intensified.

Baidu Intelligent Cloud recently announced that its Qianfan large model platform now hosts 42 mainstream large models and serves over 17,000 clients. Meanwhile, Alibaba Cloud claims that more than half of China's large model companies run on its platform. Even the "dark horse" Volcano Engine has boldly stated that more than 70% of China's large model companies are its customers... So, who truly has the most large model customers?

Cloud computing industry insiders told Zhidongxi that each major cloud giant already has large model companies in its camp and may be nurturing its own "domestic OpenAI." However, few of these companies are tied exclusively to a single cloud provider. Instead, they weigh factors such as GPU computing power, development toolchains, and community ecosystems, and remain in a phase of "strategic ambiguity."

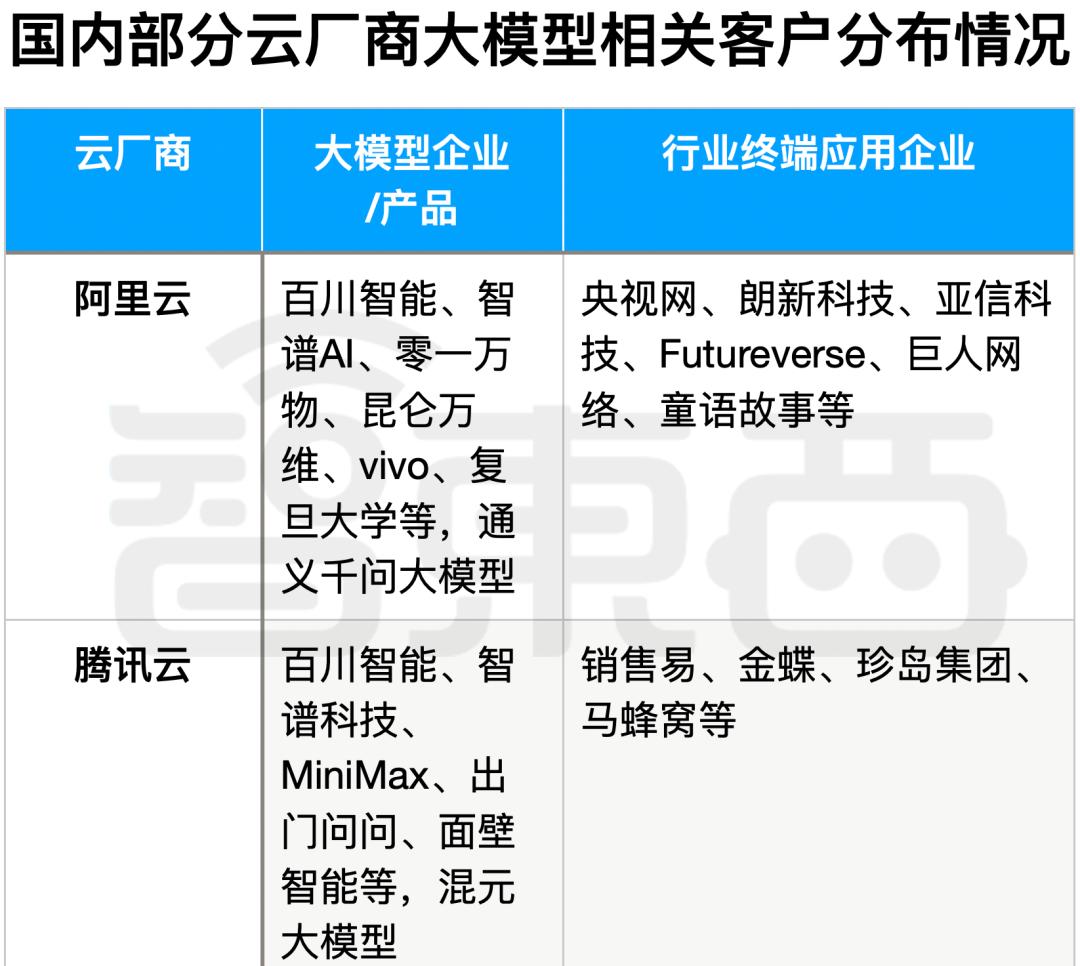

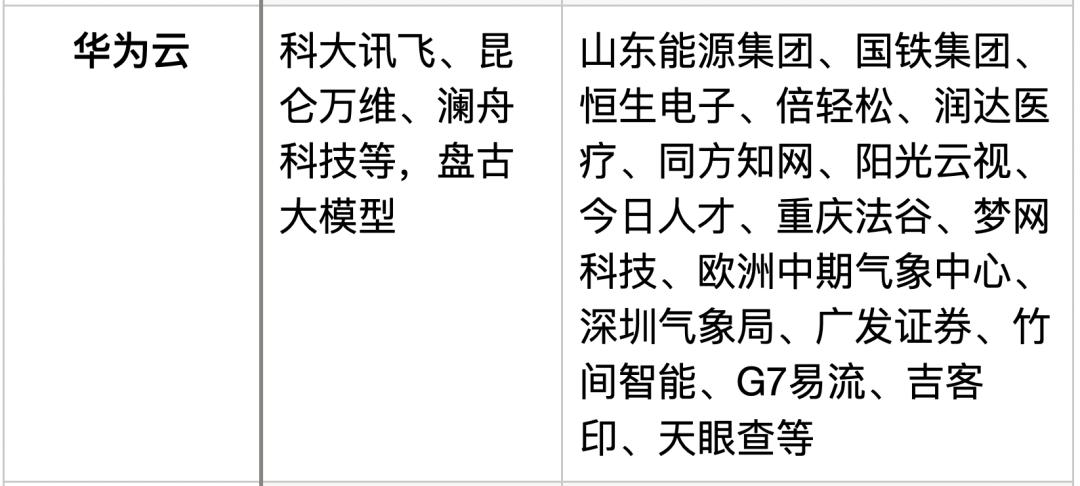

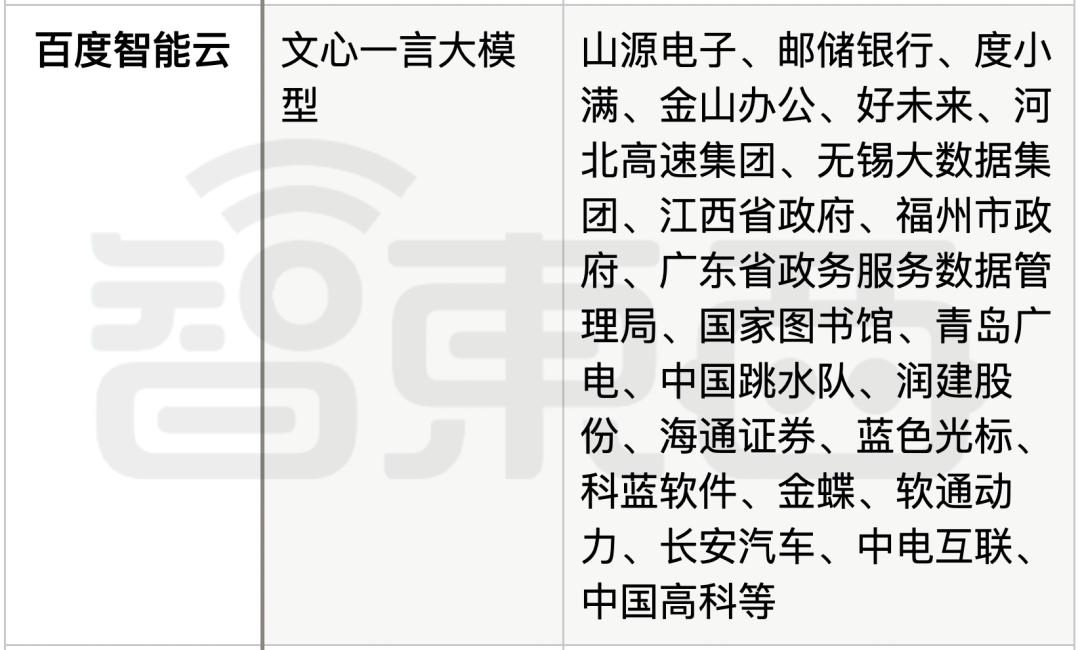

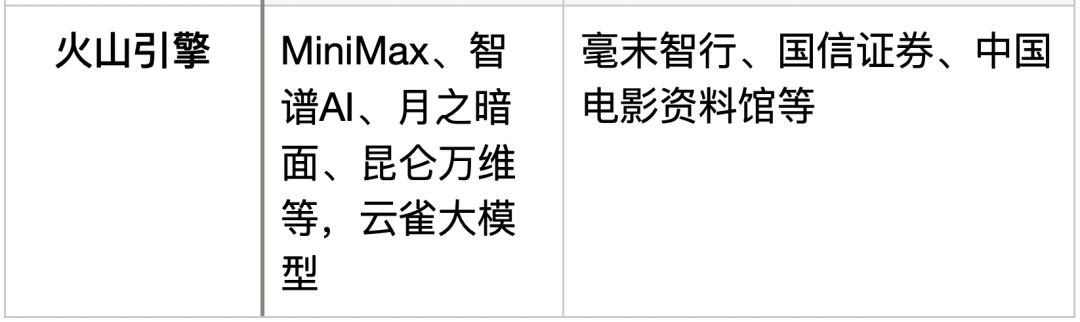

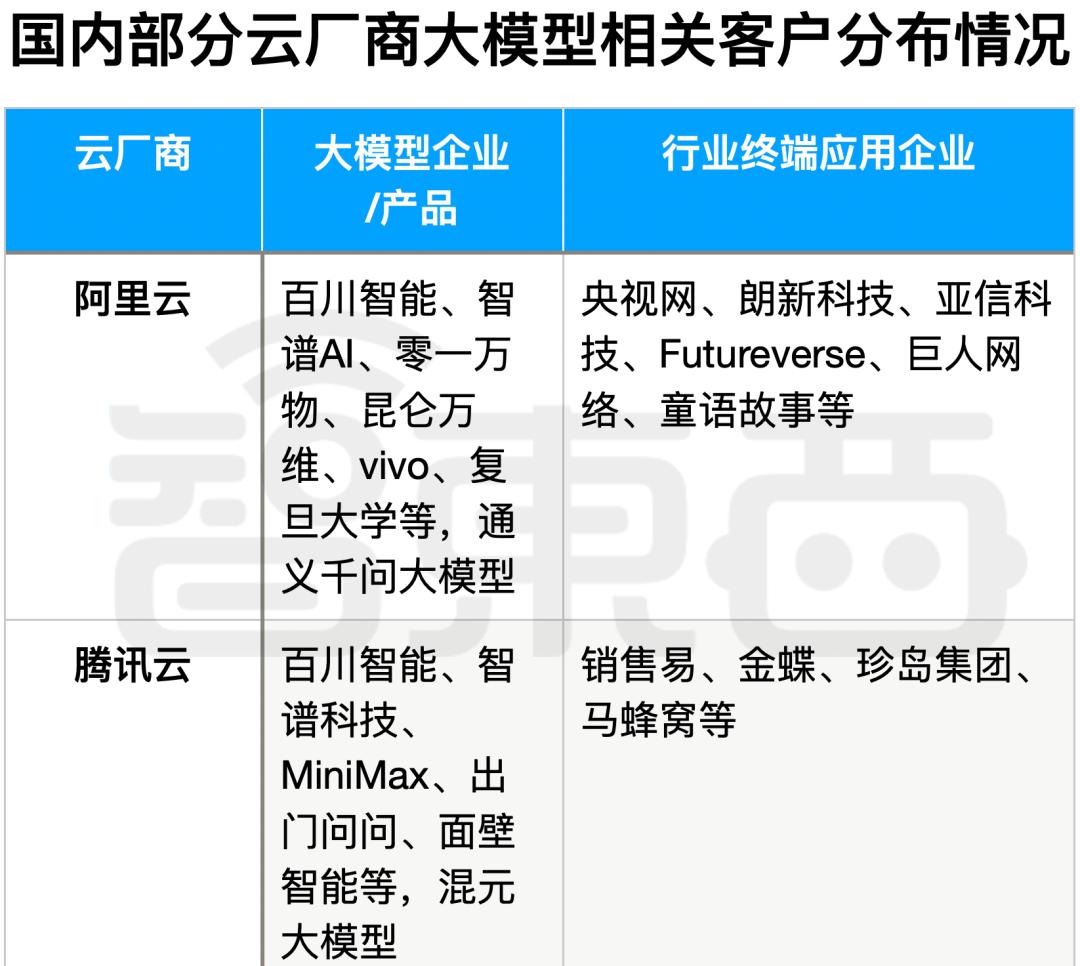

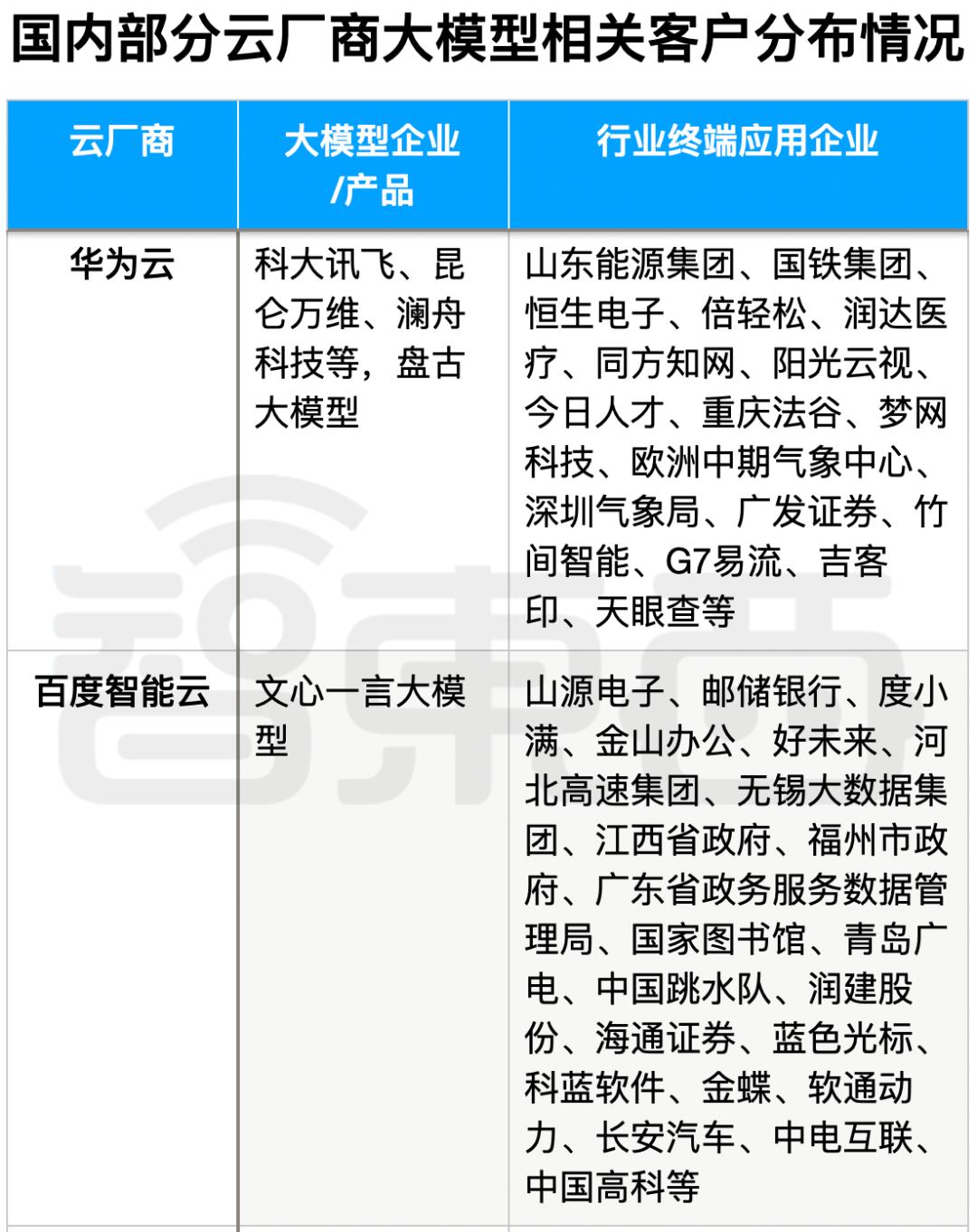

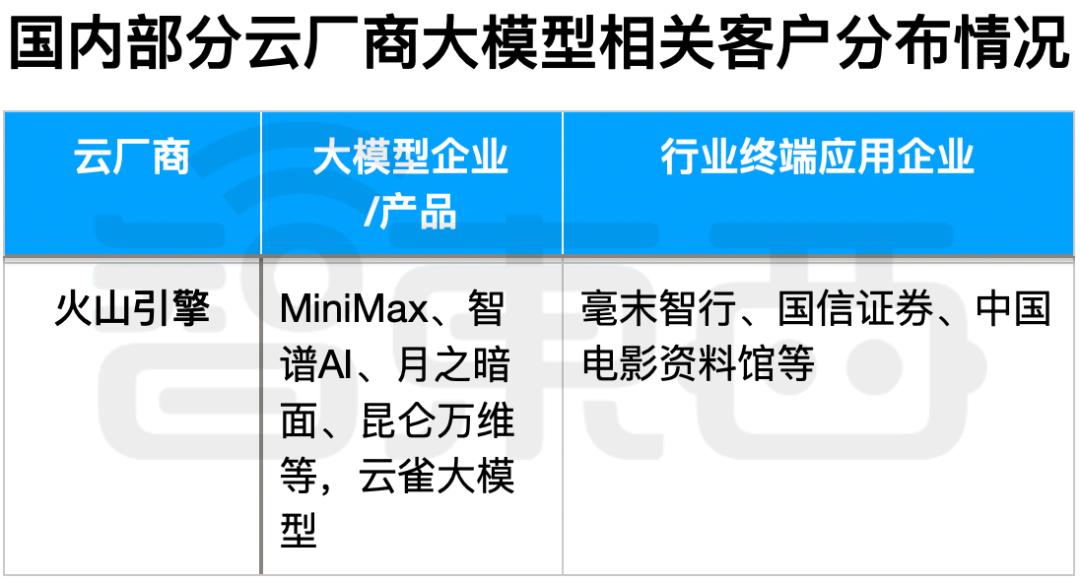

After thorough analysis by Zhidongxi, as shown in the figure below, major cloud providers have indeed established their respective "spheres of influence," marking a phased outcome in the cloud giants' 'Hundred-Model War.'

▲ Distribution of large model customers among domestic cloud vendors (compiled from public information)

In early November, OpenAI's GPTs sparked a global wave of large model application development, raising new demands for the computing power industry. Greater computing power, lower costs, and easier development have become the focal points of competition among public cloud providers. To win over top-tier large model clients, domestic cloud vendors must demonstrate their irreplaceability in this commercial battle.

As the 'Hundred-Model War' enters deeper waters, cloud providers such as Alibaba Cloud, Huawei Cloud, Tencent Cloud, Baidu Intelligent Cloud, Volcano Engine, and China Telecom's Tianyi Cloud have all revealed their trump cards... Who is the leading cloud service provider for large models in China? This article attempts to explore this question in depth.

Leading domestic large model startups have already aligned themselves with different cloud giants.

To quickly acquire the computing power necessary for training large models—while avoiding the high costs and missed opportunities of building their own data centers—large model developers have unanimously turned to major public cloud providers. The industry has already seen powerful alliances between star large model companies and cloud giants.

On one side, Alibaba Cloud recently had Baichuan Intelligence founder and CEO Wang Xiaochuan vouch for its platform. Wang said that Baichuan's ability to iterate one model per month owes much to Alibaba Cloud's support in completing thousand-card large model training tasks and in effectively reducing model inference costs.

On another front, Huawei's rotating chairman Xu Zhijun personally attended iFLYTEK's Spark 2.0 launch event, jointly announcing the "Flying Star One" domestic computing power platform for large models. This platform was developed through Huawei's special task force stationed at iFLYTEK, demonstrating momentum to build a "national team" for large models.

Tencent Cloud has been just as active. It revealed that it supports MiniMax in running thousand-card large model tasks. According to Tencent Cloud VP Wei Wei, MiniMax used Tencent Cloud's new-generation high-performance computing cluster HCC to upgrade its technical infrastructure, cutting its overall cloud costs by at least 20%.

Public cloud giants' large model customers can be simply divided into two categories: one being the aforementioned large model enterprises, and the other being end-user application customers in specific industries.

According to industry analysis, cloud service providers including Alibaba Cloud, Tencent Cloud, Huawei Cloud, Baidu Intelligent Cloud, and Volcano Engine have all secured prestigious enterprise clients.

Among them, Alibaba Cloud and Tencent Cloud have strategically positioned themselves across both large model enterprises and industry-specific application companies. Domestic AI startups like Zhipu AI, Baichuan Intelligence, and Kunlun Wanwei are fiercely competing for leadership in China's large model ecosystem, with strong backing from these two cloud giants.

Public information indicates that Baidu Intelligent Cloud and Huawei Cloud are more focused on implementing their large models across various industry applications, covering sectors such as healthcare, education, finance, entertainment, energy, and meteorology.

The industry's 'dark horse,' ByteDance's Volcano Engine, primarily targets large model companies as customers. This year, Volcano Engine has prominently displayed its 'Your Next Cloud' slogan across its promotional campaigns, and its momentum in the large model domain warrants attention.

Notably, the large model client base of the major cloud providers remains unstable. Many large model companies take a 'multi-vendor approach,' appearing simultaneously on the client lists of several public cloud providers.

For instance, Baichuan Intelligence not only utilizes Alibaba Cloud's services but has also partnered with Tencent Cloud's vector database to build an intelligent Q&A demo system based on user knowledge bases.

MiniMax has not only upgraded its technical foundation through Tencent Cloud's new-generation high-performance computing cluster HCC but has also previously collaborated with Volcano Engine to build a high-performance computing cluster. Using Volcano Engine's machine learning platform, MiniMax developed an ultra-large-scale model training platform that supports stable day-to-day training on more than a thousand cards.

This suggests that large model companies have not yet settled on which cloud provider they will use for future model training and inference.

The competition for large model clients is a protracted battle among major public cloud providers, revolving around money, computing resources, and management strategies.

Investing heavily is the most straightforward and aggressive strategy employed by domestic public cloud providers to compete for large model clients.

Consider the overseas precedent of OpenAI. Microsoft serves as its exclusive cloud provider and meets all of ChatGPT's computational demands, while OpenAI grants Microsoft priority access to most of its technologies. This arrangement stems largely from Microsoft's cumulative $13 billion investment in OpenAI.

Domestic cloud giants are mirroring this strategy. For instance, Alibaba Cloud led the investment in '01.AI,' an AI company founded by Kai-Fu Lee, Chairman and CEO of Sinovation Ventures, which released its first open-source bilingual large model 'Yi' in November. Alibaba Cloud's official account heavily promoted this model, as it was primarily developed on Alibaba's platform.

However, top-tier large model projects are highly sought after, and investments alone may not secure cloud giants' exclusive partnerships with these AI clients.

For example, Amazon and Google have fiercely competed for Anthropic, OpenAI's formidable rival. In late September, Amazon announced plans to invest up to $4 billion in Anthropic in exchange for a minority stake, only for Google to commit $2 billion in financing by late October. As a result, neither Google nor Amazon can become Anthropic's sole cloud provider.

In China, both Alibaba and Tencent have set their sights on several promising AI model startups. For instance, Zhipu AI secured over 2.5 billion yuan in funding by late October this year, while Baichuan Intelligence raised $300 million, with both tech giants being major investors in these rounds.

With multiple 'big players' holding stakes, these star AI model companies naturally don't need to commit to exclusive partnerships with any single investor.

Funding is just the appetizer – public cloud providers must play their trump card: GPU supply.

The battle for AI model clients among cloud providers ultimately centers on GPU computing clusters. Every major public cloud provider is aggressively promoting their thousand-card and ten-thousand-card cluster capabilities, as this represents the core competitive advantage in attracting AI model customers.

Alibaba Cloud claims it can provide single clusters with up to 10,000 GPUs, capable of supporting simultaneous online training of multiple trillion-parameter large models. Ant Group's financial model infrastructure has reached the 10,000-GPU scale. Baidu recently released its Ernie 4.0 model, also claiming it was trained on a 10,000-GPU AI cluster. On November 9th, Tencent partnered with Shanghai's Songjiang District to establish what it calls China's largest GPU computing center.

To build thousand or ten-thousand GPU clusters, public cloud giants are spending heavily to acquire Nvidia GPUs.

In August this year, according to the Financial Times citing informed sources, Chinese internet giants including Alibaba, Tencent, Baidu, and ByteDance placed orders with Nvidia for $5 billion worth of chips. About $1 billion worth (approximately 100,000 Nvidia A800 GPUs) will be delivered this year, with the remaining $4 billion worth of GPUs scheduled for delivery in 2024.
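As a rough sanity check on these figures, the short sketch below derives the average price per GPU implied by the reported first tranche. It uses only the two numbers cited above; the variable names are illustrative, and the result is an implied average, not a quoted Nvidia price.

```python
# Figures as reported by the Financial Times and cited in the article.
first_tranche_usd = 1_000_000_000  # value of chips to be delivered this year
first_tranche_gpus = 100_000       # approximate number of A800 GPUs in that tranche

# Implied average price per GPU (an estimate, not an official list price).
implied_unit_price = first_tranche_usd / first_tranche_gpus

print(f"Implied average price per A800: ${implied_unit_price:,.0f}")
```

The calculation works out to about $10,000 per A800, which gives a sense of the capital needed to assemble a 10,000-GPU cluster from such chips.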

However, the US expansion of restrictive policies has abruptly disrupted public cloud providers' "10,000-GPU cluster" competition.

In October this year, with the US updating its "Export Controls on Advanced Computing Chips and Semiconductor Manufacturing Equipment," according to Global Times citing foreign media reports, Nvidia may be forced to cancel over $5 billion worth of advanced chip orders to China next year. This undoubtedly casts uncertainty on the direction of domestic public cloud providers' "customer acquisition battle."

Who will become China's leading cloud provider for large models? With the outlook uncertain for every cloud provider, the question cannot be answered yet.

Even Alibaba Cloud, considered the cloud giant with the most abundant GPU reserves, is feeling the strain.

In early November, Alibaba Cloud suspended A100 GPU rentals on its official platform. Recent financial reports from Alibaba stated: "These new restrictions may significantly impair Cloud Intelligence Group's ability to provide products and services, as well as fulfill existing contracts, thereby negatively impacting its operational performance and financial condition."

With supply constraints from upstream monopolistic giants, public cloud providers can only focus on two approaches to ensure supply for large model developers.

1. Resource Optimization - By improving the utilization efficiency of existing computing resources, they aim to alleviate computing shortages in a cost-effective manner.

Cloud providers including Alibaba Cloud, Tencent Cloud, Huawei Cloud, Baidu AI Cloud, and Volcano Engine have comprehensively upgraded their storage, networking, and computing infrastructure to enhance computing efficiency.

For instance, Tencent Cloud's StarSea-based servers reportedly reduce GPU server failure rates by over 50%. Through storage upgrades, Tencent Cloud can complete writes of more than 3TB of data within 60 seconds, enhancing model training efficiency. Alibaba Cloud launched its upgraded AI platform PAI in late October, featuring the HPN 7.0 next-generation AI cluster network architecture, which claims to achieve up to 96% linear scaling efficiency for large-scale training. In large model training, it can save over 50% of computing resources.

2. New Supply Sources - By seeking domestic alternatives for computing power, they aim to accelerate catch-up efforts.

For example, in November this year, Reuters reported that Baidu had ordered 1,600 Ascend 910B AI chips from Huawei for 200 servers, as a replacement for NVIDIA's A100. Subsequently, other large model companies and cloud providers have also disclosed purchases of domestic chips.

According to Baidu Intelligent Cloud's official information, its Qianfan platform can achieve a 95% acceleration ratio for cluster training at the scale of 10,000 cards, with effective training time accounting for 96%. Meanwhile, the Qianfan platform is compatible with mainstream AI chips both domestic and international, including Kunlun Core, Ascend, Hygon DCU, NVIDIA, and Intel, supporting customers in completing computing power adaptation with minimal switching costs.
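To make the Qianfan figures more concrete, here is a minimal sketch of how the two percentages might combine. It assumes that the "95% acceleration ratio" can be read as linear scaling efficiency and that it multiplies independently with the 96% effective training time; both are interpretive assumptions, not Baidu's published methodology, and the function name is hypothetical.

```python
def effective_gpu_equivalents(num_gpus: int,
                              scaling_efficiency: float,
                              effective_time_ratio: float) -> float:
    """Estimate how many ideal, always-busy GPUs a cluster is worth.

    Assumes the two factors are independent and simply multiply,
    which is a simplification made for illustration.
    """
    return num_gpus * scaling_efficiency * effective_time_ratio


# Figures cited for the Qianfan platform at the 10,000-card scale.
qianfan = effective_gpu_equivalents(10_000, 0.95, 0.96)

print(f"Roughly {qianfan:,.0f} ideal-GPU equivalents out of 10,000 cards")
```

Under these assumptions, a 10,000-card cluster delivers the useful work of roughly 9,120 ideal GPUs, which is why vendors advertise scaling efficiency alongside raw card counts.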

It's evident that investment, GPU hoarding, and localization have emerged as the main strategies for cloud giants to compete in the large model market.

Currently, with OpenAI's GPTs sparking a new wave of customized large models, the demand for intelligent computing power continues to surge.

On one hand, large model companies require even more computing power to keep pace with OpenAI, which is developing GPT-5 and securing additional funding from Microsoft. On the other hand, as large models increasingly focus on real-world applications across industries, cloud providers need to offer more user-friendly development tools and APIs, enabling domestic large models to "develop an application in just 5 minutes."

As Robin Li, founder, chairman, and CEO of Baidu, pointed out: "Looking abroad, besides dozens of foundational large models, there are already thousands of AI-native applications—something currently lacking in the Chinese market." The AI industry should prioritize demand-side and application-layer efforts, encouraging enterprises to leverage large models for developing AI-native applications.

Qiu Yuepeng, Tencent Group Vice President, COO of Cloud and Smart Industries Group, and President of Tencent Cloud, pointed out: "The cloud is the best carrier for large models, which will create a completely new form of next-generation cloud services."

Large models are redefining cloud-based tools, becoming a new battleground for public cloud providers in their customer acquisition wars.

Cloud giants are increasing value-added services in three key areas - toolchains, ecosystem communities, and AI-native applications - to lower the barriers for large model implementation and help enterprises deploy these models.

1. The Great Battle of Large Model Development Platforms

Currently, major public cloud providers have rolled out upgraded development toolchains, delivering their accumulated expertise in large model training tools to AI companies and industry end-users. These include Alibaba Cloud's Bailian, Baidu Intelligent Cloud's Qianfan, Huawei Cloud's Ascend MindSpore, Tencent Cloud's TI Platform, and ByteDance's Volcano Engine Ark.

2. The Battle of Developer Community Vitality

Cloud giants are cultivating developer communities, and those with more active communities can significantly boost the download and real-world application of large model products. For instance, Alibaba Cloud touts its ModelScope community—dubbed the "Chinese version of Hugging Face"—as a key advantage. Reportedly, model downloads have exceeded 100 million, and the platform has contributed 30 million hours of free GPU computing power to developers.

3. The Explosion of AI-Native Applications

Cloud giants have developed a batch of AI-native applications based on their business scenarios, directly serving end customers with clear needs but weaker development capabilities. For instance, Baidu has comprehensively infused AI capabilities into its existing products, including consumer-facing services like search, maps, document libraries, and cloud storage.

While public cloud giants are targeting these three major areas, their focuses differ.

Multiple cloud computing industry insiders told Zhidongxi that currently, Alibaba Cloud and Tencent Cloud have more obvious scale effects, with more large model customers. They primarily focus on providing cloud infrastructure foundations while also considering application development. For example, Tencent Cloud has successively launched new products for large models in computing, storage, databases, and networking, but the application deployment of its Hunyuan large model has received relatively less attention. Alibaba Cloud, on the other hand, particularly emphasizes its cloud service foundation capabilities, such as "saving over 50% of computing resources" and "achieving up to 96% linear scaling efficiency in large-scale training."

Baidu Intelligent Cloud appears to have more industry end-users, focusing on facilitating large model application development for industries (similar to OpenAI) and empowering Baidu's own products with large models. According to official data, in just over forty days after Wenxin Yiyan (ERNIE Bot) opened to the public on August 31, the Wenxin large model reached 45 million users, 54,000 developers, 4,300 scenarios, 825 applications, and 500 plugins.

Meanwhile, players like Huawei Cloud and China Telecom's Tianyi Cloud emphasize creating self-developed, controllable large model solutions based on full-stack proprietary advantages to empower industry applications. Additionally, some emerging cloud providers are finding opportunities. For example, Volcano Engine has attracted many large model clients by leveraging its extensive video business GPU resources, massive data, and experience in self-developed AI large models.

The "client acquisition battle" among cloud giants continues to evolve. On one hand, they still need to expand their territories and acquire more clients. On the other hand, with upstream supply constraints, each is implementing funnel strategies to select more capable large model providers and more benchmark industry end-users to jointly advance the commercialization of large models.

Large models are transforming the landscape of cloud services, with PaaS and MaaS businesses like large model development platforms showing greater potential. This remains an undecided market. Large model application development and deployment have become the "second battlefield." The cloud giant whose platform can incubate more successful large model or AIGC applications will likely take the lead in this new arena.