AI Hardware PM Guide 2: Voice Interaction - Making Hardware Understand Human Speech


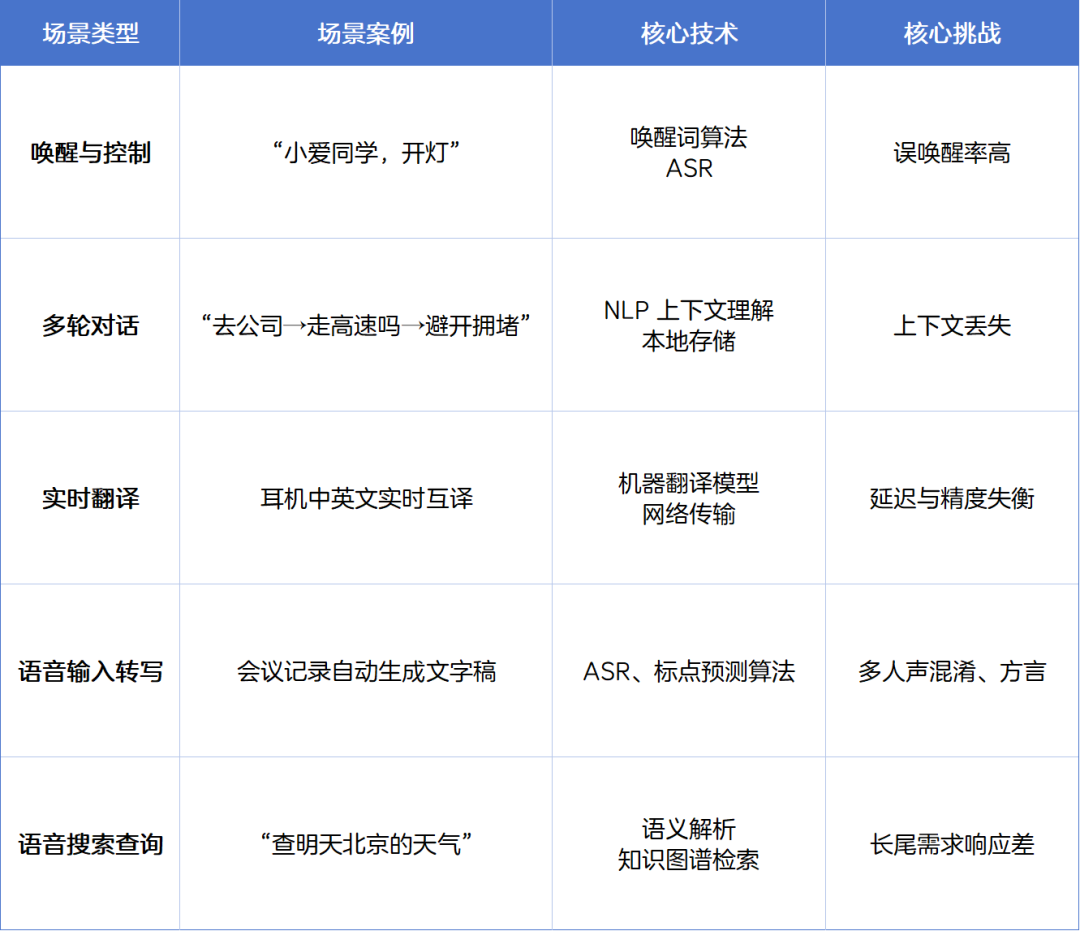

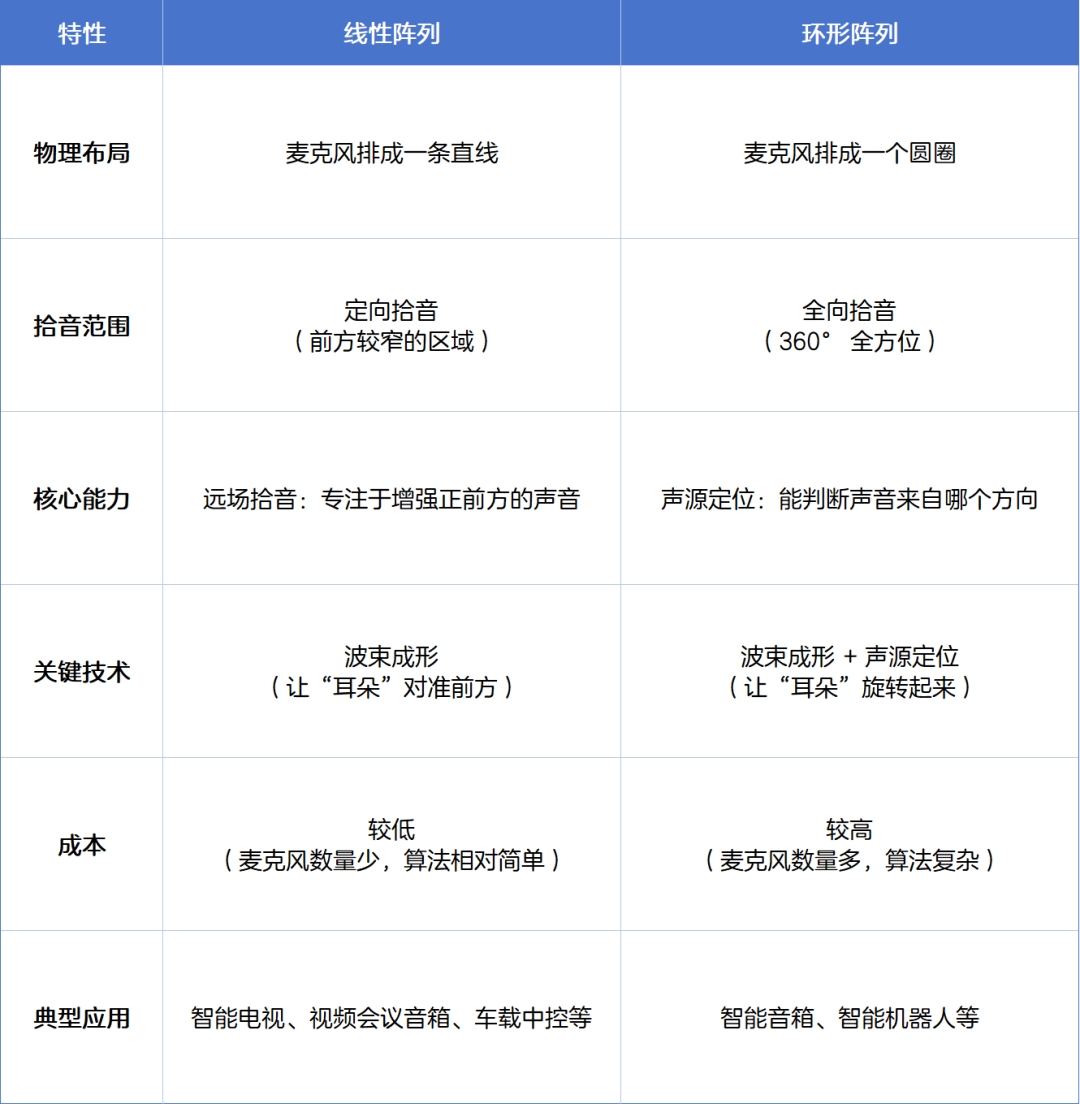

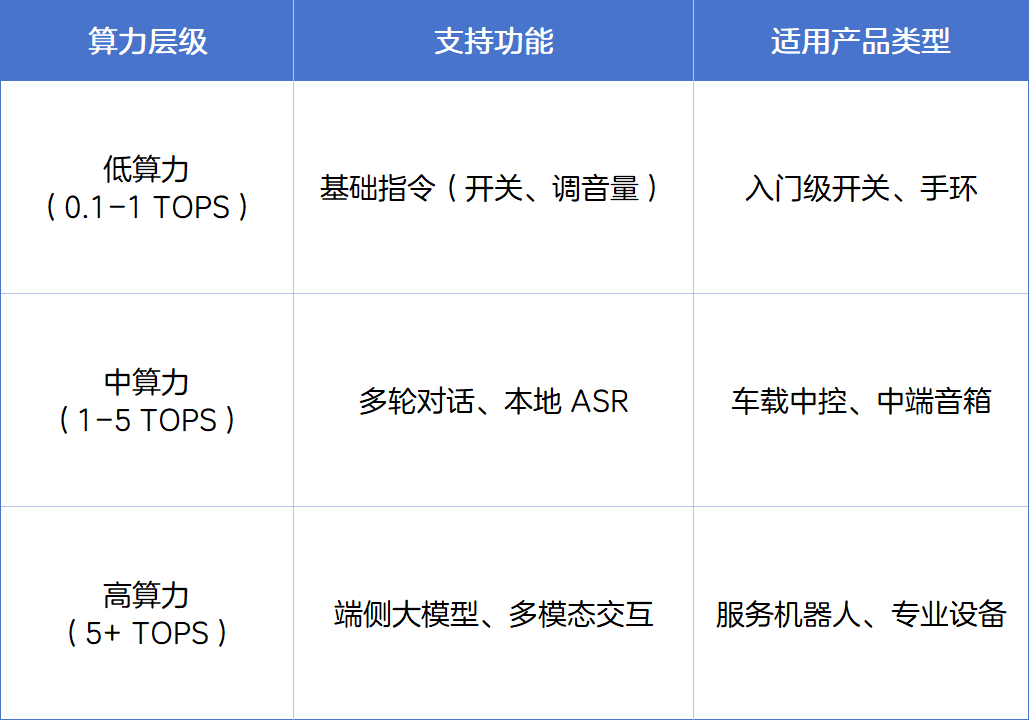

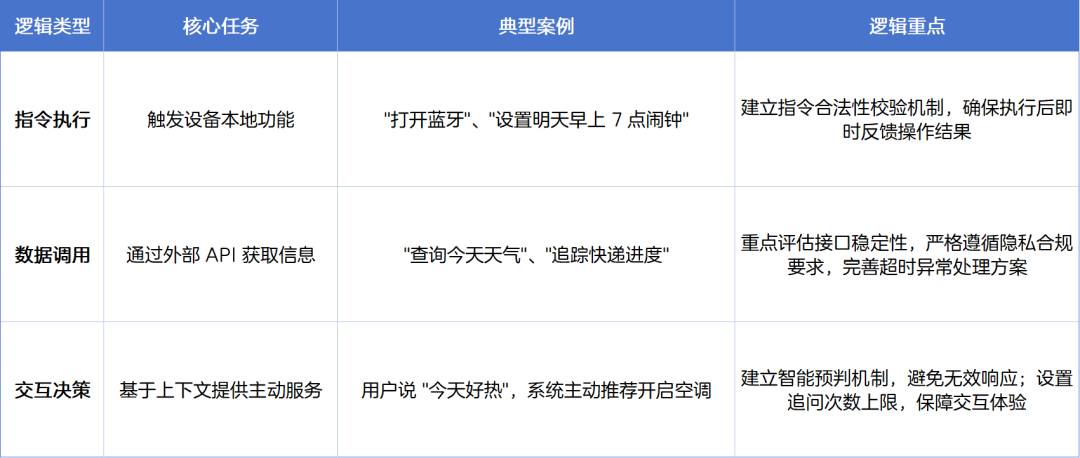

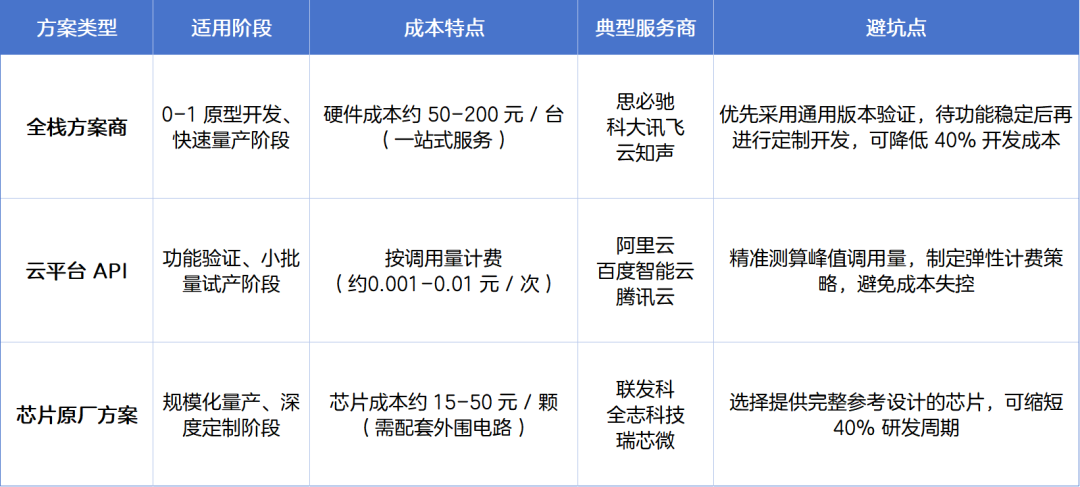

> How can voice interaction become more than just a 'gimmick'? This article systematically breaks down the implementation path of voice interaction in smart hardware along three dimensions: speech recognition, semantic understanding, and interaction design, helping hardware PMs build products that truly 'understand' users.

## 1. Core Concepts of Voice Interaction

Voice interaction is the process by which humans and hardware devices communicate through speech signals. It consists of three core components: **Automatic Speech Recognition (ASR), Natural Language Processing (NLP), and Text-to-Speech (TTS)**:

1. **Automatic Speech Recognition (ASR):** Converts human speech into text, serving as the 'input port' of interaction. Its accuracy directly affects every later stage.
2. **Natural Language Processing (NLP):** Parses text semantics to understand user intent, acting as a 'translator' that determines whether the device can 'understand'.
3. **Text-to-Speech (TTS):** Converts device responses into natural speech, serving as the 'output port' that affects how comfortably users receive information.

These three components work closely together, and a weakness in any one of them degrades the overall experience. For example, ASR errors lead to NLP misinterpretations, and stiff TTS synthesis reduces user acceptance.

## 2. Technical Application Scenarios

### Classic Core Scenarios

### Emerging Scenarios (Fast-Growing Fields from 2023-2025)

## 3. Core Hardware Components

Voice interaction hardware revolves around the **'capture – process – respond'** workflow and primarily consists of four categories:

1. **Microphone Array** (Sound Capture)
2. **Main Chip** (Data Processing)
3. **Network Module** (Data Transmission)
4. **Auxiliary Acoustic Components** (e.g., Noise-Canceling Microphones, Speakers)

### Microphone Array: The 'Ears' for Sound Capture

**Microphone Array**

A microphone array is a set of multiple microphones arranged in a specific pattern to enhance sound capture. In theory, more microphones enable more precise sound source localization and noise suppression, but in many cases a single microphone (1-mic) meets basic needs.

**Sound Capture**

Sound capture is the process of picking up and collecting external sound signals, the first step in voice interaction. Capture quality directly affects speech recognition accuracy: just as humans struggle to hear in noisy environments, poor capture leads to devices 'misunderstanding' commands.

**Layout Types**

Microphone arrays come in two layouts: linear and circular.

**Key Note:** Noisier environments call for capture hardware with a higher signal-to-noise ratio (SNR) (≥60dB for factories, ≥50dB for homes), which increases hardware costs.

### Main Chip: The 'Brain' for Data Processing

**Main Chip**

The main chip is the device's 'central processor'. It runs algorithms such as speech recognition and semantic understanding, processing sound data from the microphone array. Its **computing power** (measured in TOPS, or trillions of operations per second) determines response speed, much as human reaction time affects how fluent a conversation feels.

**Computing Power Tiers**

**Selection Logic**

Higher computing power correlates with higher costs. Entry-level products don’t need excessive power (to avoid cost waste), while high-end products should reserve computing headroom for future algorithm upgrades.

### Network Module: The 'Nerves' for Data Transmission

The network module handles data transfer between the device and the cloud or other devices. Some voice commands (e.g., complex queries, real-time translation) rely on cloud processing, so network stability directly affects response speed.

- **WiFi:** Ideal for stationary devices (stable but router-dependent); watch for dead zones.
- **Bluetooth:** Suited for low-power devices (long battery life but slow transfer); limited to occasional wake-up scenarios.
- **4G:** Best for mobile devices (portable but costly); requires SIM card and data plan budgeting.

## 4. Algorithms in Voice Interaction

Algorithms are the core engine for **'understanding – interpreting – responding'** in voice interaction: converting speech signals to text, analyzing intent, and generating execution commands. Their performance determines the 'intelligence' of interaction, and precision/efficiency require systematic training and optimization.

### Data & Cost Planning: The 'Fuel Supply' for Algorithm Training

Model performance heavily depends on data quality and scale, requiring diverse scenarios and user demographics:

- **Data Scale:** Over 100,000 labeled samples, covering varied ages, accents, and environments (e.g., home noise, outdoor interference).
- **Cost Allocation:** Data collection and labeling account for **20%-30%** of total project budget.
- **Timeline:** **3-6 months** total; plan budgets and schedules early, defining data scope and labeling standards to avoid delays or underperformance.

### Core Metrics: Algorithm Performance Benchmarks

Training aims for 'three ups, one down', quantifiable targets balancing feasibility and high-level goals:

### On-Device Optimization: Balancing Algorithms and Hardware

Deploying algorithms to devices requires resolving the conflict between 'limited computing power' and 'performance demands.' Model compression suits low-end hardware but incurs ~**5% accuracy loss**; trade-offs vary by product:

**Low-End Devices (e.g., Entry-Level Smart Speakers)**

- Priority: Battery Life > Fluency > Accuracy
- Strategy: Use lightweight models, accept minor accuracy loss, prioritize battery targets (e.g., days of standby).

**High-End Devices (e.g., Premium Car Systems)**

- Priority: Accuracy > Speed > Battery Life
- Strategy: Leverage spare computing power, retain complex models, and prioritize precision and instant response while meeting basic battery needs.

## 5. Post-Recognition Logic

After speech is converted to text, the 'processing logic' determines 'what to do and how', bridging 'intent understanding' and 'final response.' The design must be sound and stable to avoid cases where a command is 'understood but executed wrong.' Common logic types:

## 6. Three Mainstream Voice Solutions

Solution selection is critical for project cost and timeline. The options are compared across stages, costs, and risks:

## Conclusion

The key to voice interaction hardware isn't 'how advanced the tech is' but 'how precise the decisions are.' PMs must align with user needs, balancing scenarios, hardware, algorithms, and costs to move products from 'usable' to 'delightful.'
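
As a closing illustration, the chain described in Sections 1 and 5 (ASR text in, intent parsing, post-recognition logic, reply text out to TTS) can be sketched in a few lines of Python. This is only a minimal sketch under stated assumptions: the ASR and TTS stages are stubbed out, and the names `AsrResult`, `parse_intent`, `respond`, the keyword table, and the 0.6 confidence cutoff are all hypothetical and do not come from any specific product or SDK.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical cutoff: below this ASR confidence the device asks the user to repeat.
MIN_ASR_CONFIDENCE = 0.6


@dataclass
class AsrResult:
    """Output of a (stubbed) ASR stage: recognized text plus a confidence score."""
    text: str
    confidence: float  # 0.0 to 1.0


# Illustrative keyword table standing in for the NLP stage.
# A real product would typically use a trained intent model instead.
INTENT_KEYWORDS = {
    "turn_on_light": ("turn on", "light"),
    "set_timer": ("set", "timer"),
}

# Reply text per intent; in a real device this string would be handed to TTS.
REPLIES = {
    "turn_on_light": "Okay, turning on the light.",
    "set_timer": "Okay, setting a timer.",
}


def parse_intent(text: str) -> Optional[str]:
    """Return the first intent whose keywords all appear in the text, else None."""
    lowered = text.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if all(keyword in lowered for keyword in keywords):
            return intent
    return None


def respond(asr: AsrResult) -> str:
    """Post-recognition logic: decide what to say based on confidence and intent."""
    if asr.confidence < MIN_ASR_CONFIDENCE:
        # Weak ASR result: re-prompt instead of risking a wrong action.
        return "Sorry, I didn't catch that. Could you repeat it?"
    intent = parse_intent(asr.text)
    if intent is None:
        # Text was recognized but no supported intent matched.
        return "Sorry, I can't help with that yet."
    return REPLIES[intent]


if __name__ == "__main__":
    print(respond(AsrResult("Turn on the light", 0.92)))
    print(respond(AsrResult("Turn on the light", 0.30)))
    print(respond(AsrResult("Tell me a story", 0.95)))
```

The two fallback branches show why a weakness in one stage affects the whole experience: a low-confidence ASR result triggers a re-prompt rather than an action, which is one simple way to reduce 'understood but executed wrong' cases.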