ChatGPT is Getting Stronger, Will OpenAI Surpass Apple?

"Today, approximately 2 million developers are using our API for various use cases. Over 92% of Fortune 500 companies are building with our products, and ChatGPT now has around 100 million weekly active users."

At OpenAI's first developer conference on November 7th Beijing time, Sam Altman announced the impressive achievements of OpenAI over the past year.

As rumored before the event, the conference introduced GPT-4 Turbo, the latest model behind ChatGPT, along with numerous new features. More importantly, OpenAI announced plans to build an AI platform around GPT, introducing the concept of GPTs and a forthcoming GPT Store. Users can now create their own GPTs using the GPT Builder tool.

Ever since ChatGPT was likened to the "iPhone moment" for AI, Sam Altman seems to have had his sights set on Apple. His ambitions go further still: he aims not only to make ChatGPT the next iOS but also to strengthen user engagement by allowing every user to become a developer.

The Big Reveal: GPT-4 Turbo

The updates to the LLM (Large Language Model) include:

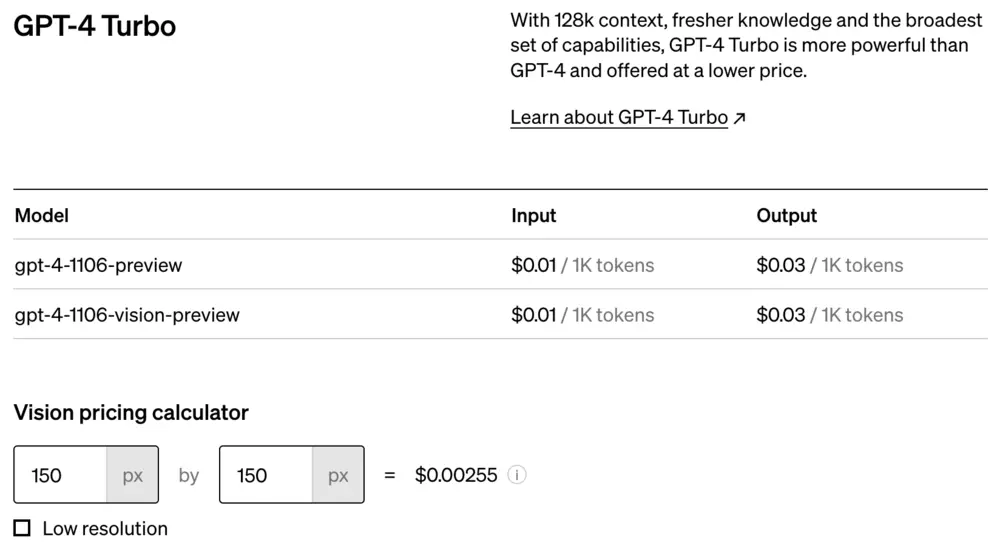

- The latest GPT-4 Turbo, supporting a 128k context window, with lower costs and faster output.

- A new Assistants API, making it easier for developers to build their own AI applications.

- New multimodal capabilities, including vision, image creation (DALL·E 3), and text-to-speech (TTS).

Long Context

Long context has become the "main battlefield" in the competition among AI models. Compared with many newer models, GPT-4's 32k-token context window no longer stands out. The updated GPT-4 Turbo features a 128k-token context window, four times that of GPT-4 and larger than the 100k tokens offered by Anthropic's Claude 2.

Sam Altman noted that 128k tokens are roughly equivalent to 300 pages of text, about the length of the UK edition of Harry Potter and the Philosopher's Stone. For comparison, each book in The Lord of the Rings trilogy averages around 400 pages.

However, GPT-4 Turbo's context length is not the longest. A week earlier, Baichuan Intelligent released Baichuan2-192K, with a context window of 192k tokens, capable of processing approximately 350,000 Chinese characters.
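The figures quoted in this section can be checked with some quick arithmetic. The sketch below is only a back-of-the-envelope calculation: it assumes "k" means 1,000 tokens, and the per-page and per-token ratios are derived from the article's rounded numbers rather than measured with a tokenizer.

```python
# Back-of-the-envelope context-window arithmetic using the article's figures.
# Assumption: "k" means 1,000 tokens (not 1,024).
K = 1_000

gpt4_window = 32 * K
gpt4_turbo_window = 128 * K
claude2_window = 100 * K
baichuan2_window = 192 * K

# How the windows compare with one another.
turbo_vs_gpt4 = gpt4_turbo_window / gpt4_window
turbo_vs_claude2 = gpt4_turbo_window / claude2_window
baichuan_vs_turbo = baichuan2_window / gpt4_turbo_window

# Ratios implied by "128k tokens is about 300 pages" and
# "192k tokens is about 350,000 Chinese characters".
tokens_per_page = gpt4_turbo_window / 300
chars_per_token = 350_000 / baichuan2_window

print(f"GPT-4 Turbo vs GPT-4:         {turbo_vs_gpt4:.2f}x")
print(f"GPT-4 Turbo vs Claude 2:      {turbo_vs_claude2:.2f}x")
print(f"Baichuan2-192K vs GPT-4 Turbo: {baichuan_vs_turbo:.2f}x")
print(f"Implied tokens per page:       {tokens_per_page:.0f}")
print(f"Implied Chinese chars/token:   {chars_per_token:.2f}")
```

By these numbers, a page works out to a little over 400 tokens, and Baichuan2-192K's window is one and a half times the size of GPT-4 Turbo's.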

Developer Assistant

In this update, OpenAI introduced the Assistants API, enabling developers to integrate AI agent-like experiences into their applications.

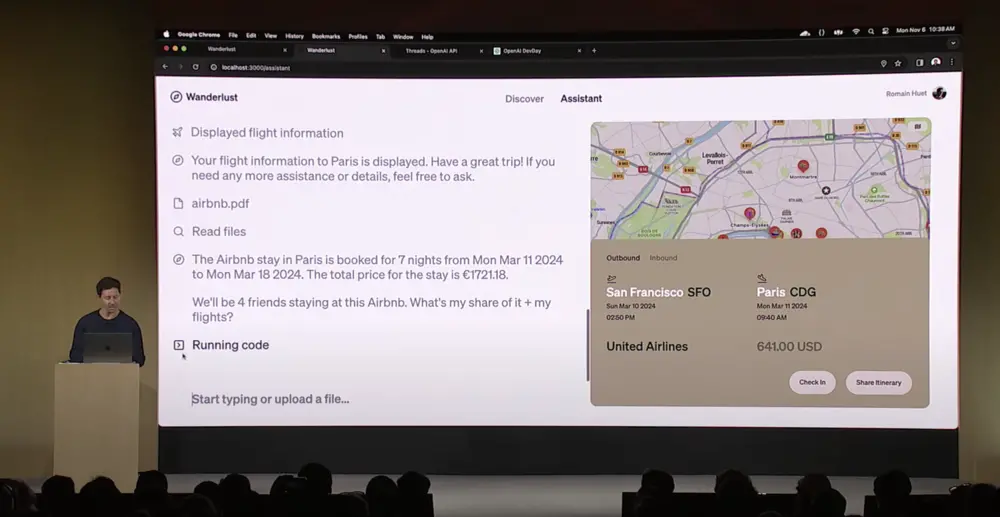

This API allows AI assistants to perform specific tasks, call models and tools, and handle complex programming and data processing. It supports various use cases, such as natural language data analysis, coding assistance, and travel planning.

The Assistants API features persistent threads, which simplify state management, and it can call developer-defined functions. It also includes a code interpreter for running code and a retrieval tool for pulling information from external data. Developers retain control over the data they pass to the API. The Assistants API playground also allows testing without writing any code.
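To make the pieces above more concrete, here is a minimal sketch of what an assistant definition might look like as a JSON request body. It only builds and prints the payload and does not call the network. The tool type names and the model identifier reflect the API as it was documented at launch and should be treated as assumptions; the `get_flight_status` function and the `build_assistant_request` helper are hypothetical and exist only for illustration.

```python
import json

# Hypothetical developer-defined function that the assistant may ask the
# application to run. The schema follows the JSON Schema style used for
# function calling.
get_flight_status_tool = {
    "type": "function",
    "function": {
        "name": "get_flight_status",  # hypothetical name
        "description": "Look up the status of a flight by its number.",
        "parameters": {
            "type": "object",
            "properties": {"flight_number": {"type": "string"}},
            "required": ["flight_number"],
        },
    },
}


def build_assistant_request(name, instructions, model="gpt-4-1106-preview"):
    """Build the request body for creating an assistant (illustrative helper).

    Assumption: the model identifier and the tool type names match the
    launch-time documentation; check the current API reference before use.
    """
    return {
        "model": model,
        "name": name,
        "instructions": instructions,
        "tools": [
            {"type": "code_interpreter"},  # lets the assistant run code
            {"type": "retrieval"},         # lets it search uploaded files
            get_flight_status_tool,        # developer-defined function
        ],
    }


assistant_request = build_assistant_request(
    name="Travel planner",
    instructions="Help the user plan trips and answer itinerary questions.",
)
print(json.dumps(assistant_request, indent=2))
```

In a real application this body would be sent to the API, and conversation state would then live in a persistent thread rather than being resent with every request.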

Additionally, OpenAI updated function calling, enabling models to call multiple functions in a single message and return accurate function parameters. GPT-4 Turbo performs better in generating specific formats and supports a new JSON mode to ensure valid JSON output. The new `response_format` API parameter ensures syntactically correct JSON. The `seed` parameter enables reproducible outputs, aiding debugging and unit testing. OpenAI also plans to introduce token log probabilities for features like autocomplete.

Knowledge Update

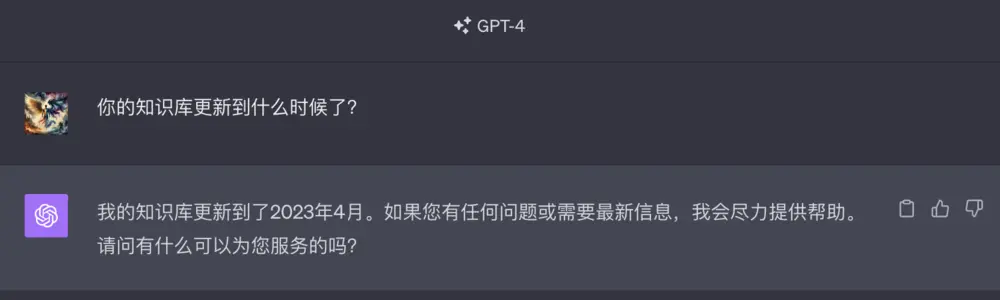

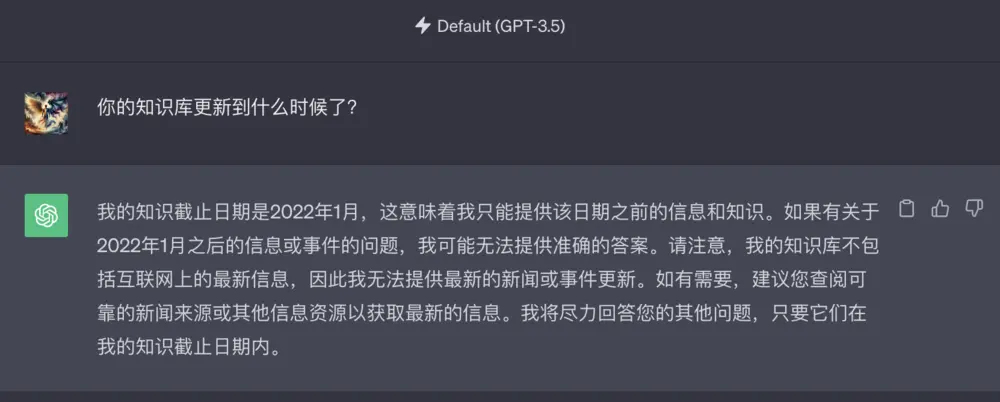

GPT-4 Turbo's knowledge has been updated to April 2023. The GPT-4 model currently used in ChatGPT reflects this update, while GPT-3.5's knowledge remains as of January 2022.

New Multimodal Model

In terms of multimodal capabilities, the API now offers GPT-4 Turbo with vision, DALL·E 3, and a text-to-speech (TTS) model. Developers can call GPT-4 Turbo with vision for image recognition and DALL·E 3 for image generation directly via the API. In fact, since the launch of DALL·E 3, ChatGPT Plus users have been able to use it within GPT-4 for image creation, alongside multimodal image recognition. This time, however, the multimodal capabilities are being made available to developers in the form of an API.

Additionally, OpenAI has introduced a new TTS (text-to-speech) feature. Similar capabilities have been available in the ChatGPT mobile app for some time (though in our testing its Chinese speech still carried a slight English accent), and now the API is being opened to developers. Currently, the TTS API offers six preset voices and can output in Opus, AAC, and FLAC formats, though custom voices are not yet supported.
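As a rough illustration of the options just described, the sketch below builds a text-to-speech request body and validates the voice and output format before anything would be sent. It does not call the API. The six voice names and the `tts-1` model identifier are taken from the launch-time documentation and should be treated as assumptions, and `build_speech_request` is a hypothetical helper.

```python
import json

# Assumption: these are the six preset voices offered at launch.
VOICES = {"alloy", "echo", "fable", "onyx", "nova", "shimmer"}
# Output formats mentioned in the article.
FORMATS = {"opus", "aac", "flac"}


def build_speech_request(text, voice="alloy", audio_format="opus", model="tts-1"):
    """Build a TTS request body, rejecting unsupported options early.

    Hypothetical helper: the field names mirror the launch-time API
    documentation, but verify them against the current reference.
    """
    if voice not in VOICES:
        raise ValueError(f"unsupported voice: {voice!r}")
    if audio_format not in FORMATS:
        raise ValueError(f"unsupported format: {audio_format!r}")
    return {
        "model": model,
        "input": text,
        "voice": voice,
        "response_format": audio_format,
    }


speech_request = build_speech_request("Welcome to DevDay.", voice="nova", audio_format="flac")
print(json.dumps(speech_request, indent=2))
```

Validating locally keeps an unsupported option, such as a custom voice, from turning into a failed request.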

Lower Prices, Faster Output

"As the model continues to iterate, our prices are also decreasing," Sam Altman explained. Compared to GPT-4, GPT-4 Turbo's input cost is only one-third, at $0.01 per 1,000 tokens, while the output cost is $0.03 per 1,000 tokens, half the original price. Content generation speed has also doubled.
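The price difference is easiest to see on a concrete request. The following sketch compares the cost of one call under both price lists. The GPT-4 Turbo prices are the ones quoted above; the GPT-4 prices are inferred from "one-third" and "half", and the dictionary keys and the 10,000-token request are illustrative labels and numbers, not official identifiers.

```python
# Prices in USD per 1,000 tokens.
# GPT-4 Turbo: quoted in the article.
# GPT-4: inferred from "one-third" (input) and "half" (output).
PRICES_PER_1K = {
    "gpt-4-turbo": {"input": 0.01, "output": 0.03},
    "gpt-4": {"input": 0.03, "output": 0.06},
}


def request_cost(model, input_tokens, output_tokens):
    """Return the cost in USD of a single request."""
    price = PRICES_PER_1K[model]
    return (input_tokens / 1000) * price["input"] + (output_tokens / 1000) * price["output"]


# Illustrative request: 10,000 input tokens and 2,000 output tokens.
turbo_cost = request_cost("gpt-4-turbo", 10_000, 2_000)
gpt4_cost = request_cost("gpt-4", 10_000, 2_000)

print(f"GPT-4 Turbo: ${turbo_cost:.2f}")
print(f"GPT-4:       ${gpt4_cost:.2f}")
print(f"Savings:     {1 - turbo_cost / gpt4_cost:.0%}")
```

For this input-heavy request, GPT-4 Turbo comes to $0.16 against $0.42 for GPT-4, a saving of a little over 60%. The more a workload leans toward input tokens, the closer the saving gets to two-thirds.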

Another iPhone Moment: GPT Store

When ChatGPT plugins were launched, many compared them to the Android Market or iOS's App Store. Now, OpenAI has truly introduced an AI version of the App Store—GPT Store.

Users can directly create custom versions of ChatGPT. During the developer conference, two OpenAI staff demonstrated how to build a GPT.

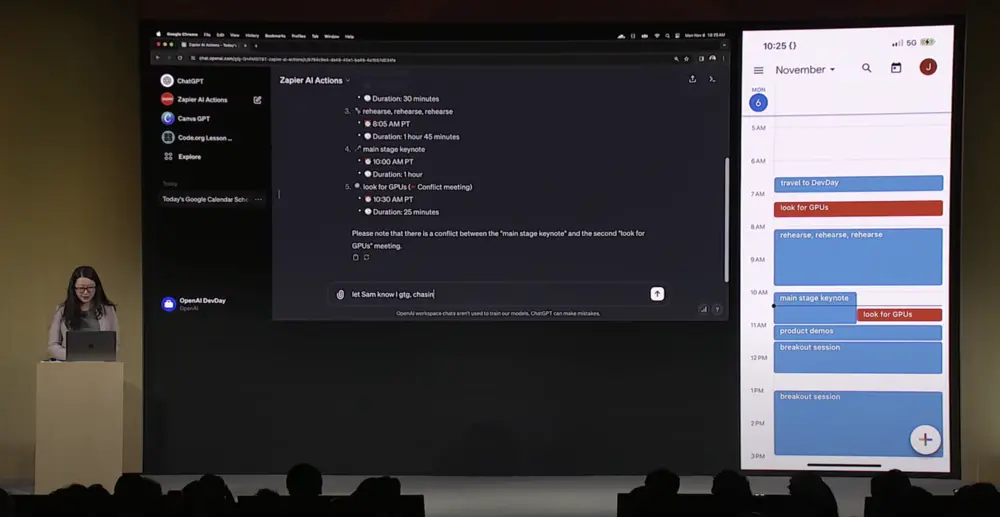

OpenAI staff member Jessica Shieh demonstrated using GPT Builder to create a GPT that interacts with a travel itinerary. Through a conversational interface, she instructed the GPT to send a message to Sam Altman, who received it live on stage.

A more professional demonstration by OpenAI staff showcased the use of the Assistants API: it not only lists travel suggestions for Paris but also marks the mentioned locations on a map by category.

Sam Altman announced that OpenAI will launch the GPT Store by the end of the month, where developers can share and publish their GPTs. Additionally, OpenAI will share revenue generated from the GPT Store with developers, though the specific distribution plan has not yet been disclosed.

About Money and Microsoft

During the live Assistants API demonstration, OpenAI staff conducted a lottery, first awarding five engineers $500 each in developer credits, followed by distributing $500 credits to each of the 900+ attending developers and guests.

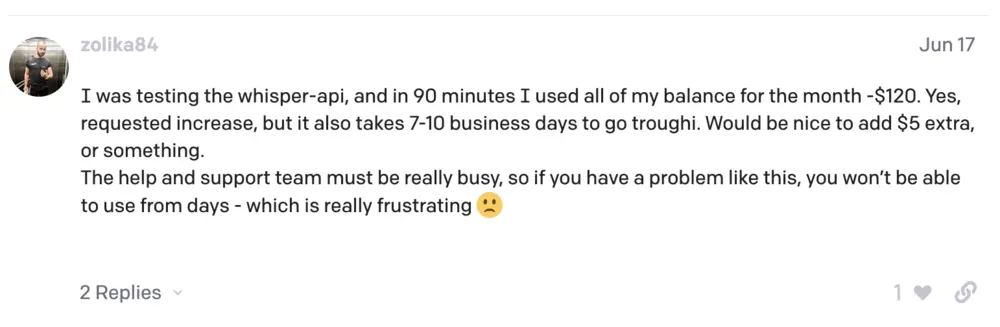

While this may seem generous, the credits are quickly consumed. Many developers have noted that OpenAI's $120 trial credits are exhausted rapidly. For example, developer zolika84 used up $120 in credits within 90 minutes while testing the Whisper API for speech recognition.

Over the past six months, OpenAI's valuation has soared, with total funding exceeding $14 billion, but its spending is equally staggering. High computing and R&D costs, along with potential legal expenses, are major financial burdens.

At the event, OpenAI introduced the Copyright Shield feature to protect users from copyright issues, offering both technical safeguards and legal support. However, OpenAI faces ongoing copyright disputes with U.S. publishers, requiring substantial financial backing for future commercialization.

Regarding finances, Microsoft remains OpenAI's key backer. Despite rumors of discord, Sam Altman invited only Microsoft CEO Satya Nadella as a guest speaker, seemingly reaffirming their strong partnership.

During the speech, Sam Altman asked Satya Nadella directly: "How is the partnership between Microsoft and OpenAI going?"

Satya Nadella humorously replied, "In fact, I remember when you first reached out to me and said, 'Hey, do you have any Azure credits?' We've come a long way since then."

Microsoft has always been an infrastructure provider, earning revenue from infrastructure. Satya Nadella repeatedly emphasized, "Our top priority is to build the best systems so you can build the best models, and then make them all available to developers."

However, Satya Nadella did not deny the research work of Microsoft's development team in AI, stating, "We are also developers ourselves, and we are building products."