Post-00s Chinese Entrepreneurs Launch Large Model Startup, Securing Angel Investment from Top Silicon Valley Founders

Two Chinese entrepreneurs leading a seven-person team to launch a large model startup?

Yes. Cortex is one such project, and it has reportedly secured angel investment from Zoom-affiliated entities, the Getty family, and Kuaishou-affiliated investors.

Cortex is middleware that integrates multiple large model APIs so that large models can understand their users better.

It has two main features:

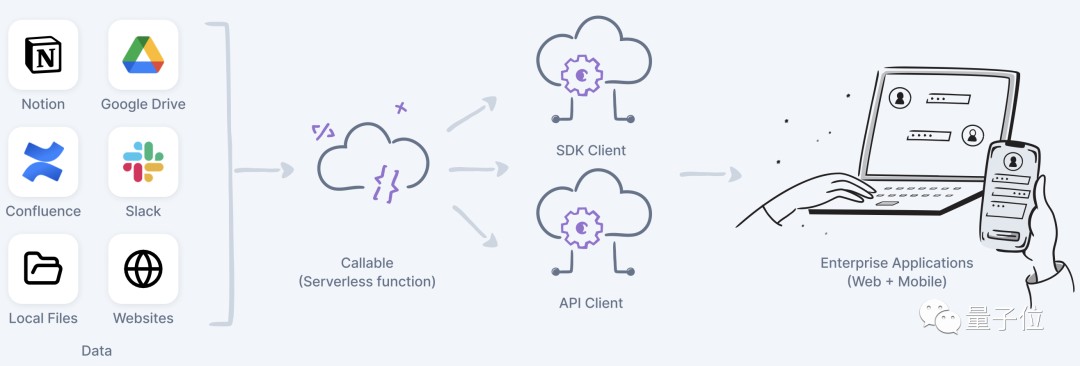

- Connecting to private data sources such as Notion, Slack, and Google Drive to create a private, domain-specific version of GPT.

- Building personalized Copilots tailored to each employee within an enterprise.

This tool has already gained some traction abroad, with over 10 paying customers and thousands of individual users. A popular SaaS company has even adopted Cortex to enable influencers to engage with fans in specific personas.

Large model middleware is foundational software that sits between the underlying large models and upper-layer applications. It mainly addresses the challenges of deploying large models, such as integrating data, applications, and knowledge bases, and coordinating multiple models.

It is particularly suited to teams facing a dilemma in the large model space:

- Building a general-purpose large model is expensive and complex.

- Training a more vertical industry-specific model or simply using others' APIs may not suffice.

This is where middleware steps in with solutions.

As the large model field matures, middleware startups like Cortex are emerging. So what sets Cortex apart enough to attract top Silicon Valley founders?

Cortex lets developers combine multiple large models, such as GPT-4, so that different language models can work together within one application.

In essence, it functions as an orchestrator for the application layer of large models.

Its primary goal is to improve the 'scalability, accessibility, and efficiency' of development for technical staff.

The name 'Cortex' refers to the cerebral cortex.

If the brain, as the neural center, is analogous to large models, then the cortex is the structure layered on top of it.

Its core capability is dynamically invoking different large models and constraining their output to fixed formats. This helps users handle tasks that a single model cannot, or that would otherwise require cumbersome calls to multiple model APIs.
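The article does not say how Cortex routes requests or enforces formats. As a minimal sketch under stated assumptions, the Python below maps a task type to an ordered list of candidate models and accepts the first reply that parses as a JSON object containing the required keys, falling back to the next model otherwise. The functions `fake_chatty_model` and `fake_summarizer` and the `ROUTES` table are hypothetical stubs standing in for real model API calls.

```python
import json
from typing import Callable, Dict, List

# A model client takes a prompt and returns raw text (stubbed below).
ModelFn = Callable[[str], str]

def fake_summarizer(prompt: str) -> str:
    # Hypothetical model that follows the format instruction.
    return json.dumps({"summary": "Q3 revenue grew.", "confidence": 0.8})

def fake_chatty_model(prompt: str) -> str:
    # Hypothetical model that ignores the format instruction.
    return "Sure! Here is a summary: Q3 revenue grew."

def call_with_format(models: List[ModelFn], prompt: str, required_keys: set) -> dict:
    """Try each model in order; return the first reply that is a JSON object
    containing every required key."""
    instruction = (
        prompt
        + "\nRespond only with a JSON object with keys: "
        + ", ".join(sorted(required_keys))
    )
    for model in models:
        raw = model(instruction)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue  # reply broke the fixed format; try the next model
        if isinstance(data, dict) and required_keys.issubset(data):
            return data
    raise ValueError("no model produced output in the required format")

# Hypothetical routing table: task type -> ordered candidate models.
ROUTES: Dict[str, List[ModelFn]] = {
    "summarize": [fake_chatty_model, fake_summarizer],
}

def run_task(task: str, prompt: str, required_keys: set) -> dict:
    return call_with_format(ROUTES[task], prompt, required_keys)

result = run_task("summarize", "Summarize the meeting notes.", {"summary", "confidence"})
print(result["summary"])
```

Checking output against a fixed format, rather than trusting free-form text, is what lets a downstream application treat the result as structured data; a production system would more likely use a full schema validator than a key check.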

Specifically, its first functionality is domain specialization: as a tool that integrates numerous APIs, Cortex connects to private databases and turns a general model into a precise, domain-specific large model.

From this perspective, Cortex adopts a strategy that is both 'broad' and 'deep.'

'Broad' means Cortex does not compete with individual industry services but can be applied across various fields to develop large model applications.

'Deep' refers to building upon the general knowledge of large models by integrating private databases to enhance understanding of specific domains or enterprises.

To make these domain-specific models more practical, Cortex uses methods such as vector retrieval, real-time web search, and calls to designated APIs.
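A common way to use vector retrieval is retrieval-augmented generation: embed the private documents, find the ones closest to the query, and pass them to the model as context. The sketch below is a deliberately simplified, hypothetical illustration, not Cortex's implementation. Word counts stand in for a real embedding model, cosine similarity ranks the documents, and `private_docs` is invented sample data.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def top_k(query: str, docs: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Rank documents by similarity to the query and keep the best k."""
    q = embed(query)
    scored = [(cosine(q, embed(d)), d) for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]

def build_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Put the retrieved passages into the prompt as grounding context."""
    context = "\n".join(f"- {d}" for _, d in top_k(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

# Hypothetical private documents.
private_docs = [
    "Refunds are accepted within 30 days of purchase.",
    "The Q3 offsite is scheduled in Lisbon.",
    "Support tickets are answered within 24 hours.",
]
print(build_prompt("How many days do I have to request refunds?", private_docs, k=1))
```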

Cortex's second functionality is enabling individuals to create a personal copilot.

Cortex can handle basic tasks such as drafting documents, creating PowerPoint presentations, writing emails, summarizing meetings, and checking online shopping return policies.

Furthermore, within the same company, Cortex can extract from the same raw information the key points each role needs and deliver them in role-specific formats. In effect, it provides 'a thousand faces for a thousand roles' across different business departments.
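The article does not describe how this per-role tailoring is implemented. As a rough illustration only, the sketch below pairs each role with a hypothetical set of topics and an output template; simple keyword matching stands in for the model-based extraction a real system would likely use. The roles, keywords, and templates in `ROLE_VIEWS` are invented for the example.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class RoleView:
    keywords: List[str]  # topics this role cares about (hypothetical)
    template: str        # output format for this role (hypothetical)

ROLE_VIEWS: Dict[str, RoleView] = {
    "sales": RoleView(["customer", "deal"], "Sales brief:\n{points}"),
    "engineering": RoleView(["bug", "release"], "Eng notes:\n{points}"),
}

def view_for(role: str, raw_lines: List[str]) -> str:
    """Keep only the lines relevant to this role and render them in its format."""
    view = ROLE_VIEWS[role]
    points = [line for line in raw_lines
              if any(k in line.lower() for k in view.keywords)]
    bullet_text = "\n".join(f"* {p}" for p in points) or "* (nothing relevant)"
    return view.template.format(points=bullet_text)

# The same raw meeting notes, viewed by two different roles.
meeting = [
    "Customer Acme wants a pilot.",
    "Release 2.1 slips one week because of a login bug.",
    "Deal with Beta Corp closed.",
]
print(view_for("sales", meeting))
print(view_for("engineering", meeting))
```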

Cortex's pricing is reportedly based on actual usage.

In its billing and service model, Cortex can be likened to a 'cloud provider' that connects databases and large models: by aggregating various API resources, it keeps all of its functionality running and provides the related services.

This is why Cortex is quite popular among developers. It primarily targets developers and IT professionals with some technical background, letting them get started quickly, greatly reducing debugging work, and freeing up time for more creative tasks.
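To make the 'cloud provider' comparison concrete, the following hypothetical sketch shows one way a gateway could aggregate several model providers: it fails over to the next provider when one is unavailable and meters tokens per customer for usage-based billing. The article does not disclose Cortex's prices or billing units, so `price_per_1k_tokens`, the stub providers `flaky` and `healthy`, and their token counts are all illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

class ProviderDown(Exception):
    """Raised by a (hypothetical) provider that is currently unavailable."""

# A provider takes a prompt and returns (reply_text, tokens_used).
Provider = Callable[[str], Tuple[str, int]]

@dataclass
class Gateway:
    providers: List[Provider]  # tried in order
    usage: Dict[str, int] = field(default_factory=dict)  # customer -> tokens used

    def complete(self, customer: str, prompt: str) -> str:
        for provider in self.providers:
            try:
                reply, tokens = provider(prompt)
            except ProviderDown:
                continue  # fail over to the next provider
            # Meter only successful calls, since billing follows actual usage.
            self.usage[customer] = self.usage.get(customer, 0) + tokens
            return reply
        raise ProviderDown("all providers unavailable")

    def bill(self, customer: str, price_per_1k_tokens: float) -> float:
        return self.usage.get(customer, 0) / 1000 * price_per_1k_tokens

# Stub providers for illustration only.
def flaky(prompt: str) -> Tuple[str, int]:
    raise ProviderDown("timeout")

def healthy(prompt: str) -> Tuple[str, int]:
    return "ok", len(prompt.split()) + 10  # pretend token count

gateway = Gateway([flaky, healthy])
print(gateway.complete("acme", "summarize this meeting please"))
print(gateway.usage)
```

Putting failover and metering in the same layer means the application code never needs to know which provider actually answered.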

Why build middleware for large models at all? To find out, Quantum Bit reached out to the team behind Cortex.

The team said they believe the future will be a multimodal, multi-model world, in which even a model as powerful as GPT-4 cannot solve every problem on its own.

They argue that 'only by connecting multiple models can a true AI application be organized.'

When the core team first tried to build this functionality with LangChain, it became increasingly difficult. In their view, the AI field still lacks middleware that bridges the gap between the model layer and the application layer.

Nemo Yang, a founding member of the Cortex team (and CEO of the company behind it), told Quantum Bit that existing platforms and tools cannot adequately realize this idea.

The team initially explored other directions. As they learned more about the field, however, they heard more and more from users that although large models are useful, integrating a new API for every powerful model is cumbersome, and keeping large model output under control is difficult.

Guided by this feedback, the team gradually refined Cortex into what it is today.

Cortex appears to have been shaped largely by user feedback on the current market. In conversations with Quantum Bit, Nemo repeatedly used phrases like "users say" and "market feedback" when explaining how that information defined Cortex's product features.

For example, as large models have grown popular, vector databases have come into the spotlight. According to market feedback, when contextually dense articles are split into segments for vector storage, the retrieved passages can lose their surrounding context, so the answers built from them become incoherent or even "nonsensical."

To address this, Cortex uses a vector database developed entirely in-house that can either search globally or return results in segmented portions, as needed.
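The article does not explain how Cortex's in-house vector database works. As a hypothetical illustration of the trade-off it describes, the sketch below splits a document into sentence chunks, finds the best-matching chunk by word overlap (a stand-in for vector similarity), and returns that chunk alone, the chunk plus its neighbors, or the whole document. The `retrieve` function, its `window` and `whole` parameters, and the sample document are invented for this example.

```python
import re
from typing import List

def tokens(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def chunk(sentences: List[str], size: int) -> List[str]:
    """Group consecutive sentences into chunks of `size` sentences."""
    return [" ".join(sentences[i:i + size]) for i in range(0, len(sentences), size)]

def retrieve(query: str, sentences: List[str], size: int = 1,
             window: int = 0, whole: bool = False) -> str:
    if whole:
        return " ".join(sentences)  # "global" mode: return the full document
    chunks = chunk(sentences, size)
    q = tokens(query)
    # Best chunk by word overlap with the query (stand-in for vector similarity).
    best = max(range(len(chunks)), key=lambda i: len(q & tokens(chunks[i])))
    lo, hi = max(0, best - window), min(len(chunks), best + window + 1)
    return " ".join(chunks[lo:hi])  # segmented mode: best chunk plus neighbors

doc = [
    "Our refund policy changed in May.",
    "Refunds used to take 30 days.",
    "It now takes 7 days.",
    "Shipping is free over 50 dollars.",
]
query = "How many days does it take now?"
print(retrieve(query, doc, window=0))
print(retrieve(query, doc, window=1))
```

With `window=0` the result is `It now takes 7 days.`, which leaves "it" unexplained; widening the window brings back the sentence about refunds, at the cost of some unrelated text.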

Another example involves prompt engineering. As the team member with the "strongest language skills," Nemo found that while prompt engineering might seem simple, excelling at it is actually quite challenging for programmers.

Cortex therefore includes a feature designed for developers who struggle to write prompts: colleagues who are skilled at prompt writing (that is, people with strong language skills who can describe requirements precisely) can join the workflow and help Cortex better understand what users need.
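One simple way to let a strong writer own the prompt while developers own the code is to keep the prompt as a template with named fields. This is a hypothetical sketch using Python's standard `string.Template`, not a description of Cortex's feature; the template text, `render` helper, and field names are invented for illustration.

```python
from string import Template

# Written and maintained by a colleague with strong writing skills (hypothetical);
# developers never edit this text, they only supply the $-fields.
SUPPORT_REPLY_PROMPT = Template(
    "You are a polite support agent for $company.\n"
    "Using only the policy below, answer the customer's question in at most $max_sentences sentences.\n"
    "Policy: $policy\n"
    "Question: $question"
)

def render(template: Template, **fields: str) -> str:
    # substitute() raises KeyError if a field is missing,
    # so an omitted requirement is caught before any model call.
    return template.substitute(**fields)

prompt = render(
    SUPPORT_REPLY_PROMPT,
    company="Acme",
    max_sentences="2",
    policy="Refunds are accepted within 30 days.",
    question="Can I return shoes after three weeks?",
)
print(prompt)
```

Because `substitute` raises `KeyError` on a missing field, the developer learns immediately if a required input was left out, while the wording itself stays in the writer's hands.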

What new features can we expect in the future?

Nemo mentioned that Cortex will likely integrate with Slack, Confluence, the Microsoft suite, and the Google suite, among others.

The guiding principle is "Developer first," with the aim of shortening developers' development cycles as much as possible.

Cortex is developed by Kinesys AI, which has seven full-time team members, including two Chinese co-founders. Several team members hold bachelor's or master's degrees from Stanford.

Founder and CEO Nemo Yang, a member of Gen Z, completed his bachelor's and master's degrees at the Georgia Institute of Technology in just two years. With a background in machine learning, he previously worked at ByteDance's Feishu and at Microsoft.

At the age of 14, Nemo moved to Silicon Valley alone to pursue his passion for computers. From high school on, he developed websites and apps and took part in startup projects.

Jian Cai, the co-founder and CTO, graduated from the Computer Science Department of Peking University and worked at Google for 8 years. His previous entrepreneurial project was the online collaborative office document platform 'YiQiXie', which was later acquired by Kuaishou.