Is the Ultimate Destination of AI Large Models a Power Plant?


Forty kilometers north of Seattle, USA, lies the quiet town of Everett. A few years ago, Helion, a small startup focused on controlled nuclear fusion, settled here.

It might sound impressive, but these days it is not particularly rare. MiHoYo, the creator of Genshin Impact, recently invested in a domestic company researching controlled nuclear fusion. Investing in such ventures is like the feudal lords of the Warring States period keeping retainers: even if 99 out of 100 are freeloaders, as long as one is willing to 'die for a confidant,' the money isn’t wasted.

What makes Helion particularly noteworthy is its investors. In November 2021, Helion raised $500 million in a single round, whereas over the previous eight years it had raised less than $80 million in total.

The 'top donor' behind this massive round is Sam Altman, a name well known in tech circles as the co-founder and CEO of OpenAI and the 'father' of ChatGPT. Besides investing in fusion energy, Altman has also backed Oklo, another nuclear energy startup, and chairs its board.

Putting it all together: The world’s top AI leaders are now targeting the energy industry.

AI ventures are not yet halfway through their journey, and already they are running short of power. Today the AI world is divided and America is feeling the strain; this is truly a critical moment for survival...

While Chinese AI companies worry about acquiring advanced GPUs, their American counterparts are grappling with power supply. Experts from the University of Pennsylvania note that in 2018, computers consumed less than 2% of global electricity. Today that figure has risen to 10%, and by 2030 computers could consume anywhere from at least 8% to as much as one-fifth of the world’s electricity.

Data centers alone will account for 2% of global electricity consumption.

2% might seem harmless, right?

But here’s a more alarming perspective:

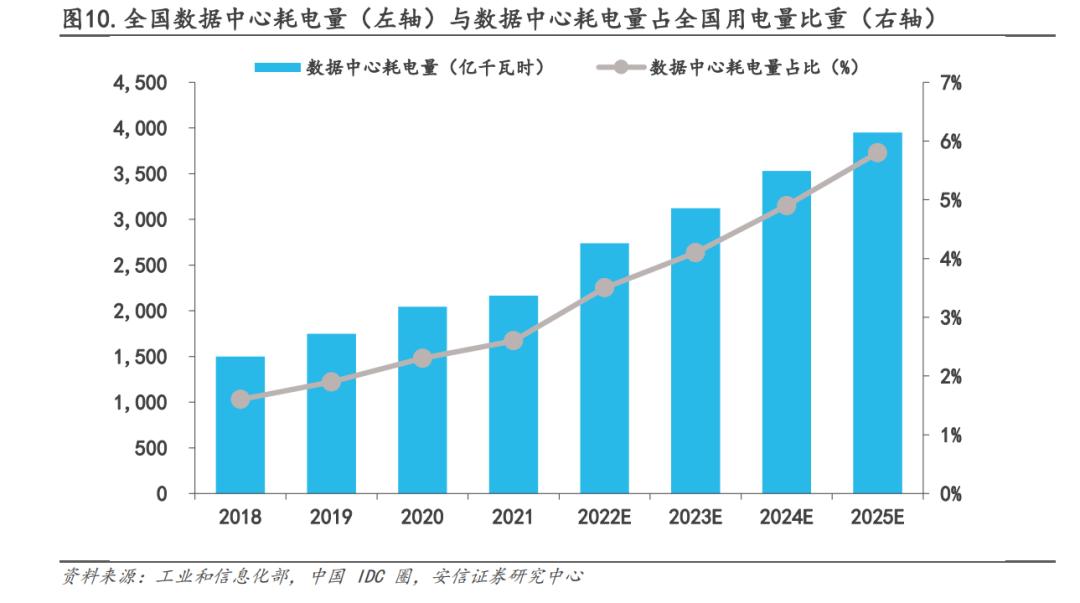

China has 1.4 billion people, with about 1 billion active internet users. We wake up scrolling our phones, binge-watching Douyin, ordering takeout at noon, chatting in group chats in the afternoon, and relying on digital payments for almost everything—yet data centers only account for 2.7% of China’s total electricity consumption.

'Data centers will consume 2% of global electricity' effectively implies the entire world living a hyper-connected life like ours. In reality, even neighboring India still has 700 million people offline.

How many data centers would be needed to support such a vision? And how much electricity would they require?

In absolute terms, the future electricity consumption of global data centers will easily surpass that of many small European countries.

The most extreme prediction I’ve heard is that within a decade, global computing could demand more electricity than the entire world generates.

While the 'hundred-model battle' is fierce now, only a handful will survive in the long run. To emerge victorious, your model needs massive data and training. At current levels, ChatGPT’s monthly electricity consumption equals that of nearly 300,000 Chinese households.

Don’t forget that many data centers also rely on water cooling. Google’s 2023 environmental report shows that the company consumed 5.6 billion gallons (about 21.2 billion liters) of water in 2022, roughly what it takes to irrigate 37 golf courses for a year. Of this, 5.2 billion gallons went to data centers, a 20% increase over 2021.
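To make the water figures easier to check, here is a minimal Python sketch that converts the reported gallons to liters and works out what share went to data centers. It uses only the two totals quoted above plus the standard definition of the US liquid gallon (3.785411784 liters); the helper name `gallons_to_liters` is purely illustrative.

```python
# Back-of-the-envelope check of the Google water figures quoted in the text.
LITERS_PER_US_GALLON = 3.785411784  # exact definition of the US liquid gallon


def gallons_to_liters(gallons: float) -> float:
    """Convert US liquid gallons to liters."""
    return gallons * LITERS_PER_US_GALLON


total_2022_gal = 5.6e9         # total water consumed in 2022 (gallons), as reported
data_centers_2022_gal = 5.2e9  # portion used by data centers (gallons), as reported

total_2022_liters = gallons_to_liters(total_2022_gal)
data_center_share = data_centers_2022_gal / total_2022_gal

print(f"Total 2022 use: {total_2022_liters / 1e9:.1f} billion liters")
print(f"Data centers' share of the total: {data_center_share:.0%}")
```

The conversion confirms the "about 21.2 billion liters" figure, and the share shows that the overwhelming majority of the company's water use goes to data centers.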

Today, this isn’t a critical issue, but energy costs are already the second-largest expense in computing after chips.

To us in China, the total may not seem staggering: on a global scale, it is about what a small county uses. The problem is that not many companies can afford it. To all AI model developers: when the time comes, can you really foot the utility bill?

Of course, there’s nothing new under the sun.

Long before AI, another industry started building its own power plants—aluminum electrolysis.

Aluminum electrolysis production

Take the Zhundong Industrial Park of East Hope Group, for example. Its core is the Zhundong Power Plant, where coal from open-pit mines is directly burned to generate electricity, powering nearby aluminum electrolysis and heavy chemical plants.

For industries like aluminum electrolysis, building their own power plants is far more economical than buying electricity from the grid.

The future of AI will likely follow a similar path.

This helps explain the American approach. Given carbon-emission regulations in Europe and the U.S., Microsoft and OpenAI are turning to nuclear energy, specifically small modular reactors (SMRs), which are compact and quick to deploy.

But for Chinese tech companies, the energy problem of data centers is a different story. We are well aware of AI’s energy demands; otherwise, 'How to achieve low-energy AI?' would not have been listed among this year’s top ten frontier scientific questions.

The main reason Chinese AI companies haven’t ventured into energy like their American counterparts is simple: lack of funds.

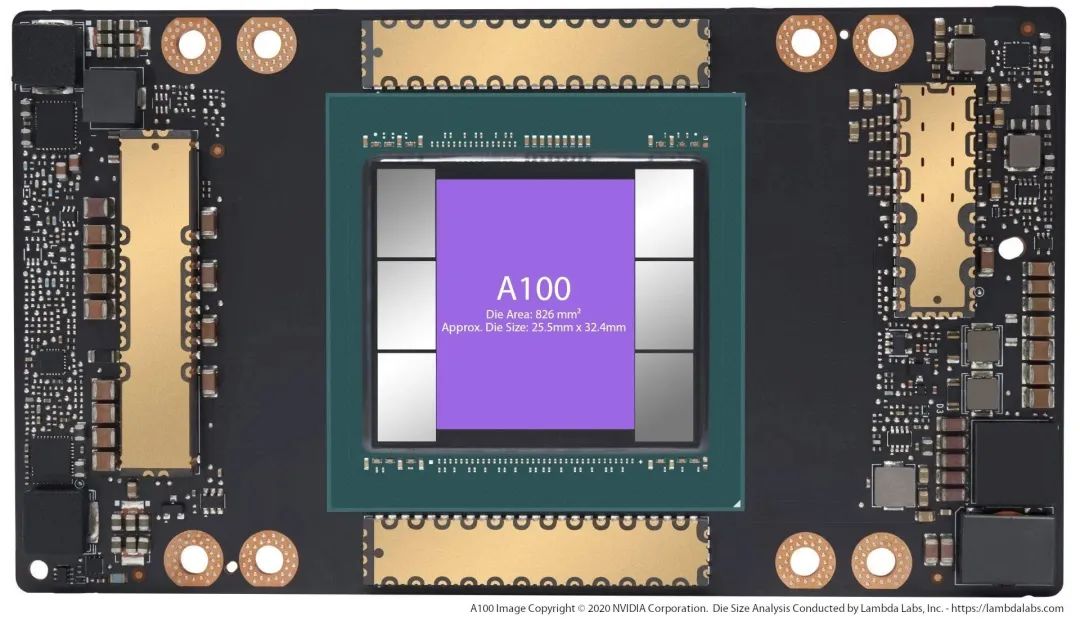

Training large models is incredibly expensive. First, you need to buy a massive number of GPUs—ChatGPT requires around 30,000 Nvidia A100 GPUs, costing $800 million upfront and $50,000 daily in electricity.
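The figures above can be unpacked with some simple arithmetic. The Python sketch below derives the per-GPU purchase price, the annual electricity bill, and how many years of electricity it would take to match the upfront hardware cost. The article gives no electricity price, so the $0.10/kWh tariff used to estimate energy and average power draw is a hypothetical assumption for illustration only; the per-GPU draw it yields also folds in cooling and server overhead, not just the chip.

```python
# Back-of-the-envelope arithmetic on the ChatGPT hardware and electricity figures.
# Inputs are the article's round numbers, except ASSUMED_PRICE_USD_PER_KWH,
# which is a hypothetical tariff not stated in the article.
NUM_GPUS = 30_000
UPFRONT_COST_USD = 800e6
DAILY_ELECTRICITY_USD = 50_000
ASSUMED_PRICE_USD_PER_KWH = 0.10  # hypothetical, for illustration only

# Hardware: average purchase price per GPU
cost_per_gpu = UPFRONT_COST_USD / NUM_GPUS

# Electricity: yearly bill and how long it takes to equal the hardware spend
annual_electricity_usd = DAILY_ELECTRICITY_USD * 365
years_to_match = UPFRONT_COST_USD / annual_electricity_usd

# Energy implied by the assumed tariff, spread evenly over 24 hours and all GPUs
daily_kwh = DAILY_ELECTRICITY_USD / ASSUMED_PRICE_USD_PER_KWH
avg_watts_per_gpu = daily_kwh / NUM_GPUS / 24 * 1000

print(f"Cost per GPU: ${cost_per_gpu:,.0f}")
print(f"Electricity per year: ${annual_electricity_usd / 1e6:.2f} million")
print(f"Years of electricity to equal the upfront cost: {years_to_match:.1f}")
print(f"Implied energy use: {daily_kwh:,.0f} kWh/day")
print(f"Implied average draw per GPU (incl. overhead): {avg_watts_per_gpu:.0f} W")
```

Under these numbers, chips dominate the bill by a wide margin, which is consistent with energy being the second-largest computing expense after chips rather than the largest.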

Baidu’s R&D investment over the past decade totals about 140 billion yuan (~$20 billion), averaging $2 billion annually. Meanwhile, ChatGPT’s annual operating costs alone are $500 million.
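The comparison is easier to see side by side. This small Python sketch converts Baidu's decade of R&D into an annual dollar figure and divides it by ChatGPT's quoted operating cost. The exchange rate of 7 yuan per US dollar is an assumption, chosen because it reproduces the article's own "~$20 billion" conversion.

```python
# Compare Baidu's average annual R&D spend with ChatGPT's quoted annual operating cost.
BAIDU_RD_DECADE_CNY = 140e9      # total R&D over the past decade, as quoted
YEARS = 10
ASSUMED_CNY_PER_USD = 7.0        # assumption: matches the article's ~$20 billion figure
CHATGPT_ANNUAL_OPEX_USD = 500e6  # annual operating cost, as quoted

baidu_rd_decade_usd = BAIDU_RD_DECADE_CNY / ASSUMED_CNY_PER_USD
baidu_rd_per_year_usd = baidu_rd_decade_usd / YEARS

# How many years of ChatGPT operations one year of Baidu's entire R&D budget covers
ratio = baidu_rd_per_year_usd / CHATGPT_ANNUAL_OPEX_USD

print(f"Baidu R&D per year: ${baidu_rd_per_year_usd / 1e9:.1f} billion")
print(f"Ratio to ChatGPT's annual operating cost: {ratio:.1f}x")
```

In other words, a single product's running costs consume roughly a quarter of what one of China's largest tech companies spends on all of its R&D in a year.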

You get what you pay for—there are no shortcuts. It’s all about money. The sheer cost of R&D and training is already overwhelming for our AI companies. Do they have any spare resources to tackle energy issues?

Thus, for Chinese AI firms operating under current economic constraints, worrying about energy is somewhat premature. Baidu’s ERNIE Bot, the best-known domestic large model, has only a few million daily active users, a fraction of ChatGPT’s. Asking it to fret over energy would be getting far ahead of itself.

Beyond money, technology is another major hurdle.

How can we achieve low-energy AI? One approach is hardware (chips); the other is software (algorithms). Today's computing power relies largely on advanced manufacturing processes, which come at the cost of high energy consumption. The key challenge is to cut energy use without sacrificing chip performance, either by pushing process nodes further or by inventing a revolutionary architecture that mimics the energy efficiency of biological neurons. On the software side, the goal is to make AI algorithms themselves more efficient.

This isn’t just the responsibility of AI companies; upstream chip suppliers and manufacturers must also step up.

As a technology with the potential to spark a new industrial revolution, AI’s vast energy demands mean its supply chain will be extensive, involving many players. This isn’t something one or two AI companies can solve alone.

For us, preparing for energy needs is always the right move.

China is now the world's largest power generator, producing nearly 9 trillion kilowatt-hours in 2022—approximately double that of the United States. Behind this massive power generation lies China's enormous industrial system, which consumes vast amounts of electricity, particularly in sectors like metallurgy and chemicals. Compared to these industries, the current electricity consumption of AI appears relatively insignificant.

However, it's important to note that as AI becomes increasingly integrated across various industries, its energy consumption will rise significantly. Beyond a certain threshold, AI's electricity demand may reach levels we can hardly anticipate—why else would Altman invest directly in nuclear energy? If power generation is inevitable, why not invest in already mature sectors like wind or solar?

My view is: Altman believes that future AI will require an enormous amount of electricity, and only nuclear power can meet this demand.

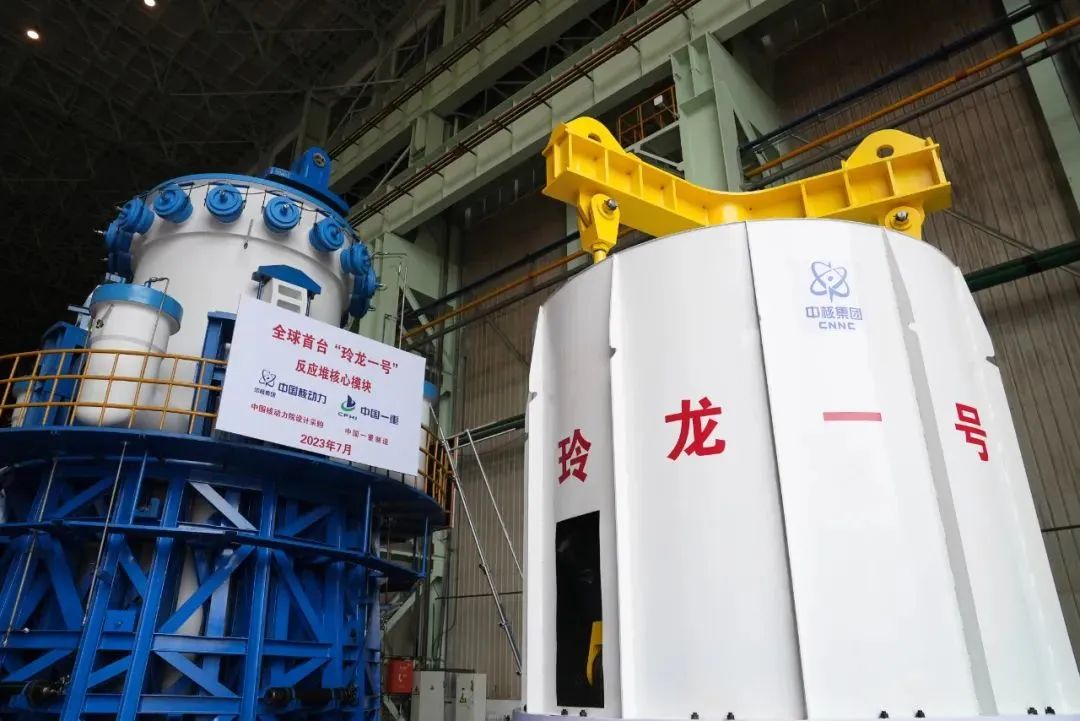

Image source: China National Nuclear Corporation

The AI competition between China and the U.S. has only just begun. Admittedly, in terms of GPU technology, we haven't yet reached the same level and still need breakthroughs and market recognition; this is an issue for the semiconductor industry and beyond the scope of this discussion.

But in energy technology, we've made notable achievements.

Take small modular reactors (SMRs), for example. We began researching SMRs more than a decade ago, and CNNC's 'Linglong One' is the flagship result. An innovative reactor built on mature technology, it was the world's first SMR to pass an IAEA safety review; it is currently under construction and expected to be operational by 2026.

Image source: China Electric Power News

In this area, we already have ready-made solutions to meet future demands.

Beyond nuclear, our efforts in clean energy are noteworthy.

In the solar industry, China is world-class. Trina Solar, based in Changzhou, Jiangsu, generated 85 billion yuan in revenue last year. China's solar expansion has driven costs down dramatically, cutting the price of solar power to roughly one-twentieth of its former level.

This cost advantage makes Chinese products highly competitive. A large share of China's solar output is exported, and China accounts for 75% of the global market for solar panels, 80% for solar cells, and 90% for silicon wafers.

Moreover, solar power has quietly surpassed hydropower to become China's second-largest power source by installed capacity. After decades of building massive hydropower projects like the Three Gorges Dam and Baihetan, who would have thought solar would overtake hydropower in just a decade?

...

In summary, for China's AI companies, the overall market remains relatively small, and energy concerns aren't as urgent as they are for ChatGPT. Thus, there's no immediate need to worry about energy shortages, and even if demand arises, ready-made solutions are available in the market.