OpenAI's Image Detection Tool Unveiled, Recognizes 99% of AI-Generated Content

OpenAI is stepping into AI image recognition.

According to the latest news, the company is developing a detection tool.

CTO Mira Murati revealed:

The tool is highly accurate, with a correct identification rate of 99%.

It is currently in the internal testing phase and will be released publicly soon.

That is a striking claim, especially given OpenAI's previous effort in AI text detection, which ended in failure: its detector correctly identified only 26% of AI-generated text.

OpenAI has long been involved in the field of AI content detection.

In January of this year, they released an AI text detector designed to distinguish between AI-generated and human-written content, aiming to prevent the misuse of AI-generated text.

However, by July the tool had been quietly discontinued. Without any announcement, the page simply returned a 404 error.

The reason? The accuracy was simply too low, "barely better than random guessing."

According to data released by OpenAI themselves:

It correctly identified only 26% of AI-generated text, while falsely flagging 9% of human-written text as AI-generated.
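To see what those two rates mean in practice, here is a minimal sketch that applies Bayes' rule to them. The 26% and 9% figures are the ones OpenAI reported; the function name `detector_precision` and the `ai_share` values (the fraction of checked texts that are actually AI-generated) are hypothetical, chosen only for illustration.

```python
def detector_precision(tpr: float, fpr: float, ai_share: float) -> float:
    """Share of flagged texts that are actually AI-generated (Bayes' rule)."""
    true_flags = tpr * ai_share            # AI texts that get flagged
    false_flags = fpr * (1.0 - ai_share)   # human texts that get flagged
    return true_flags / (true_flags + false_flags)


# Rates reported by OpenAI for its text detector.
TPR = 0.26  # AI-generated text correctly flagged
FPR = 0.09  # human-written text wrongly flagged

# ai_share values are assumptions, not data from OpenAI.
for ai_share in (0.5, 0.1):
    p = detector_precision(TPR, FPR, ai_share)
    print(f"AI share {ai_share:.0%}: precision {p:.1%}, missed AI text {1 - TPR:.0%}")
```

Under these assumptions, if half of the checked texts are AI-generated, roughly 74% of flags are correct; if only one in ten is, the figure falls to roughly 24%. In both cases 74% of AI-generated text passes undetected.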

After this abrupt end, OpenAI said it would incorporate user feedback and research more effective techniques for identifying the provenance of text.

They also announced plans to develop tools for detecting AI-generated images, audio, and video content.

With the emergence of DALL-E 3 and continuous iterations of similar tools like Midjourney, AI's image generation capabilities are becoming increasingly powerful.

The greatest concern is their potential use for creating fake news images on a global scale.

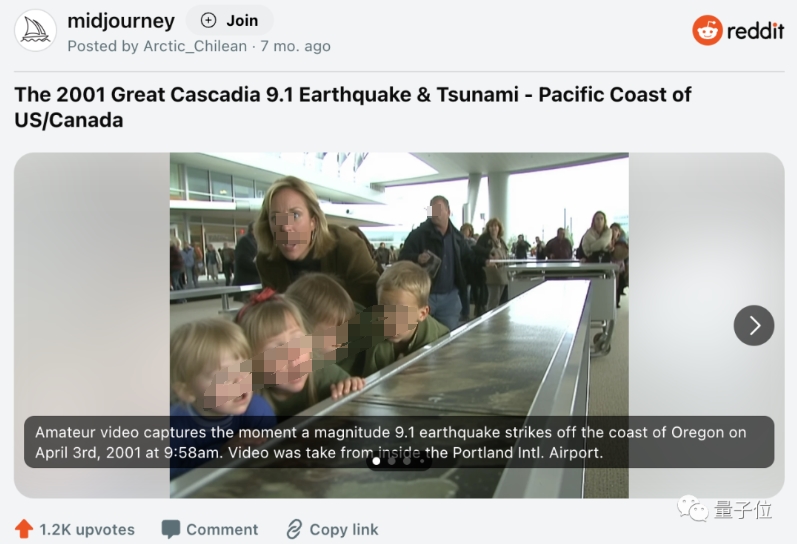

For example, an AI-generated "on-site" image of a fictional "9.1 magnitude Cascadia earthquake and tsunami in 2001" was posted on Reddit and received over 1.2k upvotes.

Compared with AI text detection, AI image detection is clearly the more urgent need. Few people care whether a speech was written by the speaker or by a secretary, but because "seeing is believing," a convincing fake image is hard for many people to distrust.

However, as some netizens pointed out when OpenAI's AI text detection tool was taken offline:

Developing both generation and detection tools simultaneously is inherently contradictory.

If one side performs well, it means the other side is lacking, and there may also be conflicts of interest.

A more straightforward approach would be to delegate the task to a third party.

But third-party performance in AI text detection has not been satisfactory either.

From a technical standpoint, another feasible solution is embedding watermarks in AI-generated content at the time of creation.

This is what Google has done.

In late August this year, Google beat OpenAI to the punch by launching SynthID, a tool for watermarking and identifying AI-generated images.

Currently, it works with Google's text-to-image model Imagen, embedding an imperceptible digital watermark directly into the pixels of images the model produces so that they can later be identified as AI-generated.

Even if the image is cropped, filtered, recolored, or lossily compressed, the watermark remains detectable.

In internal tests, SynthID accurately identified a large number of edited AI images, though the specific accuracy rate has not been disclosed.

It remains to be seen what technology OpenAI's upcoming tool will employ and whether it will become the most precise one on the market.

The above information comes from remarks made by OpenAI's CTO and Sam Altman at the Wall Street Journal's Tech Live conference this week.

During the event, the two also revealed more news about OpenAI.

For example, the next-generation large model may be launched soon.

No name was disclosed, but OpenAI did file a trademark application for GPT-5 in July this year.

Some are concerned about the accuracy of "GPT-5," asking whether it will no longer produce errors or false content.

In response, the CTO was cautious, only saying "maybe."

She explained:

"We have made significant progress on the hallucination issue with GPT-4, but it has not yet reached the level we need."

Altman, on the other hand, discussed the "chip-making plan."

His statement confirmed nothing concrete, but it left ample room for speculation:

"We definitely wouldn't do this following the default path, but I would never rule out this possibility."

Altman was far more decisive about the smartphone manufacturing rumors than about the "chip-making plan."

In September this year, Jony Ive, Apple's former Chief Design Officer, who spent 27 years at the company, was reported to be in talks with OpenAI. Sources claimed Altman wanted to develop a hardware device that would offer a more natural and intuitive way to interact with AI, a device some described as the "iPhone of AI."

Now, he tells everyone:

"I'm not even sure what to do yet, just have some vague ideas."

And:

"No AI device will surpass the iPhone's popularity, and I have no interest in competing with any smartphone."