Mini-DALLE 3: Enhancing Text-to-Image Generation Technology in Large Models

In recent years, text-to-image (T2I) models have developed rapidly and transformed AI content generation: in less than two years, they have become capable of producing high-quality, diverse, and creative images. However, most existing T2I models share a weakness. They do not communicate well in natural language, so users often need elaborate prompt adjustments and specific word combinations to get the result they want.

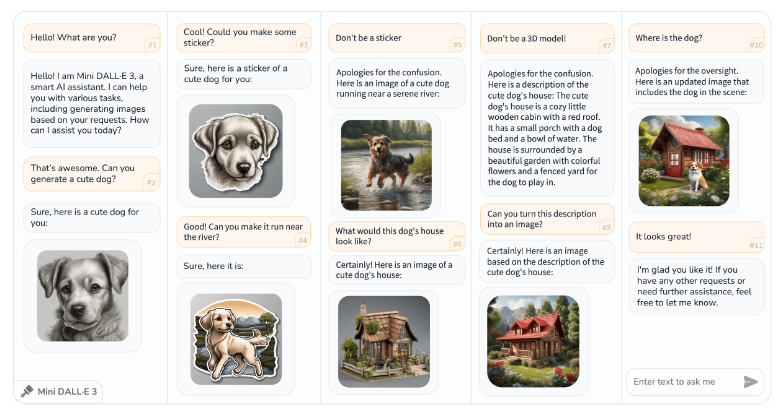

Inspired by DALLE3, researchers have proposed a new task called interactive text-to-image (iT2I), in which people interact with a large language model (LLM) in natural language to obtain both high-quality image generation and question answering. They also introduced a simple method that extends LLMs for iT2I using prompting techniques and off-the-shelf T2I models, with no additional training required.
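To make the idea of combining prompting with an off-the-shelf T2I model more concrete, here is a minimal sketch of one way such a pipeline could be wired together. It is not the researchers' implementation. The system prompt wording, the `<image>...</image>` tag convention, and the names `split_reply`, `render_reply`, and `fake_t2i` are all assumptions made for illustration, and the T2I backend is a stub rather than a real model.

```python
import re
from typing import Callable, List, Tuple

# Hypothetical system prompt: the wording and the tag name are assumptions,
# not taken from the original work.
SYSTEM_PROMPT = (
    "When the user asks for a picture, describe it inside "
    "<image>...</image> tags. Answer all other requests as plain text."
)

# Matches one tagged image description; DOTALL lets it span several lines.
IMAGE_TAG = re.compile(r"<image>(.*?)</image>", re.DOTALL)


def split_reply(reply: str) -> List[Tuple[str, str]]:
    """Split an LLM reply into ("text", ...) and ("image", ...) segments."""
    segments: List[Tuple[str, str]] = []
    cursor = 0
    for match in IMAGE_TAG.finditer(reply):
        text = reply[cursor:match.start()].strip()
        if text:
            segments.append(("text", text))
        segments.append(("image", match.group(1).strip()))
        cursor = match.end()
    tail = reply[cursor:].strip()
    if tail:
        segments.append(("text", tail))
    return segments


def render_reply(reply: str, t2i: Callable[[str], str]) -> List[Tuple[str, str]]:
    """Pass each image description to a T2I backend; keep text unchanged."""
    return [
        (kind, t2i(content) if kind == "image" else content)
        for kind, content in split_reply(reply)
    ]


def fake_t2i(description: str) -> str:
    # Stand-in for a real T2I model call; returns a placeholder file name.
    return f"generated({description}).png"


if __name__ == "__main__":
    # A reply such as an LLM might produce when given SYSTEM_PROMPT.
    reply = "Here is your picture. <image>a red fox in snow</image> Want changes?"
    for kind, content in render_reply(reply, fake_t2i):
        print(kind, "->", content)
```

Because the LLM is only instructed through a prompt and the image backend is called through a plain function, either side could in principle be swapped without retraining, which is the property the paragraph above describes.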

The researchers evaluated the approach with several LLMs, including ChatGPT, LLaMA, and Baichuan. Their results indicate that the method can add iT2I capabilities to any existing LLM and T2I model conveniently and at low cost, with little degradation of the LLM's inherent abilities such as question answering and code generation.

This work is expected to attract widespread attention and may inspire improvements to both human-computer interaction and the image quality of next-generation T2I models.