DeepMind's New Open X-Embodiment Project Aims to Control Many Types of Robots

Google DeepMind has partnered with 33 other research institutions on a new project that tackles a major challenge in robotics: the enormous effort required to train a separate machine learning model for every robot, task, and environment. The goal is a general-purpose AI system that can work with different types of physical robots and perform a wide variety of tasks.

Pannag Sanketi, a senior software engineer at Google Robotics, stated: 'We observed that robots are typically proficient in specialized areas but perform poorly in terms of generalizability. Usually, you have to train a model for each task, robot, and environment, and changing one variable often requires starting from scratch.'

To address this, the team launched the Open X-Embodiment project, which has two key components: a dataset that pools data from many robot types, and a family of models that can transfer skills across a wide range of tasks. The researchers tested these models in robotics labs on different types of robots and found that they achieved significantly higher success rates than models trained for a single robot.

This project was partly inspired by large language models (LLMs), which, when trained on large-scale general datasets, can match or even surpass smaller models trained on narrow, task-specific datasets. Surprisingly, researchers found that this principle also applies to the field of robotics.

To build the Open X-Embodiment dataset, the research team collected data from 22 different robot embodiments across 20 countries. The dataset covers more than 500 skills and 150,000 tasks, spread across more than 1 million episodes (an episode is the sequence of actions a robot performs in a single attempt to complete a task).
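
To make the idea of an episode concrete, here is a minimal Python sketch of how episodes might be represented and how a success rate could be computed over them. The class and field names (`Step`, `Episode`, `instruction`, `observation_id`, `action`, `success`, `success_rate`) are hypothetical and chosen for illustration only; they are not the actual schema of the Open X-Embodiment dataset, whose recorded fields vary from robot to robot.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Step:
    # Hypothetical per-step record (not the real dataset schema):
    # what the robot was told, what it saw, and what it did.
    instruction: str      # natural-language task description
    observation_id: str   # stand-in for a camera frame
    action: List[float]   # e.g. arm movement values plus a gripper command


@dataclass
class Episode:
    # One attempt by one robot to complete one task.
    robot: str
    steps: List[Step] = field(default_factory=list)
    success: bool = False


def success_rate(episodes: List[Episode]) -> float:
    """Fraction of episodes in which the robot completed its task."""
    if not episodes:
        return 0.0
    return sum(ep.success for ep in episodes) / len(episodes)
```

Comparing `success_rate` for two models evaluated on the same set of tasks is the kind of measurement that underlies success-rate comparisons like those reported for RT-1-X and RT-2-X.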

The models released alongside the dataset are built on the Transformer deep learning architecture. RT-1-X is based on Robotic Transformer 1 (RT-1), a multi-task model for real-world robotics. RT-2-X is built on RT-2, RT-1's successor: a vision-language-action (VLA) model that learns from both robot and web data and can respond to natural-language instructions.
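
RT-1 and RT-2 both represent each continuous action dimension as one of 256 uniform bins, which lets a Transformer emit robot actions as tokens, much as a language model emits words. The Python sketch below illustrates that discretization under stated assumptions: the function names and the action ranges are hypothetical, and the real models' action spaces and token mappings are more involved than this.

```python
from typing import List, Sequence

NUM_BINS = 256  # bins per action dimension, as in RT-1 and RT-2


def tokenize_action(action: Sequence[float], low: Sequence[float],
                    high: Sequence[float], num_bins: int = NUM_BINS) -> List[int]:
    """Map each continuous action value to a bin index in [0, num_bins - 1]."""
    tokens = []
    for a, lo, hi in zip(action, low, high):
        if hi <= lo:
            raise ValueError("each action range needs high > low")
        a = min(max(a, lo), hi)        # clip out-of-range values
        frac = (a - lo) / (hi - lo)    # position within the range, 0..1
        # frac == 1.0 would give num_bins, so cap it at the last bin
        tokens.append(min(int(frac * num_bins), num_bins - 1))
    return tokens


def detokenize_action(tokens: Sequence[int], low: Sequence[float],
                      high: Sequence[float], num_bins: int = NUM_BINS) -> List[float]:
    """Map each bin index back to the center of its bin."""
    return [lo + (t + 0.5) * (hi - lo) / num_bins
            for t, lo, hi in zip(tokens, low, high)]
```

For example, `tokenize_action([-1.0, 0.0, 1.0], [-1.0] * 3, [1.0] * 3)` gives `[0, 128, 255]`. Decoding returns bin centers, so the round-trip error is at most half a bin width; that quantization is the price of treating actions as a fixed vocabulary.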

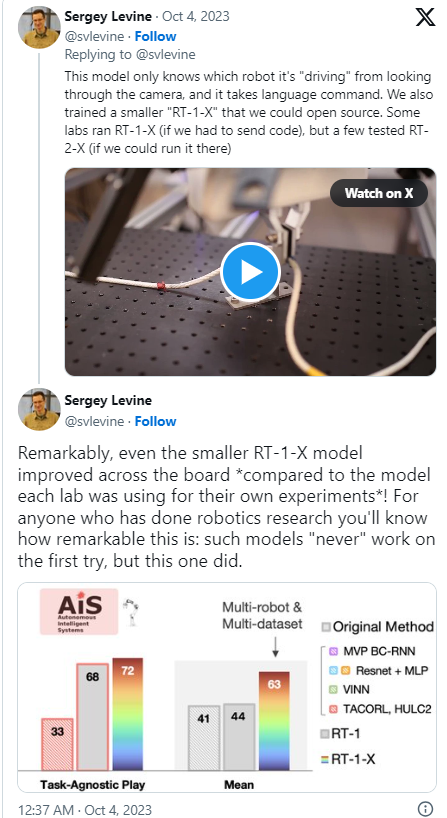

The researchers tested RT-1-X on a range of tasks using five commonly used robots in five different research labs. Compared with models developed specifically for each robot, RT-1-X achieved a 50% higher success rate on tasks such as picking up and moving objects and opening doors. It also generalized its skills to different environments, whereas the specialized models were limited to the specific visual environments they were built for. This suggests that models trained on diverse examples perform better across many tasks. According to the paper, the model can be applied to a variety of robots, from robotic arms to quadrupedal robots.

RT-2-X achieves three times the success rate of RT-2 on novel tasks and emergent skills. It performs especially well on tasks that require spatial understanding, such as distinguishing between moving an apple near a cloth and placing it on the cloth.

The researchers plan to explore how to combine these advances with insights from RoboCat, DeepMind's self-improving model, which can perform various tasks across different robotic arms and automatically generate new training data to improve its own performance.

The team has open-sourced the Open X-Embodiment dataset and a smaller version of the RT-1-X model, but not RT-2-X. They believe these tools will change how robots are trained and accelerate research. In their view, the future of robotics lies in enabling robots to learn from each other and researchers to learn from one another.