AI Startup Reka Launches Multimodal AI Assistant Yasa-1 to Compete with ChatGPT


Reka, an AI startup co-founded by researchers from DeepMind, Google, Baidu, and Meta, has unveiled its latest product, the multimodal AI assistant Yasa-1. The assistant is designed to understand and respond to multiple media formats, including text, images, video, and audio, which positions it as a potential rival to OpenAI's ChatGPT.

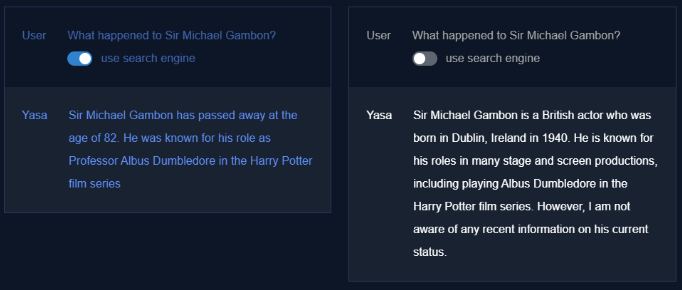

Yasa-1 is currently in private testing. It enters a field where OpenAI's ChatGPT has already received multimodal upgrades, including GPT-4V and DALL-E 3. The Reka team points to its members' experience building projects such as Google Bard, PaLM, and DeepMind's AlphaCode, which it believes gives Yasa-1 a competitive edge.

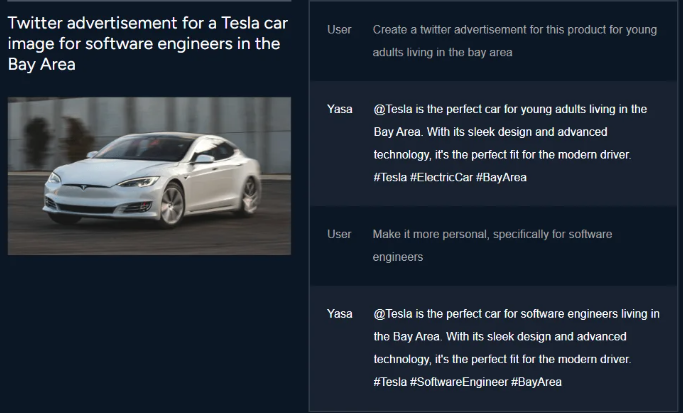

What sets Yasa-1 apart is its multimodal capability. It can combine a text prompt with an attached media file to give more specific answers. For example, it can write a social media post promoting a product shown in an image, or identify a particular sound in an audio clip and its source.

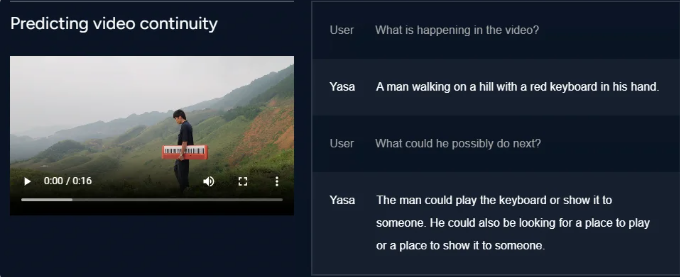

Yasa-1 can also understand what is happening in a video, including the topics being discussed, and predict what is likely to happen next in the footage.

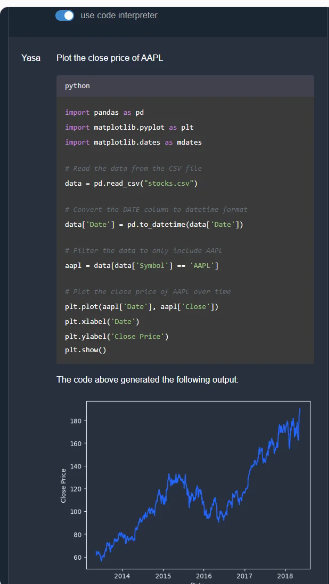

Beyond its multimodal features, Yasa-1 supports programming tasks and can execute code to perform arithmetic, analyze tables, or create visualizations of specific data points. However, like all large language models, Yasa-1 may occasionally generate nonsensical content, so it should not be relied on for critical advice.

Reka plans to expand access to Yasa-1 in the coming weeks while improving its functionality and addressing some of its limitations. The startup, which debuted in June 2023, has raised $58 million in funding and focuses on areas such as general intelligence, universal multimodal and multilingual agents, self-improving AI, and model efficiency.

The launch of Yasa-1 signals intensifying competition among multimodal AI assistants and points toward richer interactions that span text, images, audio, and video, giving users increasingly useful features.