ChatGPT Major Upgrade: Now Capable of Seeing Images, Hearing Sounds, and Speaking!

On September 25, Eastern Time, OpenAI announced on its official website a major upgrade to ChatGPT, introducing three new capabilities: image recognition, sound recognition, and speech output.

Back in March of this year, when OpenAI released the GPT-4 model, it demonstrated the image recognition feature but did not make it available due to safety concerns and incomplete functionality. Now, not only is image recognition being rolled out, but sound recognition is also being introduced. This is a crucial technical step in OpenAI's strategy to achieve AGI (Artificial General Intelligence).

OpenAI stated that over the next two weeks, the new seeing, hearing, and speaking features will be made available to Plus and Enterprise users. The voice functionality will be accessible on iOS and Android, while image recognition will be available across all platforms.

Communicating with ChatGPT via Voice

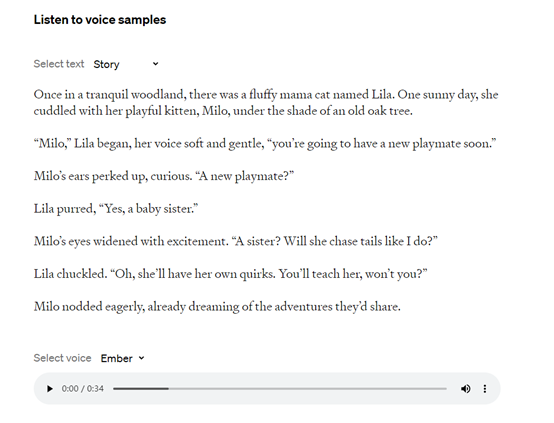

The new voice feature in ChatGPT is powered by a text-to-speech model capable of generating human-like audio from just text and a few seconds of sample speech.

OpenAI worked with professional voice actors to create five synthetic voices for spoken output, and it uses Whisper, its own open-source speech recognition system, to transcribe users' spoken words into text.

In simple terms, users can now generate speech directly from text within ChatGPT.

For example, ChatGPT can take a text story about a kitten and convert it into human-sounding speech with a single click. Once the conversion is complete, users can download the audio file.

[Audio: story-juniper, AIGC Open Community, 34 seconds]
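The voice round trip described in this section (speech transcribed to text, a text reply generated, and the reply converted back to speech) can be sketched roughly as follows. This is only an illustration of the data flow, not OpenAI's implementation: every name here (`voice_round_trip`, `VoiceTurn`, the `fake_*` stand-ins) is hypothetical, and the default voice name is an assumption borrowed from the label of the audio clip above.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical type aliases; none of these are OpenAI APIs.
Transcriber = Callable[[bytes], str]        # speech audio -> text (the role Whisper plays)
ChatModel = Callable[[str], str]            # user text -> reply text
Synthesizer = Callable[[str, str], bytes]   # (reply text, voice name) -> speech audio


@dataclass
class VoiceTurn:
    heard: str    # what the transcriber understood
    reply: str    # the text reply
    audio: bytes  # the reply rendered as speech


def voice_round_trip(
    audio_in: bytes,
    transcribe: Transcriber,
    chat: ChatModel,
    synthesize: Synthesizer,
    voice: str = "juniper",  # assumed voice name, for illustration only
) -> VoiceTurn:
    """Run one spoken exchange: listen, answer in text, then speak the answer."""
    heard = transcribe(audio_in)
    reply = chat(heard)
    audio_out = synthesize(reply, voice)
    return VoiceTurn(heard=heard, reply=reply, audio=audio_out)


# Stand-in components so the sketch runs without any network access.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_chat(text: str) -> str:
    return f"Here is a story about {text}."


def fake_synthesize(text: str, voice: str) -> bytes:
    return f"[{voice}] {text}".encode("utf-8")


turn = voice_round_trip(b"a kitten", fake_transcribe, fake_chat, fake_synthesize)
print(turn.heard)
print(turn.reply)
```

Passing the three stages in as plain callables keeps the sketch independent of any particular speech or language model; real components would replace the `fake_*` functions.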

Asking ChatGPT About Images

Users can show ChatGPT one or more images and ask questions about them. For instance, they can send a picture of a broken barbecue grill and ask why it might not start, or take a photo of the ingredients in their refrigerator and request several meal ideas.

If users want to ask about only a specific part of an image, they can use the drawing tool in the mobile app to outline that part before asking their question.

ChatGPT's image understanding feature is powered by GPT-3.5 and GPT-4, and it can interpret various types of images, including photos, screenshots, or images containing text.

Providing Safe AI Services

OpenAI states that its goal is to build safe and beneficial AGI (Artificial General Intelligence). Therefore, ChatGPT's features are being rolled out gradually. This approach allows OpenAI time to make improvements and address security vulnerabilities and risks step by step.

The new voice technology is a particular concern: because it can generate realistic synthetic voices from just a few seconds of sample speech, fraudsters could misuse it to impersonate others. A safety-focused development strategy is therefore crucial for advanced models involving voice and visual technologies.

Currently, Spotify is using ChatGPT's voice feature to develop a voice translation assistant that automatically translates podcasters' voices into other languages, expanding their audience. Meanwhile, Be My Eyes has integrated ChatGPT's image recognition function into its app to assist the blind and visually impaired community.