Generative AI Is Not a 'Miracle Cure' for Software Development: Developers Must Beware of These Three Major Illusions


The software industry has long struggled to cut costs and improve efficiency. Prolonged development cycles, release dates that keep slipping, and a steady stream of new defects hardly befit teams staffed by elite engineers. Generative AI seems to offer a glimmer of hope, and its impressive performance has led many to reason as follows: generative AI can produce code automatically, cheaply, and repeatably, and that code is as disposable as cloud resources; if one piece of code doesn't fit, throw it away and generate another. Does this mean we no longer need so many elite developers?

When answering our questions, generative AI sometimes produces answers that sound plausible. A little fact-checking, however, often reveals them to be superficial: either entirely unfounded or outright nonsense, which hardly lives up to the name "artificial intelligence". This is what is known as generative AI hallucination: lacking reliable training data, the model stitches together a false response.

Large model technology continues to evolve, and hallucinations are becoming less noticeable. Yet when the technology is applied to specific domains and use cases, hallucinations still occur. In this article, I will look at how generative AI is applied in software development and the three illusions it creates.

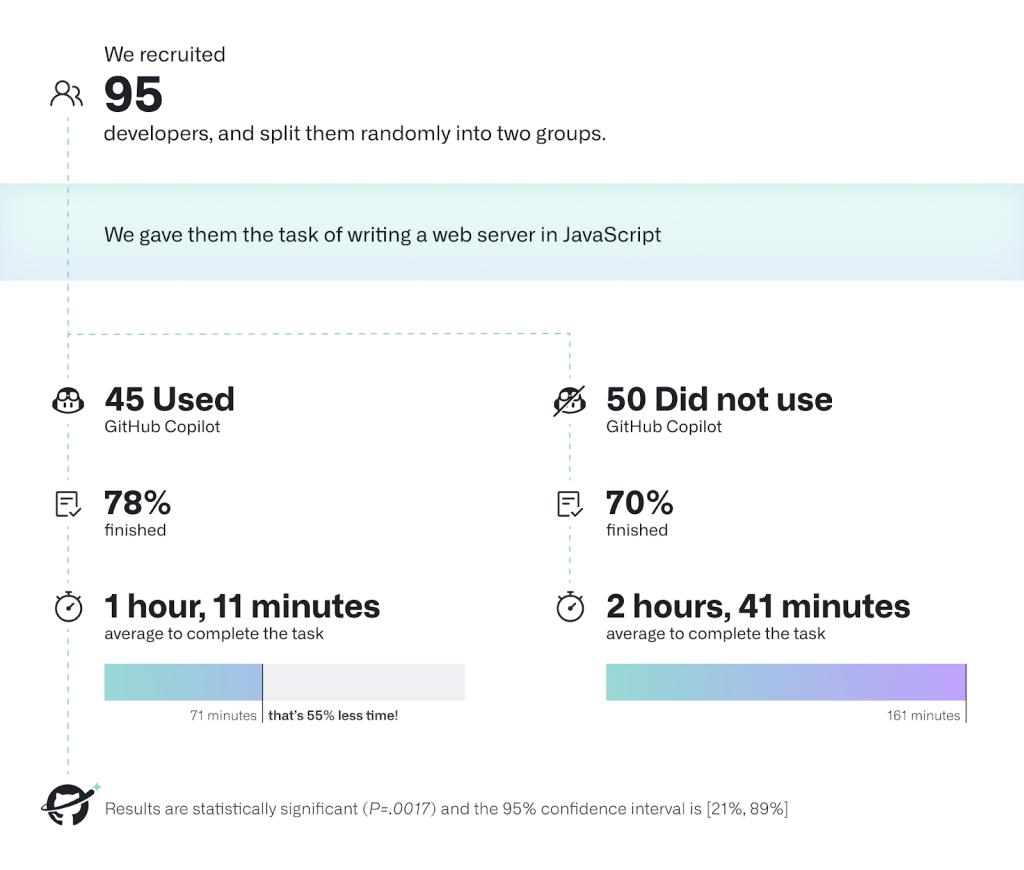

Software tool vendors keep iterating on their code assistant products, the best known being GitHub Copilot. GitHub claims it helps programmers complete tasks over 55% faster, and the slick, fast-paced demo videos make coding with it look effortless.

But does this mean software delivery can be sped up by 50%?

The demo code samples warrant some skepticism, and feedback from programmers who have adopted Copilot in real projects suggests that the speed gains come mainly from implementing commonly used functions, such as array sorting, data structure initialization, or very simple boilerplate code.

Delegating repetitive utility code to AI is reasonable. But how much of that kind of code does a software project under development actually need to write over and over? That is debatable, especially since such code usually needs to be written only once and then encapsulated for reuse. And what about the substantial body of business logic code: how much faster will programmers get through that? You can generate plenty of business code with AI, but whether that code is safe may be an even bigger question.

There are two issues worth paying attention to.

First is the programmers' selection of AI-provided code.

AI makes it so easy to offer multiple implementations that programmers can't resist hunting for the best one among them.

Is this one better? Or that one? Oh, there are actually five different implementations. I need to understand each piece of code before moving on to the next. This implementation is elegant, but unfortunately the unit test failed. On to the next one.

Code assistants fully arouse programmers' curiosity, and their linear way of working is shattered. What programmers lose track of is not just development discipline, but time itself.

Second, software has its own lifecycle.

By the time programmers start writing code, a great deal has already happened, and much more will happen before the system goes live. This includes, but is not limited to, gathering requirements, understanding requirements (turning specifications into user stories), testing, maintaining infrastructure, and an endless stream of fixes.

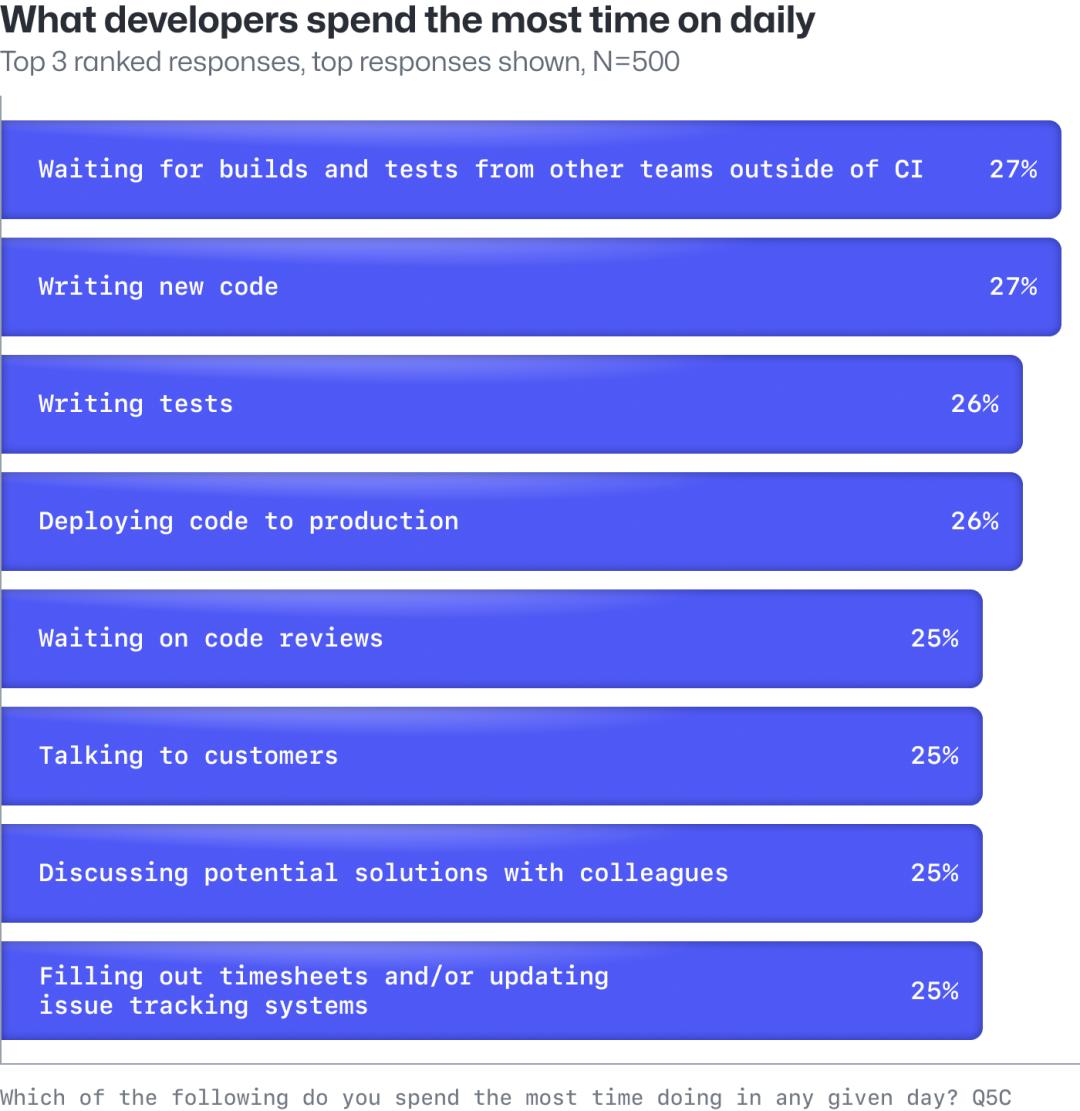

My point is that even if AI helps programmers write code faster, coding is only one stage of the software lifecycle. Surveys have long suggested that programmers spend only about 30% of their working time writing code; more of their time goes to understanding what they need to implement, to design, and to learning new skills.
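To see why faster coding alone cannot halve delivery time, the argument can be made concrete with Amdahl's law. The sketch below is a back-of-the-envelope calculation under two assumptions of my own: coding takes 30% of a programmer's time (the figure cited above), and "55% faster" is read as the AI assistant making only that coding portion 1.55 times faster, while the remaining 70% of the work is unchanged.

```python
def overall_time_fraction(coding_share: float, coding_speedup: float) -> float:
    """Amdahl's law: fraction of the original total time still needed
    when only the coding share of the work is accelerated."""
    if not 0.0 <= coding_share <= 1.0:
        raise ValueError("coding_share must be between 0 and 1")
    if coding_speedup <= 0.0:
        raise ValueError("coding_speedup must be positive")
    # Non-coding work (requirements, design, testing, ...) is unchanged;
    # only the coding portion shrinks by the speedup factor.
    return (1.0 - coding_share) + coding_share / coding_speedup


def overall_speedup(coding_share: float, coding_speedup: float) -> float:
    """Overall speedup of the whole workflow (old time / new time)."""
    return 1.0 / overall_time_fraction(coding_share, coding_speedup)


if __name__ == "__main__":
    # Assumed inputs: 30% of time spent coding, coding made 1.55x faster.
    share, speedup = 0.30, 1.55
    print(f"time still needed: {overall_time_fraction(share, speedup):.1%}")
    print(f"overall speedup:   {overall_speedup(share, speedup):.2f}x")
    # Even an infinitely fast coding phase caps the gain at 1 / (1 - share).
    print(f"upper bound:       {1 / (1 - share):.2f}x")
```

Under these assumptions, total time drops only to about 89.4% of the original (roughly a 1.12x overall speedup), and even infinitely fast coding could not exceed about 1.43x. If "55% faster" is read instead as cutting coding time by 55%, the remaining time is 0.7 + 0.3 × 0.45 = 83.5%, which is still far from halving it.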

Human-written code inevitably contains defects; this is a basic consensus in software quality. It even seems that the more experienced the programmer, the more likely they are to introduce subtle problems that surface only long afterward. Production issues are even more nerve-wracking, yet such risks are hard to avoid.

AI-generated code sounds advanced, but does it deliver perfect results? Unfortunately, the answer may disappoint.

The large language models behind generative AI are trained on massive amounts of internet text. Although the technology keeps improving, the internet already contains a great deal of biased data, including vast quantities of flawed code. This means that even carefully chosen suggestions from a programming assistant may contain defects. After all, a problematic snippet might have been written by someone on the other side of the globe and simply happen to be chosen here.

What's more concerning is that generative AI has an amplifying effect. Simply put, if programmers accept defective generated code, tools like Copilot will record this behavior and keep suggesting similar flawed code in comparable scenarios. The AI doesn't actually understand this code; it is merely encouraged to keep offering it. It is easy to imagine where this eventually leads.

Programmers must strictly follow the team's development discipline and keep code style consistent, because this makes the code readable by others and makes potential issues easier to find and fix. However, the varying styles of code offered by code assistants seem to add further confusion.

Code defects are only one source of the intractable problems that may eventually emerge in software, and possibly a minor one. Building software is essentially a process of producing and creating knowledge. The roles involved at different stages of the software lifecycle jointly understand and analyze requirements and then translate them into code. As teams and people change, they also pass this information on; it takes the form of requirements and code, but at heart it is knowledge.

However, knowledge decays, and transferring knowledge assets inevitably introduces discrepancies.

Examples include unreadable code, documentation that is not kept up to date, and the replacement of entire teams. These are the root causes of persistent bugs and issues in software. Artificial intelligence has not solved these thorny software engineering problems, at least not in the short term.

AI code assistants do seem like well-informed programmers, and some people are even willing to treat them as partners in pair programming. Staffing costs have always been a headache for IT teams: top talent is too expensive, suitable candidates are hard to find, and training proficient programmers from scratch takes too long. With the support of AI and code assistants, could teams cut their headcount nearly in half?

AI and code assistants cannot guarantee both speed and quality, and they need experienced programmers to realize their benefits. Those programmers must be able to judge code quality and assess the impact on existing production code, and they need the patience and skill to refine prompts.

In the martinfowler.com article credited in the image source below, the author discusses the issues to watch for when using code assistants and walks through her careful reasoning. The uncertainty that code assistants introduce can create two kinds of risk: degraded code quality and wasted time. Recognizing these risks reflects the self-reflection of a sufficiently experienced programmer.

Only then can the code assistant comfortably play the role of a well-informed novice, while the experienced programmer acts as gatekeeper; she is the one ultimately responsible for committing the code. In this sense, what AI is really transforming is the experience of programming.

(Image source: https://martinfowler.com/articles/exploring-gen-ai.html. The author imagined the code assistant as an eager, stubborn, articulate but inexperienced character, and used AI to create this cartoon image.)

AI and code assistants are highly effective at solving simple, repetitive problems. Building software, however, involves many situations where complex issues call for human expertise and professional experience: the growing architectural complexity and scope of software systems, meeting market and business demands, cross-role communication and collaboration, and newer challenges around code ethics and security.

Although judging whether programmers are sufficiently professional and skilled is not as simple as counting heads, we can say that introducing AI and code assistants while downsizing development teams yields uncertain results, and the current evidence suggests the drawbacks outweigh the benefits.

The essence of generative AI is pattern transformation: converting one form of text into another. Even advanced code assistants work within this paradigm. If we treat AI code assistants as a panacea for the many challenges of software engineering, we are likely oversimplifying complex problems.

What have we been talking about so far?

What we are really discussing is how to measure the effectiveness of investing in AI for software development. Investing in AI is not as simple as buying code assistant licenses and then sitting back to enjoy cost savings and efficiency gains. Rather than repeatedly asking "How do we measure the effectiveness of investing in AI and code assistants?", it is better to ask "What exactly should we measure?" A sensible starting point is the four key metrics defined by DORA: lead time for changes, deployment frequency, mean time to recovery (MTTR), and change failure rate.
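As a rough illustration of what measuring these four metrics might involve, the sketch below computes simplified versions of them from a list of deployment records. The `Deployment` record type, its fields, and the `dora_metrics` function are hypothetical illustrations, not part of any DORA tooling or API. The formulas are also simplified assumptions: lead time is the median time from commit to production, and recovery time is averaged over failed deployments only.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record shape; real data would come from a CI/CD system.
    commit_time: datetime                     # when the change was committed
    deploy_time: datetime                     # when it reached production
    failed: bool = False                      # did it cause a production failure?
    restored_time: Optional[datetime] = None  # when service was restored, if failed


def dora_metrics(deploys: list[Deployment], period_days: int) -> dict:
    """Simplified versions of the four DORA key metrics for one period."""
    if not deploys or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")

    # Lead time for changes: median commit-to-production time.
    lead_time = median(d.deploy_time - d.commit_time for d in deploys)

    # Deployment frequency: deployments per day over the period.
    frequency = len(deploys) / period_days

    # Change failure rate: share of deployments that caused a failure.
    failures = [d for d in deploys if d.failed]
    failure_rate = len(failures) / len(deploys)

    # Mean time to recovery: average restore time over failed deployments.
    recoveries = [d.restored_time - d.deploy_time
                  for d in failures if d.restored_time is not None]
    mttr = sum(recoveries, timedelta()) / len(recoveries) if recoveries else None

    return {
        "lead_time": lead_time,
        "deploys_per_day": frequency,
        "change_failure_rate": failure_rate,
        "mttr": mttr,
    }


if __name__ == "__main__":
    # Made-up sample data for one week, purely for illustration.
    base = datetime(2024, 1, 1, 9, 0)
    week = [
        Deployment(base, base + timedelta(hours=4)),
        Deployment(base, base + timedelta(hours=8), failed=True,
                   restored_time=base + timedelta(hours=9)),
        Deployment(base, base + timedelta(hours=2)),
    ]
    print(dora_metrics(week, period_days=7))
```

In keeping with the measurement principles that follow, numbers like these are most meaningful when computed per team for successive periods and compared as a trend, rather than used to rank individuals or other teams.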

The following basic measurement principles are provided for reference:

- Measure team efficiency, not individual performance.
- Measure outcomes, not output.
- Track trends over time rather than comparing absolute values across different teams.
- Use dashboard data to start conversations, not to end them.
- Measure what is useful rather than what is easy to measure.