Behind the Billion-Dollar Valuation: The Development History of Zhipu AI


Zhipu AI has long been at the center of attention.

Zhipu AI's latest funding round has once again drawn widespread public attention. Public information shows that the new round exceeded 2.5 billion yuan and that, combined with previous rounds, it brings Zhipu AI's valuation above 10 billion yuan.

More notably, the investor lineup is exceptionally prestigious, including the Zhongguancun Self-Innovation Fund of the Social Security Fund (with Junlian Capital as the fund manager), Meituan, Ant Group, Alibaba, Tencent, Xiaomi, Kingsoft, Shunwei, Boss Zhipin, TAL Education Group, Sequoia Capital, Hillhouse Capital, and other institutions, as well as follow-on investments from some existing shareholders, including Junlian Capital.

In this 'Hundred Models War,' Zhipu AI is undoubtedly one of the most highly anticipated players.

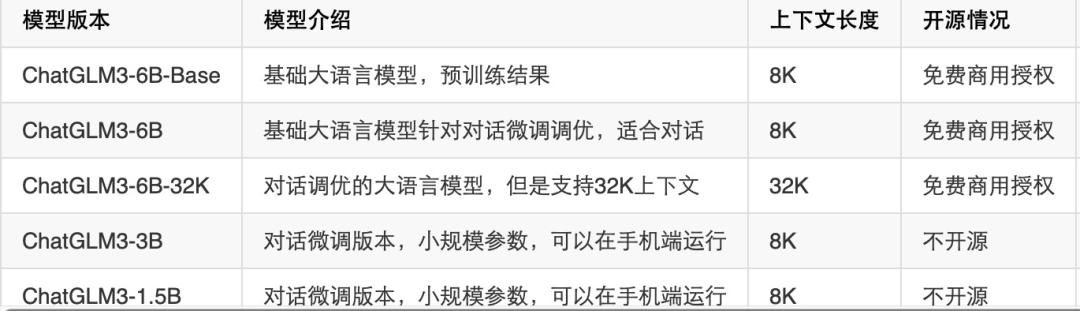

However, the only ChatGLM3 model Zhipu AI currently offers for commercial use is the 6B version, which falls well short of the parameter scale of commercial models such as GPT-3.5. Now that Alibaba has officially open-sourced a 72B-parameter model, Zhipu AI is likely to face significant pressure.

This raises several questions: What exactly are Zhipu AI's competitive advantages? Where does its future growth potential lie? How should it address its current challenges? And what lies behind its frequent fundraising?

From open-sourcing its first-generation model in March to reaching the third generation just seven months later, Zhipu AI's development has been remarkably rapid.

The ChatGLM3 series, Zhipu AI's third-generation base large language model, has now been released. According to the company, its performance is significantly better than that of the previous generation, making it the most powerful base model with fewer than 10 billion parameters.

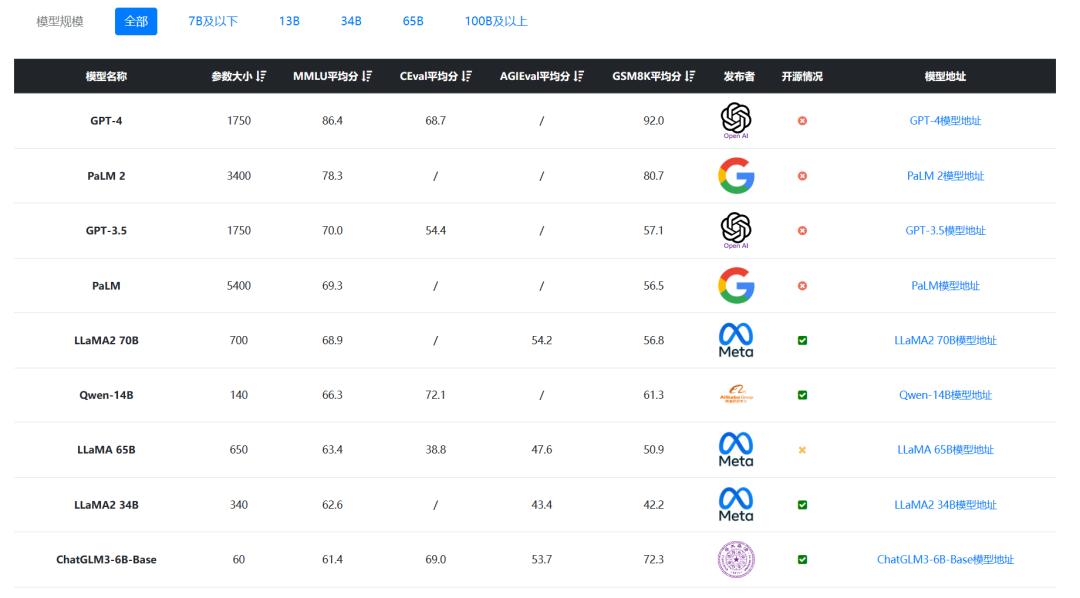

In detail, ChatGLM3-6B ranks 9th on the MMLU leaderboard among models of all sizes, and the smallest of the eight models ahead of it is Qwen-14B, with 14 billion parameters. On GSM8K, ChatGLM3-6B-Base even reaches third place, surpassing GPT-3.5's score of 57.1.

Clearly, Zhipu AI's ambition to surpass OpenAI is not unfounded.

To understand Zhipu AI's advantages, one must start with the many challenges facing the development and deployment of domestic large models.

The most direct test of a new technology's value is commercialization. Most domestic large model companies are still focused on technology and R&D, and their commercialization efforts remain largely exploratory.

Zhipu AI was already serving business (B2B) customers before it was formally founded as a company and now has more than 1,000 clients. This suggests stronger prospects for industrial deployment and commercialization.

Another critical prerequisite for deploying large models is data security. Zhipu AI presents itself as the only large model company in China that is fully domestically funded and fully self-developed. Its GLM domestic chip adaptation plan offers different levels of certification and testing for different types of users and chips, with the aim of ensuring that deployments are genuinely secure and controllable.

In a sense, this advantage gives Zhipu AI a strong hold on state-owned enterprises and large corporations with special requirements. "For state-owned and central enterprises that want to build or access model capabilities, Zhipu is an option they cannot avoid, no matter what," an industry insider told Industrialist.

Then there is the human factor. In the primary market, early-stage investment is a bet on people, and this holds for every startup. Zhipu AI grew out of Tsinghua University's Knowledge Engineering Group (KEG). CEO Zhang Peng holds a Ph.D. from Tsinghua's Department of Computer Science; Chairman Liu Debing studied under Academician Gao Wen and previously served as Deputy Director of the Science and Technology Big Data Research Center at Tsinghua's Institute for Data Science; and President Wang Shaolan holds a Ph.D. from Tsinghua's innovation leadership program.

Overall, Zhipu AI has all the ingredients for success: practical experience, a strong talent bench, ample funding, and advanced technology. These allowed it to stand out early in the race among large model providers. But this is only the surface.

In terms of technical path, Zhipu AI does not follow the more mainstream GPT architecture but uses its own GLM (General Language Model) approach, which it says offers higher training efficiency than GPT and can handle more complex scenarios.
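The article does not spell out how GLM differs from GPT. In the published GLM paper (Du et al., 2022), the pretraining objective is described as "autoregressive blank infilling": spans of the input are replaced by [MASK] tokens (Part A), and the model regenerates each span left to right (Part B), attending bidirectionally over Part A and causally within Part B. The sketch below illustrates that idea under our own simplifying assumptions: spans are given rather than sampled, they are not shuffled, GLM's 2D positional encoding is omitted, and the function `blank_infill_example` and the token strings are hypothetical stand-ins. It is not Zhipu AI's code and not a description of how ChatGLM3 itself is trained.

```python
from typing import List, Tuple

# Hypothetical special-token strings for this illustration.
MASK, START, END = "[MASK]", "[S]", "[E]"


def blank_infill_example(tokens: List[str], spans: List[Tuple[int, int]]):
    """Build a simplified GLM-style blank-infilling training example.

    spans: sorted, non-overlapping half-open (start, end) index pairs to blank out.
    Returns (input_tokens, target_tokens, attention_mask).
    """
    # Part A: the corrupted text, with each span collapsed to a single [MASK].
    part_a, prev = [], 0
    for s, e in spans:
        part_a += tokens[prev:s] + [MASK]
        prev = e
    part_a += tokens[prev:]

    # Part B: each span is regenerated left to right. The input is [S] + span,
    # and the target is the same span shifted by one, ending with [E].
    part_b_in, targets = [], []
    for s, e in spans:
        span = tokens[s:e]
        part_b_in += [START] + span
        targets += span + [END]

    inputs = part_a + part_b_in
    n_a, n = len(part_a), len(inputs)

    # mask[i][j] == 1 means position i may attend to position j.
    mask = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if j < n_a:
                mask[i][j] = 1  # every position sees all of Part A (bidirectional in A)
            elif i >= n_a and j <= i:
                mask[i][j] = 1  # Part B sees only itself and earlier Part B tokens
            # Part A never attends to Part B, so those entries stay 0.
    return inputs, targets, mask


if __name__ == "__main__":
    toks = ["x1", "x2", "x3", "x4", "x5", "x6"]
    inp, tgt, m = blank_infill_example(toks, [(2, 3), (4, 6)])
    print(inp)
    print(tgt)
```

With the six-token example above, Part A becomes `x1 x2 [MASK] x4 [MASK]`, and Part B asks the model to produce `x3 [E] x5 x6 [E]`. A GPT-style model, by contrast, would use a purely causal mask over the whole sequence.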

When it comes to deployment, Zhipu AI has chosen not to build industry-specific large models. Instead, it encourages industry clients to fine-tune its general-purpose foundation models. According to CEO Zhang Peng, only large-scale general models can give rise to emergent, human-like cognitive abilities.

In addition, Tsinghua University and Zhipu AI have introduced AgentTuning, a method that improves how well open-source large language models perform as AI agents.

Zhipu AI has won over investors and internet giants not only because of its technology, but also because of its choices of path, model, and strategy, and its clear positioning as a provider of foundational large models.

As CEO Zhang Peng put it, Zhipu AI's full product lineup now benchmarks against OpenAI's offerings across the board.

So, beyond its proven path and models, is Zhipu AI still missing any pieces of the puzzle?

Zhipu AI's commercially licensed open model is currently limited to 6B, that is, 6 billion parameters. By comparison, GPT-3 is an autoregressive language model with 175 billion parameters, while OpenAI has not publicly disclosed the parameter count of GPT-3.5; OpenAI has not released the weights of either model.

More notably, Alibaba recently open-sourced a 72B-parameter model. Current large model applications are still largely in a "brute force works miracles" phase, in which more parameters generally translate into better real-world performance.

So although Zhipu AI, one of China's leading open-source large model developers, has a robust technical architecture, a noticeable gap remains between its openly licensed model and the scale of OpenAI's models, as well as the proprietary models of China's commercial giants. And now that Alibaba has released a larger open-source model, Zhipu AI's edge in the 6B segment may erode.

To bridge this gap, substantial financial support will be required.

"If Zhipu AI had a financial backer like Microsoft, it would be very impressive," an industry insider told Industrialist.

In fact, as Zhipu AI keeps improving its models, their parameter counts will need to grow, which in turn raises the demand for computing power and storage. This poses a huge challenge in terms of funding and resource allocation.
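To give a rough sense of why storage demand grows with model size, here is a back-of-the-envelope sketch of our own (not figures from Zhipu AI or the article). It assumes weight memory is simply parameters times bytes per parameter: 2 bytes per parameter for fp16 inference, and the commonly cited rule of thumb of about 16 bytes per parameter for mixed-precision training with the Adam optimizer (fp16 weights and gradients, an fp32 master copy, and two fp32 optimizer moments). It ignores activations, KV cache, and framework overhead, and uses nominal parameter counts; the names `memory_gb` and `MODELS` are illustrative only.

```python
# Back-of-the-envelope memory estimate for model weights and training state.
# Assumptions (illustrative only): nominal parameter counts, 1 GB = 1e9 bytes,
# and no activations, KV cache, or framework overhead.

FP16_BYTES = 2             # one fp16 weight
ADAM_MIXED_PRECISION = 16  # fp16 weights + fp16 grads + fp32 master copy + 2 fp32 Adam moments


def memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory in GB needed to hold num_params parameters."""
    return num_params * bytes_per_param / 1e9


MODELS = {
    "6B (e.g. ChatGLM3-6B)": 6e9,
    "72B": 72e9,
    "130B": 130e9,
    "175B (GPT-3)": 175e9,
}

if __name__ == "__main__":
    for name, params in MODELS.items():
        weights = memory_gb(params, FP16_BYTES)
        training = memory_gb(params, ADAM_MIXED_PRECISION)
        print(f"{name:<22} weights (fp16): {weights:7.0f} GB   training state: {training:7.0f} GB")
```

Under these assumptions, a 6B model needs about 12 GB for its fp16 weights, which fits on a single high-end GPU, whereas a 130B model needs roughly 260 GB for its weights alone and on the order of 2 TB of training state, which has to be spread across many accelerators.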

Roughly speaking, privately deploying a 130-billion-parameter model costs close to 40 million yuan a year, and it remains unclear how much value that investment generates. In large AI model deployment, small enterprises currently have limited ability to pay, while large enterprises are either building their own models or still exploring and learning, which makes commercialization difficult.

Where the money will come from is therefore an urgent question.

"Part of the reason Zhipu open-sourced its 6B model was to signal to the market: 'We have something better; see if you're willing to pay for it,'" an industry insider told Industrialist. For Zhipu AI, open-sourcing the 6B model is a clear strategy for showcasing its capabilities and attracting funding.

"Another approach is to expand its circle of partners," the insider added.

It's well-known that internet giants hold significant advantages in computing power, storage capacity, and data resources. For Zhipu AI, building these capabilities would require substantial capital investment. Collaborating with these giants can significantly reduce R&D costs and improve efficiency. Additionally, Zhipu AI can leverage cloud providers' market presence and channels to promote its AI technologies and services.

For the cloud providers, meanwhile, large models are typically deployed in the cloud and billed on a pay-as-you-go basis, so the more users consume models and resources, the greater the demand for cloud computing power and the higher the cloud providers' revenue. Cloud vendors can also draw on Zhipu AI's technical capabilities to strengthen their competitiveness in artificial intelligence.

In short, the collaboration boosts cloud vendors' revenue while reducing large model providers' infrastructure investment: a win-win for both sides.

Currently, Zhipu AI has initiated a series of collaborations with companies such as Alibaba, Tencent, and Meituan.

From this perspective, Zhipu AI is "highly sought after" because of its open, integrated business model. At a time when domestic large model competition is increasingly fierce and deployment remains difficult, Zhipu AI's approach is better suited to driving large model adoption and accelerating the growth of the large model ecosystem.

Zhipu AI's model opens up new possibilities, and raises new questions, both for the company itself and for the future of China's large model industry.

"A model must be capable of replacing half the workforce before enterprises would consider adopting it." This statement from an industry insider reflects the prevailing view on the long road ahead for large model commercialization.

Objectively speaking, the domestic large model ecosystem is flourishing but already showing signs of homogenization. This not only leads to inefficient use of infrastructure such as computing power but may also breed unhealthy competition.

With deployment progressing slowly and new large model startups springing up like mushrooms after rain, a significant bubble is all but inevitable. For domestic large model providers, using ecosystem collaboration and a division of labor to drive commercialization is undoubtedly the best path forward.

In fact, there is currently no generational gap in algorithms between mainstream Chinese and international large models, but there are gaps in computing power and data resources.

Strong support for leading domestic technology companies developing independently controllable general-purpose models, combined with encouragement for vertical sectors to use open-source tools to build standardized, controllable toolchains on top of large models, would let the industry pursue both "large and powerful" general models and "small and refined" vertical industry models. Over time, this would establish a healthy ecosystem in which foundation models and specialized small models coexist symbiotically and evolve together.

As the large model ecosystem matures, it will also bring several new changes.

First is the improvement in model quality. With technological advancements and increased resource investment, future large models will achieve higher accuracy, stronger comprehension capabilities, and broader applicability. This means they will not only better understand natural language but also perform more complex tasks such as translation, reasoning, and creative work.

Second is the expansion into richer application domains. Beyond traditional text processing, large models will play a more significant role in areas like speech recognition, image generation, video understanding, and recommendation systems. This means we can enjoy the convenience of AI in more scenarios.

Additionally, future large models will become more customizable, better meeting users' personalized needs. Users can select appropriate models based on their actual requirements and perform customized configurations. This will enable users to more flexibly utilize large models to solve their specific problems.

In the large model ecosystem, data will become more shared and open. Institutions and enterprises may strengthen cooperation, sharing high-quality data resources to promote the development of large model technology. Such collaboration will provide broader opportunities for the development and application of large models.

Every new wave of technology needs companies willing to take on a leading role. Today, technical architecture is the key yardstick for large models; going forward, the ability to build ecosystems will matter more and more for anyone hoping to ride the wave of AI large models.