Large Models + Search Build a Complete Tech Stack: Baichuan Intelligence Uses Search Enhancement for Enterprise Customization

More than a year after ChatGPT's initial release, the large model boom has mostly stayed at the level of academic research and technical innovation. Few deployments are embedded deeply enough in specific scenarios to deliver industrial value.

The various challenges in practical implementation ultimately point to one direction: industry knowledge.

Facing vertical scenarios across various industries, general models pre-trained on publicly available online information and knowledge struggle to address issues like accuracy, stability, and cost-effectiveness. If, in addition to external real-time information search, a powerful specialized enterprise knowledge base is leveraged to significantly enhance the model's understanding of industry knowledge, the results will naturally be better.

This is akin to the familiar 'open-book exam' model. The stronger the 'memory capacity' of the human brain, the better, but it ultimately has its limits. The reference materials brought into the exam act like external 'hard drives,' allowing test-takers to focus less on memorizing complex knowledge points and more on understanding the fundamental logic of the knowledge.

At the Baichuan2-Turbo series API launch event held on December 19, Wang Xiaochuan, founder and CEO of Baichuan Intelligence, offered a more precise analogy: a large model is like a computer's CPU, internalizing knowledge through pre-training and generating results from user prompts. The context window is like the computer's memory, holding the text currently being processed, while real-time internet information and complete enterprise knowledge bases together form the hard drive of the large model era.

These latest technological insights have been integrated into Baichuan Intelligence's large model products.

Baichuan Intelligence has officially opened access to the search-enhanced Baichuan2-Turbo series APIs, including Baichuan2-Turbo-192K and Baichuan2-Turbo. These APIs not only support an ultra-long context window of 192K but also strengthen knowledge retrieval. All users can upload their own text materials to build an exclusive knowledge base, enabling more complete and efficient intelligent solutions tailored to their business needs.

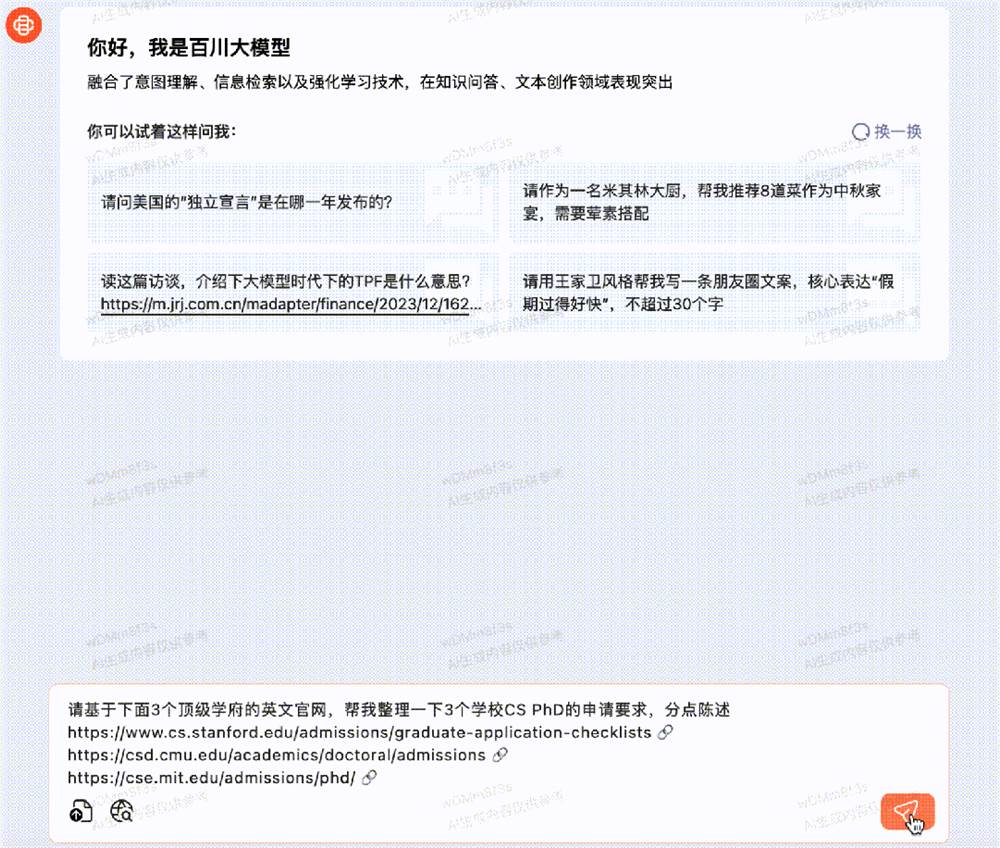

Meanwhile, Baichuan Intelligence has upgraded its official website model experience, officially supporting PDF text uploads and URL inputs. General users can now experience the enhanced general intelligence level through the official website portal, benefiting from long context windows and search enhancement.

For Large Models to Take Off, Both 'Memory' and 'Hard Drive' Are Essential

The key to large model applications lies in effectively utilizing enterprise data, a point deeply felt by practitioners in the field.

For enterprises themselves, years of digital construction have accumulated vast amounts of high-value data and experience. These proprietary data constitute the core competitiveness of enterprises and determine the depth and breadth of large model implementation.

Traditionally, well-funded enterprises have used their own data to train large models starting from the pre-training phase. However, this approach requires significant time and computational resources, along with a dedicated technical team. Other organizations take a leading foundation model and apply post-training (Post-Train) and supervised fine-tuning (SFT) with their proprietary data. This shortens the model development cycle and fills in missing domain knowledge, but it still does not solve hallucination or keep the deployed model's knowledge current. With pre-training, post-training, and SFT alike, every data update requires retraining or fine-tuning the model, with no guarantee of reliability or stability in application; problems can persist even after multiple training iterations.

This highlights the need for a more efficient, precise, and real-time approach to data utilization in large model deployment.

Recently, expanding context windows and incorporating vector databases have emerged as promising solutions. Technically, a larger context window allows the model to reference more information when generating the next token, reducing the likelihood of hallucinations and improving output accuracy. Thus, this technology is essential for effective model deployment. Vector databases, on the other hand, act as an external "storage" for large models. Unlike merely scaling up the model, integrating an external database enables the model to answer user queries across a broader dataset, enhancing adaptability to diverse environments and questions at minimal cost.

However, each method has its limitations, and no single solution can fully overcome the challenges of large model deployment.

For instance, an overly long context window runs into limits on capacity, cost, and performance. The first is capacity: a 128K window can hold at most 230,000 Chinese characters, roughly equivalent to a 658KB text document. The second is computational cost: inference with a long-window model consumes a large number of tokens, which makes it expensive. The third is performance: because inference speed is directly tied to text length, long texts still slow the model down even with extensive caching.
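As a rough sanity check on the capacity figure, the short sketch below estimates the file size of 230,000 Chinese characters. It assumes UTF-8 encoding at 3 bytes per common Chinese character; the article does not state which encoding its 658KB figure uses, and the function name is illustrative.

```python
# Back-of-envelope size of a text that fills the window.
# Assumption (not from the article): UTF-8 text, where a common
# Chinese character occupies 3 bytes.
BYTES_PER_CJK_CHAR_UTF8 = len("中".encode("utf-8"))  # 3


def text_size_kib(num_chars: int, bytes_per_char: int = BYTES_PER_CJK_CHAR_UTF8) -> float:
    """Approximate size in KiB of num_chars characters."""
    return num_chars * bytes_per_char / 1024


size = text_size_kib(230_000)
print(f"{size:.0f} KiB")  # about 674 KiB
```

Under that assumption the estimate lands in the same range as the quoted 658KB, which is a small document by enterprise knowledge base standards.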

For vector databases, their query and indexing operations are more complex than traditional relational databases, which imposes additional computational and storage resource pressures on enterprises. Moreover, the domestic vector database ecosystem is relatively weak, presenting a high development barrier for small and medium-sized enterprises.

In Baichuan Intelligence's view, only by combining long-window models with search/RAG (Retrieval-Augmented Generation) to form a complete technical stack of 'long-window models + search' can truly efficient and high-quality information processing be achieved.

In terms of context windows, Baichuan Intelligence launched the Baichuan2-192K model on October 30, which at the time featured the world's longest context window, capable of processing 350,000 Chinese characters at once, reaching industry-leading standards. Simultaneously, Baichuan Intelligence upgraded its vector database to a search-enhanced knowledge base, greatly enhancing the ability of large models to acquire external knowledge. Its combination with ultra-long context windows can connect to all online information and enterprise knowledge bases, thereby replacing most enterprise personalized fine-tuning and addressing 99% of customized enterprise knowledge base needs.

This brings obvious benefits to enterprises, not only significantly reducing costs but also better accumulating vertical domain knowledge, enabling the continuous appreciation of enterprise proprietary knowledge bases as core assets.

Long-Window Models + Search Enhancement: How Can the Application Potential of Large Models Be Improved?

On one hand, without changing the underlying model, a large language model can combine its internalized knowledge with external information by pairing greater memory capacity (a longer context window) with search augmentation (access to real-time internet information and expert knowledge from specialized domain databases).

On the other hand, the incorporation of search augmentation technology can better leverage the advantages of long context windows. This technology enables large models to precisely understand user intent, identify the most relevant knowledge from vast internet and professional/enterprise knowledge bases, and then load sufficient information into the context window. With the help of long-window models, search results can be further summarized and refined, fully utilizing the context window's capacity to help the model generate optimal results. This creates a synergistic effect between technical modules, forming a closed-loop, powerful capability network.
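The step of loading "sufficient information into the context window" can be pictured as a packing problem. The following is a minimal sketch under stated assumptions, not Baichuan's implementation: `Passage`, `count_tokens`, and `pack_context` are hypothetical names, and the word-count tokenizer is a crude stand-in for a real one.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    text: str
    score: float  # relevance score from the retriever (higher is better)


def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def pack_context(passages: list[Passage], budget: int) -> list[Passage]:
    """Greedily keep the highest-scoring passages that still fit in the token budget."""
    chosen: list[Passage] = []
    used = 0
    for passage in sorted(passages, key=lambda p: p.score, reverse=True):
        cost = count_tokens(passage.text)
        if used + cost <= budget:
            chosen.append(passage)
            used += cost
    return chosen


passages = [
    Passage("refund policy allows returns within thirty days", 0.91),
    Passage("company picnic is scheduled for june", 0.12),
    Passage("refunds are issued to the original payment method", 0.85),
]
context = pack_context(passages, budget=16)
for passage in context:
    print(passage.score, passage.text)
```

With a 16-token budget the two refund passages fit and the unrelated one is left out. A longer window simply raises `budget`, which is why retrieval quality and window size reinforce each other.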

The combination of these two approaches can expand the capacity of context windows to unprecedented levels. Baichuan Intelligence, through its long-window + search augmentation approach, has increased the original text scale accessible to large models by two orders of magnitude on top of a 192K long context window, reaching 50 million tokens.
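The "two orders of magnitude" figure can be checked with simple arithmetic. The sketch below assumes "192K" means 192,000 tokens; the exact token count is not given in the article.

```python
import math

WINDOW_TOKENS = 192_000          # assumption: "192K" read as 192,000 tokens
SEARCHABLE_TOKENS = 50_000_000   # scale quoted for long window + search

ratio = SEARCHABLE_TOKENS / WINDOW_TOKENS
orders_of_magnitude = math.log10(ratio)

print(f"ratio: about {ratio:.0f}x")                          # about 260x
print(f"orders of magnitude: about {orders_of_magnitude:.1f}")  # about 2.4
```

A ratio of roughly 260 is a little over two orders of magnitude, consistent with the claim.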

The "Needle in a Haystack" test, designed by AI entrepreneur and developer Greg Kamradt, is widely regarded in the industry as the most authoritative method for evaluating how accurately large models process long texts.

To validate the capabilities of long context windows combined with search enhancement, Baichuan Intelligence sampled a 50-million-token dataset as the "haystack" and inserted domain-specific questions as "needles" at different positions. It tested both pure embedding retrieval and sparse retrieval + embedding retrieval.

For requests within 192K tokens, Baichuan Intelligence achieved 100% answer accuracy. For documents exceeding 192K tokens, the company used its search system to extend the test context length to 50 million tokens, evaluating both pure vector retrieval and sparse + vector retrieval.

The results showed that sparse retrieval + vector retrieval achieved 95% accuracy, approaching perfect scores even in the 50-million-token dataset, while pure vector retrieval only reached 80% accuracy. Additionally, Baichuan's search-enhanced knowledge base outperformed industry-leading models like GPT-3.5 and GPT-4 across three benchmark tests: the Bojin Large Model Challenge (financial document understanding), MultiFieldQA-zh, and DuReader.
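To make the shape of such a test concrete, here is a toy harness: it inserts a needle at several depths of a synthetic haystack and reports how often a retriever surfaces it. Everything in it is an assumption for illustration (the function names, the filler text, and the simple keyword retriever); it is not the dataset or the retrieval system used in the test described above.

```python
def build_haystack(filler: list[str], needle: str, depth: float) -> list[str]:
    """Insert the needle at a relative depth (0.0 = start, 1.0 = end)."""
    pos = round(depth * len(filler))
    return filler[:pos] + [needle] + filler[pos:]


def keyword_retriever(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Toy retriever: rank documents by the number of words shared with the query."""
    q = set(query.lower().split())
    ranked = sorted(docs, key=lambda d: len(q & set(d.lower().split())), reverse=True)
    return ranked[:k]


def needle_accuracy(retriever, filler, needle, query, depths) -> float:
    """Fraction of insertion depths at which the retriever returns the needle."""
    hits = 0
    for depth in depths:
        docs = build_haystack(filler, needle, depth)
        hits += needle in retriever(query, docs)
    return hits / len(depths)


filler = [f"filler paragraph number {i} about unrelated topics" for i in range(1000)]
needle = "the warranty period for model zx9 is eighteen months"
query = "what is the warranty period for model zx9"

accuracy = needle_accuracy(keyword_retriever, filler, needle, query,
                           depths=[0.0, 0.25, 0.5, 0.75, 1.0])
print(f"needle found at {accuracy:.0%} of depths")
```

Swapping `keyword_retriever` for a vector retriever or a hybrid one, while holding the haystack fixed, is what lets the two approaches be compared on equal terms.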

Combining Long Context Windows with Search Presents Challenges, Baichuan Intelligence Adapts Strategically

While the combination of "long-window models + search" can overcome the limitations of large models in terms of hallucinations, timeliness, and knowledge, the prerequisite is to first solve the integration challenges between the two.

The degree to which these two can be perfectly integrated largely determines the final effectiveness of the model.

Especially now, as the way users express their information needs is undergoing subtle changes, the deep integration with search poses new challenges for Baichuan Intelligence at every stage.

On one hand, in terms of input methods, user queries are no longer single words or short phrases but have shifted to more natural conversational interactions, even continuous multi-turn dialogues. On the other hand, the forms of queries have become more diverse and closely tied to context. The input style has become more colloquial, and queries tend to be more complex.

These changes in how prompts are written do not fit traditional search logic, which is built around keywords and short phrases. Aligning the two is the first major challenge in combining long-context models with search systems.

To better understand user intent, Baichuan Intelligence first uses its own large language model, fine-tuned for intent understanding, to convert users' continuous, multi-turn, colloquial prompts into keywords or semantic structures that traditional search engines handle well, which yields more precise and relevant search results.

Second, to handle the increasingly complex questions in real user scenarios, Baichuan Intelligence draws on Meta's CoVe (Chain-of-Verification) technology to break complex prompts down into multiple independent, search-friendly queries that can be retrieved in parallel. The large model can then run a targeted knowledge base search for each sub-query, producing more accurate and detailed answers with fewer hallucinations. It also uses its self-developed TSF (Think Step-Further) technology to infer the deeper questions behind a user's input, which gives a more precise and complete understanding of user intent and guides the model toward more valuable answers.
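The fan-out pattern described here (split a complex prompt into independent sub-queries, search each in parallel, then merge) can be sketched as follows. This illustrates only that pattern, not CoVe or TSF themselves: `decompose` is a placeholder for what would be an LLM call, and the knowledge base and `search` function are toy assumptions.

```python
from concurrent.futures import ThreadPoolExecutor

# Toy knowledge base; document ids and texts are made up for illustration.
KNOWLEDGE_BASE = {
    "doc1": "baichuan2-192k supports a 192k context window",
    "doc2": "hybrid retrieval combines sparse and vector search",
    "doc3": "the cafeteria menu changes every monday",
}


def decompose(prompt: str) -> list[str]:
    """Placeholder: a real system would ask an LLM to split the prompt.
    Here the prompt is simply split on ' and '."""
    return [part.strip() for part in prompt.split(" and ") if part.strip()]


def search(query: str) -> list[str]:
    """Toy search: ids of documents sharing at least two words with the query."""
    q = set(query.lower().split())
    return [doc_id for doc_id, text in KNOWLEDGE_BASE.items()
            if len(q & set(text.split())) >= 2]


def retrieve_for_prompt(prompt: str) -> list[str]:
    """Search every sub-query in parallel and merge hits without duplicates."""
    sub_queries = decompose(prompt)
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(search, sub_queries))  # keeps sub-query order
    merged: list[str] = []
    for hits in results:
        for doc_id in hits:
            if doc_id not in merged:
                merged.append(doc_id)
    return merged


docs = retrieve_for_prompt("how long is the context window and what is hybrid retrieval")
print(docs)  # ['doc1', 'doc2']
```

Because each sub-query is independent, the searches can run concurrently, and each one is short enough to match well against a conventional search index.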

Another challenge relates to the enterprise knowledge base itself. The higher the alignment between user needs and search queries, the better the output of the large model naturally becomes. However, in knowledge base scenarios, to further improve the efficiency and accuracy of knowledge acquisition, the model requires more powerful retrieval and recall solutions.

Knowledge base scenarios have their own characteristics, since user data is typically private. Traditional vector databases cannot adequately guarantee semantic matching between user needs and the knowledge base.

To address this, Baichuan Intelligence independently developed the Baichuan-Text-Embedding vector model, pre-trained on over 1.5T tokens of high-quality Chinese data. A proprietary loss function removes the dependence on batch size found in contrastive learning methods. The vector model topped C-MTEB, currently the largest and most comprehensive benchmark for Chinese semantic vectors, leading in classification, clustering, ranking, retrieval, and text similarity tasks as well as in overall score.

While vector retrieval remains the mainstream method for building large model knowledge bases, relying solely on it is clearly insufficient. The effectiveness of vector databases heavily depends on training data coverage, and their generalization capability suffers significantly in uncovered domains, creating substantial challenges for private knowledge base scenarios. Additionally, the length disparity between user prompts and knowledge base documents presents further challenges for vector retrieval.

Therefore, Baichuan Intelligence added sparse retrieval and a rerank model on top of vector retrieval, creating a hybrid approach that combines vector and sparse retrieval and significantly improves recall of target documents. According to the data, this hybrid approach reaches 95% recall for target documents, while most open-source vector models have recall rates below 80%.
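The article does not say how the sparse and vector results are merged. One common way to combine two ranked lists is reciprocal rank fusion (RRF), used below purely as an illustrative stand-in; the document ids and rankings are made up, and a rerank model would normally rescore the fused list afterwards.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of document ids.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    so documents ranked well by both retrievers rise to the top.
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical outputs of a sparse (keyword) retriever and a vector retriever.
sparse_ranking = ["doc_a", "doc_b", "doc_c"]
vector_ranking = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([sparse_ranking, vector_ranking])
print(fused)  # ['doc_b', 'doc_a', 'doc_d', 'doc_c']
```

Documents found by only one retriever (`doc_c`, `doc_d`) still survive in the fused list, which is the recall benefit of a hybrid approach: each method covers cases the other misses.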

In addition, reference material that is inaccurate or poorly matched to the model can amplify a large language model's own hallucinations during question answering.

In response, Baichuan Intelligence has pioneered Self-Critique technology on top of general RAG. It has the large model examine the retrieved content against the prompt, from perspectives such as relevance and usability, and perform a second review that selects the candidate content that best matches the prompt and is of the highest quality. This raises the knowledge density and breadth of the material while reducing noise in the retrieval results.
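The second-review idea can be sketched as a filter that scores each retrieved passage on relevance and usability and keeps only those that pass both. This is a minimal sketch under stated assumptions, not Baichuan's Self-Critique implementation: in a real system the critic would be the large model itself, whereas `toy_critic` here is a hypothetical word-overlap heuristic, and the thresholds are arbitrary.

```python
from typing import Callable

Critique = dict[str, float]  # e.g. {"relevance": 0.9, "usability": 1.0}


def toy_critic(prompt: str, passage: str) -> Critique:
    """Stand-in for an LLM judgment: word overlap as relevance,
    and a minimum length as a crude proxy for usability."""
    p = set(prompt.lower().split())
    d = set(passage.lower().split())
    relevance = len(p & d) / len(p) if p else 0.0
    usability = 1.0 if len(passage.split()) >= 4 else 0.0
    return {"relevance": relevance, "usability": usability}


def self_critique_filter(
    prompt: str,
    passages: list[str],
    critic: Callable[[str, str], Critique] = toy_critic,
    min_relevance: float = 0.5,
    min_usability: float = 0.5,
) -> list[str]:
    """Keep passages that pass both checks, most relevant first."""
    kept: list[tuple[float, str]] = []
    for passage in passages:
        critique = critic(prompt, passage)
        if critique["relevance"] >= min_relevance and critique["usability"] >= min_usability:
            kept.append((critique["relevance"], passage))
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [passage for _, passage in kept]


candidates = [
    "annual leave policy grants fifteen days",
    "leave policy",
    "server maintenance window is sunday night",
]
kept = self_critique_filter("annual leave policy", candidates)
print(kept)  # ['annual leave policy grants fifteen days']
```

The fragment "leave policy" is relevant but too short to be useful, and the maintenance note is irrelevant, so only the first passage reaches the context window.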

Following the "long-window model + search" technical roadmap, Baichuan Intelligence draws on its expertise in search technology, particularly its industry-leading combination of vector retrieval and sparse retrieval, to address the mismatch between large models and user prompts or enterprise knowledge bases. This strengthens its search-augmented knowledge base and helps large models serve vertical industry scenarios more efficiently.

Large Model Implementation: Search Enhancement Ushers in a New Era of Enterprise Customization

In just one year, the development of large models has surpassed expectations. We once envisioned that 'industry-specific large models' would unleash productivity across various sectors. However, these models are constrained by factors such as the need for specialized technical talent and computing power, leaving many small and medium-sized enterprises (SMEs) unable to reap the benefits of this large model wave.

It is evident that taking the step 'from product to implementation' is indeed more challenging than the initial 'from technology to product' phase.

Amid the fierce competition from the 'hundred-model battle' to the race for customized large models, technology has undergone multiple iterations. From initially developing industry-specific large models based on pre-training, to creating enterprise-exclusive models through post-training or SFT, and later leveraging technologies like long-context windows and vector databases to develop bespoke customized models—each advancement has brought large models closer to the ideal of 'omniscience and omnipotence.' However, widespread application and implementation in vertical industry scenarios remain elusive.

Baichuan Intelligence has built a 'large model + search' technology stack. By improving model performance with long-window technology and using search augmentation to connect domain knowledge with web-wide information more efficiently and comprehensively, it has opened a lower-cost path to customized large models and taken a first step toward 'omniscience.' We have good reason to believe this will lead the large model industry into a new phase of implementation.