OpenAI Demonstrates Control Methods for Superintelligent AI


OpenAI was founded on a commitment to build artificial intelligence that benefits all of humanity, but its commercial ambitions have become more prominent since the launch of ChatGPT last year and during the company's recent governance crisis. Now the company says that a new research team focused on managing future superintelligent AI is beginning to yield results.

OpenAI researcher Leopold Aschenbrenner stated: "Artificial General Intelligence (AGI) is rapidly approaching. We will see superhuman models with immense capabilities, which could be highly dangerous, and we currently lack methods to control them." OpenAI has pledged to dedicate one-fifth of its computing power to the Superalignment project.

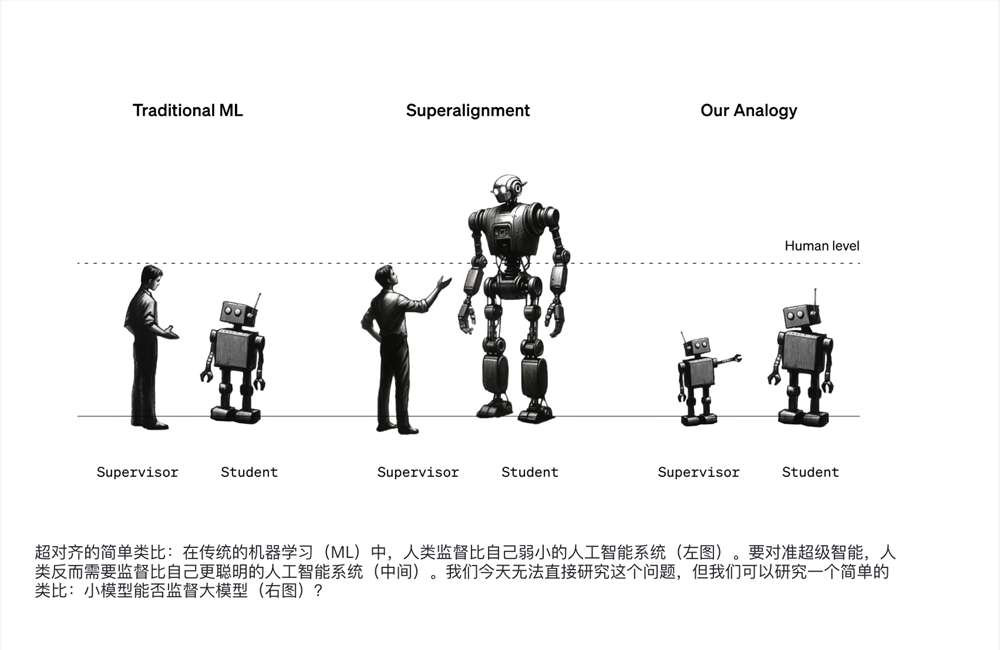

A research report released by OpenAI today presents experimental results aimed at testing how weaker AI models can guide the behavior of more intelligent AI models. Although the technology involved is far from surpassing human flexibility, this scenario is designed to represent a future period when humans must collaborate with AI systems smarter than themselves.

OpenAI researchers examined a process called supervision, which is used to adjust large language models like GPT-4 to make them more helpful and less harmful. Currently, this involves humans giving feedback on which of an AI system's responses are good and which are bad. As AI advances, researchers are exploring how to automate this process, partly to save time and partly because they believe that, as AI becomes more powerful, it may become impossible for humans to provide useful feedback.

In a control experiment in which OpenAI's GPT-2 text generator, first released in 2019, was used to teach GPT-4, the newer system became less capable and came to resemble the weaker one. Researchers tested two ideas to address this issue. One approach involved training progressively larger models to reduce the performance lost at each step. In the other, the team applied an algorithmic adjustment to GPT-4 that allowed the more powerful model to follow the guidance of the weaker model without significantly compromising its performance. The second method proved more effective, though the researchers acknowledged that neither approach guarantees that the more powerful model will behave perfectly, and they described the work as a starting point for further research.
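The report as summarized here does not spell out the algorithmic adjustment. As a rough illustration only, the sketch below assumes it works like a loss that blends two signals: agreement with the weak supervisor's label and agreement with the strong model's own confident guess. The function names and the `alpha` and `threshold` parameters are hypothetical and chosen for this example; they are not taken from OpenAI's code.

```python
import math


def binary_cross_entropy(prob, target, eps=1e-12):
    """Cross-entropy between a predicted probability and a 0/1 target."""
    prob = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


def weak_to_strong_loss(strong_prob, weak_label, alpha=0.5, threshold=0.5):
    """Blend the weak supervisor's label with the strong model's own guess.

    strong_prob: strong model's predicted probability of the positive class.
    weak_label:  0/1 label produced by the weaker supervisor.
    alpha:       assumed mixing weight; 0 means "imitate the weak label only".
    threshold:   assumed cutoff used to harden the strong model's prediction.
    """
    hardened = 1.0 if strong_prob > threshold else 0.0
    weak_term = binary_cross_entropy(strong_prob, weak_label)
    self_term = binary_cross_entropy(strong_prob, hardened)
    return (1.0 - alpha) * weak_term + alpha * self_term


if __name__ == "__main__":
    # The strong model is confident (0.9) but the weak supervisor says "no".
    print("imitate weak label only:", weak_to_strong_loss(0.9, 0.0, alpha=0.0))
    print("blended loss:           ", weak_to_strong_loss(0.9, 0.0, alpha=0.5))
```

With `alpha` above zero, the strong model is penalized less for confidently disagreeing with the weak supervisor, which is one way a stronger model could avoid simply copying a weaker model's mistakes. When the two agree, the blended loss equals the plain imitation loss.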

Screenshot from OpenAI

Dan Hendrycks, director of the Center for AI Safety, stated: "It's encouraging to see OpenAI actively tackling the challenge of controlling superhuman AI. We'll need years of dedicated effort to address this issue."

Aschenbrenner and two other members of the Superalignment team, Collin Burns and Pavel Izmailov, told WIRED they were encouraged by what they saw as a crucial first step in taming potential superhuman AI. "Even though sixth graders know less math than college math majors, they can still communicate their goals to university students," Izmailov said. "That's what we're trying to achieve now."

The Superalignment team is co-led by OpenAI co-founder, chief scientist, and board member Ilya Sutskever. Sutskever is a co-author of the paper released today, but OpenAI declined to let him discuss the project.

After CEO Sam Altman returned to OpenAI last month under an agreement that saw most board members resign, Sutskever's future at the company appears uncertain.

Aschenbrenner said: "We are very grateful to Ilya. He has been a tremendous driving force and inspiration for the project."

OpenAI researchers are not the first to attempt using today's AI technologies to test techniques that might help control future AI systems. As with previous work in corporate and academic labs, there is no way to know whether ideas that work in carefully designed experiments will be practical in the future. The researchers describe their effort to refine the ability of weaker AI models to train more powerful ones as "a key component of the broader superalignment problem."

These so-called AI alignment experiments also raise questions about how reliable any control system can be. The core of OpenAI's new technique relies on the more powerful AI system deciding for itself which guidance from the weaker system can be ignored, which might lead it to disregard information that would prevent it from behaving unsafely in the future. For such a system to be useful, progress will be needed in providing guarantees about alignment. Burns said: "You ultimately need a very high level of trust."

Stuart Russell, a professor at UC Berkeley working on AI safety, stated that the idea of using less powerful AI models to control more powerful ones has been around for some time. He also mentioned that it remains unclear whether current methods for teaching AI behavior are the way forward, as they have so far failed to make current models behave reliably.

Although OpenAI is promoting these first steps toward controlling more advanced AI, the company is also keen to seek external assistance. The company announced today that it will collaborate with influential investor and former Google CEO Eric Schmidt to provide $10 million in grants to external researchers working on topics including weak-to-strong supervision, the interpretability of advanced models, and strengthening models against prompts designed to bypass their safeguards. OpenAI also plans to host a conference on superalignment next year, according to researchers involved in the new paper.

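Sutskever, the OpenAI co-founder and co-lead of the Superalignment team, has led much of the company's most important technical work and is one of a growing number of prominent AI figures who worry about how to control AI as it becomes more powerful. The question of how to control future AI technology has drawn new attention this year, in large part because of ChatGPT. Sutskever studied for his PhD under deep neural network pioneer Geoffrey Hinton, who left Google in May of this year in order to warn that AI now appears to be rapidly approaching human-level performance on some tasks.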