Together AI Releases AI Model StripedHyena-7B, Outperforming Llama-2 7B

Together AI has released StripedHyena-7B, a language model that has drawn wide attention. The base model is StripedHyena-Hessian-7B (SH 7B), and a chat model, StripedHyena-Nous-7B (SH-N 7B), has been released alongside it. StripedHyena builds on key lessons from a series of effective sequence modeling architectures developed over the past year, including H3, Hyena, HyenaDNA, and Monarch Mixer.

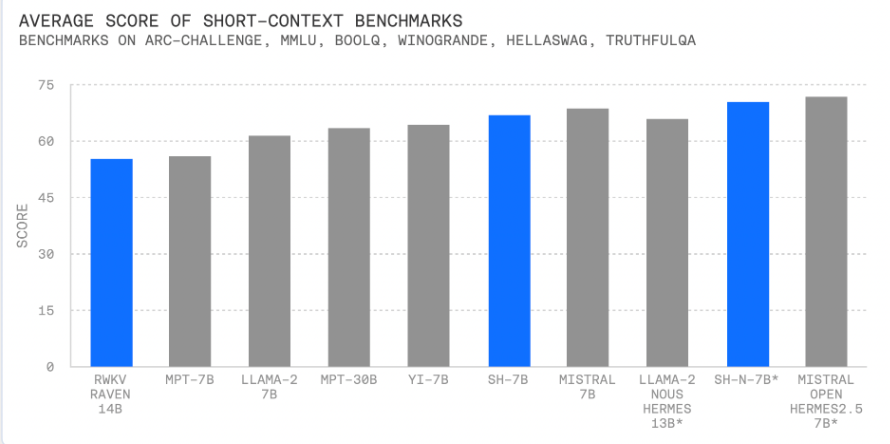

The researchers emphasize that StripedHyena is more efficient when training, fine-tuning, and generating long sequences, with faster speeds and lower memory use. It is a hybrid architecture that combines attention with gated convolutions arranged in Hyena operators. On short-sequence tasks, including those on the OpenLLM leaderboard, StripedHyena outperforms Llama-2 7B, Yi 7B, and the strongest Transformer alternatives, such as RWKV 14B.

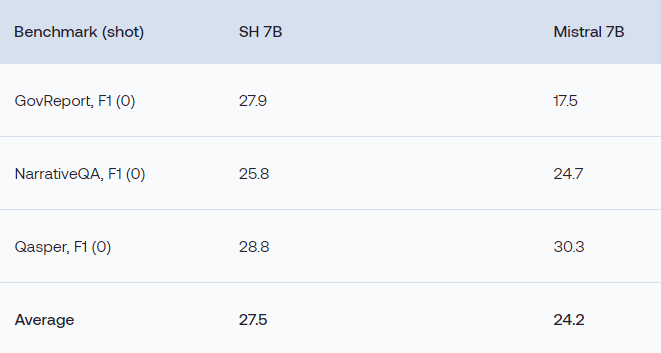

The model was evaluated on a range of benchmarks covering both short-sequence tasks and long prompts. Perplexity scaling experiments on Project Gutenberg books showed that perplexity either saturates at 32k tokens or keeps decreasing beyond that point, indicating that the model can absorb information from longer prompts.
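To make the perplexity metric concrete, here is a minimal sketch of how it is conventionally computed from per-token log-probabilities. The function name `perplexity` and the toy inputs are illustrative assumptions, not part of the StripedHyena evaluation code.

```python
import math


def perplexity(token_log_probs):
    """Return exp(mean negative log-likelihood) over the given tokens.

    `token_log_probs` holds the natural-log probability the model assigned
    to each observed token. Lower perplexity means better prediction.
    """
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# Toy example (made-up numbers): a model that always gives the correct
# token probability 0.25 behaves like a uniform guess among 4 options,
# so its perplexity is approximately 4.
uniform_guess = [math.log(0.25)] * 8
print(perplexity(uniform_guess))
```

In a scaling experiment of the kind described above, this quantity would be measured at increasing prompt lengths; a curve that keeps falling suggests the model is still using the extra context.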

This efficiency comes from the hybrid design, which combines attention with gated convolutions arranged in Hyena operators. The researchers optimized the design using a grafting technique, which allows the architecture to be modified during training.
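As a rough illustration of what "gated convolution" means, the following toy sketch applies a causal 1-D convolution to one stream and multiplies the result elementwise by a gate. It is a simplified, single-channel example under stated assumptions: the names `causal_conv` and `gated_conv` are hypothetical, and in the real Hyena operator the gate and value streams come from learned projections of the input and the filters are much longer.

```python
def causal_conv(signal, kernel):
    """Causal 1-D convolution: output[t] depends only on signal[0..t]."""
    out = []
    for t in range(len(signal)):
        acc = 0.0
        for k, weight in enumerate(kernel):
            if t - k >= 0:  # never look at future positions
                acc += weight * signal[t - k]
        out.append(acc)
    return out


def gated_conv(gate, value, kernel):
    """Elementwise gate applied to the causally convolved value stream."""
    mixed = causal_conv(value, kernel)
    return [g * m for g, m in zip(gate, mixed)]


# Toy inputs (assumed for illustration only).
value = [1.0, 2.0, 3.0, 4.0]
gate = [1.0, 0.0, 1.0, 0.5]
kernel = [0.5, 0.25]
print(gated_conv(gate, value, kernel))  # [0.5, 0.0, 2.0, 1.375]
```

The gate lets the layer suppress or pass the mixed signal at each position in a data-dependent way, a role loosely comparable to what attention weights play in a Transformer.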

One of StripedHyena's key advantages is its speed and memory efficiency when training, fine-tuning, and generating long sequences. In end-to-end training at sequence lengths of 32k, 64k, and 128k, StripedHyena achieves speedups of 30%, 50%, and 100%, respectively, over optimized Transformer baselines (using FlashAttention v2 and custom kernels).
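One plausible reason the gap widens as sequences grow is asymptotic cost: self-attention scales quadratically with sequence length, while a long convolution evaluated with FFTs scales as L log L. The sketch below compares only these idealized operation counts. It ignores constants and hardware effects, and it assumes 32k means 32,768 tokens; it is not an analysis from the source. Because StripedHyena still contains attention layers, its measured speedups are far smaller than these ratios.

```python
import math


def attention_cost(seq_len):
    """Idealized cost of self-attention: proportional to L^2."""
    return seq_len ** 2


def fft_conv_cost(seq_len):
    """Idealized cost of an FFT-based long convolution: L * log2(L)."""
    return seq_len * math.log2(seq_len)


def cost_ratio(seq_len):
    """How many times more idealized work attention does than the convolution."""
    return attention_cost(seq_len) / fft_conv_cost(seq_len)


for seq_len in (32_768, 65_536, 131_072):
    print(f"{seq_len:>7} tokens: ratio ~ {cost_ratio(seq_len):,.0f}")
```

The ratio grows with every doubling of the sequence length, which is consistent in direction with the larger improvements reported at longer sequences.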

In the future, researchers hope to make significant progress in multiple areas of the StripedHyena model. They plan to build larger models to handle longer contexts, pushing the boundaries of information understanding. Additionally, they aim to introduce multimodal support, enhancing the model's adaptability by allowing it to process and understand data from various sources such as text and images.

The researchers also expect to push StripedHyena's performance further beyond Transformers by introducing additional computation, for example multiple heads in gated convolutions. This approach, inspired by linear attention, has been shown to improve model quality during training in architectures such as H3 and MultiHyena, while also offering advantages in inference efficiency. For full details and credit to all the researchers involved, see the project's blog.

Project URL: https://huggingface.co/togethercomputer/StripedHyena-Hessian-7B