NVIDIA Sells 500,000 GPUs: Behind the AI Boom Lies a Fierce Battle for Graphics Cards


According to statistics, NVIDIA sold approximately 500,000 H100 and A100 GPUs in the third quarter. Behind the explosive growth of large language models lies fierce competition among organizations for GPUs, as well as NVIDIA's shipments of nearly 1,000 tons of graphics cards.

Market research firm Omdia's analysis reveals that NVIDIA sold around 500,000 H100 and A100 GPUs in Q3!

Earlier, Omdia had estimated that NVIDIA's Q2 GPU shipments weighed about 900 tons in total!

Amid the booming large language model industry, NVIDIA has built a formidable graphics card empire.

In the wave of artificial intelligence, GPUs have become highly sought-after resources for institutions, companies, and even nations worldwide.

In the third quarter of this fiscal year, Nvidia generated $14.5 billion in revenue from data center hardware, nearly quadrupling compared to the same period last year.

This growth is clearly attributable to soaring demand for H100 GPUs, fueled by the rapid development of artificial intelligence and high-performance computing (HPC).

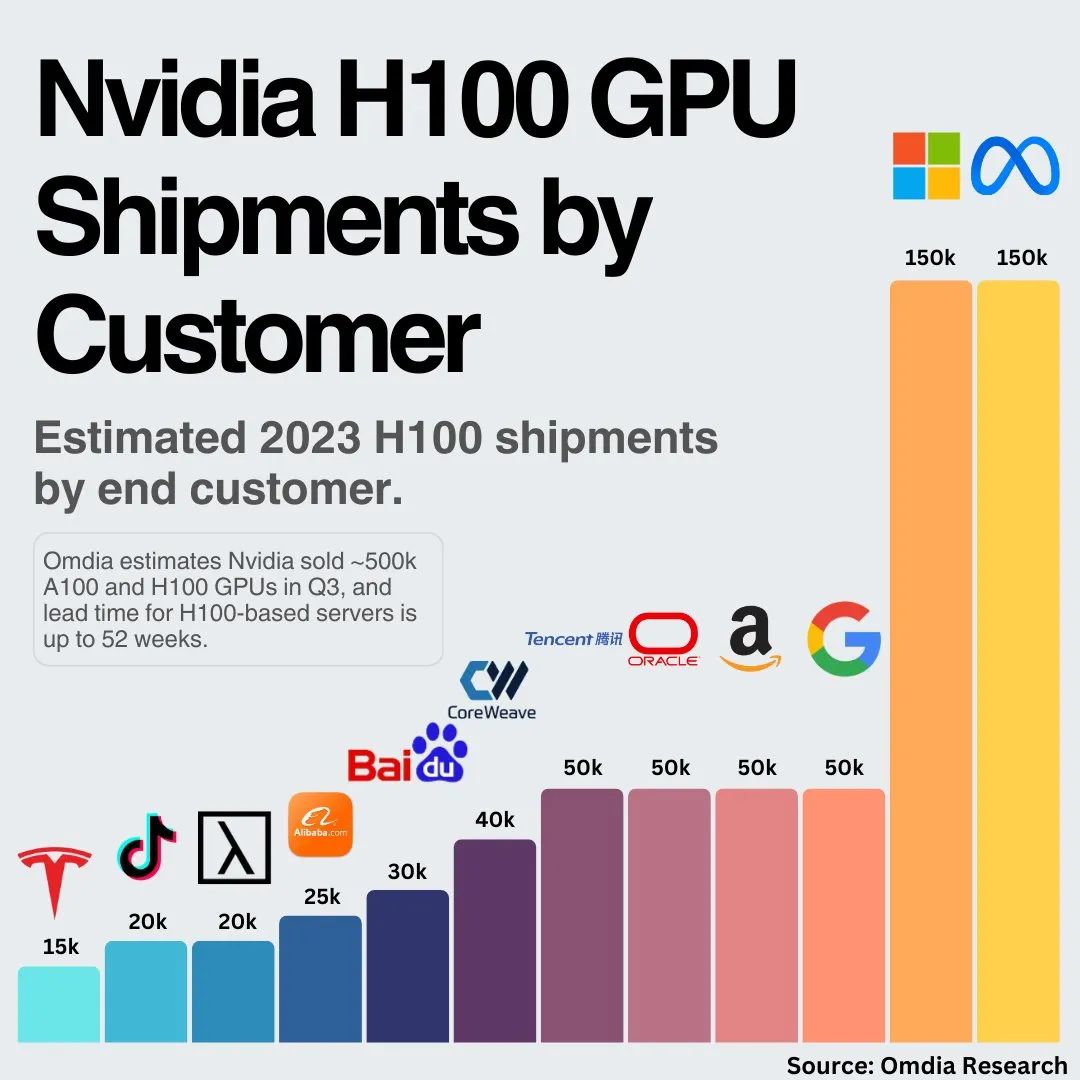

Market research firm Omdia reported that Nvidia sold nearly 500,000 A100 and H100 GPUs. Demand is so high that delivery times for H100-based servers have stretched to 36 to 52 weeks.

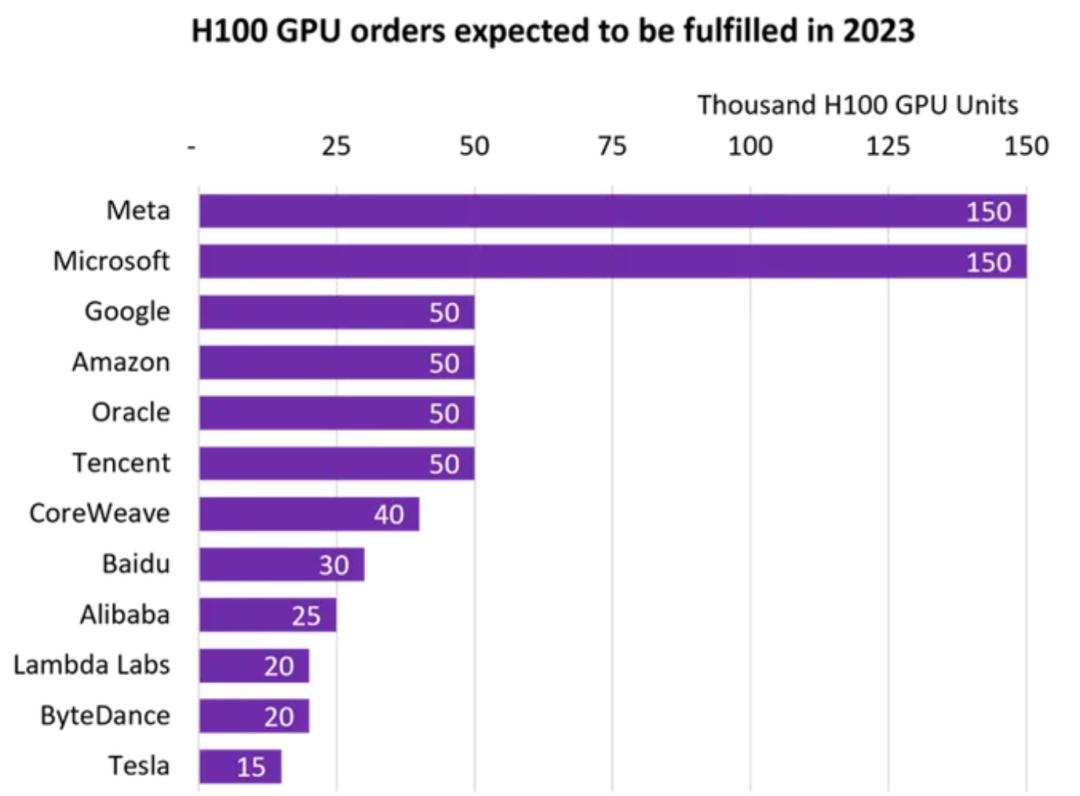

As shown in the figure above, Meta and Microsoft are the largest buyers. Each has procured up to 150,000 H100 GPUs, significantly surpassing the quantities purchased by Google, Amazon, Oracle, and Tencent (50,000 units each).

It is noteworthy that most server GPUs are supplied to hyperscale cloud service providers. Original equipment manufacturers (OEMs) such as Dell, Lenovo, and HPE currently cannot obtain sufficient AI and HPC GPUs.

Omdia predicts that by the fourth quarter of 2023, Nvidia's H100 and A100 GPU sales will exceed 500,000 units.

However, almost all companies purchasing large quantities of Nvidia H100 GPUs are developing their own custom chips for AI, HPC, and video workloads.

Therefore, as they transition to using their own chips, purchases of Nvidia hardware may gradually decrease.

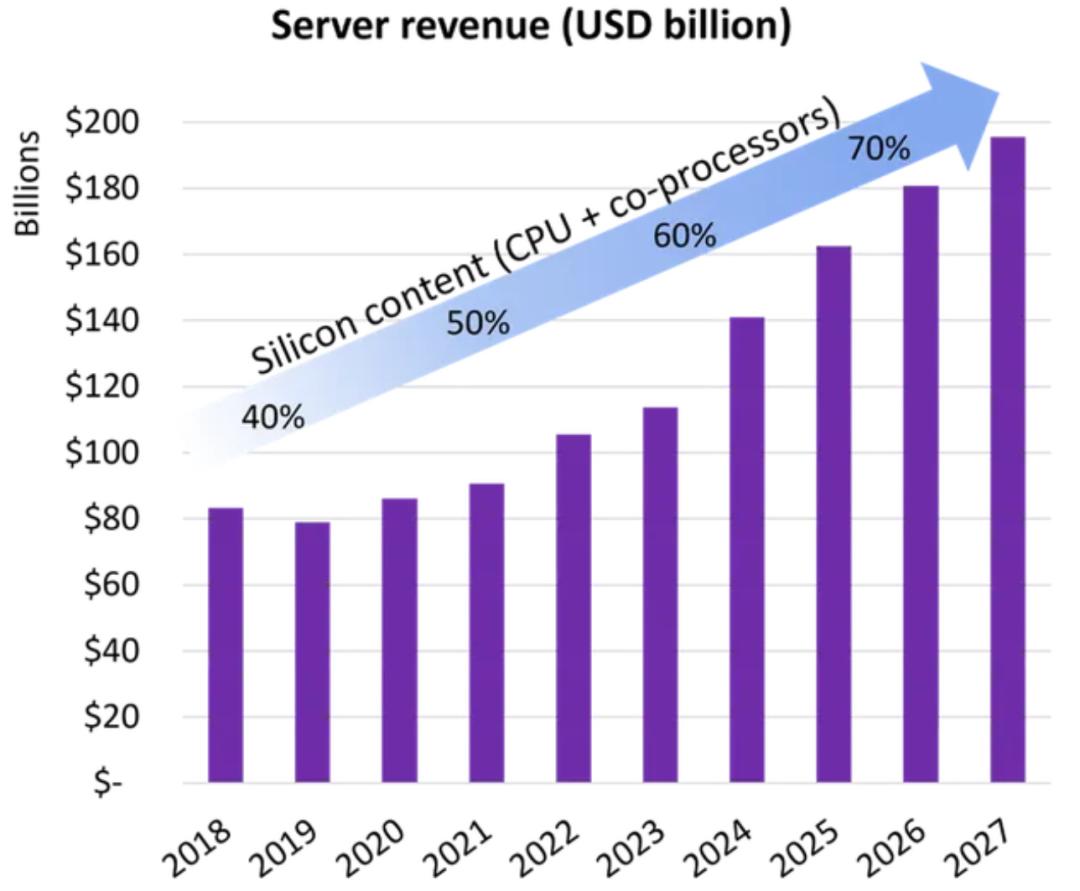

The above chart shows server statistics. In 2023, server shipments decreased by 17% to 20% year-over-year, while server revenue increased by 6% to 8%.

Vlad Galabov, Director of Omdia's Cloud and Data Center Research Practice, and Manoj Sukumaran, Principal Analyst for Data Center Computing and Networking, predict that the server market will reach $195.6 billion by 2027, more than double the value from a decade ago.

As large companies increasingly adopt hyper-heterogeneous computing, using multiple coprocessors to optimize server configurations, demand for server processors and coprocessors will continue to grow.

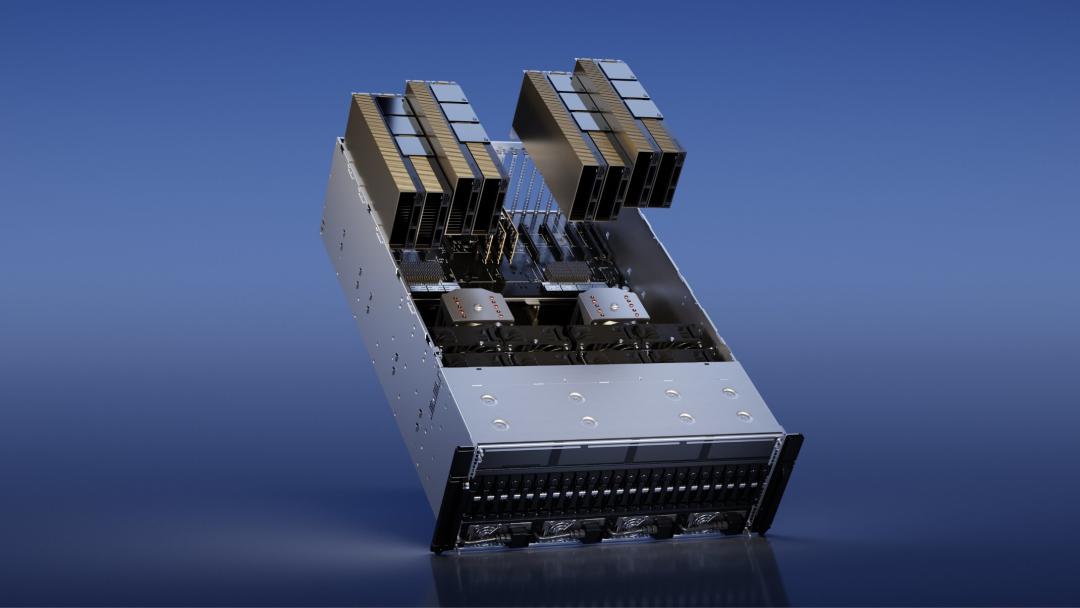

Among servers running AI training and inference, the most popular for large language model training are Nvidia DGX servers equipped with 8 H100/A100 GPUs, and the most popular for inference are Amazon's servers configured with 16 custom coprocessors (Inferentia 2).

Among video transcoding servers equipped with many custom coprocessors, the most popular are Google's video transcoding servers with 20 VCUs (Video Coding Units) and Meta's video processing servers with 12 scalable video processors.

As the demands of certain applications mature, the cost-effectiveness of building optimized custom processors will continue to improve.

Media and AI will be early beneficiaries of hyper-heterogeneous computing, followed by similar optimizations for other workloads such as databases and network services.

Omdia's report indicates that the increase in highly configured artificial intelligence servers is driving the development of data center physical infrastructure.

For example, rack power distribution revenue in the first half of this year grew by 17% compared to last year. With the growing trend toward liquid cooling solutions, data cabinet thermal management revenue is expected to achieve 17% growth in 2023.

Additionally, as generative AI services become more widespread, enterprises will widely adopt AI. However, the current bottleneck in AI deployment speed may be power supply.

Beyond the major players mentioned above, various organizations and companies are also procuring NVIDIA's H100 GPUs to develop their own businesses or invest in the future.

Bit Digital is a sustainable digital infrastructure platform providing digital asset and cloud computing services, headquartered in New York. The company has signed terms with clients to launch its Bit Digital AI business, supporting customers' GPU-accelerated workloads.

Under the agreement, Bit Digital will provide leasing services for a minimum of 1,024 and a maximum of 4,096 GPUs to clients.

Additionally, Bit Digital has agreed to purchase 1,056 NVIDIA H100 GPUs and has made the initial deposit payment.

The BlueSea Frontier Compute Cluster (BSFCC), created by US company Del Complex, is essentially a massive barge containing 10,000 Nvidia H100 GPUs worth $500 million.

According to Reuters, a non-profit organization called Voltage Park has purchased 24,000 Nvidia H100 chips for $500 million.

Voltage Park is an AI cloud computing organization funded by billionaire Jed McCaleb, which plans to lease computing power for AI projects.

Voltage Park offers GPUs at prices as low as $1.89 per GPU per hour. On-demand customers can rent 1 to 8 GPUs, while users wishing to rent more GPUs need to commit to a certain lease duration.

In comparison, Amazon provides on-demand services through P5 nodes with 8 H100 GPUs, but at a significantly higher price.

Calculating based on an 8-GPU node, AWS charges $98.32 per hour, while Voltage Park charges $15.12 per hour.
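The per-node comparison above is simple multiplication. As a minimal Python sketch, using only the prices quoted in this article (the constant and function names are illustrative, and real cloud pricing varies by region and over time):

```python
# Prices quoted in the article, in USD per hour.
VOLTAGE_PARK_PER_GPU_HOUR = 1.89   # lowest quoted per-GPU rate
AWS_P5_NODE_PER_HOUR = 98.32       # on-demand P5 node with 8 H100 GPUs
GPUS_PER_NODE = 8


def node_hourly_cost(per_gpu_hour: float, gpus: int = GPUS_PER_NODE) -> float:
    """Hourly cost of a node, given a per-GPU hourly rate."""
    return round(per_gpu_hour * gpus, 2)


voltage_park_node = node_hourly_cost(VOLTAGE_PARK_PER_GPU_HOUR)
aws_per_gpu = round(AWS_P5_NODE_PER_HOUR / GPUS_PER_NODE, 2)
price_ratio = round(AWS_P5_NODE_PER_HOUR / voltage_park_node, 1)

print(f"Voltage Park, 8-GPU node: ${voltage_park_node:.2f} per hour")
print(f"AWS, per GPU:             ${aws_per_gpu:.2f} per hour")
print(f"AWS vs. Voltage Park:     {price_ratio}x")
```

On these numbers, the AWS node works out to roughly six and a half times the Voltage Park price for the same GPU count.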

Amid the AI boom, Nvidia is showing ambitious plans. According to the Financial Times, this Silicon Valley chip giant aims to increase production of H100 processors, targeting shipments of 1.5 to 2 million units next year.

Thanks to the explosive popularity of large language models like ChatGPT, Nvidia's market value soared in May this year, bringing the company into the trillion-dollar club.

As a fundamental component for developing large language models, GPUs have become highly sought-after by artificial intelligence companies and even nations worldwide.

The Financial Times reported that Saudi Arabia and the United Arab Emirates have purchased thousands of Nvidia's H100 processors.

Meanwhile, venture capital firms with ample funds are also busy purchasing GPUs for startups in their portfolios to build their own AI models.

Former GitHub CEO Nat Friedman and investor Daniel Gross, who have backed successful startups like GitHub and Uber, have purchased thousands of GPUs to establish their own AI cloud service.

The system, named Andromeda Cluster, consists of 2,512 H100 GPUs and can train a 65-billion-parameter AI model in approximately 10 days. While not the largest model currently available, it is still quite impressive.

Although only startups supported by these two investors can access these resources, the initiative has been well-received.

Jack Clark, co-founder of Anthropic, remarked that individual investors are doing more to support compute-intensive startups than most governments.

Compared to the $14.5 billion in the third quarter, Nvidia sold $10.3 billion worth of data center hardware in the second quarter.

Omdia translated the second-quarter figure into physical terms: an Nvidia H100 compute GPU with a heatsink weighs over 3 kg (6.6 lbs) on average, and Nvidia shipped over 300,000 H100 units in the second quarter, for a total of more than 900 tons (1.8 million lbs).

To put this 900 tons into perspective, it is equivalent to:

- 4.5 Boeing 747s

- 11 Space Shuttle orbiters

- 215,827 gallons of water

- 299 Ford F150s

- 18,181 PlayStation 5 consoles

- 32,727 Golden Retrievers

Some netizens commented:

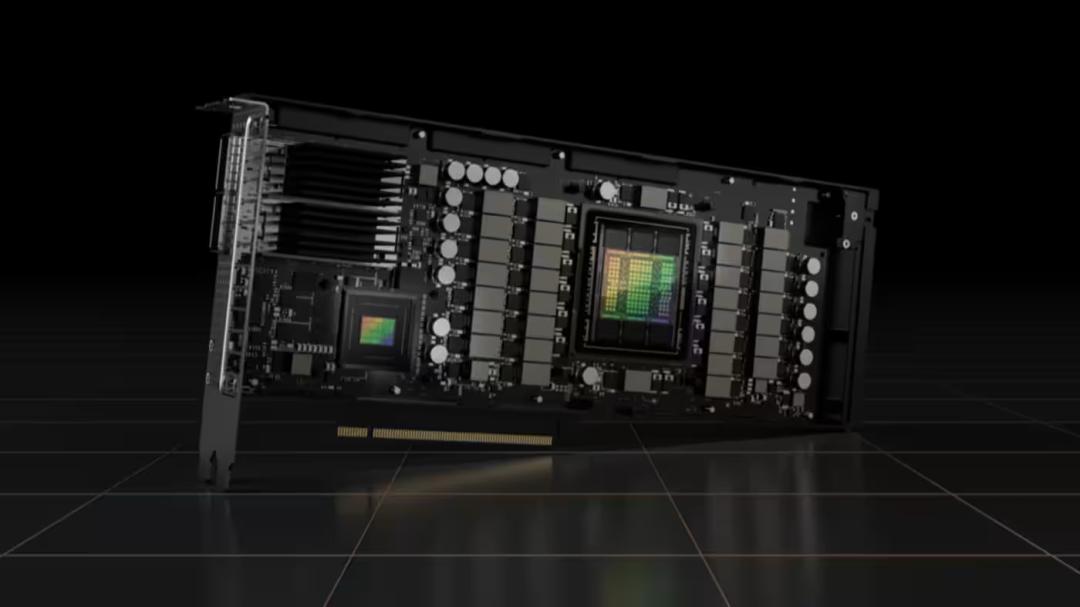

However, some media outlets believe this estimation may not be accurate. The Nvidia H100 comes in three different form factors with varying weights.

The Nvidia H100 PCIe graphics card weighs 1.2 kilograms, while the OAM module with heatsink can weigh up to 2 kilograms.

Assuming 80% of Nvidia H100 shipments are modules and 20% are graphics cards, the average weight of a single H100 is approximately 1.84 kilograms.

Regardless, this is an astonishing figure. Moreover, Nvidia's sales in the third quarter saw significant growth. If we consider 500,000 GPUs at 2 kilograms each, the total weight would be 1,000 tons.
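These weight figures are back-of-the-envelope arithmetic and can be reproduced in a few lines. The Python sketch below uses only the unit counts and per-unit weights quoted above. The function names are illustrative, "ton" is assumed to mean a metric ton (1,000 kg), the 80/20 split is the assumption stated in the text, and the adjusted Q2 total is derived here rather than reported by any source.

```python
KG_PER_TON = 1_000  # assumption: "ton" means metric ton


def shipment_tons(units: int, kg_per_unit: float) -> float:
    """Total shipment weight in tons for a given unit count and unit weight."""
    return units * kg_per_unit / KG_PER_TON


def weighted_average_kg(mix: dict) -> float:
    """Average unit weight; mix maps form factor -> (shipment share, kg)."""
    return sum(share * kg for share, kg in mix.values())


# Omdia's Q2 estimate: 300,000 H100 units at about 3 kg each.
omdia_q2_tons = shipment_tons(300_000, 3.0)

# Alternative estimate: 80% OAM modules at 2 kg, 20% PCIe cards at 1.2 kg.
h100_mix = {"OAM module": (0.80, 2.0), "PCIe card": (0.20, 1.2)}
average_kg = weighted_average_kg(h100_mix)

# Derived here, not stated in the article: Q2 total at the lower average.
adjusted_q2_tons = shipment_tons(300_000, average_kg)

# Q3 scenario from the text: 500,000 GPUs at 2 kg each.
q3_tons = shipment_tons(500_000, 2.0)

print(f"Omdia Q2 estimate:  {omdia_q2_tons:.0f} tons")
print(f"Average H100:       {average_kg:.2f} kg")
print(f"Adjusted Q2 total:  {adjusted_q2_tons:.0f} tons")
print(f"Q3 at 2 kg per GPU: {q3_tons:.0f} tons")
```

Even with the lighter per-unit average, the Q2 shipment would still run to several hundred tons, so the headline conclusion holds either way.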

Nowadays, GPUs are sold by the ton. What do you think about this?