Yann LeCun: Without AI, Facebook Might Have Disappeared Long Ago

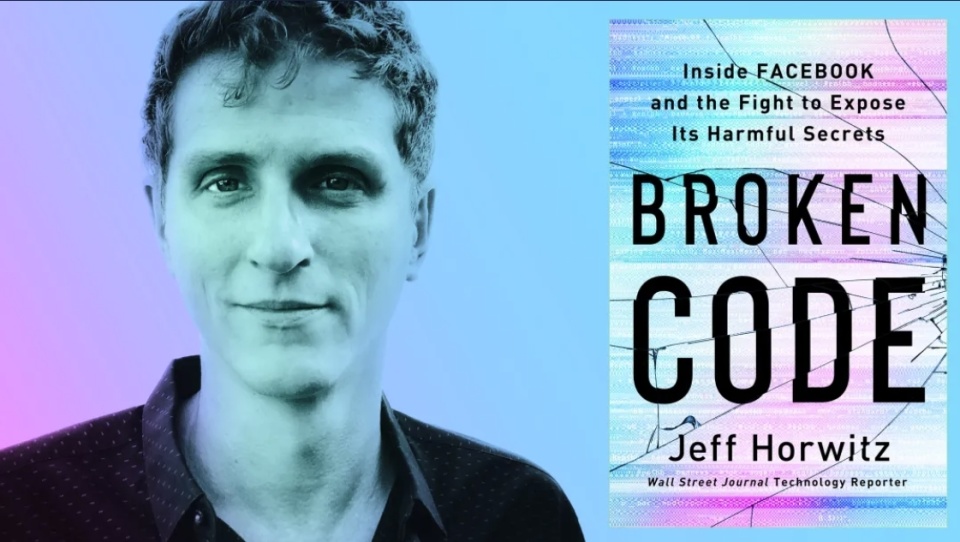

As the world's largest social media company, Facebook (now Meta) holds a unique position in cultural and political life. Together with Instagram and WhatsApp, its apps are among the most widely used software in the world, with billions of users. Facebook's success is deeply intertwined with artificial intelligence (AI). But just how reliant is the company on AI? Perhaps we can find answers in tech journalist Jeff Horwitz's new book, 'Broken Code: Inside Facebook and the Fight to Expose Its Harmful Secrets.'

In 2006, the U.S. Patent Office received a patent application for 'a method for automatically generating a display containing information about another user within a user's social network.' This system aimed to help users avoid sifting through 'cluttered' content and instead generate a 'preference-ordered' list of 'relevant' information. The applicants listed were 'Zuckerberg et al.,' and the product was News Feed.

The idea of presenting users with an activity stream wasn't new—photo-sharing site Flickr and others had experimented with it—but Facebook revolutionized the concept. Before News Feed, Facebook users primarily interacted with the platform through notifications, reminders, or browsing friends' profiles. With News Feed, users could see a constantly updating stream of posts and status changes.

This transformation shocked Facebook's then 10 million users, who disliked having their activities monitored and seeing their previously static profiles mined for updates. Facing widespread complaints, Zuckerberg wrote a post to reassure users: "Nothing you do will be broadcast widely, but will be shared with people who care about what you do—like your friends."

Hearing user complaints and actually addressing them were two entirely different matters. As Meta CPO Chris Cox later pointed out at a press conference, News Feed achieved immediate success in boosting platform activity and connecting users. Engagement quickly doubled, and within two weeks of launch, over 1 million users showed interest in the same thing for the first time. What united so many people? A petition demanding the removal of the "stalker-style" feed feature.

In hindsight, the opaque system that repelled users was remarkably simple—mostly displaying content in reverse chronological order with manual adjustments to ensure people saw both popular posts and contextual updates. "From the beginning, News Feed's ranking system had issues," Cox admitted.

For a while, this approach worked well. But as everyone's friend lists grew and Facebook introduced new features like ads, Pages, and Groups, entertainment content, memes, and commercial messages began competing with friends' posts in News Feed. Facebook needed to ensure that users logging in would see their best friend's engagement photos before encountering viral taco recipes on cooking pages.

The original content sorting system was called EdgeRank, which, as the name suggests, ranks edges. It was a simple formula that prioritized content based on three main factors: the time of posting, engagement, and the connection between the user and the post. As an algorithm, it was crude, merely attempting to roughly translate these questions: Is it new? Popular? Or from someone you care about?
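The three questions above can be turned into a toy scoring function. This is a minimal sketch, not Facebook's actual formula: the `Post` fields, the multiplicative form, and the 24-hour half-life are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Post:
    name: str
    affinity: float    # assumed 0..1 closeness between viewer and author
    engagement: float  # assumed popularity signal, e.g. likes plus comments
    age_hours: float   # hours since the post was published


def edge_score(post: Post, half_life_hours: float = 24.0) -> float:
    """Combine connection, popularity, and recency into one number."""
    recency = 0.5 ** (post.age_hours / half_life_hours)  # halves every half-life
    return post.affinity * post.engagement * recency


def rank_feed(posts: list[Post]) -> list[Post]:
    """Return posts ordered from highest to lowest score."""
    return sorted(posts, key=edge_score, reverse=True)


# Made-up posts: a popular page post, an old friend post, a fresh friend post.
feed = rank_feed([
    Post("viral taco recipe", affinity=0.1, engagement=50, age_hours=2),
    Post("friend's old status", affinity=0.9, engagement=10, age_hours=72),
    Post("best friend's engagement photos", affinity=0.9, engagement=10, age_hours=2),
])
print([p.name for p in feed])
```

With these made-up numbers the fresh post from a close friend outranks the far more popular recipe, which in turn outranks the friend's three-day-old status.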

There was no black magic at work here, but users once again reacted negatively to Facebook's attempts to control what they saw. Meanwhile, Facebook's engagement metrics soared across the board.

At the time, the platform's recommendation system was still in its infancy, but the stark contrast between users' vocal opposition and their frenzied usage led to an inevitable conclusion within the company: it was best to ignore the average user's opinion about Facebook's mechanisms. Despite users' demands to remove the feature, Facebook pressed on, and ultimately, everything worked out.

By 2010, Facebook sought to improve EdgeRank's rudimentary model by adopting a machine learning-based approach to content recommendation. Machine learning, a branch of artificial intelligence, focuses on training computers to design their own decision-making algorithms. Instead of having Facebook's computers rank content based on simple mathematics, engineers would have them analyze user behavior and devise their own ranking formulas. What users saw was the result of continuous experimentation—the platform delivered content it predicted was most likely to receive likes from users, evaluating its results in real-time.
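To make the contrast with a hand-written formula concrete, here is a small sketch in which the ranking weights are learned from past likes instead of being chosen by an engineer. Logistic regression is used only because it is simple; the source does not say which models Facebook used, and the two features and the `history` data are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_like_model(rows, epochs=200, lr=0.5):
    """Fit logistic regression by stochastic gradient descent.

    rows: list of (features, liked) pairs, where liked is 1 or 0.
    """
    weights, bias = [0.0] * len(rows[0][0]), 0.0
    for _ in range(epochs):
        for features, liked in rows:
            p = sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)
            error = liked - p  # positive if the model under-predicted a like
            weights = [w + lr * error * x for w, x in zip(weights, features)]
            bias += lr * error
    return weights, bias


def predict_like(model, features) -> float:
    """Predicted probability that the user likes a post with these features."""
    weights, bias = model
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


# Hypothetical features: [from_close_friend, is_meme]; label: did the user like it?
history = [([1, 0], 1), ([1, 1], 1), ([0, 1], 0), ([0, 0], 0)]
model = train_like_model(history)

candidates = {"page meme": [0, 1], "friend photo": [1, 0]}
ranked = sorted(candidates, key=lambda k: predict_like(model, candidates[k]), reverse=True)
print(ranked)
```

Nobody told the model that friends matter more than memes; it inferred that from which posts were liked, which is the shift the paragraph above describes.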

Despite the increasing complexity of Facebook's products and the unprecedented scale of its user data collection, the company still did not understand users well enough to show them relevant ads. Brands enjoyed the attention and popularity they gained from creating content on Facebook, but they found Facebook's paid products less appealing. In May 2012, General Motors canceled its entire advertising budget on Facebook. A prominent digital advertising executive declared Facebook 'essentially the worst-performing ad platform on the internet.'

The solution to this problem would fall to the team led by Joaquin Quiñonero Candela. Candela, a Spaniard raised in Morocco, moved to the UK in 2011 to work on artificial intelligence at Microsoft, just as friends scattered across North Africa began excitedly discussing the protests sparked by social media. The machine learning techniques he used to optimize Bing search ads applied just as readily to social networks.

Candela discovered that the way Facebook built products was almost as revolutionary as the products themselves. Invited by a friend, Candela visited Facebook's Menlo Park campus and was astonished to see an engineer making significant, unsupervised updates to Facebook's code. A week later, Candela received a job offer from Facebook, confirming that the company moved much faster than Microsoft.

Candela began helping to improve advertising technology, and his timing was impeccable. Advances in machine learning and raw computational speed allowed the platform not only to categorize users into specific demographic segments (such as "single heterosexual women in their twenties in San Francisco, interested in camping and salsa dancing") but also to identify correlations between the content they click on. This information was then used to predict which ads would be most relevant to them.

After starting with nearly random guesses on how to maximize click-through rates, the system learned from its successes and failures, continuously refining its models to predict which ads were most likely to succeed. It became almost omniscient, though the recommended ads often seemed puzzling. However, the bar for success in digital advertising is low: even a 2% click-through rate can be considered successful. With billions of ads served daily, even minor algorithmic tweaks could generate tens or hundreds of millions in revenue. Candela's research team found that this algorithm could rapidly test and learn from errors, improving in real-time.
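The loop described above, guessing, observing clicks, and shifting toward what works, can be sketched with an epsilon-greedy bandit. This is a stand-in technique chosen for brevity, not a description of Facebook's ad system; the class name, the 10% exploration rate, and the click-through rates in the simulation are all made up.

```python
import random


class EpsilonGreedyAdPicker:
    """Pick among ads, mostly showing the one with the best observed click rate."""

    def __init__(self, n_ads: int, epsilon: float = 0.1):
        self.epsilon = epsilon
        self.shows = [0] * n_ads
        self.clicks = [0] * n_ads

    def estimated_ctr(self, ad: int) -> float:
        return self.clicks[ad] / self.shows[ad] if self.shows[ad] else 0.0

    def choose(self, rng: random.Random) -> int:
        if rng.random() < self.epsilon:  # explore: try a random ad
            return rng.randrange(len(self.shows))
        return max(range(len(self.shows)), key=self.estimated_ctr)  # exploit

    def record(self, ad: int, clicked: bool) -> None:
        self.shows[ad] += 1
        self.clicks[ad] += int(clicked)


def simulate(true_ctrs, rounds=20000, seed=0):
    """Serve ads for a number of rounds against simulated users."""
    rng = random.Random(seed)
    picker = EpsilonGreedyAdPicker(len(true_ctrs))
    for _ in range(rounds):
        ad = picker.choose(rng)
        picker.record(ad, rng.random() < true_ctrs[ad])
    return picker


picker = simulate([0.01, 0.02, 0.08])  # made-up true click-through rates
best = max(range(3), key=picker.estimated_ctr)
print(best, picker.shows)
```

The picker starts with no knowledge of which ad performs best and has no notion of why an ad works; it only tracks which one gets clicked more often.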

This rapid pace of improvement was crucial. The team's AI not only boosted revenue but also enhanced public perception of the platform. Even better, targeted advertising meant Facebook could earn more from each user without increasing ad frequency—and without causing major issues. When Facebook marketed fake toothpaste to teenagers, at least no one died.

Advertising was the frontier of Facebook's machine learning, and soon everyone wanted a piece of the action. For product managers responsible for increasing Facebook group engagement, friend requests, and post interactions, the appeal was obvious. If Candela's technology could boost user interaction rates with ads, it could also enhance engagement with other platform content.

Every team handling content ranking or recommendations rushed to overhaul their systems, triggering an explosion in Facebook's product complexity. Employees discovered that the biggest gains often came not from carefully planned initiatives but from simple trial-and-error approaches.

Instead of redesigning algorithms, engineers achieved significant success through rapid machine learning experiments that essentially tested hundreds of variations of existing algorithms to identify which versions performed best for users. They didn't necessarily understand why certain variables mattered or how one algorithm outperformed others in predicting comment likelihood. But they could keep refining until the machine learning models produced algorithms that statistically surpassed existing ones, and that was enough.
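What "statistically surpassed" might look like in practice can be sketched with a two-proportion z-test that compares each variant's comment rate with the baseline's. The test, the 1.96 threshold, and the counts below are illustrative assumptions; the source does not say how Facebook's experiments were evaluated.

```python
import math


def z_score(base_hits, base_n, variant_hits, variant_n):
    """Two-proportion z statistic: how far the variant's rate sits above baseline."""
    base_rate = base_hits / base_n
    variant_rate = variant_hits / variant_n
    pooled = (base_hits + variant_hits) / (base_n + variant_n)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / base_n + 1 / variant_n))
    return (variant_rate - base_rate) / std_err


def winners(baseline, variants, threshold=1.96):
    """Return names of variants that beat the baseline beyond the threshold."""
    base_hits, base_n = baseline
    return [
        name
        for name, (hits, n) in variants.items()
        if z_score(base_hits, base_n, hits, n) > threshold
    ]


# Made-up counts: (posts that received a comment, posts shown)
baseline = (500, 10_000)
variants = {"variant_a": (510, 10_000), "variant_b": (600, 10_000)}
print(winners(baseline, variants))
```

Note that nothing in this procedure explains why a variant wins. With hundreds of variants, a few will also clear a 1.96 threshold by chance alone, so a stricter cutoff or a follow-up experiment would be a reasonable safeguard.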

It's hard to imagine a better method for building systems that embody Facebook's "Move Fast and Break Things" motto. The company wanted more. Zuckerberg courted French computer scientist Yann LeCun, a specialist in deep learning, the field focused on creating computer systems that process information in ways similar to human thinking. Already famous for developing foundational AI technologies that enable facial recognition, LeCun was appointed to lead a division positioning Facebook as a pioneer in fundamental AI research.

After achieving success in advertising, Candela was assigned an equally challenging task: to integrate machine learning into the company's core operations as quickly as possible. Initially, only 24 employees were responsible for building new core machine learning tools and making them available to other departments. Over the three years following Candela's hiring, the team expanded, but it was still far from sufficient to assist every product team in need of machine learning support. The skills required to build models from scratch were too specialized for engineers to easily acquire, and the supply of machine learning experts couldn't be increased simply by boosting budgets.

The solution was to create FB Learner, a "paint-by-numbers" version of machine learning. It packaged the technology into templates for engineers who did not understand what they were doing. FB Learner did for machine learning within Facebook what services like WordPress once did for website creation: it eliminated the need for users to understand HTML or configure servers.

However, these unevenly skilled engineers were tinkering with the core of what was rapidly becoming a global communication platform. Many at Facebook became aware of growing external concerns about AI. Poorly designed algorithms meant to reward good healthcare ended up penalizing hospitals that treated sicker patients. Models intended to quantify the risk of recidivism among parole candidates were found to be biased against Black individuals. Yet, on social networks, these issues still seemed distant.

A fervent FB Learner user once described the widespread adoption of machine learning within Facebook as "handing rocket launchers to 25-year-old engineers." At the time, both Candela and Facebook viewed this as a triumph. However, the company's AI algorithms developed habits of propagating lies and hate speech—problems their creators remain unable to rectify.

In 2016, Facebook proclaimed: "Engineers and teams, even those with minimal expertise, can effortlessly build and run experiments, deploying AI-driven products at unprecedented speeds." The company boasted that FB Learner processed trillions of user behavior data points daily, with engineers conducting 500,000 monthly experiments on this system.

The sheer volume of data Facebook collected, combined with its eerily precise ad targeting, led many users to (mistakenly) suspect the company of eavesdropping on offline conversations—fueling the pervasive myth that "Facebook knows everything about you."

This isn't entirely accurate. The marvels of machine learning obscure its limitations. Facebook's recommendation systems operate through crude correlations between user behaviors rather than genuinely understanding tastes and interests. The News Feed cannot discern whether you prefer figure skating or mountain biking, hip-hop or K-pop—nor can it explain in human terms why specific posts appear in your feed.

Although the drawbacks of this inexplicable approach are evident, machine learning-based recommendation systems embody Mark Zuckerberg's steadfast belief in data, code, and personalization. He believes that, freed from human limitations, errors, and biases, Facebook's algorithms can deliver unparalleled objectivity and, perhaps more importantly, exceptional efficiency.

Another machine learning initiative focuses on identifying the actual content within Facebook's recommended posts. These AI systems, known as classifiers, are trained to recognize patterns in vast datasets. Long before Facebook's inception, classifiers had already proven their value in combating spam, enabling email providers to move beyond simple keyword filters and block large volumes of emails containing terms like "Vi@gra."

By receiving and comparing vast numbers of emails, some labeled as spam and others as legitimate, machine learning systems can develop their own criteria for distinguishing between them. Once a classifier is "trained," it is deployed to analyze incoming emails and predict whether each should be sent to the inbox, sent to the spam folder, or rejected outright.
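The train-then-deploy cycle described above can be sketched with a tiny Naive Bayes text classifier. Naive Bayes is a classic choice for spam filtering but is an assumption here, since the source names no specific method; the four training emails and the routing thresholds are invented for illustration.

```python
import math
from collections import Counter


def train(examples):
    """examples: list of (text, label) pairs with label 'spam' or 'ham'."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    doc_counts = Counter()
    for text, label in examples:
        doc_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, doc_counts, vocab


def spam_probability(model, text):
    """Posterior probability of spam under Naive Bayes with add-one smoothing."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    log_score = {}
    for label in ("spam", "ham"):
        total_words = sum(word_counts[label].values())
        score = math.log(doc_counts[label] / total_docs)
        for word in text.lower().split():
            if word in vocab:  # words never seen in training are ignored
                count = word_counts[label][word]
                score += math.log((count + 1) / (total_words + len(vocab)))
        log_score[label] = score
    top = max(log_score.values())
    odds = {k: math.exp(v - top) for k, v in log_score.items()}
    return odds["spam"] / (odds["spam"] + odds["ham"])


def route(model, text, spam_at=0.5, reject_at=0.95):
    """Map the predicted probability to one of three destinations."""
    p = spam_probability(model, text)
    if p >= reject_at:
        return "rejected"
    return "spam folder" if p >= spam_at else "inbox"


model = train([
    ("cheap vi@gra buy now", "spam"),
    ("win money now", "spam"),
    ("lunch meeting at noon", "ham"),
    ("project notes attached", "ham"),
])
print(route(model, "buy cheap vi@gra"), "|", route(model, "meeting notes"))
```

The classifier is never given a rule such as "block vi@gra"; the word counts in the labeled examples are the only source of its criteria.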

By the time machine learning experts began joining Facebook, the list of questions classifiers sought to answer had expanded far beyond "Is this spam?", thanks in large part to individuals like Yann LeCun. Zuckerberg was confident in the technology's future and in its applications at Facebook. In 2016, he predicted that classifiers would surpass human perception, recognition, and comprehension within five to ten years, enabling the company to eliminate misconduct and make significant strides in connecting the world. This prediction, however, proved overly optimistic.

Even as technology improves, datasets grow, and processing speeds increase, one drawback of machine learning persists: the algorithms developed by companies refuse to explain themselves. Engineers can evaluate the success of a classifier through metrics like precision and recall by testing its judgments. However, since the system teaches itself how to recognize certain things using logic of its own devising, it's challenging to identify human-understandable reasons when it makes mistakes.
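For readers unfamiliar with the two metrics: precision is the share of flagged items that were truly bad, and recall is the share of truly bad items that were flagged. A minimal sketch, with made-up predictions and labels:

```python
def precision_recall(predicted, actual):
    """predicted/actual: equal-length sequences of booleans (True = flagged)."""
    pairs = list(zip(predicted, actual))
    true_pos = sum(p and a for p, a in pairs)       # flagged and truly bad
    false_pos = sum(p and not a for p, a in pairs)  # flagged but harmless
    false_neg = sum(a and not p for p, a in pairs)  # bad but missed
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    return precision, recall


# Made-up classifier output for eight images (True = flagged as violating).
predicted = [True, True, True, True, False, False, False, False]
actual = [True, True, True, False, True, True, False, False]
precision, recall = precision_recall(predicted, actual)
print(precision, recall)
```

Both numbers describe how often the classifier is right, not why it is wrong in any particular case, which is the gap the paragraph above points to.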

Sometimes, the errors seem absurd. Other times, they systematically reflect human errors. Arturo Bejar recalls that in Facebook's early efforts to deploy classifiers for detecting pornographic content, the system often attempted to filter out images involving beds.

Similar fundamental errors continue to occur, even as the company begins relying on more advanced AI technologies to make more important and complex decisions than simple "pornographic/non-pornographic" classifications. The company is fully committed to AI, both to determine what people should see and to address any issues that may arise.

There's no doubt that computer science is advancing rapidly, bringing tangible benefits. But the speed, breadth, and scale at which Facebook adopts machine learning come at the cost of understandability. Why does Facebook's "Pages You May Like" algorithm seem so focused on recommending certain topics? How could a computer-animated video clip about dental implants be viewed hundreds of millions of times? Why do some news publishers consistently go viral simply by rewriting reports from other media?

Faced with these issues, Facebook's communications team has noted that the company's systems respond to people's behaviors without accounting for their personal tastes. These are difficult arguments to refute. They also obscure a troubling truth: Facebook is growing in ways it doesn't fully understand.

Five years after announcing the use of machine learning for content recommendations and targeted advertising, Facebook's systems have come to rely so heavily on self-training artificial intelligence that Yann LeCun proudly declared: 'Without this technology, the company's products might have already vanished!'