Kai-Fu Lee's AI Company 01.AI Launches 'Yi' Model Claimed as World's Strongest, with Alibaba Cloud Leading Investment


On November 6, 2023, 01.AI, an AI company founded by Kai-Fu Lee, Chairman and CEO of Sinovation Ventures, released its first open-source bilingual large model 'Yi'. Meanwhile, 36Kr learned from informed sources that 01.AI has completed a new round of funding led by Alibaba Cloud. Currently, 01.AI's valuation exceeds $1 billion, entering the unicorn club.

Before the launch, 01.AI had quietly uploaded two Yi base models, with 6B and 34B parameters, to Hugging Face on November 2. By November 5, Yi-34B had climbed to the top of both the Hugging Face Open LLM Leaderboard (pretrained) and the C-Eval rankings, a Chinese-language benchmark for large models.

The context window acts as the model's 'memory.' According to the company, Yi currently supports a 200K-token context window, enough to process roughly 400,000 Chinese characters of text, which it describes as the longest context window of any large model worldwide to date.

Kai-Fu Lee said that because of GPU shortages, the team had to control scale carefully as it grew the model from 6B to larger sizes, reducing trial-and-error costs rather than blindly chasing 'size.' By refining its AI infrastructure, the team cut Yi-34B's training costs by 40%: 'If competitors need 2,000 GPUs, we only need 1,200.'
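The 40% figure follows directly from Lee's GPU comparison. The sketch below checks that arithmetic, under the simplifying assumption (ours, not 01.AI's) that training cost scales linearly with GPU count; `relative_savings` is a hypothetical helper written for illustration.

```python
def relative_savings(baseline: float, reduced: float) -> float:
    """Fraction saved when going from `baseline` to `reduced`.

    Assumption: cost is proportional to GPU count, so the GPU
    ratio stands in for the cost ratio.
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - reduced) / baseline


# Figures quoted by Lee: competitors need 2,000 GPUs, 01.AI needs 1,200.
savings = relative_savings(2000, 1200)
print(f"{savings:.0%}")  # prints "40%"
```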

Yi's training data comes primarily from publicly crawled corpora and databases. Lee explained that the challenge is that such data is highly repetitive and often low in quality. Through meticulous filtering, the team distilled 3T of data from more than 100T. Because Chinese corpora are of lower quality, English data currently makes up a larger share of Yi's training set than Chinese.

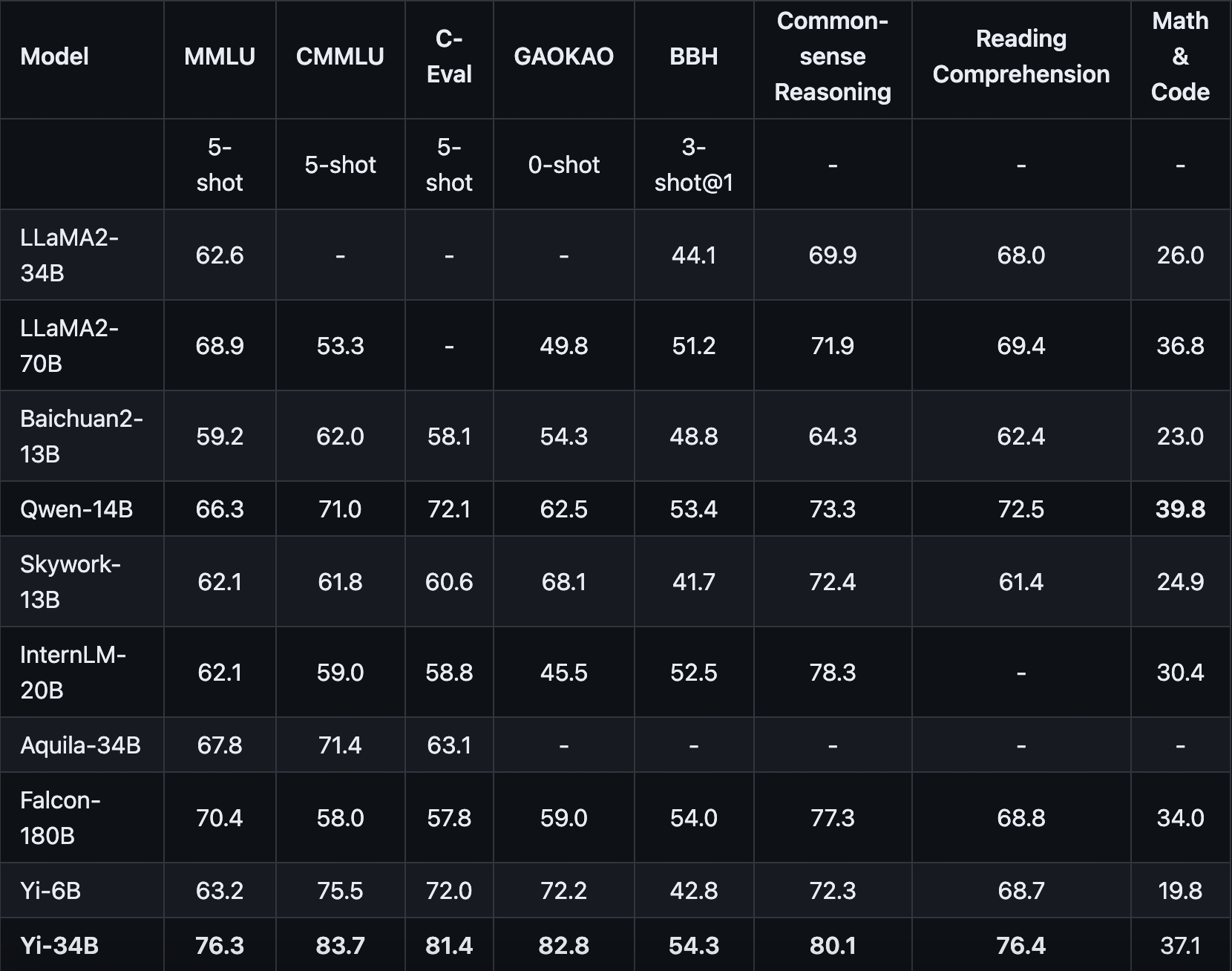

So, how capable is Yi? For its evaluation, 01.AI drew on several of the datasets Meta used to assess its open-source Llama 2 model, including PIQA, SIQA, HellaSwag, and WinoGrande, to measure Yi across dimensions such as 'commonsense reasoning,' 'reading comprehension,' and 'math and coding.'

Figure: Yi capability evaluation.

The results show that Yi-6B performs at a middling level in commonsense reasoning and reading comprehension compared with domestic and international open-source models, but is weaker in math and coding. Yi-34B, by contrast, clearly outperforms other open-source models in commonsense reasoning and reading comprehension, and also leads in math and coding.

Whereas 7B and 13B are the common parameter scales on the market, 01.AI offers 6B and 34B models. Kai-Fu Lee believes that 34B is a 'golden size' for open-source large models, and a rare one in the industry: it clears the threshold for 'emergent' abilities and meets accuracy requirements, while still allowing efficient inference on a single card and affordable training for manufacturers.
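Whether a model fits on a single card depends largely on how much memory its weights need. The article does not state a precision or GPU model, so the sketch below simply estimates weight storage at common precisions; it assumes a dense model and ignores the KV cache, activations, and framework overhead, all of which add to the real footprint. The helper `weight_memory_gb` and the precision table are illustrative, not taken from 01.AI.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}

# Parameter counts of the two Yi base models named in the article.
MODELS = {"Yi-6B": 6e9, "Yi-34B": 34e9}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Weight storage in decimal gigabytes (1 GB = 1e9 bytes).

    Weights only: KV cache, activations and runtime overhead are ignored.
    """
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for model, params in MODELS.items():
        for precision, nbytes in BYTES_PER_PARAM.items():
            gb = weight_memory_gb(params, nbytes)
            print(f"{model:7s} {precision:10s} {gb:6.1f} GB")
```

By this rough count, Yi-34B's weights take about 68 GB at 16-bit precision, which fits within a single 80 GB data-center GPU, and about 17 GB at 4-bit; real deployments need additional headroom for the context cache, especially with long contexts.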

Kai-Fu Lee admitted that before securing funding, 01.AI had already taken on tens of millions of dollars in debt to cover computing and training costs, a sign of his determination to go all-in on AI.

Besides founding 01.AI, Kai-Fu Lee is one of China's leading figures in artificial intelligence. He previously served as a Global Vice President at Microsoft and Google, as well as President of Google Greater China. In 2009, he founded the angel investment and incubation platform Sinovation Ventures, then known as Innovation Works.

In March 2023, Kai-Fu Lee entered the large model arena by establishing a new company called 01.AI and issued a 'hero recruitment notice': '01.AI welcomes outstanding talents with AI 2.0 technical capabilities and AGI beliefs to join us in building a new AI 2.0 platform and accelerating the arrival of AGI.' By July, 01.AI had already onboarded dozens of core members from companies such as Alibaba, Baidu, Google, and Microsoft. At the launch event, Kai-Fu Lee mentioned, '(The team) wrote their first line of code in June and July.'

Today, 01.AI has gathered a group of top experts in the field of artificial intelligence from both domestic and international backgrounds:

Figure: 01.AI's pre-training lead Huang Wenhao and AI Infra VP Dai Zonghong.

For example, Dai Zonghong, Vice President of AI Infra at 01.AI, was previously a senior algorithm expert at Alibaba DAMO Academy's Machine Intelligence Technology Lab and CTO of Huawei Cloud's AI division. At Alibaba, he built the Alibaba search engine platform and later led the team that developed the image search application Pailitao.

Another example is Huang Wenhao, 01.AI's pre-training lead, who comes from the Beijing Academy of Artificial Intelligence (BAAI), where he served as the technical lead of the Health Computing Research Center. Before joining BAAI, he was a researcher at Microsoft Research Asia, focusing on natural language understanding, entity extraction, dialogue understanding, and human-computer collaboration. After joining 01.AI, Huang Wenhao's team is primarily responsible for the training of Yi.

Kai-Fu Lee believes that in the AI 2.0 era, the biggest business opportunities will lie in consumer-facing (To C) super apps. He pointed out that internet-era super apps such as WeChat and Douyin were not super apps at launch; they succeeded by accurately capturing user needs. 01.AI's goal is to build the WeChat or Douyin of the AI 2.0 era.

On 01.AI's business strategy, Lee told 36Kr that AI 1.0 companies that failed to commercialize were quickly weeded out, while those that did commercialize struggled most with achieving sustainable, scalable growth: many of them grew by adding headcount rather than by generating high-quality revenue.

He emphasized that revenue should scale through technology, not manpower. "Guided by this principle, 01.AI will focus on developing consumer applications." Because Chinese users' willingness to pay is still at an early stage, 01.AI will pursue both the domestic market and global expansion for its applications.

Currently, 01.AI has initiated training for models with over 100 billion parameters, and its multimodal large model team has already assembled more than ten members. "Within a few weeks, we will have new releases to share," Lee revealed. The "Yi" series is positioned as a general foundation, with quantized versions, dialogue models, mathematical models, code models, and multimodal models to be rolled out rapidly.