Baichuan Intelligence Launches Baichuan2-192K, the World's Longest Context Window AI Model Capable of Processing 350,000 Characters at Once

Baichuan Intelligence has unveiled the Baichuan2-192K large language model, which boasts an unprecedented 192K context window—currently the longest in the world.

The model can process approximately 350,000 Chinese characters at once, about 4.4 times as many as Claude2 (100K context window, about 80,000 characters) and 14 times as many as GPT-4 (32K context window, roughly 25,000 characters). Beyond context length, Baichuan2-192K also outperforms Claude2 in long-context text generation quality, comprehension, Q&A, and summarization.
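The multiples above follow directly from the character counts quoted in the article. A minimal sketch of the arithmetic, using only those approximate figures (the dictionary and function names are illustrative):

```python
# Approximate Chinese-character capacities as quoted in the article.
CHAR_CAPACITY = {
    "Baichuan2-192K": 350_000,
    "Claude2": 80_000,
    "GPT-4": 25_000,
}


def capacity_ratio(model: str, baseline: str) -> float:
    """Return how many times more characters `model` handles than `baseline`."""
    return CHAR_CAPACITY[model] / CHAR_CAPACITY[baseline]


if __name__ == "__main__":
    for baseline in ("Claude2", "GPT-4"):
        ratio = capacity_ratio("Baichuan2-192K", baseline)
        print(f"Baichuan2-192K vs {baseline}: {ratio:.1f}x")
```

The ratios are 4.375 (rounded to 4.4 in the article) and 14.0.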

On September 25 this year, Baichuan Intelligence opened access to the Baichuan2 API, marking its entry into the enterprise market and the start of its commercialization. Baichuan2-192K will be available to enterprise users through API calls and private deployment. The company has already begun an API beta test with core partners in the legal, media, and financial sectors.

SOTA Performance in 7 out of 10 Long-Text Benchmarks, Surpassing Claude2

Context window length is a core capability of large models. A larger window lets the model take in more contextual information, capture relevance more reliably, resolve ambiguity, and generate more accurate and fluent content, which improves its overall capabilities.

Baichuan2-192K excels in 10 Chinese and English long-text Q&A and summarization benchmarks (including Dureader, NarrativeQA, LSHT, and TriviaQA), achieving state-of-the-art (SOTA) results in 7 categories—significantly outperforming other long-window models.

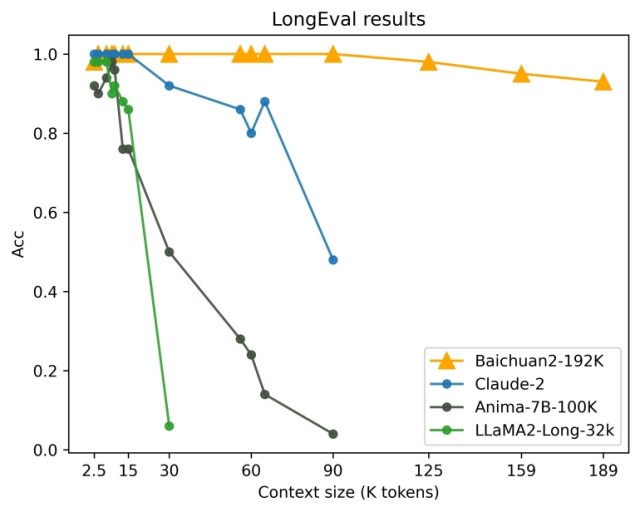

LongEval evaluations further demonstrate that Baichuan2-192K maintains robust performance even beyond 100K context length, while other open-source or commercial models show near-linear performance degradation. Claude2, for instance, suffers severe declines beyond 80K. This highlights Baichuan2-192K's unparalleled memory and comprehension capabilities for long-context content.

(LongEval, a benchmark developed by UC Berkeley and other institutions, evaluates long-context memory and comprehension—widely regarded as the industry standard for long-window model assessment.)
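To make the idea of a long-context memory test concrete, here is a minimal sketch of a retrieval-style probe: many numbered lines, each holding a random value, followed by a question about one of them. The prompt format, function names, and scoring rule are hypothetical illustrations, not the benchmark's actual specification.

```python
import random


def build_line_retrieval_case(num_lines: int, seed: int = 0) -> tuple[str, int]:
    """Build a long prompt of numbered records plus one retrieval question.

    Hypothetical format: increasing `num_lines` lengthens the context the
    model must remember in order to answer correctly.
    """
    rng = random.Random(seed)
    values = [rng.randint(10_000, 99_999) for _ in range(num_lines)]
    lines = [f"line {i}: REGISTER_CONTENT is <{v}>" for i, v in enumerate(values)]
    target = rng.randrange(num_lines)
    question = f"What is the REGISTER_CONTENT in line {target}?"
    return "\n".join(lines) + "\n" + question, values[target]


def is_correct(model_answer: str, expected: int) -> bool:
    """Score an answer as correct if it contains the expected value."""
    return str(expected) in model_answer


if __name__ == "__main__":
    prompt, expected = build_line_retrieval_case(num_lines=1000, seed=42)
    print(len(prompt), "characters in prompt")
    # A real evaluation would send `prompt` to the model under test here.
    print(is_correct(f"The value is {expected}.", expected))
```

Plotting accuracy against `num_lines` gives the kind of length-versus-performance curve the comparison above describes.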

Dynamic Sampling Position Encoding and 4D Parallel Distributed Architecture: Simultaneously Boosting Window Length and Performance

While expanding context windows is recognized as crucial for enhancing AI model performance, ultra-long windows demand greater computational power and GPU memory. Current industry approaches—such as sliding windows, downsampling, or smaller models—often compromise other model capabilities to achieve longer contexts. Baichuan2-192K overcomes these limitations through optimized dynamic sampling position encoding and a 4D parallel distributed framework, advancing both window length and model performance without trade-offs.

Through aggressive optimization of both algorithms and engineering, Baichuan2-192K balances context window length against model performance and improves both at once.

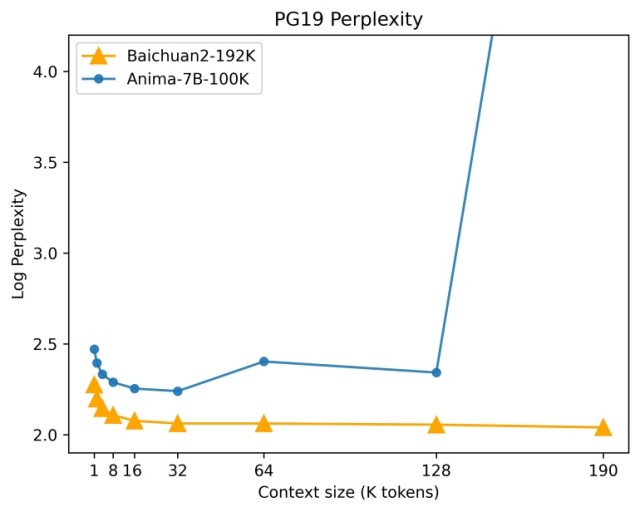

On the algorithmic front, Baichuan Intelligence proposed an extrapolation scheme for dynamic position encoding with RoPE and ALiBi. The approach dynamically interpolates the attention mask for ALiBi position encodings of different lengths, preserving resolution while strengthening the model's ability to capture long-sequence dependencies. On the PG-19 benchmark (DeepMind's language modeling dataset, widely recognized as the industry standard for evaluating long-range memory and reasoning), Baichuan2-192K's sequence modeling improves steadily as the context window expands.
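The article does not publish the details of this scheme. As a rough illustration only, the sketch below builds a standard ALiBi attention bias and, as an assumption, rescales distances by `train_len / seq_len` whenever the sequence is longer than the training length, so effective distances stay within the trained range. The function names and the scaling rule are hypothetical and are not Baichuan's implementation.

```python
def alibi_slopes(num_heads: int) -> list[float]:
    """Standard ALiBi per-head slopes for a power-of-two head count."""
    return [2.0 ** (-8.0 * h / num_heads) for h in range(1, num_heads + 1)]


def alibi_bias(seq_len: int, slope: float, train_len: int) -> list[list[float]]:
    """Causal ALiBi bias matrix for one head, with length-dependent scaling.

    Assumption: when seq_len exceeds train_len, distances are interpolated
    (shrunk) by train_len / seq_len; shorter sequences are left unchanged.
    """
    scale = min(1.0, train_len / seq_len)
    return [
        [
            -slope * scale * (i - j) if j <= i else float("-inf")  # mask future tokens
            for j in range(seq_len)
        ]
        for i in range(seq_len)
    ]


if __name__ == "__main__":
    slope = alibi_slopes(8)[0]  # 0.5 for the first of 8 heads
    short = alibi_bias(seq_len=4, slope=slope, train_len=4)
    long_ = alibi_bias(seq_len=8, slope=slope, train_len=4)
    print(short[3][0], long_[7][0])  # penalty at the largest distance in each case
```

Because the scale is computed from the actual sequence length, the same model can be run at different lengths without retraining the bias, which is the general idea behind "dynamic" interpolation.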

On the engineering side, building on its self-developed distributed training framework, Baichuan Intelligence integrated the advanced optimization techniques currently available, including tensor parallelism, pipeline parallelism, sequence parallelism, recomputation, and offloading. The result is a comprehensive 4D parallel distributed solution that automatically selects the most suitable distributed strategy for a given model workload, significantly reducing GPU memory usage during long-context training and inference.

Baichuan Intelligence's innovations in long-context window technology represent not only a breakthrough in large model capabilities but also hold significant academic value. Baichuan2-192K has validated the feasibility of extended context windows, opening new research pathways for enhancing large model performance.

Baichuan2-192K Launches Beta Testing, Already Applied in Legal, Media and Other Real-world Scenarios

Baichuan2-192K has officially entered beta testing and is available via API to Baichuan Intelligence's core partners. The company has already established collaborations with financial media outlets and law firms, applying the model's long-context capabilities in media, finance, legal, and other practical scenarios. Full public availability is planned soon.

Once the API is fully open, Baichuan2-192K will integrate more deeply with vertical scenarios, truly impacting people's work, life, and learning while helping industry users improve efficiency and reduce costs. The model can process and analyze hundreds of pages of material at once, providing significant assistance in extracting and analyzing key information from long documents, document summarization, long-document review, lengthy article/report writing, complex programming assistance, and other real-world applications.

It can help fund managers summarize and interpret financial statements while analyzing company risks and opportunities; assist lawyers in identifying risks across multiple legal documents and reviewing contracts; enable technical personnel to read hundreds of pages of development documentation and answer technical questions; and help researchers quickly review numerous academic papers to summarize the latest advancements.

Moreover, the extended context window provides foundational support for better processing and understanding complex multimodal inputs and enables more effective transfer learning, establishing a solid technical foundation for exploring cutting-edge areas like Agent systems and multimodal applications.