Alibaba Open-Sources Vivid-VR: An AI-Powered Video Restoration Tool Unlocking New Possibilities for Content Creation


With the rapid advance of generative AI, video restoration has made a significant leap. Vivid-VR, a generative video restoration tool newly open-sourced by Alibaba Cloud, has quickly drawn the attention of content creators and developers thanks to its strong inter-frame consistency and restoration quality.

Vivid-VR: A New Benchmark in AI-Driven Video Restoration

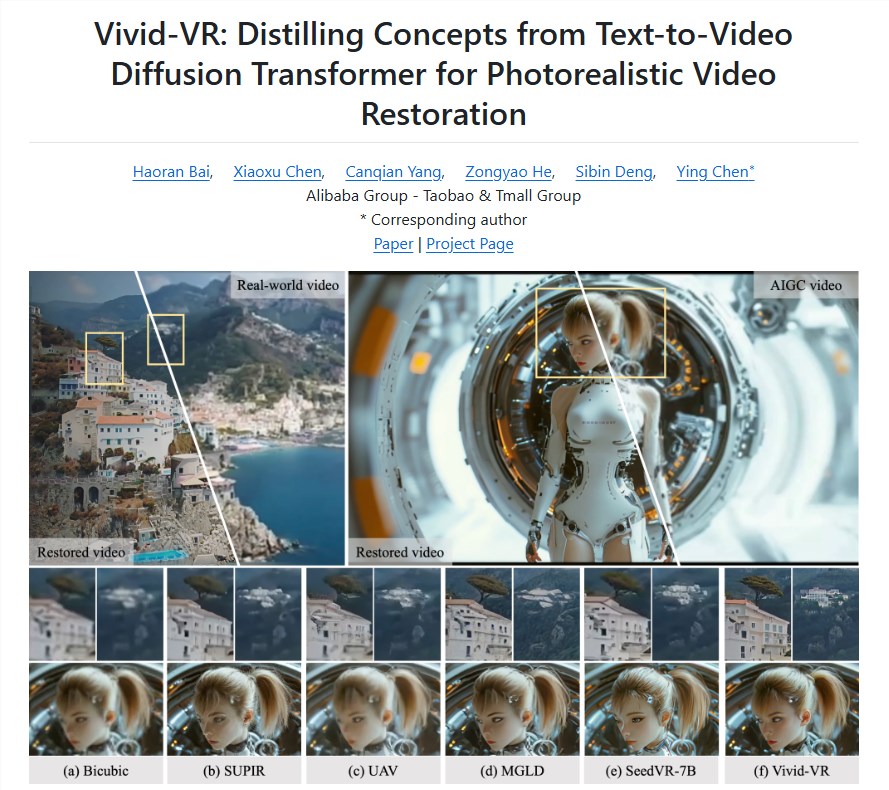

Vivid-VR is an open-source generative video restoration tool released by Alibaba Cloud. It builds on a text-to-video (T2V) foundation model and adds ControlNet to keep content consistent during generation. The tool targets quality problems in both real footage and AI-generated content (AIGC), aiming to eliminate common defects such as flickering and jitter, and gives content creators an efficient way to repair their material. Vivid-VR performs strongly both when restoring low-quality videos and when refining generated ones.
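
To make "flickering" concrete: one crude way to quantify it is the average absolute pixel difference between consecutive frames. The Python sketch below is a hypothetical illustration, not Vivid-VR's evaluation method; the function names `mean_abs_diff` and `flicker_score` and the list-of-lists grayscale frame layout are assumptions made for the example.

```python
from typing import Sequence

# Hypothetical frame layout: one grayscale frame as rows of pixel intensities.
Frame = Sequence[Sequence[float]]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Mean absolute per-pixel difference between two equally sized frames."""
    total = 0.0
    count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    return total / count if count else 0.0


def flicker_score(frames: Sequence[Frame]) -> float:
    """Average consecutive-frame difference; 0.0 for a perfectly static clip.

    Caveat: genuine motion also raises this score, so it is only a rough
    proxy for flicker on (near-)static scenes.
    """
    if len(frames) < 2:
        return 0.0
    diffs = [mean_abs_diff(frames[i], frames[i + 1]) for i in range(len(frames) - 1)]
    return sum(diffs) / len(diffs)


# A static 2x2 clip versus one whose brightness jumps back and forth.
static = [[[0.5, 0.5], [0.5, 0.5]]] * 4
flickering = [[[0.5, 0.5], [0.5, 0.5]], [[0.7, 0.7], [0.7, 0.7]]] * 2
print(flicker_score(static))      # 0.0
print(flicker_score(flickering))  # approximately 0.2
```

Because real motion also raises this score, practical temporal-consistency metrics usually compensate for motion (for example by warping frames with optical flow) before comparing them; the plain version above is only meaningful for near-static scenes.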

Technical Core: The Perfect Fusion of T2V and ControlNet

The core of Vivid-VR is an architecture that pairs the T2V foundation model with ControlNet. The T2V model supplies the generative capability to produce high-quality video content, while ControlNet conditions that generation on the input video, keeping the restored frames temporally consistent and avoiding common issues such as flickering and jitter. The tool reportedly also adjusts semantic features dynamically during generation, which markedly improves texture realism and visual vibrancy. Together, these components improve restoration efficiency while keeping the video content visually stable.
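
The general ControlNet idea can be illustrated with a toy example. In the original ControlNet design, a trainable branch processes the conditioning signal and feeds its output into the frozen base model through "zero convolutions", layers initialized to zero, so training starts from the unmodified base model. The Python sketch below shows only that zero-initialized residual injection, using plain lists; it omits the trainable encoder copy, is not Vivid-VR's code, and the class name, dimensions, and feature values are hypothetical.

```python
from typing import List

Vector = List[float]


def linear(weights: List[Vector], bias: Vector, x: Vector) -> Vector:
    """Dense layer y = W x + b (stand-in for a 1x1 'zero convolution')."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


class ZeroInitControlAdapter:
    """Toy ControlNet-style injection: base_features + zero_proj(control_features).

    The projection starts at all zeros, so before training the adapter leaves
    the frozen base model's features untouched; training then learns how much
    of the control signal (e.g. features of the input video) to inject.
    """

    def __init__(self, dim: int) -> None:
        self.weights = [[0.0] * dim for _ in range(dim)]
        self.bias = [0.0] * dim

    def __call__(self, base: Vector, control: Vector) -> Vector:
        residual = linear(self.weights, self.bias, control)
        return [b + r for b, r in zip(base, residual)]


base_features = [1.0, 2.0, 3.0]      # hypothetical output of a frozen T2V block
control_features = [0.5, -0.5, 1.0]  # hypothetical features from the input video

adapter = ZeroInitControlAdapter(dim=3)
print(adapter(base_features, control_features))  # [1.0, 2.0, 3.0]: unchanged at init

# Pretend training has set the projection to the identity matrix.
adapter.weights = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
print(adapter(base_features, control_features))  # [1.5, 1.5, 4.0]
```

Starting from zero means the control path cannot disrupt the pretrained generator early in training; the learned projection then decides how strongly the conditioning signal steers each block. In a restoration setting, this is one way the input video can anchor the generated frames.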

Broad Applicability: Covering Both Real and AIGC Videos

Another highlight of Vivid-VR is its broad applicability: it supports restoration of both conventionally filmed footage and AI-generated content. Low-quality material is a frequent pain point for content creators, and Vivid-VR can quickly repair blurry, noisy, or temporally incoherent clips, making it a practical tool for short-form video and post-production work. The tool also supports multiple input formats and lets developers adjust restoration parameters flexibly, further streamlining creative work.

Open-Source Ecosystem: Empowering Global Developers and Creators

Vivid-VR is Alibaba Cloud's latest contribution to generative AI and is fully open-source, with its code and models freely available on Hugging Face, GitHub, and Alibaba Cloud's ModelScope platform. The release reinforces Alibaba Cloud's standing in the open-source community: its earlier Wan2.1 series models drew over 2.2 million downloads and ranked first on the VBench leaderboard for video generation models. By open-sourcing Vivid-VR, Alibaba Cloud further lowers the technical barrier for content creators and developers, enabling more of them to build customized video restoration applications on top of the tool.

Industry Impact: Driving the Intelligent Upgrade of Content Creation

In 2025, video has become the dominant form of digital communication, yet quality issues such as blurriness, jitter, and low resolution remain persistent challenges for creators. Vivid-VR offers an efficient, low-cost way to address them, showing strong potential both for restoring old video archives and for refining details in AI-generated videos. AIbase believes that as generative AI becomes more widely adopted, Vivid-VR will not only help content creators raise the quality of their work but also drive innovation in video restoration, opening new growth opportunities for the industry.

Vivid-VR Ushers in a New Chapter in Video Restoration

The open-source release of Vivid-VR marks another breakthrough for Alibaba Cloud in generative AI. Its strong inter-frame consistency, combined with the flexibility of an open-source release, gives content creators and developers a new option for video restoration. AIbase believes Vivid-VR can both address practical pain points in video creation and, through its open-source ecosystem, inspire new applications that support the intelligent transformation of the global content creation industry.

Project address: https://github.com/csbhr/Vivid-VR