Raking in 2.5 Billion, Zhipu AI Has Grown Accustomed to the Spotlight


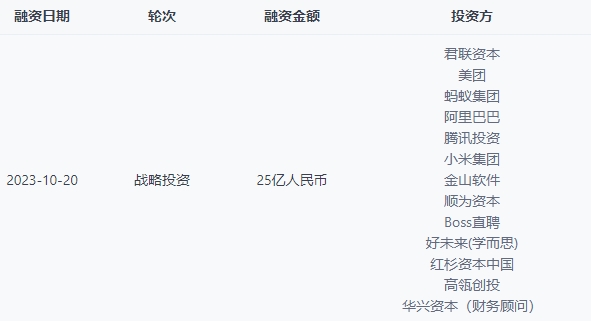

Over 2.5 billion RMB—this is the cumulative funding amount Beijing Zhipu Huazhang Technology Co., Ltd. (hereinafter referred to as Zhipu AI) has secured this year, making it one of the highest-funded large model startups in China with publicly disclosed financing.

Following the release of ChatGPT, the technological wave sparked by large models quickly turned into an investment frenzy. Unlike earlier waves, however, this field is widely acknowledged to have an even stronger Matthew effect, one that applies to investors and startups alike.

On one hand, large model startups at this stage heavily rely on substantial resources and strong financial backing, resulting in fewer capable players entering the arena and fewer investment opportunities for backers. On the other hand, most believe that large models represent an opportunity comparable to or even surpassing the internet, driving up company valuations to the point where even renowned investors and institutions have to 'group buy.'

According to 36Kr, Zhipu AI's officially disclosed funding figures are still conservative estimates, with additional financing currently under negotiation. From 2019 to 2023, Zhipu AI raised over 3 billion RMB across three funding rounds in four years. This year, both the frequency and amount of funding have far exceeded previous levels.

Zhipu AI was officially established in 2019, with Pre-A round investors including CAS Star and Tsinghua Holdings. Series A investors included Fortune Capital and HuaKong Fund, while Series B backers included Legend Capital and Qiming Venture Partners.

According to Zhipu AI's latest disclosure, its investors include the Zhongguancun Independent Innovation Fund under the National Social Security Fund (managed by Legend Capital), Meituan, Ant Group, Alibaba, Tencent, Xiaomi, Kingsoft, Shunwei Capital, Boss Zhipin, TAL Education Group, Sequoia Capital, Hillhouse Capital, and other institutions, as well as follow-on investments from existing shareholders like Legend Capital.

As a star large model startup, Zhipu AI boasts an enviable investor lineup and is not short of funding sources; its backers are all prominent names. Many investors have noted, 'Right now, it’s definitely Zhipu choosing its investors, not the other way around.'

Currently, multiple large model startups in the industry have secured substantial funding, and the market is not lacking in capital for large models. However, whether and how to secure funding are critical considerations for these startups. Analyzing Zhipu AI's investor list reveals that funding is not the sole criterion—the value represented by the capital can provide large model startups with diverse resources.

Take, for example, the Zhongguancun Independent Innovation Fund under the National Social Security Fund. At the major achievements conference of the 2023 Zhongguancun Forum in late May, the National Social Security Fund announced the establishment of the 'Zhongguancun Independent Innovation Special Fund,' which carries 'national team' status. With an initial scale of 5 billion RMB and a fund duration exceeding 10 years, it has the characteristics of long-term capital.

Similarly, internet giants like Meituan, Ant Group, Alibaba, and Tencent bring not only substantial funding but also the prospect of industry collaboration. Many internet businesses could be transformed by large models, and through these backers Zhipu AI's foundational large models can gain access to vast data and business scenarios, something many startups lack.

In the current narrative landscape of large models, Zhipu AI must learn to adapt to the spotlight, which contrasts with its traditionally low-key style.

The breakout success of ChatGPT marked a singularity moment for AIGC, serving as a clear dividing line between two phases. Some large companies and startups had already begun preliminary technical research or set up new firms focused on large models when the early signs emerged, while a significant proportion committed to large models only after witnessing ChatGPT's impact.

This has led to differences in perception and, consequently, varying outcomes. While 2023 is widely regarded as the first year of large models, in the eyes of Zhipu AI CEO Zhang Peng, the 'first year of AI large language models' should be 2020—the second year after Zhipu AI's founding.

The birth of Transformer in 2017 gave rise to a series of large-scale Transformer models like GPT-1 and GPT-2, which, with their massive parameters, demonstrated strong generalization capabilities and solved previously intractable problems. By 2020, the advent of GPT-3 ushered generative AI into a new era.

2020 was also the year Zhipu AI decided to fully commit to large model R&D. At the time, due to high training costs and complex development barriers, large models were not favored by the industry, and Zhipu's growth was not as high-profile as it is now. However, after ChatGPT's release, Zhang Peng admitted feeling 'both excited and somewhat pressured'—excited by the clearer direction but pressured by the urgency of catching up with new technologies.

Meanwhile, more and more investors sought out Zhipu AI. The battle over large models had already begun, and investors wanted to find those who had spotted the spark early.

After investors did their research, factors such as a stable founding team, solid technical groundwork, and early commercialization efforts propelled Zhipu AI from obscurity to prominence.

On the founding team front, Zhipu AI was incubated by Tsinghua's KEG (Knowledge Engineering Group). This team transitioned from the lab to the market, leading to Zhipu AI's official establishment. CEO Zhang Peng holds a bachelor's degree from Tsinghua University's Department of Computer Science and a Ph.D. focused on knowledge graphs from the same department.

Chairman Liu Debing studied under Academician Gao Wen and served as Deputy Director of the Science Big Data Research Center at Tsinghua's Institute for Data Science. President Wang Shaolan is a Tsinghua Innovation Leadership Ph.D., further underscoring the Tsinghua connection.

In Silicon Valley and global academic circles, Zhipu AI has long been well known. Investors see Zhipu AI as having had 'people, technology, and clients' even in its Tsinghua days, when it already resembled a small but elite startup. Its key team members have worked together for years, and that stability fits the investment logic of backing both people and sectors, making Zhipu AI a natural favorite.

Technology is also Zhipu AI's forte. Its research lineage runs from the 'scientific intelligence mining' platform AMiner in 2006, to the company's founding in 2019, its focus on large model algorithms in 2020, the release of GLM-10B in 2021 and GLM-130B in 2022, and the conversational models ChatGLM-6B and the 100-billion-parameter-scale ChatGLM in March 2023.

It is reported that Zhipu AI will release a new generation of foundational large models on October 27. In this fiercely competitive October for the large model industry, whether Zhipu can secure a strong position depends on the performance of its new model.

Zhipu AI's research predates that of most large model participants in China, and it has effectively converted its first-mover advantage into a winning edge. In Stanford's evaluation of mainstream global large models, GLM-130B was the only Asian model selected. It matched GPT-3 on accuracy and toxicity and performed well among the evaluated models on robustness and calibration error.

As a general-purpose revolutionary technology, AI and large models determine not only competition between individuals and companies but also between nations. Zhipu's origins mean it is not just a company but also a scholarly team driven by technological ideals.

Unlike most companies and institutions, Zhipu AI is forging its own path.

36Kr learned that many domestic tech giants base their large model research on GPT, BERT, and T5. While this is understandable for commercial entities, from a national perspective, China must have its own pre-training framework—not following but carving out its own path. GLM (General Language Model) is Zhipu's answer.

Developing proprietary large models is no easy feat and carries high risks. 'GPT-3 reinforced our resolve to develop a dense, 100-billion-parameter-scale, bilingual model. After our research, we knew the investment required, especially in computing power, talent, team, and data, would be enormous,' Zhang Peng previously told 36Kr.

'China lacks its own pre-training model framework. Whether GPT, BERT, or T5, the underlying technologies were proposed by Western scientists, leaving the path monopolized by the West. We aim to break this monopoly, so we didn’t simply replicate OpenAI’s approach,' he said.

Of course, Zhipu AI's deliberate distance from Western technological systems also carries risk: if future development runs into new bottlenecks, it may face additional challenges. For now, Zhipu is one of the few large model enterprises that is fully domestically funded, develops its own technology, and has a clear plan for adapting to domestic chips. That makes it a better fit for Chinese companies and, to some extent, allows it to offset the risks of its divergent strategy.

In the latest bidding results for the "2023 Industrial Technology Basic Public Service Platform - Industrial Public Service Platform Project for Engineering Technology and Applications of AI Large Models" released by the Ministry of Industry and Information Technology (MIIT), a consortium involving Zhipu AI won the bid. According to reports, this project is the MIIT's first major large model initiative and the only major public service platform project for large models this year. It will provide support to the winning entities to promote research on key engineering technologies for large models in key industries and the implementation of intelligent generation applications.

Open source is another hallmark of Zhipu AI. While many companies have chosen closed-source routes, Zhipu aims to foster a thriving community and ecosystem. To further advance the large model open-source community, Zhipu AI released ChatGLM2, upgrading and open-sourcing its full series of dialogue models derived from its 100-billion-parameter base, in 6B, 12B, 32B, 66B, and 130B sizes, to meet diverse customer needs.

"We are quite open in academic and technical exchanges as well as product collaborations. Large models cannot be perfected by a single dominant player; an open ecosystem is essential. Whether it's open source or free offerings, these efforts are not driven by commercial interests," said Zhang Peng.

In terms of commercialization, Zhipu AI appears more measured. Taking the currently hyped industry-specific large models as an example, Zhipu AI has not rushed to develop them to cater to clients. Instead, it chooses to "lay eggs along the path" toward AGI, rather than treating industry-specific models as the ultimate goal.

"Industry models essentially reuse the shell of large models to reinvent the wheels of traditional algorithms," Zhang Peng pointed out, highlighting the limitations of industry-specific large models. "We believe only large-scale (general) models can achieve the emergence of human-like cognitive abilities."

AI is an endless marathon, and large models are one of its milestones. Everyone wants to carve their name on it. Capital is Zhipu AI's means to achieve its technological ideals. As Zhipu is compelled to step into the spotlight, its resilience, confidence, and patience will be put to the test.